- Bringing data storage and data flow closer togethe...

Created on 09-19-2017 05:21 PM - edited 08-17-2019 10:52 AM

Traditional data warehousing models espoused by Apache Hadoop and NoSQL stores rest on the belief that it is better to bring the processing to the data. Data flow, a hallmark of the HDF platform, is the mechanism by which we originally bring that data to processing platforms and/or data warehouses. Data can be processed minimally or fully with products such as Apache NiFi for seamless ingestion into any number of data warehousing platforms.

Apache MiNiFi is a natural extension of these paradigms: processing can be performed with the benefits that NiFi provides, within a much smaller memory footprint. This article, however, discusses using Apache MiNiFi as a data grooming and ingestion mechanism, coupled with Apache NiFi, to further leverage edge and IoT devices within an organization's infrastructure.

How we view this:

This article looks at two problem statements. The first is using Apache MiNiFi in a slightly different way: as a mechanism to front load some of the ingestion and processing framework. The second is augmenting Apache NiFi with Apache MiNiFi’s capabilities on last mile devices. In doing so we can mitigate problems sometimes seen on data warehousing and query platforms. We use Apache Accumulo as the example. Because Accumulo is based on the BigTable design, you can substitute Apache HBase for your purposes; we use Apache Accumulo because we already have a C++ client built for it. Coupled with Apache MiNiFi, this lets us create, in the MiNiFi agents, data that is typically built by MapReduce/YARN/Spark, avoiding issues that may plague the NoSQL store. How much processing is front loaded onto edge devices is up to the architecture, but for the purposes here we are using Apache MiNiFi and Apache NiFi to distribute processing amongst worker nodes where the data originates.

Problem statement:

Since Apache Accumulo can be installed on highly distributed systems, it stands to reason that problems that plague BigTable also plague installations with high amounts of ingest and query. One of these is compaction storms on highly loaded systems. A server that is receiving too much data, too quickly, may attempt to compact the data in its purview. One way to mitigate this is to combine data before it is ingested. While we can’t eliminate compactions under sustained ingest, we can reduce them dramatically when servers are receiving disproportionate data from many sources. Using MiNiFi we can leverage NiFi with a primary node (or set of primary nodes) to balance through a Remote Processing Group [1]. This enables many MiNiFi agents to create data, send it to NiFi, and then act on the created data that is sent to the Apache Accumulo instance.

These problems stem primarily from the need to minimize the number of files read per tablet or region server. As the number of files increases, performance suffers; however, the system may not be able to compact or reduce the number of files fast enough, hence the need to leverage edge devices to do some of the work if the capacity exists.
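To make the file-count argument concrete, here is a minimal sketch of what combining data amounts to: several small, individually sorted runs of key/value pairs are merged into one sorted run, so a reader has one file to consult instead of many. This is not Accumulo's compaction code and not the MiNiFi extension; plain Python tuples stand in for keys and values, and the function name `compact` is made up for illustration.

```python
import heapq


def compact(sorted_runs):
    """Merge several individually sorted runs of (key, value) pairs
    into a single sorted run (a k-way merge).

    Assumption: each input run is already sorted by key, as the
    contents of a sorted key/value file would be.
    """
    return list(heapq.merge(*sorted_runs, key=lambda kv: kv[0]))


# Three small "files" as they might arrive from three sources
# (illustrative data only).
runs = [
    [("row1", "a"), ("row4", "d")],
    [("row2", "b"), ("row5", "e")],
    [("row3", "c")],
]
merged = compact(runs)
```

Each run is read once and the merge holds only one candidate per run at a time, which is part of why this kind of work can plausibly be pushed to a small device.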

How it’s done

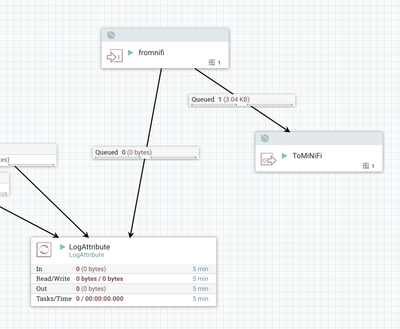

Using a C++ Apache Accumulo client [2], we’ve created a branch of Apache MiNiFi that has extensions [3] built for Apache Accumulo. These extensions simply take content from a flow and place the textualized form of the attributes and content into the Apache Accumulo value. The data is then stored in an Apache Accumulo specific data format known as an RFile [4]. The keys and values are stored in the RFile and then sent to the NiFi instance via the Site-To-Site protocol. Figure 1, below, shows the input and output ports we have configured. Using this information we are able to create a configuration YAML file for Apache MiNiFi that uses the Apache Accumulo writer [5]. This YAML file simply takes input from a GetFile processor and ships it to the NiFi instance. In the Pastebin example [5] you can see that data is sent to the fromnifi RPG and then immediately shipped back to ToMiNiFi, where we bulk import the RFiles.

Figure 1: Apache NiFi view of Input and Output ports.
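As a rough illustration of the grooming step described above, the sketch below turns flow files into key/value pairs and sorts them by key, since a sorted key/value file such as an RFile expects its entries in key order. The text layout of the value (`name=value` pairs joined by `;`, a newline, then the content) and the function names are assumptions made for this example; the actual extension in [3] may serialize attributes differently.

```python
def to_key_value(row, attributes, content):
    """Build one (key, value) pair from a flow file.

    Hypothetical layout: attributes are rendered as text and
    prepended to the raw content bytes to form the value.
    """
    attr_text = ";".join(
        f"{name}={attributes[name]}" for name in sorted(attributes)
    )
    value = attr_text.encode("utf-8") + b"\n" + content
    return row, value


def build_sorted_batch(flow_files):
    """Convert (row, attributes, content) triples into pairs sorted by key,
    ready to be appended to a sorted file in order."""
    pairs = [to_key_value(row, attrs, body) for row, attrs, body in flow_files]
    return sorted(pairs, key=lambda kv: kv[0])


batch = build_sorted_batch([
    ("sensor-b", {"filename": "x.txt", "path": "/tmp"}, b"hello"),
    ("sensor-a", {}, b"hi"),
])
```

Sorting on the device is the memory-bound part of this step, which is why the capacity of the edge hardware matters.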

Does this make sense?

Since we're taking a slightly different approach by moving processing to the edge, some might question whether this makes sense. The answer depends on the use case. We're trying to avoid specific problems we see related to data ingestion on NoSQL systems like Apache Accumulo/HBase. Not having the capacity to keep up with compactions isn't necessarily related to cluster size. There could be a tablet or region server that doesn't have the capacity and may have become a temporary hotspot, so leveraging your edge network to perform more of the ingest and warehousing process may make sense if that network exists and the capacity is sufficient. An edge device similar to a Raspberry Pi has sufficient capacity to read sensors, create RFiles, and compact data, especially when the data purview is specific to that device.

Of course, to avoid the Apache Accumulo problems described above, it would make more sense to combine and compact relevant RFiles. Doing this intelligently means knowing which tablet servers host what data and splicing the RFiles we are sent as needed. In the example in Figure 1, we can do this by simply bulk importing files that are directed at a single tablet and re-writing the RFile to the session to be handled by another MiNiFi agent. This requires significantly more coordination, hence the use of the C2 protocol defined in Apache MiNiFi [6]. Using the C2 protocol from the design proposal, we were able to coordinate which Apache MiNiFi agent should respond to which keys and which RPG queue hosts that data. Since this capability isn’t defined in the proposal we are not submitting this to Apache MiNiFi; however, the code should be available in [3] shortly. The purpose of this C2 coordination is to allow many Apache MiNiFi agents to work in a quorum without the need to access ZooKeeper. It makes sense for the agents to handle the data they are given and for other agents to compact the data externally, but it does not make sense for them to access ZooKeeper. In the case of the BulkImport processor we do access ZooKeeper; however, it makes more sense for Apache NiFi or some other mechanism to do the import.
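The idea of splicing data by tablet can be sketched as follows, assuming the agent already knows the table's split points (how it learns them, for example through C2, is outside this sketch). Each row is assigned to the tablet whose range contains it, so that every resulting group is directed at a single tablet. The function name and the sample split points are hypothetical, and the code uses plain Python lists rather than the Accumulo client.

```python
from bisect import bisect_left
from collections import defaultdict


def group_by_tablet(pairs, split_points):
    """Group (row, value) pairs by the tablet that would host each row.

    Assumptions: split_points is sorted, and tablet i covers rows in
    (split_points[i-1], split_points[i]], with the last tablet taking
    everything after the final split point.
    """
    groups = defaultdict(list)
    for row, value in pairs:
        tablet_index = bisect_left(split_points, row)
        groups[tablet_index].append((row, value))
    return dict(groups)


splits = ["g", "p"]  # illustrative split points: three tablets
pairs = [("a", 1), ("g", 2), ("h", 3), ("z", 4)]
by_tablet = group_by_tablet(pairs, splits)
```

Each group could then be written out as its own sorted file, so a bulk import touches one tablet per file instead of fanning out across many.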

Using a large set of Apache MiNiFi agents we can perform compactions in a much smaller footprint than a tablet server, external to it. With a minor increase in latency at ingest, we may be able to avoid a large query latency due to compactions falling behind. The fewer RFiles we can create, and the more we can combine, the better.

Apache MiNiFi at the front lines

Apache MiNiFi creating an object to be directly ingested implies we are doing data grooming and access. While not all devices may have the processing capacity for compression or the memory to support sorting data, Apache MiNiFi can do both using [2] and [3]. Apache MiNiFi works well in scalable environments leveraging Apache NiFi’s remote processing group capability. The design goal is to take small devices that may have processing and memory capabilities beyond simple sensor read devices and use their capabilities to the fullest. In some ways this serves to minimize the infrastructure in data centers, but it also helps us scale, since we’re using our entire infrastructure as a whole instead of limited portions. The edge doesn’t necessarily have to be a place from which we send data; it can also be a place where we process data. Distributing computing beyond our warehouse and internal clusters gives us the ability to scale well beyond current sizes.

Apache Accumulo simply serves as an example where we do more at the edge so that we do less in the warehouse. Doing so may avoid problems at the warehouse and speed up the entire infrastructure. Of course, all use cases are different, and this article is simply meant to provide an alternative focus on edge computing.

The next steps will be to better show how we can front load compactions into MiNiFi and use Apache NiFi and Apache MiNiFi to help replicate data to separate Apache Accumulo instances...

[1] https://community.hortonworks.com/questions/86731/load-balancing-in-nifi.html

[2] https://github.com/phrocker/sharkbite/tree/MINIFI

[3] https://github.com/phrocker/nifi-minifi-cpp/tree/MINIFI-SHARKBITE

[4] https://accumulo.apache.org/1.8/apidocs/org/apache/accumulo/core/client/rfile/RFile.html

[5] https://pastebin.com/nbeTqNpD

[6] https://cwiki.apache.org/confluence/display/MINIFI/C2+Design+Proposal