CML Runtime with Nvidia Libs and VSCode Editor

Created on 11-18-2023 01:26 PM - edited on 11-20-2023 09:16 PM by VidyaSargur

Objective

This article contains basic instructions for creating and deploying a Custom Runtime with VS Code and Nvidia libraries to your Cloudera Machine Learning (CML) Workspace. With this runtime, you can increase your productivity when developing your deep learning use cases in CML Sessions. This example is also valid for Cloudera Data Science Workbench (CDSW) clusters.

If you are using CML or CDSW for deep learning, GenAI, or LLM use cases, please scroll down to the bottom of this page to check out more examples.

Using Custom Runtimes

Cloudera ML Runtimes are a set of Docker images created to enable machine learning development and host data applications in the Cloudera Data Platform (CDP) and the Cloudera Machine Learning (CML) service.

ML Runtimes provide a flexible, fully customizable, lightweight development and production machine learning environment for both CPU and GPU processing frameworks while enabling unfettered access to data, on-demand resources, and the ability to install and use any libraries/algorithms without IT assistance.

PBJ Runtimes

Powered by Jupyter (PBJ) Runtimes are the second generation of ML Runtimes. While the original ML Runtimes relied on a custom proprietary integration with CML, PBJ Runtimes rely on Jupyter protocols for ecosystem compatibility and openness.

Open Source

For data scientists who need to fully understand the environment they are working in, Cloudera provides the Dockerfiles and all dependencies in this git repository, which enable the construction of the official Cloudera ML Runtime images.

The open-source PBJ Runtime Dockerfiles serve as a blueprint for creating custom ML Runtimes, so data scientists or partners can build ML Runtime images on their selected OS (base image), with the kernel of their choice, or simply integrate their existing ML container images with Cloudera Machine Learning.

In this example we reuse the Dockerfiles provided in this git repository to create a runtime with both VSCode and Nvidia libraries.

Requirements

In order to use this runtime you need:

- A CML Workspace or CDSW Cluster. AWS and Azure Public Cloud, as well as OCP and ECS Private Cloud, are all supported.

- Workspace Admin rights and access to the Runtime Catalog.

- Basic familiarity with Docker and a working installation of Docker on your local machine.

Steps to Reproduce

Clone the git repository

Clone this git repository to obtain all necessary files.

mkdir mydir

cd mydir

git clone https://github.com/pdefusco/Nvidia_VSCode_Runtime_CML.git

Explore Dockerfile

Open the Dockerfile and familiarize yourself with the code. Note the following:

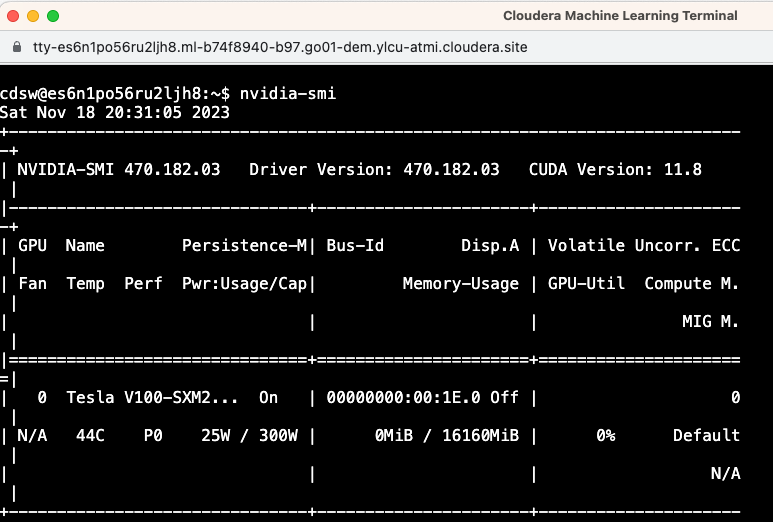

- To create a new image we are extending an existing CML image. This image has the CUDA libraries and is available here.

FROM docker.repository.cloudera.com/cloudera/cdsw/ml-runtime-pbj-workbench-python3.10-cuda:2023.08.2-b8

- We then install the VS Code Editor on top as shown in the PBJ VS Code runtime available here. Creating a symlink is necessary in order to ensure the right editor is launched at runtime.

# Install latest version. See https://github.com/coder/code-server/blob/main/install.sh for details
RUN curl -fsSL https://code-server.dev/install.sh | sh -s -- --version 4.16.1

# Create launch script and symlink to the default editor launcher
RUN printf "#!/bin/bash\n/usr/bin/code-server --auth=none --bind-addr=127.0.0.1:8090 --disable-telemetry" > /usr/local/bin/vscode
RUN chmod +x /usr/local/bin/vscode
RUN ln -s /usr/local/bin/vscode /usr/local/bin/ml-runtime-editor

- The remaining lines are just environment variables that must be overridden in order to distinguish this build from others. In other words, we need to add some unique metadata for this image. Specifically you must update the values for ML_RUNTIME_SHORT_VERSION and ML_RUNTIME_MAINTENANCE_VERSION and make sure these are congruent with ML_RUNTIME_FULL_VERSION. Adding a unique description is also highly recommended.

# Override Runtime label and environment variables metadata
ENV ML_RUNTIME_EDITOR="VsCode" \
    ML_RUNTIME_EDITION="Community" \
    ML_RUNTIME_SHORT_VERSION="2023.11" \
    ML_RUNTIME_MAINTENANCE_VERSION="1" \
    ML_RUNTIME_FULL_VERSION="2023.11.1" \
    ML_RUNTIME_DESCRIPTION="This runtime includes VsCode editor v4.16.1 and is based on PBJ Workbench image with Python 3.10 and CUDA"

LABEL com.cloudera.ml.runtime.editor=$ML_RUNTIME_EDITOR \
      com.cloudera.ml.runtime.edition=$ML_RUNTIME_EDITION \
      com.cloudera.ml.runtime.full-version=$ML_RUNTIME_FULL_VERSION \
      com.cloudera.ml.runtime.short-version=$ML_RUNTIME_SHORT_VERSION \
      com.cloudera.ml.runtime.maintenance-version=$ML_RUNTIME_MAINTENANCE_VERSION \
      com.cloudera.ml.runtime.description=$ML_RUNTIME_DESCRIPTION
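Before building, it can help to sanity-check the version metadata. The sketch below assumes that "congruent" means the full version is the short version followed by a dot and the maintenance version, which matches the values used in this Dockerfile ("2023.11", "1", "2023.11.1"). The function name check_runtime_version is hypothetical and is not part of the repository.

```shell
# Hypothetical helper (not part of the repository): succeeds only when the
# full version equals "<short version>.<maintenance version>".
check_runtime_version() {
    short="$1"
    maint="$2"
    full="$3"
    if [ "$full" = "$short.$maint" ]; then
        echo "ok: $full"
    else
        echo "mismatch: expected $short.$maint, got $full" >&2
        return 1
    fi
}

# Values taken from the Dockerfile shown in this article.
check_runtime_version "2023.11" "1" "2023.11.1"
```

With the values above the check prints "ok: 2023.11.1". If you bump the maintenance version to "2" without updating the full version, the function returns a non-zero status and reports the mismatch.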

Build Dockerfile and Push Image

Run the following command from the directory containing the Dockerfile to build the Docker image, replacing the username and image tag with your own:

docker build . -t pauldefusco/vscode4_cuda11_cml_runtime:latest

Push the image to your preferred Docker repository. In this example we use a personal repository, but CML and CDSW also work with enterprise container registries. If your CML Workspace resides in an air-gapped environment, you can use your Podman Local Container Registry.

docker push pauldefusco/vscode4_cuda11_cml_runtime
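The build and push commands above can be parameterized so the same image reference is used in both steps. This is a minimal sketch, not the author's workflow: the user name "myuser" is a placeholder, the helper image_ref is hypothetical, and tagging the image with the runtime's full version instead of "latest" is an assumption made here for traceability. The script only prints the commands (a dry run) so you can review them before running them yourself.

```shell
# Hypothetical values: replace with your own registry user and image name.
REGISTRY_USER="myuser"
IMAGE_NAME="vscode4_cuda11_cml_runtime"
# Assumption: reuse ML_RUNTIME_FULL_VERSION from the Dockerfile as the image tag.
IMAGE_TAG="2023.11.1"

# Compose a full image reference of the form <user>/<name>:<tag>.
image_ref() {
    printf '%s/%s:%s\n' "$1" "$2" "$3"
}

IMAGE="$(image_ref "$REGISTRY_USER" "$IMAGE_NAME" "$IMAGE_TAG")"

# Dry run: print the commands instead of executing them.
# Run them from the directory that contains the Dockerfile.
echo "docker build . -t $IMAGE"
echo "docker push $IMAGE"
```

Using an explicit tag rather than "latest" makes it easier to tell which build is registered in the Runtime Catalog, but either approach works with the commands shown in this article.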

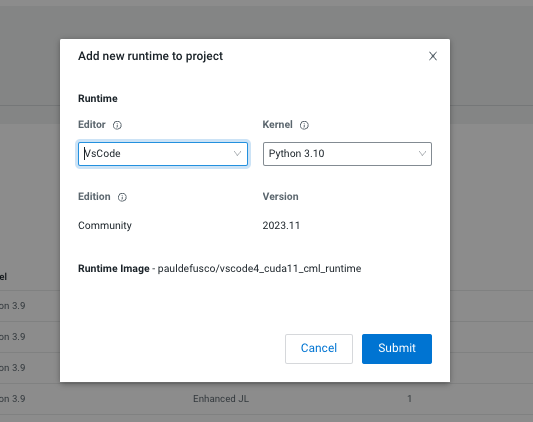

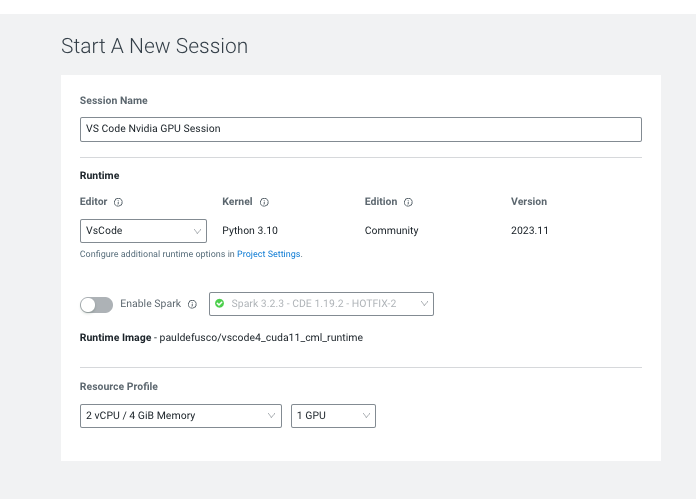

Add the Runtime to your CML Workspace Runtime Catalog and CML Project

This step can only be completed by a CML user with Workspace Admin rights. If you don't have Admin rights please reach out to your CML or CDP Admin.

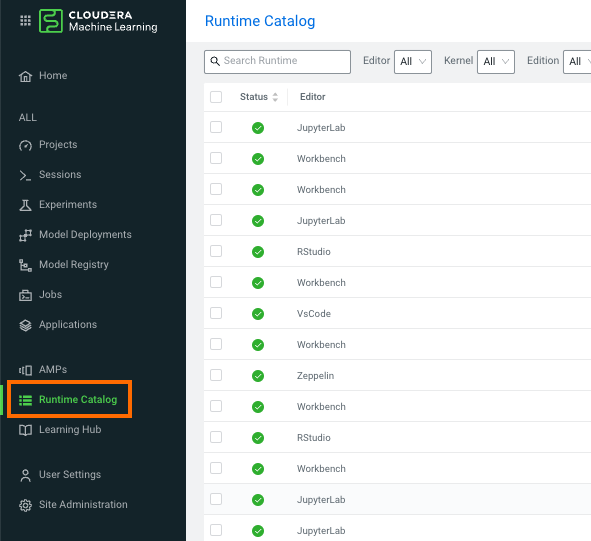

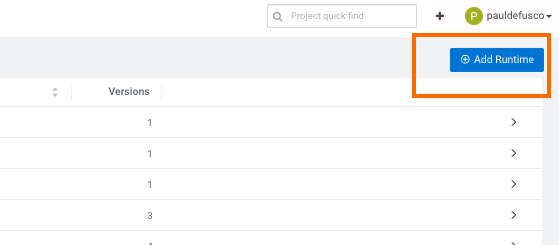

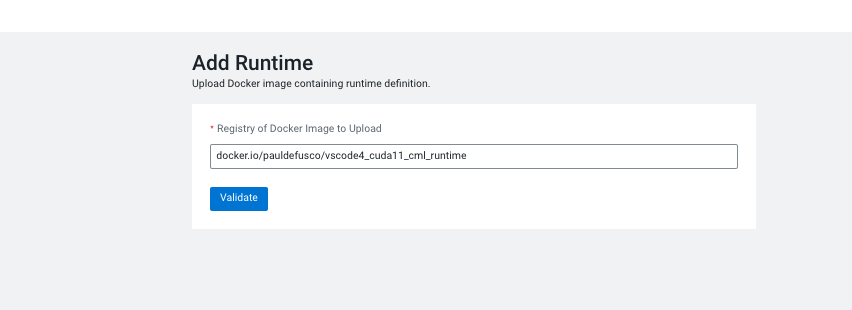

- In your CML Workspace, navigate to the Runtime Catalog tab (available on the left side of your screen). Then click on "Add Runtime" at the top right corner of your screen.

- Add the runtime:

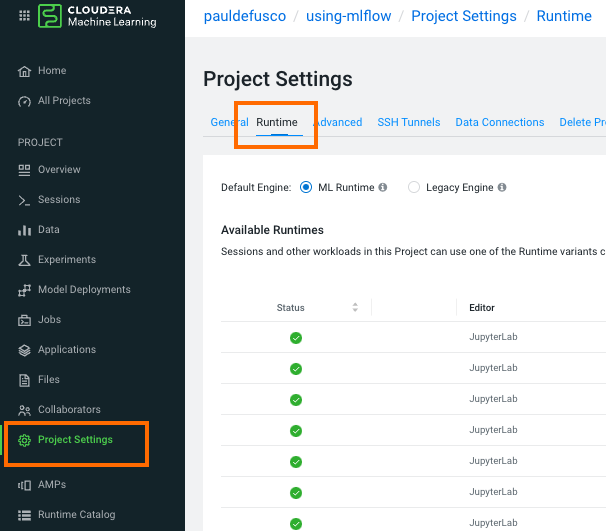

- Navigate back to your CML Project, open Project Settings -> Runtime tab and add the runtime there.

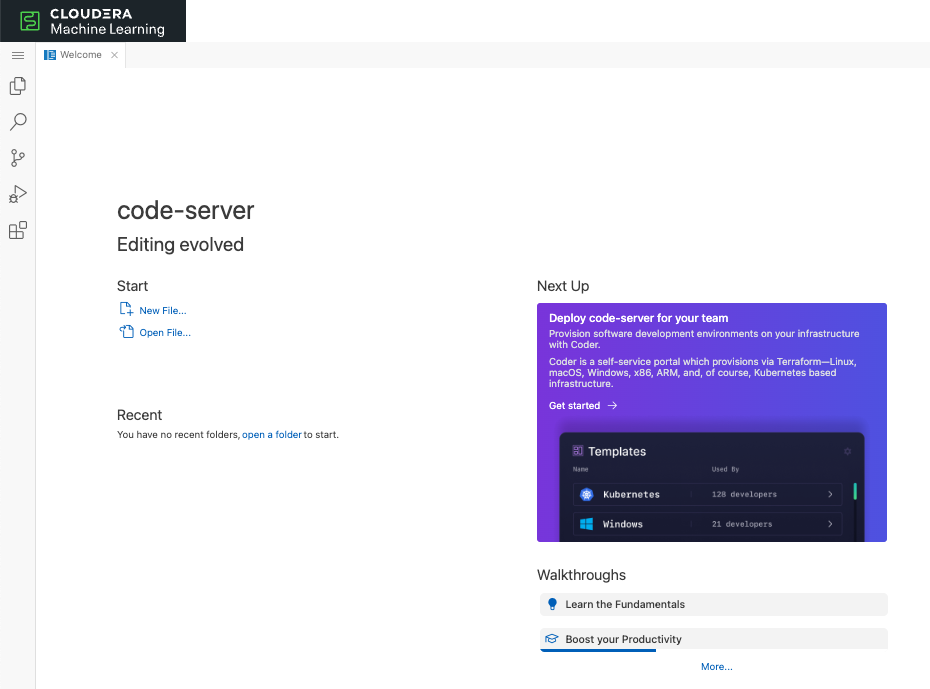

- Congratulations, you are now ready to use VS Code in a CML Session with Nvidia libraries!
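Once a session is running, a quick check from the VS Code terminal can confirm that the pieces installed by the Dockerfile are present. This is a hedged sketch: it assumes the session was started with at least one GPU and that nvidia-smi is exposed inside the container, which depends on how your workspace is configured. The function names check_gpu and check_editor are hypothetical; the launcher path is the symlink created in the Dockerfile.

```shell
# Report whether the Nvidia tooling is reachable from the current session.
check_gpu() {
    if command -v nvidia-smi >/dev/null 2>&1; then
        # -L lists the GPUs visible to the driver.
        nvidia-smi -L || echo "nvidia-smi is installed but no GPU is visible"
    else
        echo "nvidia-smi not found on PATH"
    fi
}

# Report whether the editor launcher symlink created in the Dockerfile exists.
check_editor() {
    if [ -x /usr/local/bin/ml-runtime-editor ]; then
        echo "editor launcher found"
    else
        echo "editor launcher missing"
    fi
}

check_gpu
check_editor
```

If the GPU check reports that nvidia-smi is missing, confirm that the session was launched with a GPU resource profile before troubleshooting the image itself.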

Conclusions and Next Steps

Cloudera ML Runtimes are a set of Docker images created to enable machine learning development in a flexible, fully customizable, lightweight development and production machine learning environment for both CPU and GPU processing frameworks while enabling unfettered access to data, on-demand resources, and the ability to install and use any libraries/algorithms without IT assistance.

You can create custom CML Runtimes by extending the Cloudera Machine Learning base runtimes, PBJ and open runtimes available in this git repository, as we have done in this example, or create your own from scratch as shown in this example.

If you are using CML for Deep Learning, GenAI, and LLM use cases, here are some more examples you may find interesting:

- CML LLM Hands on Lab

- LLM Demo in CML

- How to Launch an Applied Machine Learning Prototype (AMP) in CML

- AMP: Intelligent QA Chatbot with NiFi, Pinecone, and Llama2

- AMP: Text Summarization and more with Amazon Bedrock

- AMP: Fine-Tuning a Foundation Model for Multiple Tasks (with QLoRA)

- AMP: LLM Chatbot Augmented with Enterprise Data

- AMP: Semantic Image Search with Convolutional Neural Networks

- AMP: Deep Learning for Anomaly Detection

- AMP: Deep Learning for Question Answering

- AMP: Automatic Text Summarization

- Quickstarts with PyTorch, Tensorflow and MXNet in CML

- Distributed PyTorch with Horovod and CML Workers in CML

- Distributed Tensorflow with CML Workers in CML

- An end to end example of PyTorch and MLFlow in CML

Created on 11-04-2024 02:41 PM - edited 11-05-2024 12:24 AM

Thanks a lot for this article and the well-described instructions :). I followed them but got stuck at the very end (starting the engine).

I was hoping you could give me some direction or advice for debugging.

Many thanks in advance!

# Dockerfile

engine:1:2: unexpected '/'
1: !/
    ^
Engine exited with status 1.

Created on 11-06-2024 01:57 AM

Hi @kleinhansda,

Thank you for your kind words. Could you please submit your query under the Support Questions section? There will be more experts available to assist you better. I am also tagging @pauldefusco to see if they can help.

Created on 11-12-2024 02:07 PM

Followed up via DM