Community Articles

- Cloudera Community

- Support

- Community Articles

- Change Data Capture (CDC) with Apache NiFi (Part 3...

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Created on 07-18-2017 02:30 PM - edited 08-17-2019 12:00 PM

Objective

This tutorial is the final article of a three part series. We will look at the flow components in greater detail to highlight best practices and potential trouble spots when working with CDC in NiFi. Part 1 Part 2

Environment

This tutorial was tested using the following environment and components:

- Mac OS X 10.11.6

- MySQL 5.7.13

- Apache NiFi 1.3.0

CDC Flow Configuration Details, Best Practices & Troubleshooting

Prerequisites

It's helpful to have completed the first two articles in this series(Part 1 ; Part 2) to have a point of reference for the items discussed below. But, this article will hopefully still be useful for those who are interested in some of the nuances of the CaptureChangeMysql, EnforceOrder and PutDatabaseRecord processors.

State in CaptureChangeMysql

The CaptureChangeMySQL processor has a "Distributed Map Cache Client" property which is set to a DistributedMapCacheClientService controller service to store State information and keep it updated. The DistributedMapCacheClientService controller service communicates with a DistributedMapCacheServer. The CaptureChangeMySql processor can be configured to monitor thousands of tables. Using this controller service to monitor the state information provides this flexibility.

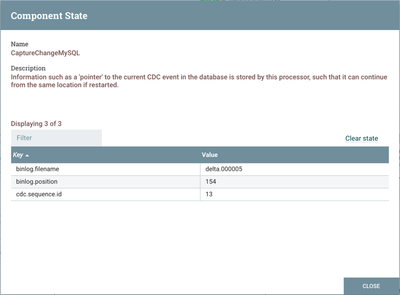

If you stop the CaptureChangeMySQL processor after it has run some binary logs, highlight it and right-click, you will see the option "View State" in the context menu. Selecting that option will show you the state information that has been stored:

Note: This is the default behavior, with the State Update Interval set to 0 seconds, meaning the state is updated when the processor is stopped or shutdown.

If you are debugging CaptureChangeMysql in your flow and wondering why data is not being processed, make sure to "Clear state" so that the processor will look for events at the beginning of your bin logs again.

Additionally, whether the processor has State Information will affect the use of other properties such as Retrieve All Records, Initial Sequence ID, Initial Binlog Filename, and Initial Binlog Position. Look at the CaptureChangeMysql processor's usage documentation for details (https://nifi.apache.org/docs/nifi-docs/components/org.apache.nifi/nifi-cdc-mysql-nar/1.3.0/org.apach...)

State in EnforceOrder

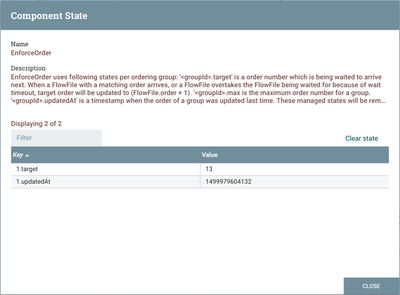

State information is also stored for the EnforceOrder processor.

If you are debugging EnforceOrder in your flow and wondering why data is not being processed, make sure to "Clear state" to reset.

EnforceOrder in a Clustered Environment

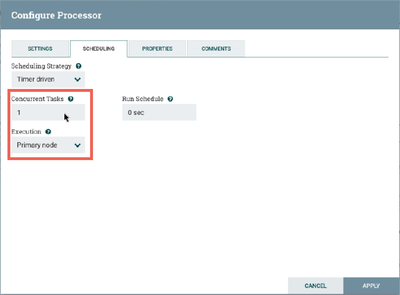

The EnforceOrder processor is used to guarantee the sequence of events. In the tutorial's flow, it plays this crucial role to make sure the PutDatabaseRecord processor applies the SQL from the bin logs in the proper order. If you are running NiFi in a cluster, processors prior to EnforceOrder could be multi-threaded and/or run on multiple nodes to optimize performance. However, the EnforceOrder processor must be running on the primary node and single-threaded:

PutDatabaseRecord Database Connection Pooling Service

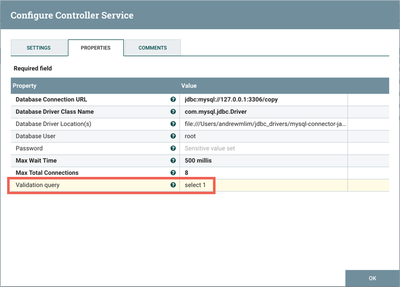

The PutDatabaseRecord processor requires a DBCPConnectionPool controller service. This controller service has a property called "Validation query" which is used to verify connections before returning them:

Setting this property isn't a requirement, but can be helpful if your database connections are inconsistent. For MySQL, I set "Validation query" to "select 1" to open a new connection if the first one fails. The syntax for other database types can be found at https://stackoverflow.com/questions/10684244/dbcp-validationquery-for-different-databases.

Note: Using query validation may have a performance penalty.

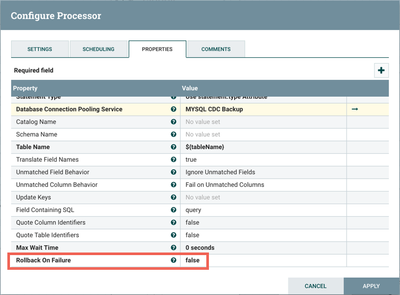

PutDatabaseRecord "Rollback On Failure" Property

The PutDatabaseRecord processor has a "Rollback on Failure" property:

The default value is "false", but setting to "true" will stop the processing of incoming flowfiles if one has failed. Effectively, this stops the processor even if it is running and doesn't allow further processing until the failed flowfile is corrected.

Note: The "Rollback on Failure" property exists in the PutHiveQL, PutSQL, and PutHiveStreaming processors as well.

Created on 06-12-2019 06:22 AM

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Great article!

I have a couple of questions

- Is this template being used in production? If so what are the common challenges you faced?

- The LinkedBlockingQueue<RawBinlogEvent> in CaptureChangeMySQL is in memory. When running this processor in cluster and primary node changes from one node to another, don't we loose the number events already stored in the queue?

Created on 12-17-2019 07:30 PM

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Hi @alim

Is there any way to work with CaptureChangeMySQL and EnforceOrder in cluster

environment for better performance?

Created on 10-03-2025 01:47 AM

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Since the previous link (hortonworks.com) has expired, please refer to the updated links below:

Change Data Capture (CDC) with Apache NiFi – Part 1 of 3

Change Data Capture (CDC) with Apache NiFi – Part 2 of 3

Change Data Capture (CDC) with Apache NiFi – Part 3 of 3