- Distributed Pricing Engine using Dockerized Spark ...

Created on 07-16-2018 01:54 PM - edited on 02-24-2020 01:38 AM by VidyaSargur

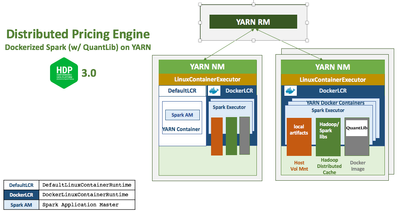

A distributed compute engine for pricing financial derivatives using QuantLib with Spark running in Docker containers on YARN with HDP 3.0.

Introduction

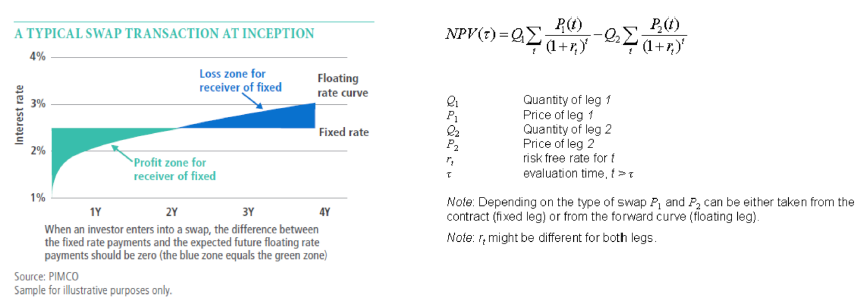

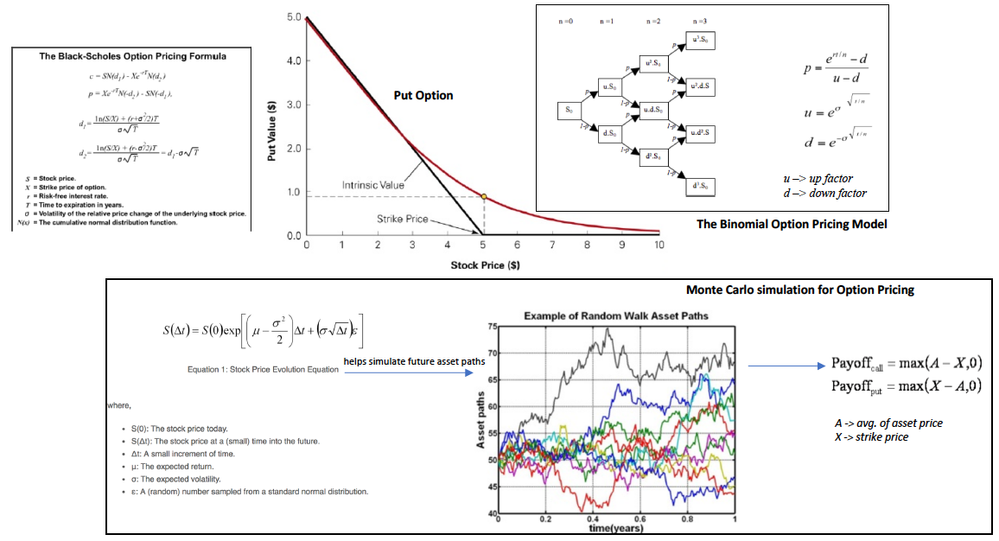

Modern financial trading and risk platforms employ compute engines for pricing and risk analytics across asset classes (equities, fixed income, FX, etc.) to drive real-time trading decisions and quantitative risk management. Pricing financial instruments involves a range of algorithms: simple cashflow discounting, analytical methods based on stochastic processes such as Black-Scholes for options pricing, and more computationally intensive numerical methods such as finite differences, Monte Carlo and Quasi Monte Carlo techniques. The choice depends on the instrument being priced (bonds, stocks, or derivatives such as options and swaps) and on the pricing (NPV, rates, etc.) and risk (DV01, PV01, higher-order greeks such as gamma and vega) metrics being calculated. Quantitative finance libraries, typically written in low-level programming languages such as C and C++, leverage efficient data structures and parallel programming constructs to realize the potential of modern multi-core CPU and GPU architectures, and even specialized hardware in the form of FPGAs and ASICs, for high-performance computation of pricing and risk metrics.

Quantitative and regulatory risk management and reporting imperatives, such as valuation adjustment (XVA: CVA, DVA, FVA, KVA, MVA) calculations for FRTB, CCAR and DFAST in the US or MiFID in Europe, necessitate valuing portfolios of millions of trades across tens of thousands of scenario simulations and aggregating the computed metrics across a vast number and combination of dimensions. This is a data-intensive distributed computing problem that can benefit from:

- Distributed compute frameworks such as Apache Spark and Hadoop, which offer scale-out, shared-nothing, fault-tolerant, data-parallel architectures that are more portable and have easier-to-use APIs than HPC frameworks such as MPI and OpenMP.

- The elasticity and operational efficiencies of cloud computing, especially burst-compute semantics for these use cases, augmented by OS virtualization through containers and lean DevOps practices.

In this article, we try to capture the essence of the problem space discussed above through a trivial implementation of a compute engine for pricing financial derivatives. It combines parallel programming through QuantLib, an open source library for quantitative finance, with the distributed computing framework Apache Spark, running in an OS-virtualized environment through Docker containers, with Apache Hadoop YARN as the resource scheduler. The cluster is provisioned, orchestrated and managed in an OpenStack private cloud through Hortonworks Cloudbreak, all on a single platform in the form of HDP 3.0.

Pricing Semantics

The engine leverages QuantLib to compute:

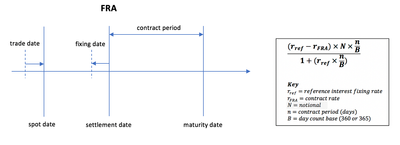

Spot Price of a Forward Rate Agreement (FRA) using the library’s yield term structure based on flat interpolation of forward rates

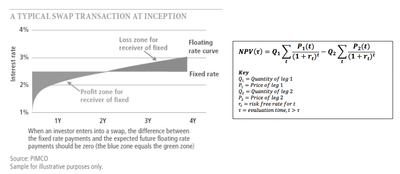

NPV of a vanilla fixed-float (6M/EURIBOR-6M) 5yr Interest Rate Swap (IRS)

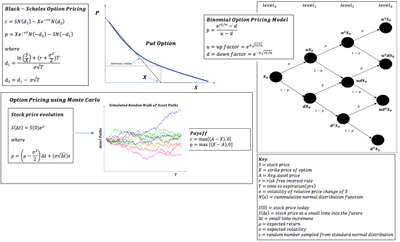

NPV of a European Equity Put Option averaged over multiple algorithmic calculations (Black-Scholes, Binomial, Monte-Carlo)

The requisite inputs for these calculations – yield curves, fixings, instrument definitions etc. are statically incorporated for the purpose of demonstration.
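To make the third metric concrete, here is a minimal pure-Python sketch of pricing a European put three ways (closed-form Black-Scholes, a Cox-Ross-Rubinstein binomial tree, and a plain Monte Carlo simulation) and averaging the results. It is not the engine's code, which calls QuantLib through its Java bindings; the function names, the no-dividend assumption and the input values (spot 36, strike 40, 6% rate, 20% volatility, 1 year) are illustrative assumptions, hard-coded in the same spirit as the article's static inputs.

```python
import math
import random


def norm_cdf(x):
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def put_black_scholes(s, k, r, sigma, t):
    """Closed-form Black-Scholes price of a European put (no dividends)."""
    d1 = (math.log(s / k) + (r + 0.5 * sigma ** 2) * t) / (sigma * math.sqrt(t))
    d2 = d1 - sigma * math.sqrt(t)
    return k * math.exp(-r * t) * norm_cdf(-d2) - s * norm_cdf(-d1)


def put_binomial_crr(s, k, r, sigma, t, steps=500):
    """Cox-Ross-Rubinstein binomial tree, rolled back from expiry."""
    dt = t / steps
    u = math.exp(sigma * math.sqrt(dt))
    d = 1.0 / u
    p = (math.exp(r * dt) - d) / (u - d)  # risk-neutral up probability
    disc = math.exp(-r * dt)
    # Terminal payoffs; j is the number of up moves.
    values = [max(k - s * u ** (2 * j - steps), 0.0) for j in range(steps + 1)]
    for n in range(steps, 0, -1):
        values = [disc * (p * values[j + 1] + (1.0 - p) * values[j])
                  for j in range(n)]
    return values[0]


def put_monte_carlo(s, k, r, sigma, t, paths=100_000, seed=42):
    """Monte Carlo estimate using terminal prices under geometric Brownian motion."""
    rng = random.Random(seed)
    drift = (r - 0.5 * sigma ** 2) * t
    vol = sigma * math.sqrt(t)
    total = 0.0
    for _ in range(paths):
        total += max(k - s * math.exp(drift + vol * rng.gauss(0.0, 1.0)), 0.0)
    return math.exp(-r * t) * total / paths


def average_put_npv(s, k, r, sigma, t):
    """Average the three estimates, mirroring the OptionPV idea."""
    prices = [
        put_black_scholes(s, k, r, sigma, t),
        put_binomial_crr(s, k, r, sigma, t),
        put_monte_carlo(s, k, r, sigma, t),
    ]
    return sum(prices) / len(prices)


if __name__ == "__main__":
    # Illustrative inputs only (assumed, not taken from the article).
    print(average_put_npv(36.0, 40.0, 0.06, 0.20, 1.0))
```

The three methods should agree closely for a vanilla European option, so averaging them is mostly a way to generate a mix of cheap and expensive compute per trade.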

Dimensional aggregation of these computed metrics for a portfolio of trades is trivially simulated by performing the calculation N times (a portfolio size of N trades) and computing the mean.

Technical Details

The engine exploits the embarrassingly parallel nature of the problem: the pricing tasks are independent of one another, so Apache Spark runs them in parallel in its map phase, and computing the mean is the trivial reduction operation.
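The shape of that computation can be sketched locally in plain Python, without Spark: split N trades into partitions, price each partition independently (the map), and merge per-partition (sum, count) pairs into a mean (the reduce). This is a single-process stand-in and not the engine's code; on a cluster, Spark would run the map step across executors. `price_trade` is a hypothetical placeholder for a QuantLib pricing call and returns a made-up value.

```python
from functools import reduce


def price_trade(trade_id):
    """Hypothetical stand-in for a QuantLib pricing call; returns a fake NPV."""
    return 100.0 + (trade_id % 7)


def split(n_trades, n_splits):
    """Partition trade ids 0..n_trades-1 into n_splits interleaved slices."""
    return [range(i, n_trades, n_splits) for i in range(n_splits)]


def price_partition(trade_ids):
    """Map step: price every trade in one partition and emit (sum, count)."""
    total, count = 0.0, 0
    for trade_id in trade_ids:
        total += price_trade(trade_id)
        count += 1
    return total, count


def combine(a, b):
    """Reduce step: merge two (sum, count) pairs; associative and commutative."""
    return a[0] + b[0], a[1] + b[1]


def mean_price(n_trades, n_splits):
    """Mean price over n_trades (must be > 0) computed across n_splits partitions."""
    partials = map(price_partition, split(n_trades, n_splits))
    total, count = reduce(combine, partials, (0.0, 0))
    return total / count


if __name__ == "__main__":
    print(mean_price(5000, 20))
```

Carrying (sum, count) pairs rather than per-partition means keeps the result independent of how the trades are split, which is what lets the reduction run in any order.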

A more real-world pricing engine would also benefit from Spark's distributed in-memory facilities for shared ancillary datasets such as market data (quotes), derived market data (curves), reference data (term structures, fixings, etc.) and trade data as inputs for pricing. These are typically subscribed to from the corresponding data services and kept in persistent structures that leverage data locality in HDFS.

These pricing tasks essentially call QuantLib library functions, which can range from simple scalar computations to the parallel algorithms discussed above, to price trades. Building, installing and managing the library and its dependencies across a cluster of hundreds of nodes can be cumbersome and challenging. And what if one wants to run regression tests on a newer version of the library alongside the current one?

OS virtualization through Docker is a great way to address these operational challenges: hardware and OS portability, build automation, multi-version dependency management, packaging and isolation, and integration with server-side infrastructure typically running on JVMs. It also allows more efficient, higher-density utilization of cluster resources.

Here, we use a Docker image with QuantLib layered over lightweight CentOS, using Java language bindings generated through SWIG.

With Hadoop 3.1 in HDP 3.0, YARN’s support for LinuxContainerExecutor beyond the classic DefaultContainerExecutor facilitates running multiple container runtimes – the DefaultLinuxContainerRuntime and now the DockerLinuxContainerRuntime side by side. Docker containers running QuantLib with Spark executors, in this case, are scheduled and managed across the cluster by YARN using its DockerLinuxContainerRuntime.

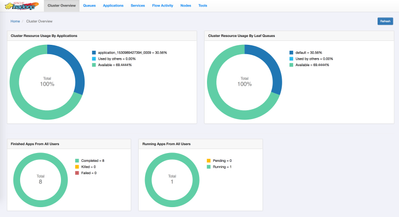

This facilitates consistency of resource management and scheduling across container runtimes and lets Dockerized applications take full advantage of all of the YARN RM & scheduling aspects including Queues, ACLs, fine-grained sharing policies, powerful placement policies etc.

Also, when running Spark executor in Docker containers on YARN, YARN automatically mounts the base libraries and any other requested libraries as follows:

For more details, I encourage you to read these awesome blogs on containerization in Apache Hadoop YARN 3.1 and containerized Apache Spark on YARN 3.1.

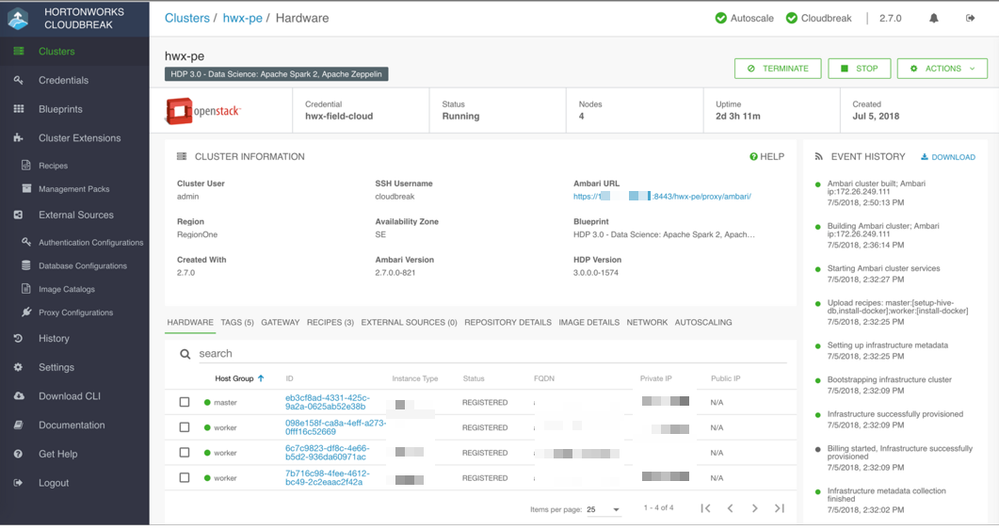

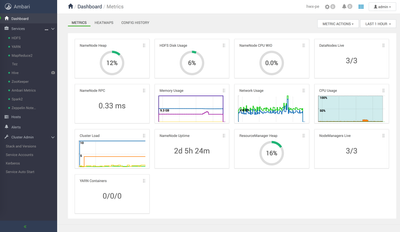

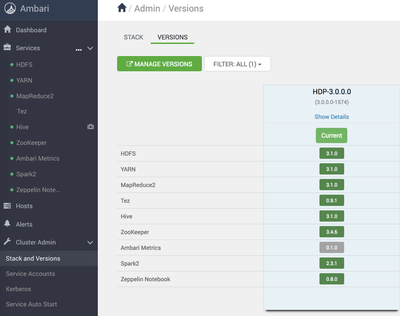

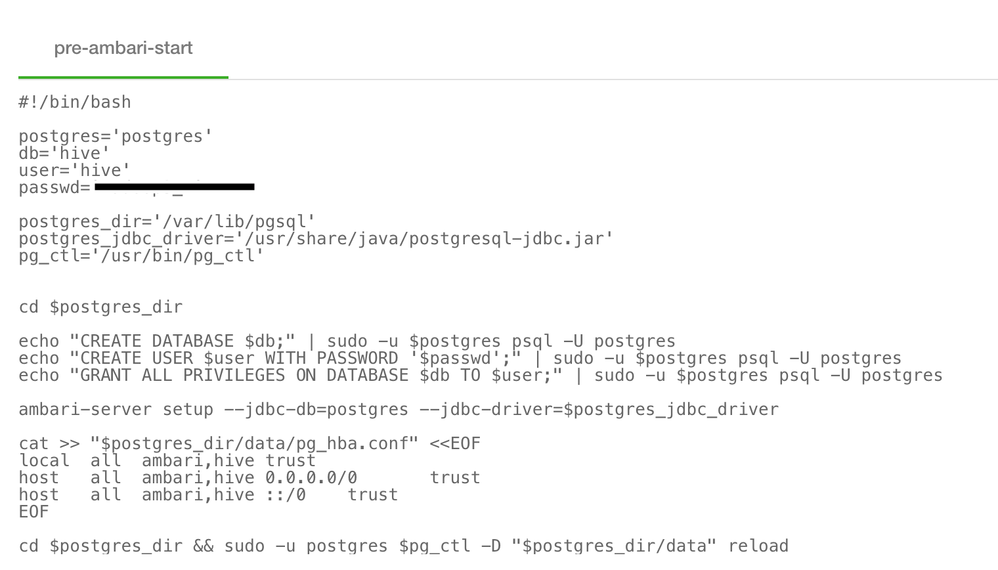

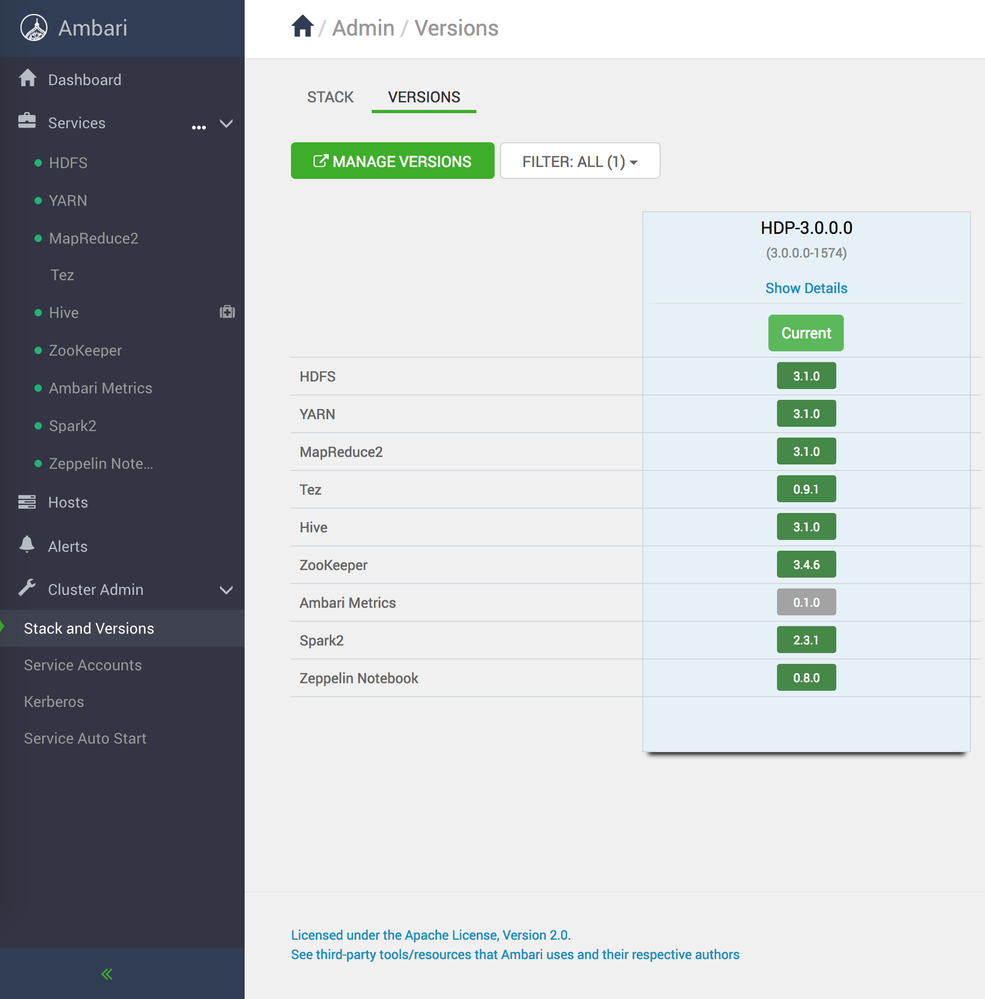

This entire infrastructure is provisioned on an OpenStack private cloud using Cloudbreak 2.7.0, which first automates the installation of Docker CE 18.03 on CentOS 7.4 VMs and then the installation of an HDP 3.0 cluster with Apache Hadoop 3.1 and Apache Spark 2.3 using the shiny new Apache Ambari 2.7.0.

Tech Stack

- Hortonworks Cloudbreak 2.7.0

- Apache Ambari 2.7.0

- Hortonworks Data Platform 3.0.0 (Apache Hadoop 3.1, Apache Spark 2.3)

- Docker 18.03.1-ce on CentOS 7.4

- QuantLib 1.9.2

Please Do Try This at Home

Ensure you have access to a Hortonworks Cloudbreak 2.7.0 instance. You can set one up locally by using this project: https://github.com/amolthacker/hwx-local-cloudbreak.

Please refer to the documentation to meet the prerequisites and set up credentials for the desired cloud provider.

Clone the repo: https://github.com/amolthacker/hwx-pricing-engine

Update the following as desired:

- Infrastructure definition under cloudbreak/clusters/openstack/hwx-field-cloud/hwx-pe-hdp3.json. Ensure you refer to the right Cloudbreak base image.

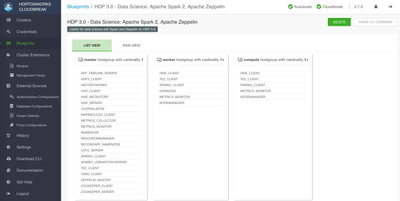

- Ambari blueprint under cloudbreak/blueprints/hwx-pe-hdp3.json

Now upload the following to your Cloudbreak instance:

- Ambari Blueprint: cloudbreak/blueprints/hwx-pe-hdp3.json

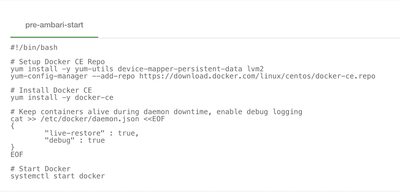

- Recipe to install Docker CE with custom Docker daemon settings: cloudbreak/recipes/pre-ambari/install-docker.sh

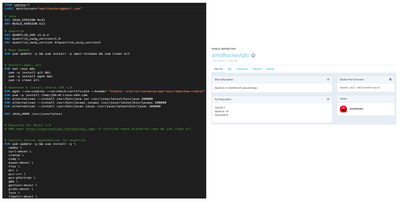

Now execute the following using Cloudbreak CLI to provision the cluster:

cb cluster create --cli-input-json cloudbreak/clusters/openstack/hwx-field-cloud/hwx-pe-hdp3.json --name hwx-pe

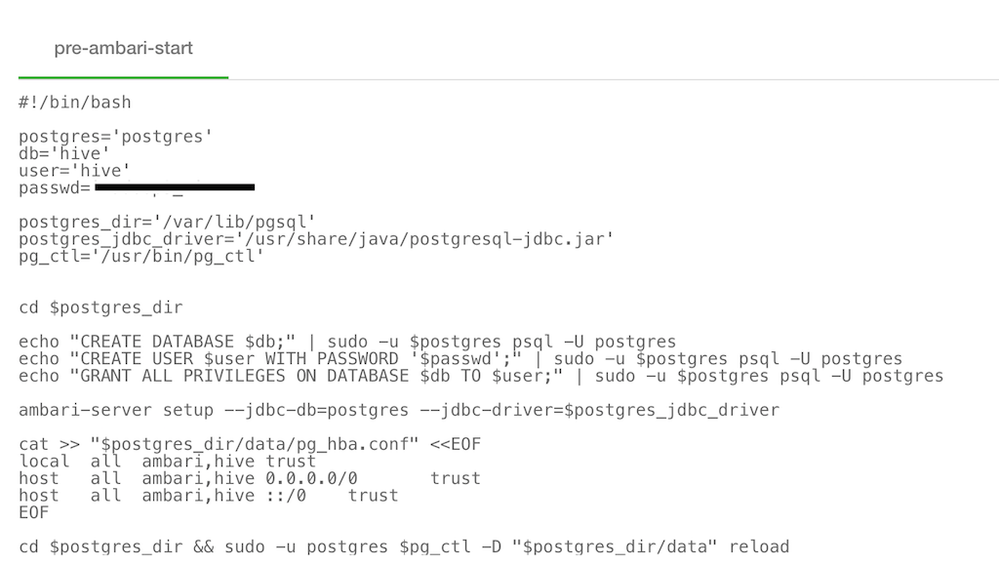

This will first instantiate a cluster using the cluster definition JSON and the referenced base image, then download the packages for Ambari and HDP, install Docker (a prerequisite for running Dockerized apps on YARN) and set up the DB for Ambari and Hive using the recipes, and finally install HDP 3 using the Ambari blueprint.

Once the cluster is built, you should be able to log into Ambari to verify.

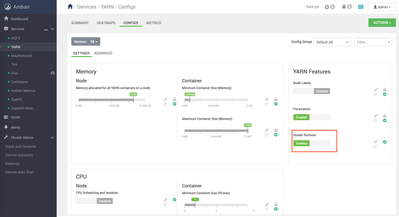

Now we will configure the YARN NodeManager to run LinuxContainerExecutor in non-secure mode, purely for demonstration purposes, so that all Docker containers scheduled by YARN run as the ‘nobody’ user. A Kerberized cluster with cgroups enabled is recommended for production.

Enable Docker Runtime for YARN

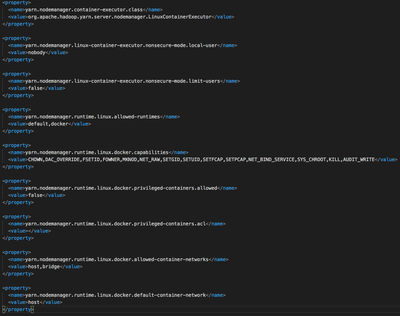

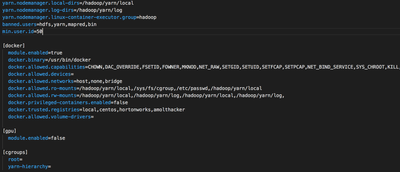

Update yarn-site.xml and container-executor.cfg as follows:

A few configurations to note here:

- Setting yarn.nodemanager.container-executor.class to use LinuxContainerExecutor

- Setting min.user.id to a value (50) lower than the user id of the ‘nobody’ user (99)

- Mounting /etc/passwd in read-only mode into the Docker containers to expose the spark user

- Adding requisite Docker registries to the trusted list
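As a rough sketch of what these settings might look like, the snippet below holds them in Python dictionaries and renders them in the yarn-site.xml and container-executor.cfg formats so they can be eyeballed or diffed against what Ambari shows. The property names are the ones documented for the YARN Docker runtime in Apache Hadoop 3.1; the LinuxContainerExecutor class, min.user.id=50 and the /etc/passwd mount come from the list above, while the allowed-runtimes value, the group name and the registry name are assumptions (the registry is a hypothetical placeholder). Treat it as a starting point rather than the exact configuration used here.

```python
import xml.etree.ElementTree as ET

# yarn-site.xml properties.
YARN_SITE = {
    "yarn.nodemanager.container-executor.class":
        "org.apache.hadoop.yarn.server.nodemanager.LinuxContainerExecutor",
    # Assumption: enable the Docker runtime next to the default one.
    "yarn.nodemanager.runtime.linux.allowed-runtimes": "default,docker",
}

# Top-level container-executor.cfg entries.
CONTAINER_EXECUTOR = {
    "yarn.nodemanager.linux-container-executor.group": "hadoop",  # assumed group
    "min.user.id": "50",  # below the uid of 'nobody' (99)
}

# Entries in the [docker] section of container-executor.cfg.
DOCKER_SECTION = {
    "module.enabled": "true",
    "docker.allowed.ro-mounts": "/etc/passwd",
    # Hypothetical registry name; replace with the registries you trust.
    "docker.trusted.registries": "registry.example.com",
}


def to_yarn_site_xml(props):
    """Render properties as a yarn-site.xml style <configuration> document."""
    root = ET.Element("configuration")
    for name, value in props.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    return ET.tostring(root, encoding="unicode")


def to_container_executor_cfg(top_level, docker):
    """Render key=value lines followed by an indented [docker] section."""
    lines = [f"{key}={value}" for key, value in top_level.items()]
    lines.append("[docker]")
    lines += [f"  {key}={value}" for key, value in docker.items()]
    return "\n".join(lines)


if __name__ == "__main__":
    print(to_yarn_site_xml(YARN_SITE))
    print(to_container_executor_cfg(CONTAINER_EXECUTOR, DOCKER_SECTION))
```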

Now restart YARN

SSH into the cluster gateway node and download the following from the repo:

compute-engine-spark-1.0.0.jar

$ wget https://github.com/amolthacker/hwx-pricing-engine/raw/master/compute-engine-spark-1.0.0.jar

compute-price.sh

$ wget https://github.com/amolthacker/hwx-pricing-engine/raw/master/compute/scripts/compute-price.sh

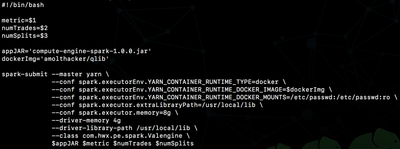

Notice the directives around using Docker as executor env for Spark on YARN in client mode.
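For readers without the script open, the sketch below assembles a spark-submit command of the kind those directives imply. It is an assumption-laden illustration, not the contents of compute-price.sh: `spark.executorEnv.*` is Spark's standard way to set executor environment variables and the `YARN_CONTAINER_RUNTIME_*` variables are the ones YARN's Docker runtime reads, but the image name and main class are hypothetical placeholders, and passing metric, numTrades and numSplits straight through to the jar is a guess.

```python
import shlex


def build_spark_submit(metric, num_trades, num_splits,
                       image="registry.example.com/hwx-pe/quantlib:latest",  # hypothetical
                       main_class="com.example.PricingEngine",  # hypothetical
                       jar="compute-engine-spark-1.0.0.jar"):
    """Build a spark-submit argument list that runs executors in Docker on YARN."""
    docker_env = {
        # Ask YARN to launch executor containers with the Docker runtime.
        "YARN_CONTAINER_RUNTIME_TYPE": "docker",
        "YARN_CONTAINER_RUNTIME_DOCKER_IMAGE": image,
        # Read-only mount so the container can resolve the submitting user.
        "YARN_CONTAINER_RUNTIME_DOCKER_MOUNTS": "/etc/passwd:/etc/passwd:ro",
    }
    cmd = ["spark-submit",
           "--master", "yarn",
           "--deploy-mode", "client",
           "--class", main_class]
    for key, value in docker_env.items():
        cmd += ["--conf", f"spark.executorEnv.{key}={value}"]
    # Assumed application arguments, mirroring the script's parameters.
    cmd += [jar, metric, str(num_trades), str(num_splits)]
    return cmd


if __name__ == "__main__":
    # Print the command instead of running it, so it can be reviewed first.
    print(shlex.join(build_spark_submit("OptionPV", 5000, 20)))
```

In client mode the driver runs on the gateway node outside Docker, so only the executor environment needs these variables.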

After making the script executable (chmod +x compute-price.sh), you should be ready to simulate a distributed pricing compute using the following command:

./compute-price.sh <metric> <numTrades> <numSplits>

where metric:

- FwdRate: Spot Price of Forward Rate Agreement (FRA)

- NPV: Net Present Value of a vanilla fixed-float Interest Rate Swap (IRS)

- OptionPV: Net Present Value of a European Equity Put Option averaged over multiple algorithmic calcs (Black-Scholes, Binomial, Monte Carlo)

e.g. ./compute-price.sh OptionPV 5000 20

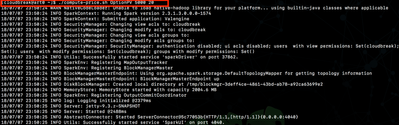

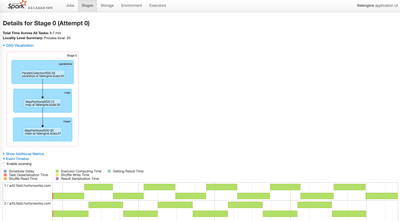

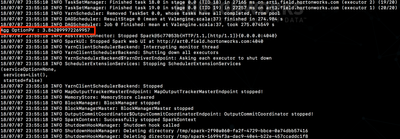

And see the job execute as follows:

Wrapping up …

HDP 3.0 is pretty awesome, right? It went GA on Friday and I can tell you, if a decade ago you thought Hadoop was exciting, this will blow your mind!

In this article, we’ve just scratched the surface and looked at only one of the myriad compute-centric aspects of innovation in the platform. For a more detailed read on platform capabilities, direction and unbounded possibilities, I urge you to read the blog series from the folks behind this.

References

https://hortonworks.com/blog/trying-containerized-applications-apache-hadoop-yarn-3-1/

https://hortonworks.com/blog/containerized-apache-spark-yarn-apache-hadoop-3-1/