- Flexing your Compute Muscle with Kubernetes [Part ...

Created on 01-03-2019 12:16 AM - edited 08-17-2019 05:02 AM

This is the second article in a two-part series (see Part 1) in which we look at yet another architectural variation of the distributed pricing engine discussed here. This time we use a P2P cluster design built on the Akka framework and deployed on Kubernetes, with a native API Gateway based on Ambassador (which in turn builds on Envoy, an L4/L7 proxy) and monitoring infrastructure powered by Prometheus.

The Cloud Native Journey

In the previous article, we only scratched the surface of one of the top-level CNCF projects: Kubernetes, the core container management, orchestration and scheduling platform. In this article, we take our cloud native journey to the next level by exploring more nuanced aspects of Kubernetes (support for stateful applications through StatefulSets, plus Service and Ingress), including the use of Helm, the package manager for Kubernetes.

We will also look at two other top-level CNCF projects, Prometheus and Envoy, and at how they form fundamental building blocks of any production-viable cloud native computing stack.

Application and Infrastructure Architecture

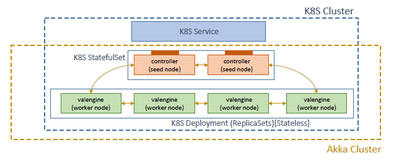

We employ a peer-to-peer cluster design for the distributed compute engine, leveraging the Akka Cluster framework. Without going into much detail about how the framework works (see the documentation for more), it essentially provides a fault-tolerant, decentralized P2P cluster membership service through a gossip protocol with no SPOF. The cluster nodes need to register themselves with an ordered set of seed nodes (primary/secondaries) in order to discover other nodes. This requires the seed nodes to have state semantics with respect to leadership assignment and stickiness of addressable IP addresses/DNS names.
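To make the seed-node idea concrete, here is a toy sketch of gossip-style membership in plain Python. It is not Akka's actual protocol (which also tracks member states, vector clocks and failure detection); it only illustrates how nodes that initially know just the seeds end up knowing the whole cluster. The worker names `ve-a` to `ve-d` are made up for the illustration.

```python
import random


def gossip_until_converged(seeds, workers, rng, max_rounds=1000):
    """Toy push-pull gossip.

    Returns the number of rounds until every node knows every other node,
    or None if max_rounds is exhausted first.
    """
    everyone = set(seeds) | set(workers)
    # Seeds know each other; a joining worker initially knows only the seeds.
    view = {node: set(seeds) | {node} for node in everyone}

    for round_no in range(1, max_rounds + 1):
        for node in sorted(everyone):
            peers = sorted(view[node] - {node})
            if not peers:
                continue  # nobody to gossip with yet
            peer = rng.choice(peers)
            # Push-pull: both sides end up with the union of their views.
            merged = view[node] | view[peer]
            view[node] = merged
            view[peer] = set(merged)
        if all(known == everyone for known in view.values()):
            return round_no
    return None


rounds = gossip_until_converged(
    seeds=["ve-ctrl-0", "ve-ctrl-1"],
    workers=["ve-a", "ve-b", "ve-c", "ve-d"],  # hypothetical worker names
    rng=random.Random(42),
)
print("membership converged after", rounds, "round(s)")
```

Because every worker contacts a seed first, the seeds quickly learn about all workers and spread that knowledge onward, which is why their addresses need to be stable.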

A pair of nodes (for redundancy) are designated as seed nodes that act as the controller (ve-ctrl) which:

- Provides contact points for the valengine (ve) worker nodes to join the cluster

- Exposes a RESTful service (using Akka HTTP) for job submission from an HTTP client

- Dispatches jobs to the registered ve workers

The other non-seed cluster nodes act as stateless workers that are routed compute job requests and perform the actual pricing task. This facilitates elasticity of the compute function as worker nodes join and leave the cluster depending on the compute load.

So, how do we deploy the cluster on Kubernetes?

We have the following 3 fundamental requirements:

- The seed controller nodes need to have state semantics with respect to leadership assignment and stickiness of addressable IP addresses/DNS names

- The non-seed worker nodes are stateless compute nodes that need to be elastic (be able to auto-scale in/out based on compute load)

- Load-balanced API service to facilitate pricing job submissions via REST

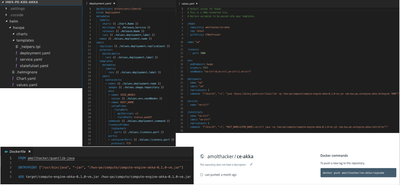

The application is containerized using Docker and packaged using Helm, and the containers are managed and orchestrated with Kubernetes on Google Kubernetes Engine (GKE), using the following core constructs:

- StatefulSets - Manages the deployment and scaling of a set of Pods, and provides guarantees about the ordering and uniqueness of these Pods.

- Deployment/ReplicaSets – Manages the deployment and scaling of a homogeneous set of stateless pods in parallel

- Service - An abstraction which defines a logical set of Pods and a policy by which to access them through the Endpoints API that reflects updates in the state of associated Pods

These constructs map quite naturally onto the application, as follows:

- The controller (ve-ctrl) seed nodes are deployed as StatefulSets as an ordered set with stable (across pod (re)scheduling) network co-ordinates

- The valengine (ve) workers are deployed as ReplicaSets managed through a Deployment decorated with semantics for autoscaling in parallel (as opposed to sequentially, as is the case with StatefulSets) based on compute load

- The controller pods are fronted by a K8s Service exposing the REST API to submit compute jobs
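The "stable network co-ordinates" of a StatefulSet come from its naming scheme: each pod gets an ordinal suffix and, with a headless Service, a DNS name of the form `<pod>.<service>.<namespace>.svc.<cluster-domain>`. The sketch below shows how such names could be turned into Akka seed-node addresses. The headless service name, namespace, actor system name, port and the `akka.tcp` scheme (classic remoting) are all assumptions for illustration and are not taken from the repo.

```python
def stateful_pod_dns(statefulset, headless_service, namespace, replicas,
                     cluster_domain="cluster.local"):
    """Stable per-pod DNS names Kubernetes gives to StatefulSet pods."""
    return [
        f"{statefulset}-{ordinal}.{headless_service}.{namespace}.svc.{cluster_domain}"
        for ordinal in range(replicas)
    ]


def akka_seed_nodes(hosts, actor_system, port):
    """Build seed-node addresses; the URI scheme assumes classic remoting."""
    return [f"akka.tcp://{actor_system}@{host}:{port}" for host in hosts]


# Assumed values: service "ve-ctrl", namespace "default",
# actor system "valengine", port 2551.
hosts = stateful_pod_dns("ve-ctrl", "ve-ctrl", "default", replicas=2)
for seed in akka_seed_nodes(hosts, actor_system="valengine", port=2551):
    print(seed)
```

Since `ve-ctrl-0` and `ve-ctrl-1` keep their names across rescheduling, workers can be configured with this list once and still find the seeds after a pod restart.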

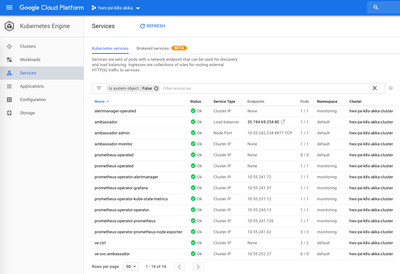

So far so good. Now how do we access the service and submit pricing and valuation jobs? Diving a little deeper into the service aspects: our application's K8s Service is of type ClusterIP, making it reachable only from within the K8s cluster on a cluster-internal private IP.

Now we can externally expose the service using GKE’s load balancer by using the LoadBalancer service type instead and manage (load balancing, routing, SSL termination etc.) external access through Ingress. Ingress is a beta K8s resource and GKE provides an ingress controller to realize the ingress semantics.

Deploying and managing a set of services benefits from a service mesh, as well articulated here, and calls for a richer set of native features and responsibilities that can broadly be organized at two levels:

The Data Plane – Service co-located and service specific proxy responsible for service discovery, health checks, routing, rate-limiting, load balancing, retries, auth, observability/tracing of request/responses etc.

The Control Plane – Responsible for centralized policy, configuration definition and co-ordination of multiple stateless isolated service specific Data Planes and their abstraction as a singular distributed system

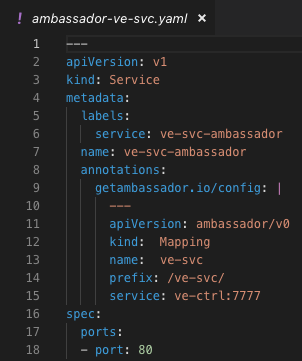

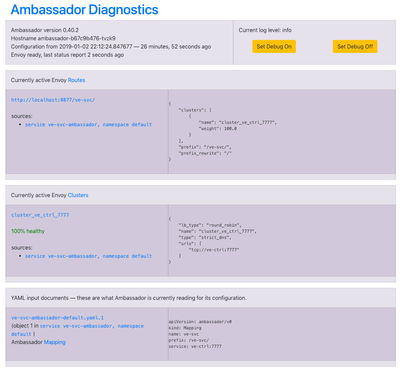

In our application, we employ a K8s native, provider (GKE, AKS, EKS etc.) agnostic API Gateway with these functions incorporated using Envoy as the L7 sidecar proxy through an open source K8s native manifestation in the form of Ambassador.

Envoy abstracts the application service from the Data Plane networking functions described above and the network infrastructure in general.

Ambassador facilitates the Control Plane by providing constructs for service definition/configuration that speak the native K8s API language and generating a semantic intermediate representation to apply the configurations onto Envoy. Ambassador also acts as the ‘ingress controller’ thereby enabling external access to the service.

Some of the other technologies one might consider for:

Data Plane - Traefik, NGINX, HAProxy, Linkerd

Control Plane - Istio, Contour (Ingress Controller)

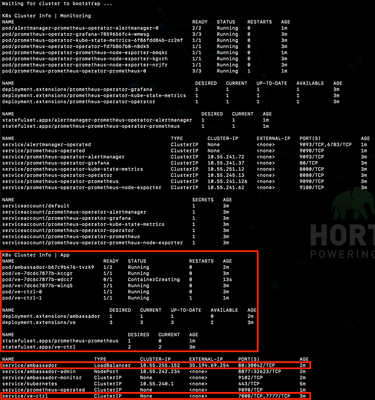

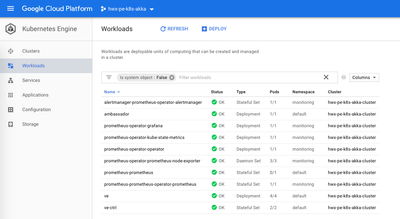

Finally, the application compute cluster and service mesh (Ambassador and Envoy) are monitored with Prometheus, deployed through the prometheus-operator. The operator pattern is an application-specific controller that extends the Kubernetes API to create, configure, and manage instances of complex stateful applications on behalf of a Kubernetes user. The prometheus-operator makes the Prometheus configuration K8s native and helps manage the Prometheus cluster.

We deploy the Prometheus-operator using Helm which in turn deploys the complete toolkit including AlertManager and Grafana with in-built dashboards for K8s.

The metrics from Ambassador and Envoy are captured by using the Prometheus StatsD Exporter, fronting it with a Service (StatsD Service) and leveraging the ServiceMonitor custom resource definition (CRD) provided by the prometheus-operator to help monitor and abstract configuration to the StatsD exporting service for Envoy.
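As a rough illustration of what the StatsD exporter does on this path, the sketch below parses a StatsD line (`<name>:<value>|<type>`) and derives a Prometheus-style metric name by replacing non-alphanumeric characters with underscores, which is roughly the exporter's default behaviour when no mapping rule matches. The sample metric name is illustrative only, and the type table is a simplification.

```python
# Simplified mapping of StatsD metric types to Prometheus metric types.
STATSD_TO_PROMETHEUS_TYPE = {"c": "counter", "g": "gauge", "ms": "summary"}


def parse_statsd_line(line):
    """Parse '<name>:<value>|<type>' into a (name, value, type) tuple."""
    name, _, rest = line.partition(":")
    value, _, metric_type = rest.partition("|")
    # Drop an optional sample-rate suffix such as '|@0.1'.
    metric_type = metric_type.split("|")[0]
    return name, float(value), metric_type


def prometheus_name(statsd_name):
    """Replace characters that are not letters, digits or '_' with '_'."""
    return "".join(c if c.isalnum() or c == "_" else "_" for c in statsd_name)


# Illustrative sample line, not captured from a real Envoy instance.
name, value, kind = parse_statsd_line("envoy.http.ingress_http.downstream_rq_total:3|c")
print(prometheus_name(name), value, STATSD_TO_PROMETHEUS_TYPE[kind])
```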

Tech Stack

- Kubernetes (GKE) 1.10

- Helm 2.11

- Prometheus 2.6

- Envoy 1.8

- Ambassador 0.40.2

- Akka 2.x

To Try this at Home

All application and infrastructure code is in the following repo:

https://github.com/amolthacker/hwx-pe-k8s-akka

Pre-requisites:

GCP access with Compute and Kubernetes Engine and following client utilities setup:

Clone the repo

Update `scripts/env.sh` with GCP region and zone details

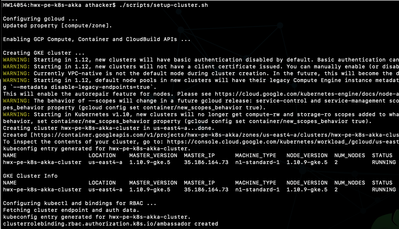

Bootstrap the infrastructure with the following script:

$ ./scripts/setup-cluster.sh

Once the script completes, you should have the above stack up and running and ready to use.

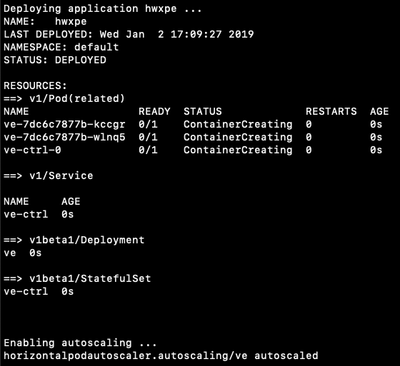

Controller seed node (ve-ctrl) pods

Worker node (ve) pods - base state

Behind the Scenes

The script automates the following tasks:

Enables GCP Compute and Container APIs

$ gcloud services enable compute.googleapis.com

$ gcloud services enable container.googleapis.com

Creates a GKE cluster with auto-scaling enabled

$ gcloud container clusters create ${CLUSTER_NAME} --preemptible --zone ${GCLOUD_ZONE} --scopes cloud-platform --enable-autoscaling --min-nodes 2 --max-nodes 6 --num-nodes 2

Configures kubectl and defines bindings for RBAC

$ gcloud container clusters get-credentials ${CLUSTER_NAME} --zone ${GCLOUD_ZONE}

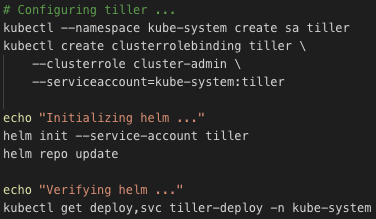

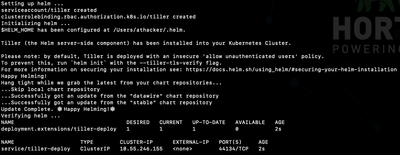

$ kubectl create clusterrolebinding ambassador --clusterrole=cluster-admin --user=${CLUSTER_ADMIN} --serviceaccount=default:ambassador

Sets up Helm

Deploys the application `hwxpe` using Helm

$ helm install --name hwxpe helm/hwxpe

$ kubectl autoscale deployment ve --cpu-percent=50 --min=2 --max=6

This uses the pre-built image amolthacker/ce-akka

Optionally one can rebuild the application with Maven

$ mvn clean package

and use the new image regenerated as part of the build through the docker-maven-plugin. To build the application without generating a Docker image:

$ mvn clean package -DskipDocker

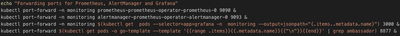

Deploys the Prometheus infrastructure

$ helm install stable/prometheus-operator --name prometheus-operator --namespace monitoring

Deploys Ambassador (Envoy)

$ kubectl apply -f k8s/ambassador/ambassador.yaml

$ kubectl apply -f k8s/ambassador/ambassador-svc.yaml

$ kubectl apply -f k8s/ambassador/ambassador-monitor.yaml

$ kubectl apply -f k8s/prometheus/prometheus.yaml

Adds the application service route mapping to Ambassador

$ kubectl apply -f k8s/ambassador/ambassador-ve-svc.yaml

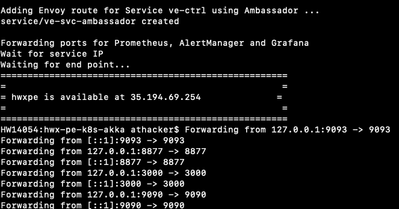

And finally displays the Ambassador facilitated service's external IP and forwards ports for Prometheus UI, Grafana and Ambassador to make them accessible from the local browser

We are now ready to submit pricing jobs to the compute engine using its REST API

Job Submission

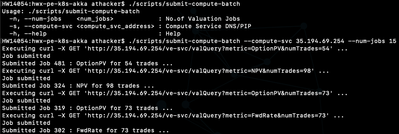

To submit valuation batches, simply grab the external IP displayed and call the script specifying the batch size as follows:

As you can see the script uses external IP 35.194.69.254 associated with the API Gateway (Ambassador/Envoy) fronting the application service and route prefix 've-svc' as provided in the Ambassador service spec above.
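Conceptually, the gateway matches the request path against the configured route prefix and forwards the request to the backing service with the prefix rewritten. The toy router below sketches that idea only; it is not Ambassador's implementation. The backend service name `ve-ctrl-svc` and the `/jobs` path are hypothetical, and the rewrite to `/` mirrors what I understand to be Ambassador's default.

```python
def route(path, mappings):
    """Return (service, rewritten_path) for the longest matching prefix.

    Returns None when no configured prefix matches the path.
    """
    matches = [(prefix, service) for prefix, service in mappings.items()
               if path.startswith(prefix)]
    if not matches:
        return None
    prefix, service = max(matches, key=lambda match: len(match[0]))
    # Replace the matched prefix with "/" before forwarding upstream.
    return service, "/" + path[len(prefix):]


# Hypothetical mapping: route prefix from the article, made-up service name.
mappings = {"/ve-svc/": "ve-ctrl-svc"}
print(route("/ve-svc/jobs", mappings))
print(route("/unknown", mappings))
```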

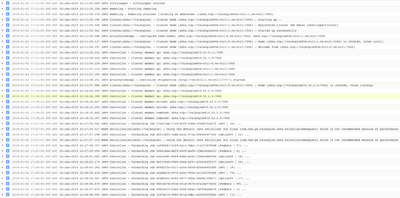

The service routes the request to the backing controller seed node pods (ve-ctrl) which in turn divides and distributes the valuation batch across the set of worker pods (ve) as follows:

Controller Seed Node [ve-ctrl] logs

Worker Node [ve] logs

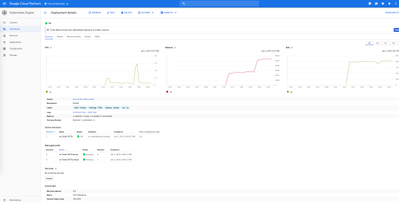

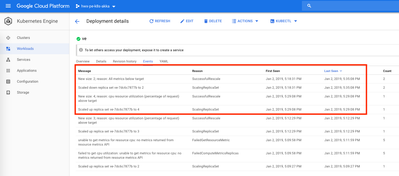
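The divide-and-distribute step can be pictured with a small sketch. The actual routing strategy used by the controller is not shown in this article, so the even split below is an assumption, and the worker names are placeholders.

```python
def split_batch(batch_size, workers):
    """Divide batch_size valuations across workers as evenly as possible."""
    if not workers:
        raise ValueError("at least one worker is required")
    base, extra = divmod(batch_size, len(workers))
    # The first `extra` workers take one additional valuation each.
    return {worker: base + (1 if index < extra else 0)
            for index, worker in enumerate(workers)}


shares = split_batch(10, ["ve-1", "ve-2", "ve-3"])  # placeholder worker names
for worker, count in shares.items():
    print(worker, "prices", count, "valuation(s)")
```

As workers join or leave the cluster, calling the same function with the updated worker list redistributes the load without any other change.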

As the compute load increases, one would see the CPU usage spike beyond the set threshold and, as a result, the compute cluster autoscale out (e.g. from 2 worker nodes to 4 worker nodes in this case) and then scale back in (e.g. from 4 back to the desired minimum of 2 in this case).
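The scaling decision follows the Horizontal Pod Autoscaler's documented rule, desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric), clamped to the configured bounds. The sketch below applies it to the settings used earlier (`--cpu-percent=50 --min=2 --max=6`). It is a simplification: the real controller also accounts for pod readiness, missing metrics and scale-down delays, and the CPU readings used here are made-up inputs.

```python
import math


def desired_replicas(current_replicas, current_cpu_pct, target_cpu_pct,
                     min_replicas, max_replicas, tolerance=0.1):
    """Simplified Horizontal Pod Autoscaler replica calculation."""
    ratio = current_cpu_pct / target_cpu_pct
    # Within the tolerance band the autoscaler leaves the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# Made-up CPU readings, with target 50%, min 2 and max 6 as in the article.
print(desired_replicas(2, 100, 50, 2, 6))  # load spike: scale out
print(desired_replicas(4, 10, 50, 2, 6))   # load gone: scale back in
```

With two workers averaging 100% CPU against a 50% target the rule asks for four replicas, and once usage drops well below the target it falls back to the configured minimum of two.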

Worker node [ve] pods - scaled out

Autoscaling event log

Concluding

Thank you for your patience in bearing with this lengthy article. I hope the prototype walkthrough provided a glimpse of the exciting aspects of building cloud native services and applications!

Credits and References

https://www.getambassador.io/user-guide/getting-started/

https://www.getambassador.io/reference/statistics#prometheus

https://blog.envoyproxy.io/service-mesh-data-plane-vs-control-plane-2774e720f7fc