Community Articles

From an OS ISO To Local HWX Clusters with a Single...

Created on 05-17-2018 11:32 PM - edited 08-17-2019 07:28 AM

Disclaimer!

Yes, despite some great articles around this topic by the finest folks here at Hortonworks, this is YALC [Yet Another article on spinning up Local HDP Clusters].

But before you switch off: this article will show how to spin up secure, highly available (HA) HDP/HDF clusters, even from customizable OS images, on your local workstation by executing just a single command!

…

Glad this caught your attention and you’re still around!

Quick Peek!

For starters, just to get a taste: make sure you are on a workstation with at least 4 cores, 8 GB of RAM and open internet connectivity, and that the environment is set up with the prerequisites described below. Then:

1. Execute the following in a terminal:

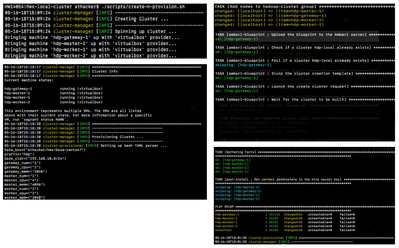

git clone https://github.com/amolthacker/hwx-local-cluster && cd hwx-local-cluster && ./scripts/create-n-provision.sh

2. Grab a coffee, as this could take a while (unfortunately, the script does not make coffee for you … yet).

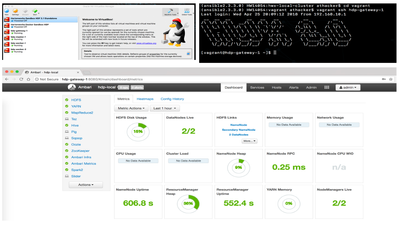

3. Once the script has completed, you should have a basic HDP 2.6.4 cluster on CentOS 7.4 running on 4 Vagrant-managed VirtualBox VMs (1 gateway, 1 master and 2 workers), equipped with core Hadoop, Hive and Spark, all managed by Ambari!

A Look Behind the Scenes

So, how did we do this?

First, you’d need to set up your machine environment with the following:

Environment Tested:

- macOS High Sierra 10.13.4
- Python 2.7.14 | pip 9.0.3 | virtualenv 15.2.0
- Git 2.16.3
- VirtualBox 5.2.8
- Vagrant 2.0.3
- Packer v1.2.2
- Ansible 2.3.0.0
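Before kicking off the single command, it can help to confirm the tools above are actually on your PATH and the workstation meets the 4-core minimum. The following is a minimal Python 3 sketch, assuming the executable names listed in it (for example, that VirtualBox is reachable as `VBoxManage`). It only checks presence, not the exact versions tested, and Packer is only needed for the Advanced mode.

```python
import os
import shutil

# Executable names are assumptions based on the tested environment above;
# packer is only required when baking your own base box (Advanced mode).
REQUIRED_TOOLS = ["git", "pip", "virtualenv", "VBoxManage", "vagrant", "packer", "ansible"]

# Minimum core count suggested for the basic 4-VM cluster.
MIN_CORES = 4


def missing_tools(tools=REQUIRED_TOOLS, which=shutil.which):
    """Return the tools that cannot be found on PATH."""
    return [tool for tool in tools if which(tool) is None]


def enough_cores(min_cores=MIN_CORES, cpu_count=os.cpu_count):
    """True if at least min_cores logical CPUs are reported.

    os.cpu_count() counts logical CPUs, so hyperthreaded machines
    may report more than their physical core count.
    """
    count = cpu_count()
    return count is not None and count >= min_cores


if __name__ == "__main__":
    missing = missing_tools()
    if missing:
        print("Missing from PATH: " + ", ".join(missing))
    else:
        print("All tools found on PATH")
    print("CPU check passed" if enough_cores() else "Fewer than %d CPUs detected" % MIN_CORES)
```

The lookup functions are injectable parameters so the checks can be exercised without the real tools installed.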

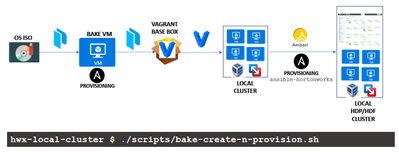

At a very high level, the tool is essentially a bunch of wrapper scripts that provide the plumbing to automate spinning up a configurable local cluster of virtual machines on either the VirtualBox or VMware Fusion type 2 hypervisor, using a Vagrant base box I’ve created. These VMs are then made available as a static inventory to the powerful ansible-hortonworks (AH) tool, which does the heavy lifting of provisioning the cluster via Ambari.
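The flow just described boils down to a few stages: optionally bake a box, bring up the VMs, then hand them to Ansible. The Python 3 sketch below only builds and prints the commands (a dry run). The Packer template name, inventory path and playbook name are hypothetical placeholders, not the actual files in the hwx-local-cluster or ansible-hortonworks repos.

```python
import shlex

# Hypothetical dry-run sketch of the stages the wrapper scripts automate.
# "base-box.json", "inventory/static" and "install_cluster.yml" are
# placeholder names, not files taken from the actual repos.
def build_plan(bake=False, template="base-box.json",
               inventory="inventory/static", playbook="install_cluster.yml"):
    """Return the command lines, in order, for one end-to-end run."""
    steps = []
    if bake:
        # Advanced mode only: bake a custom Vagrant base box with Packer.
        steps.append(["packer", "build", template])
    # Create and boot the VMs described by the Vagrantfile in the current directory.
    steps.append(["vagrant", "up"])
    # Provision the cluster via Ambari, treating the VMs as a static inventory.
    steps.append(["ansible-playbook", "-i", inventory, playbook])
    return steps


if __name__ == "__main__":
    for step in build_plan():
        # shlex.quote keeps the printed commands safe to paste into a shell.
        print(" ".join(shlex.quote(arg) for arg in step))
```

Running it prints `vagrant up` followed by `ansible-playbook -i inventory/static install_cluster.yml`. Executing each step with `subprocess.run(step, check=True)` would turn the dry run into a real run that stops at the first failing stage.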

Shout out to the folks behind the AH project. It automates provisioning secure HDP/HDF HA clusters using Ansible on AWS, Azure, GCP, OpenStack or even on an existing inventory of physical/virtual machines, with support for multiple operating systems. Before proceeding, I would encourage you to acquire a working knowledge of this project.

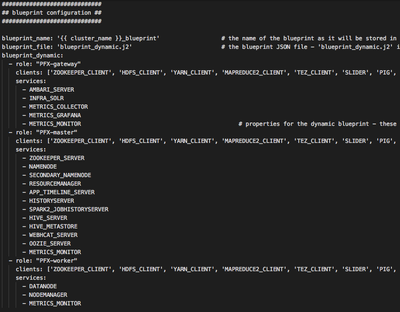

The create-n-provision script runs the tool in its Basic mode, starting with a base box running CentOS 7.4 that has been baked and hardened with all the prerequisites needed for any node running HDP/HDF services. The script then uses Vagrant to spin up a cluster driven by a configuration file that defines parameters such as the number of gateway, master and worker VMs, CPU/memory configuration, network CIDR and VM name prefix. The machines created are named using the following convention:

`<prefix>-<role>-<num>`, where:

- prefix: VM name prefix specified by the user
- role: gateway, master or worker
- num: 1 to N, N being the number of machines in a given role

e.g. hdp-master-1
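The naming convention, together with the network CIDR from the configuration file, is enough to derive each VM's hostname and a static private IP. The following is a minimal Python 3.7+ sketch. The `ClusterConfig` fields, the default CIDR, and skipping the first usable address (assumed to belong to the workstation's host-only adapter) are illustrative assumptions, not the repo's actual configuration format.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class ClusterConfig:
    # Hypothetical field names mirroring the parameters described above.
    prefix: str = "hdp"
    gateways: int = 1
    masters: int = 1
    workers: int = 2
    cidr: str = "192.168.66.0/24"  # hypothetical private network


def plan_vms(cfg):
    """Return (name, ip) pairs following the <prefix>-<role>-<num> convention."""
    names = [
        "{}-{}-{}".format(cfg.prefix, role, num)
        for role, count in (("gateway", cfg.gateways),
                            ("master", cfg.masters),
                            ("worker", cfg.workers))
        for num in range(1, count + 1)
    ]
    # hosts() yields usable addresses (no network/broadcast address).
    hosts = ipaddress.ip_network(cfg.cidr).hosts()
    # Assumption: the first usable address is taken by the host-only adapter.
    next(hosts, None)
    pairs = []
    for name in names:
        ip = next(hosts, None)
        if ip is None:
            raise ValueError("CIDR %s is too small for %d VMs" % (cfg.cidr, len(names)))
        pairs.append((name, str(ip)))
    return pairs
```

With the defaults, `plan_vms(ClusterConfig())` returns `[('hdp-gateway-1', '192.168.66.2'), ('hdp-master-1', '192.168.66.3'), ('hdp-worker-1', '192.168.66.4'), ('hdp-worker-2', '192.168.66.5')]`, matching the 1 gateway, 1 master and 2 worker layout of the basic cluster.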

The cluster of VMs thus created is then provisioned with my fork of the ansible-hortonworks (AH) project, which has minor customizations to support a static inventory of Vagrant boxes. Under the hood, AH validates that the boxes meet the prerequisites and installs any missing artifacts, bootstraps Ambari, and then installs and starts the rest of the services across the cluster. The service assignment plan, including placement of the Ambari server and agents, is driven by the dynamic blueprint template under ansible-hortonworks-staging.
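A static Ansible inventory can be as simple as an INI file listing each VM and how to reach it. The Python 3 sketch below renders one group per role from `(name, ip)` pairs. The group names and the `vagrant` SSH user are assumptions; the group names the AH fork actually expects (which may need to line up with the blueprint's host groups) could differ. `ansible_host` and `ansible_user` are standard Ansible inventory variables.

```python
from collections import OrderedDict


def render_inventory(vms, ssh_user="vagrant"):
    """Render an INI-style Ansible inventory with one group per role.

    vms is a list of (name, ip) pairs named <prefix>-<role>-<num>.
    Group names (gateway/master/worker) are illustrative assumptions.
    """
    groups = OrderedDict()
    for name, ip in vms:
        # rsplit from the right so prefixes containing dashes still work.
        role = name.rsplit("-", 2)[-2]
        groups.setdefault(role, []).append(
            "{} ansible_host={} ansible_user={}".format(name, ip, ssh_user))
    lines = []
    for role, hosts in groups.items():
        lines.append("[{}]".format(role))
        lines.extend(hosts)
        lines.append("")  # blank line between groups
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_inventory([("hdp-master-1", "192.168.66.3"),
                            ("hdp-worker-1", "192.168.66.4")]))
```

Writing the result to a file and passing it with `ansible-playbook -i <file>` is how a static inventory is normally consumed; Vagrant's own generated inventory is an alternative the fork may or may not use.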

For the more adventurous!

One can also run the tool in its Advanced mode through the bake-create-n-provision script where, instead of using my Vagrant base box, one can bake one's own base box starting from a minimal OS ISO (only CentOS is supported so far) and then continue with cluster creation and provisioning through the steps outlined above. The base box is baked using Packer with the Ansible provisioner. One can customize and extend the hwx-base Ansible role to harden the image to one’s needs: adding packages, tools and libraries, adding certs (especially if you are looking to run this on a workstation inside an enterprise network), hooking up with AD, etc. The code to bake and provision the base box reuses a lot of bits from the packer-rhel7 project.
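To make the baking step concrete, here is a minimal Python 3 sketch that builds a Packer JSON template (the format used by Packer 1.2.x) combining the `virtualbox-iso` builder, the `ansible` provisioner and the `vagrant` post-processor. This is not the repo's actual template: the ISO URL/checksum, the `http/ks.cfg` kickstart file, the `hwx-base.yml` playbook and the credentials are hypothetical placeholders. `iso_checksum_type` matches the 1.2.x era; later Packer releases replaced it with a `sha256:`-prefixed `iso_checksum`.

```python
import json


def packer_template(iso_url, iso_checksum, playbook="hwx-base.yml", box_name="hwx-base"):
    """Return a minimal Packer JSON template as a dict (placeholders noted inline)."""
    return {
        "builders": [{
            "type": "virtualbox-iso",
            "guest_os_type": "RedHat_64",
            "iso_url": iso_url,              # placeholder: minimal CentOS 7 ISO
            "iso_checksum": iso_checksum,    # placeholder checksum
            "iso_checksum_type": "sha256",
            # Packer serves this directory over HTTP during the build;
            # http/ks.cfg is a hypothetical kickstart file.
            "http_directory": "http",
            "boot_command": [
                "<tab> text ks=http://{{ .HTTPIP }}:{{ .HTTPPort }}/ks.cfg<enter>"
            ],
            "ssh_username": "vagrant",       # assumed credentials set by the kickstart
            "ssh_password": "vagrant",
            "ssh_timeout": "30m",
            "shutdown_command": "echo 'vagrant' | sudo -S /sbin/halt -h -p",
        }],
        # Harden the image with an Ansible playbook (e.g. one applying hwx-base).
        "provisioners": [{"type": "ansible", "playbook_file": playbook}],
        # Package the result as a Vagrant box, e.g. hwx-base-virtualbox.box.
        "post-processors": [{"type": "vagrant", "output": box_name + "-{{.Provider}}.box"}],
    }


if __name__ == "__main__":
    print(json.dumps(packer_template("file:///tmp/centos7-minimal.iso", "<sha256>"), indent=2))
```

Saving the printed JSON as a file and running `packer build` on it would produce a box that `vagrant box add` can register; a VMware Fusion variant would swap in the `vmware-iso` builder.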

Take a look at the repo for further details.

To Wrap Up …

This might seem like an overly complicated way of getting multi-node HDP/HDF clusters running on your local machine. However, the tool provides the plumbing and automation needed to create a lot more nuanced and customizable clusters with just a couple of commands. It allows you to create clusters with:

- Services running in HA mode

- Secure configurations for both HDP and HDF

- Customizations that can be purely declarative and configuration driven

- Custom-baked OS images, either enterprise-hardened or pre-baked with application- or environment-specific prerequisites, for your choice of local hypervisor: VirtualBox or VMware Fusion

- The image baking process can be extended to operating systems other than CentOS 7 and to the public/private cloud platforms (AWS, Azure, GCP and OpenStack) supported by the AH project. This gives you the ability to locally provision miniaturized replicas of your prod/non-prod clusters in your datacenter or private/public clouds, using a consistent provisioning mechanism and code base.
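The "purely declarative and configuration driven" point boils down to layering a small set of user overrides on top of sensible defaults. The following is a minimal Python 3 sketch of that idea using a recursive merge. The keys (`stack`, `ha`, `security`) are hypothetical and are not ansible-hortonworks variable names.

```python
import copy

# Hypothetical defaults for illustration only; not AH variable names.
DEFAULTS = {
    "stack": "HDP",
    "ha": {"namenode": False, "resourcemanager": False},
    "security": "none",
}


def deep_merge(defaults, overrides):
    """Return a new dict with overrides layered onto defaults.

    Nested dicts are merged key by key; any other value replaces the default.
    Neither input is modified.
    """
    merged = copy.deepcopy(defaults)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged
```

For example, `deep_merge(DEFAULTS, {"ha": {"namenode": True}, "security": "kerberos"})` yields `{"stack": "HDP", "ha": {"namenode": True, "resourcemanager": False}, "security": "kerberos"}`: only the settings you state change, which keeps each cluster variant a short, reviewable override file.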