HDF 3.1: Executing Apache Spark via ExecuteSparkIn...

Created on 02-09-2018 12:05 AM - edited 08-17-2019 08:55 AM

I want to easily integrate Apache Spark jobs with my Apache NiFi flows. Fortunately, with the release of HDF 3.1, I can do that via Apache NiFi's ExecuteSparkInteractive processor.

As a first step, I set up a CentOS 7 cluster with HDF 3.1 by following the well-written guide here.

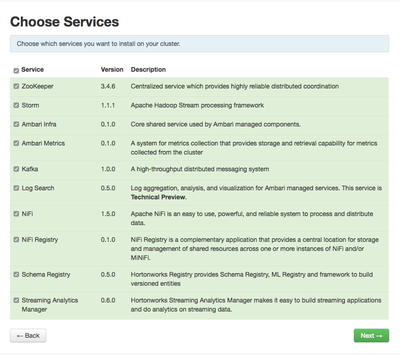

Through the magic of time-lapse photography, we instantly have a new cluster of goodness:

It is important to note the new NiFi Registry for version control and more. We also get the new Kafka 1.0, an updated SAM, and the ever-important updated Schema Registry.

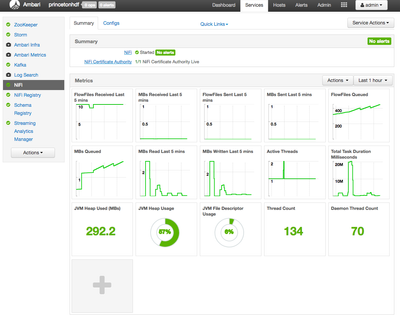

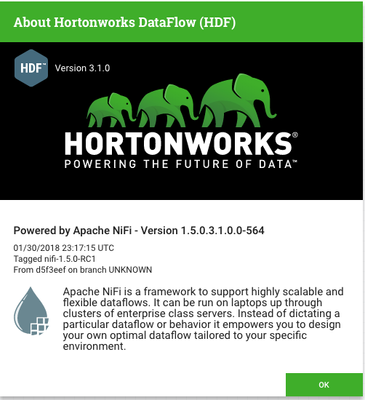

The star of the show today is Apache NiFi 1.5.

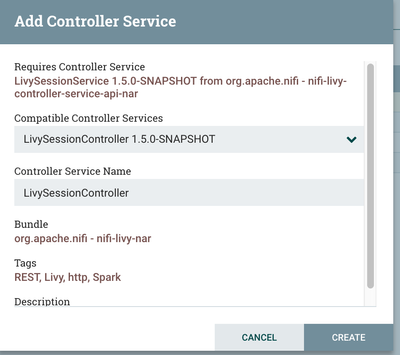

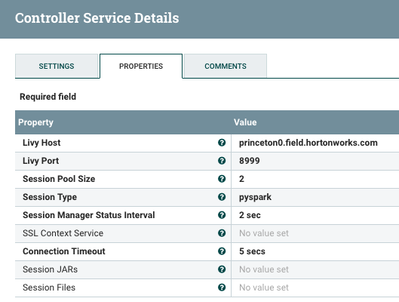

My first step is to add a Controller Service (LivySessionController).

Then we point it at the Apache Livy server, whose host you can find in your Ambari UI. By default it listens on port 8999. For my session I am using Python, so I picked pyspark as the session kind. You can also pick pyspark3 for Python 3 code, spark for Scala, and sparkr for R.

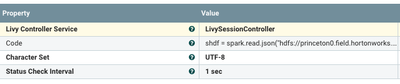
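To make the session kind concrete, here is a minimal sketch of the kind of request that opens a Livy session over Livy's REST API (`POST /sessions` with a `kind` field). The hostname is hypothetical and the helper names are mine. This illustrates the underlying Livy call, not the controller service's actual implementation.

```python
import json
import urllib.request

# Assumption: hypothetical host; 8999 is the default port mentioned above.
LIVY_URL = "http://livy-host.example.com:8999"

# Session kinds named in this article.
VALID_KINDS = {"spark", "pyspark", "pyspark3", "sparkr"}


def build_session_request(livy_url, kind):
    """Build (but do not send) the POST /sessions request for a new Livy session."""
    if kind not in VALID_KINDS:
        raise ValueError(f"unsupported session kind: {kind}")
    body = json.dumps({"kind": kind}).encode("utf-8")
    return urllib.request.Request(
        url=f"{livy_url}/sessions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def create_session(livy_url, kind):
    """Send the request and return Livy's JSON description of the session."""
    with urllib.request.urlopen(build_session_request(livy_url, kind)) as resp:
        return json.load(resp)
```

Calling `create_session(LIVY_URL, "pyspark")` against a reachable Livy server should return a JSON document that includes the new session's `id` and `state`. Clusters with extra security settings may need additional headers or authentication that this sketch leaves out.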

To execute a Python job, you can either pass the code in from a previous processor to the ExecuteSparkInteractive processor or put the code inline in the processor. I put the code inline.

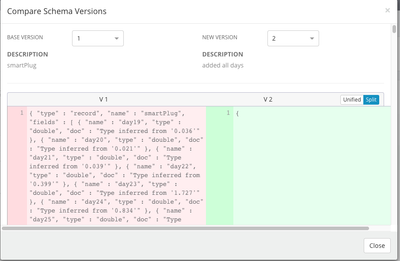
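As an illustration of what "inline code" can look like, the sketch below wraps a small PySpark snippet in the request Livy's REST API uses to run code in an existing session (`POST /sessions/{id}/statements`). I am assuming the processor submits code in roughly this way. The snippet itself, the host, and the session id are hypothetical examples, not the job from the screenshot.

```python
import json
import urllib.request

# Hypothetical PySpark job; in a Livy pyspark session `sc` is already defined.
PYSPARK_CODE = """
words = sc.parallelize(["nifi", "livy", "spark", "nifi"])
counts = words.map(lambda w: (w, 1)).reduceByKey(lambda a, b: a + b)
print(counts.collect())
"""


def build_statement_request(livy_url, session_id, code):
    """Build (but do not send) the request that runs `code` in a Livy session."""
    body = json.dumps({"code": code}).encode("utf-8")
    return urllib.request.Request(
        url=f"{livy_url}/sessions/{session_id}/statements",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def run_statement(livy_url, session_id, code):
    """Submit the statement; the result is then polled from the statement URL."""
    with urllib.request.urlopen(build_statement_request(livy_url, session_id, code)) as resp:
        return json.load(resp)
```

The text of `PYSPARK_CODE` is the part you would paste into the processor's code property, or send in as flow file content from an upstream processor.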

Two new features of Schema Registry deserve a mention. The first is version comparison:

Click the COMPARE VERSIONS link and you get a nice comparison UI.

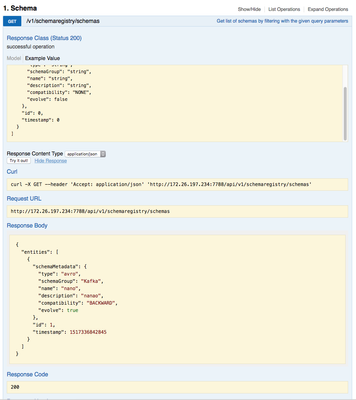

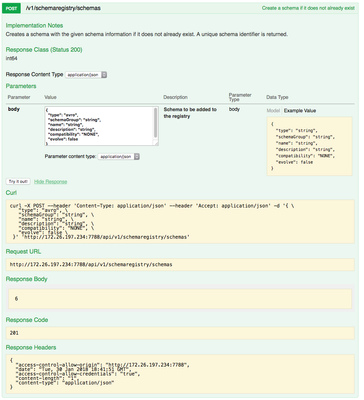

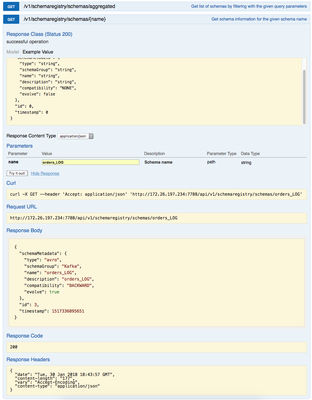

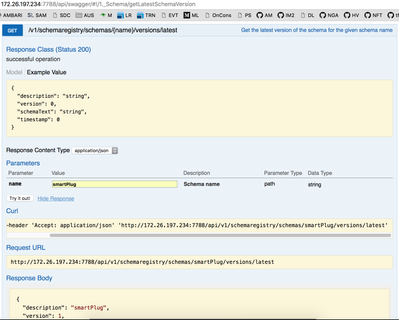

The other is the amazing new Swagger documentation for interactive documentation and testing of the Schema Registry APIs.

Not only do you get all the parameters for input and output, the full URL, and a curl example, you also get to run the code live against your server.

I will be adding an article on how to use Apache NiFi to grab schemas from data using InferAvroSchema and publish these new schemas to the Schema Registry via its REST API automagically.
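For a rough idea of what such a REST call might look like, here is a sketch that builds a request adding a new version to an existing schema. The host, port, schema name, endpoint path, and payload field names are all my assumptions, so confirm them against the Swagger page on your own Schema Registry before relying on them.

```python
import json
import urllib.request
from urllib.parse import quote

# Assumption: hypothetical host and port.
REGISTRY_URL = "http://registry-host.example.com:7788"


def build_add_version_request(registry_url, schema_name, avro_schema, description):
    """Build (but do not send) a request that adds a schema version.

    Assumption: the path and the `schemaText`/`description` fields follow the
    registry's Swagger documentation; verify them on your server.
    """
    payload = {
        "schemaText": json.dumps(avro_schema),  # Avro schema sent as a JSON string
        "description": description,
    }
    name = quote(schema_name, safe="")
    return urllib.request.Request(
        url=f"{registry_url}/api/v1/schemaregistry/schemas/{name}/versions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical Avro schema, such as one InferAvroSchema might produce.
SENSOR_SCHEMA = {
    "type": "record",
    "name": "Sensor",
    "fields": [{"name": "temp", "type": "double"}],
}
```

In a NiFi flow, the same request could be issued by an HTTP processor, with the inferred schema supplied from a flow file attribute.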

Part two of this article will focus on the details of using Apache Livy + Apache NiFi + Apache Spark with the new processor to call jobs.


Created on 03-26-2018 01:55 PM


Is a Kerberized server supported by LivySessionController?

I tried the same approach on a Kerberized Hadoop cluster but was not able to get the expected results.

Created on 04-03-2018 05:36 PM
