- HDF/NiFi to convert row-formatted text files to co...


Created on 12-07-2016 09:19 AM - edited on 02-27-2020 05:36 AM by VidyaSargur

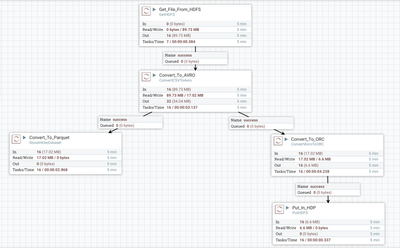

I recently worked with a customer to demo HDF/NiFi DataFlows for their use cases. One use case involved converting delimited row-formatted text files into the Parquet and ORC columnar formats. This can be done quickly with HDF/NiFi.

Here is an easy-to-follow DataFlow that converts row-formatted text files to Parquet and ORC. It may look straightforward; however, it requires some basic knowledge of the Avro file format and of the Kite API. This article explains the DataFlow in detail.

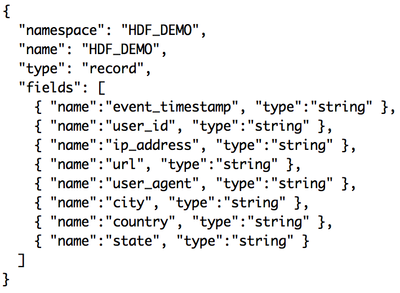

STEP 1: Create an Avro schema for the source data

My source data is tab delimited and consists of 8 fields.

Notice in the DataFlow that the data is first converted to Avro before it is converted to Parquet and ORC. This is done so that we have schema information for the data. Before converting the data to Avro, we need to create an Avro schema definition file for the source data. My schema definition file describes the 8 fields, and I stored it in a file named sample.avsc.

More information on Avro schemas can be found here: https://avro.apache.org/docs/1.7.7/spec.html
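As an illustration of what such a file can look like, here is a minimal sketch that writes an 8-field Avro record schema to sample.avsc. The record name, namespace, field names, and field types are all placeholders assumed for this example; they are not the schema used in the article, so replace them with the real columns of your source data.

```shell
# Hypothetical schema: every name and type below is a placeholder,
# not the actual sample.avsc from this article.
cat > sample.avsc <<'EOF'
{
  "type": "record",
  "name": "SampleRecord",
  "namespace": "com.example",
  "fields": [
    {"name": "field_1", "type": "long"},
    {"name": "field_2", "type": "string"},
    {"name": "field_3", "type": "string"},
    {"name": "field_4", "type": "int"},
    {"name": "field_5", "type": "double"},
    {"name": "field_6", "type": "string"},
    {"name": "field_7", "type": ["null", "string"], "default": null},
    {"name": "field_8", "type": ["null", "string"], "default": null}
  ]
}
EOF

# Quick sanity check: count the field entries written to the file.
grep -c '"name": "field_' sample.avsc
```

The last two fields use a union with "null" listed first, which is how Avro expresses an optional value whose default is null.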

STEP 2: Use Kite API to create a Parquet dataset

The DataFlow uses the 'StoreInKiteDataset' processor. Before we can use this processor to convert the Avro data to Parquet, we need a directory in HDFS that has already been created to store the data as Parquet. This is done by calling the Kite API.

Kite is an API for Hadoop that lets you easily define how your data is stored:

- Works with file formats including CSV, JSON, Avro, and Parquet

- Stores data in Hive, HDFS, the local file system, HBase, or Amazon S3

- Compresses data: Snappy (default), Deflate, Bzip2, and Lzo

Kite will handle how the data is stored. For example, if I wanted to store incoming CSV data into a Parquet-formatted Hive table, I could use the Kite API to create a schema for my CSV data and then call the Kite API to create the Hive table for me. Kite also works with partitioned data and will automatically partition records when writing.

In this example I am writing the data into HDFS.

Call the Kite API to create a directory in HDFS to store the Parquet data. The file sample.avsc contains my schema definition:

./kite-dataset create dataset:hdfs://ip-172-31-2-101.xxxx.compute.internal:8020/tmp/sample_data/parquet --schema sample.avsc --format parquet

If you want to load directly into a Hive table, then you would call the Kite API using the following command:

./kite-dataset create sample_hive_table --schema sample.avsc --format parquet

To learn more about the Kite API and to download it, follow this link: http://kitesdk.org/docs/current/
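Because the same dataset URI is needed both when creating the dataset and later in the NiFi processor, it can help to assemble it from variables. The sketch below builds the HDFS dataset URI and prints the create command for review instead of running it. The host name is a placeholder assumed for this example; the port, path, and flags are the ones used in the command above.

```shell
# Placeholder values (assumptions); replace with your own NameNode and paths.
NAMENODE_HOST="namenode.example.com"
NAMENODE_PORT="8020"
DATASET_PATH="/tmp/sample_data/parquet"
SCHEMA_FILE="sample.avsc"

# Same URI shape as in the article: dataset:hdfs://<host>:<port><path>
DATASET_URI="dataset:hdfs://${NAMENODE_HOST}:${NAMENODE_PORT}${DATASET_PATH}"

# Dry run: print the command so it can be checked before it is executed.
echo ./kite-dataset create "${DATASET_URI}" --schema "${SCHEMA_FILE}" --format parquet
```

Once the printed command looks right, remove the `echo` to run it from the directory that contains the kite-dataset script.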

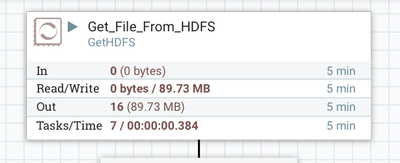

STEP 3: Get source data

The source files could exist in one or more places, including a remote server, the local file system, or HDFS. This example uses files stored in HDFS.

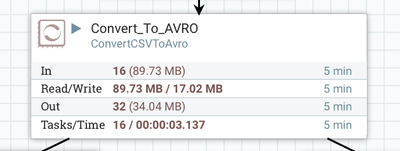

STEP 4: Convert data to Avro format

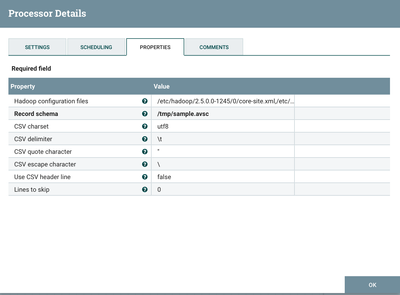

Configure the 'ConvertCSVToAvro' processor to specify the location for the schema definition file and specify properties of the source delimited file so NiFi knows how to read the source data.
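Since the rows have to line up with the 8 fields in the schema, it can be worth checking the delimited data before sending it through the processor. The following is a minimal sketch of such a check, not part of the DataFlow itself. The file name sample_data.tsv and its contents are made up for this example.

```shell
# Create a tiny hypothetical tab-delimited sample with 8 fields per row.
printf 'a1\tb1\tc1\td1\te1\tf1\tg1\th1\n'  > sample_data.tsv
printf 'a2\tb2\tc2\td2\te2\tf2\tg2\th2\n' >> sample_data.tsv

EXPECTED_FIELDS=8

# Count the rows whose number of tab-separated fields differs from the expected count.
BAD_ROWS=$(awk -F'\t' -v n="$EXPECTED_FIELDS" 'NF != n { bad++ } END { print bad + 0 }' sample_data.tsv)

echo "rows with unexpected field count: ${BAD_ROWS}"
```

A non-zero count points to rows that would not match the schema, for example because of a stray or missing delimiter.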

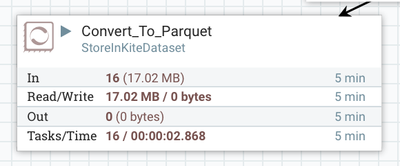

STEP 5: Convert data to columnar Parquet format

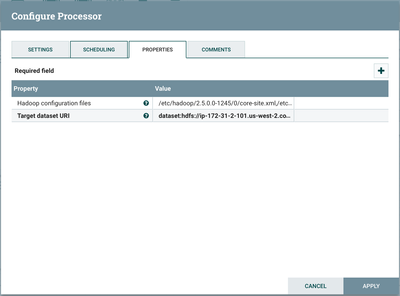

Configure the 'StoreInKiteDataset' processor to set the URI for your Kite dataset.

My target dataset URI is: dataset:hdfs://ip-172-31-2-101.xxxx.compute.internal:8020/tmp/sample_data/parquet

This is the directory I created in STEP 2. I'm writing to an HDFS directory. I could also write directly to a Hive table.

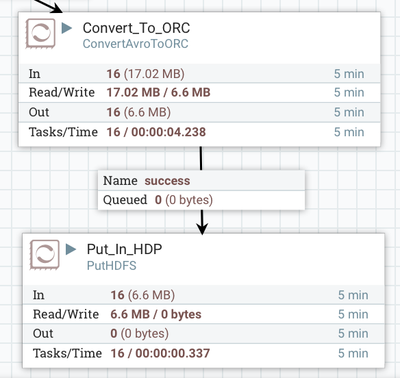

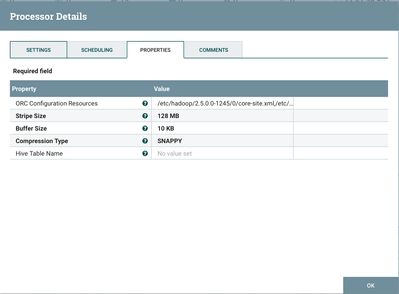

STEP 6: Convert data to columnar ORC format and store in HDFS

These two steps are straightforward. For the 'ConvertAvroToORC' processor, you can specify the ORC stripe size and optionally compress the data.

'ConvertAvroToORC' processor settings:

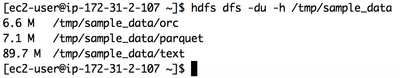

Data before and after conversion

The text data is considerably larger than the Parquet and ORC files.

Created on 04-15-2017 10:31 AM


Hello @Binu Mathew

Thanks for the tutorial. I need your help with the Kite API. I get "Descriptor location not found" when I try to use the dataset below:

dataset:hdfs://ip-172-31-2-101.us-west-2.compute.internal:8020/tmp/sample_data/parquet

I also get the exception "java.lang.NoClassDefFoundError: org/apache/hadoop/hive/conf/HiveConf" when I execute a similar command, shown below, on the Hadoop server.

./kite-dataset create dataset:hdfs://ip-172-31-2-101.us-west-2.compute.internal:8020/tmp/sample_data/parquet --schema sample.avsc --format parquet

Can you please suggest which configuration I am missing here?

Thanks

Created on 06-28-2017 09:19 AM


Hi, thanks for the tutorial.

Do you know if it is possible to use 'StoreInKiteDataset' with Kerberos to write to HDFS?