Hadoop Using SAP

Created on 10-08-2015 07:06 PM - edited 09-16-2022 01:32 AM

1 Overview

In this article I cover the three main integration points between SAP and Hadoop.

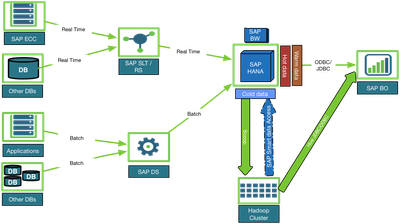

First, I cover the SAP data integration products and how they can be used to ingest data into Hadoop. Second, I discuss SAP HANA and how it integrates with Hadoop, with an overview of the methods for pushing and pulling data from SAP HANA as well as data federation and data offload architectures. Third, I touch on how the SAP BI tools integrate with Hadoop and briefly discuss SAP HANA Vora.

2 Hadoop using SAP Data Integration components

SAP has three primary data integration products. Here is a brief overview of them, grouped by integration pattern.

Batch processing

- SAP Data Services, also known as BODS (Business Objects Data Services), is SAP’s batch ETL tool. SAP acquired this product as part of its Business Objects acquisition.

Real time ingestion

- SLT (SAP Landscape Transformation) Replication Server can be used for batch or real-time data integration and uses database triggers for replication.

- SAP Replication Server has much broader replication capabilities and is a true CDC (Change Data Capture) product.

The rest of this section gives a bit more detail on how these products talk to Hadoop.

2.1 SAP Data services

Data Services can be used to create Hive, Pig, or MR (MapReduce) jobs. You can also preview files that are sitting in HDFS.

2.1.1 Hive

Data Services (DS) uses the Hive adapter, which SAP provides for connecting to Hive tables. The Hive adapter can act as a datastore or as a source/target for DS jobs. A datastore based on the Hive adapter supports several operations:

- You can use the “Push Down” functionality, where the entire DS job is pushed to the Hadoop cluster. DS converts the job into Hive SQL commands and runs them against the Hadoop cluster as MapReduce jobs.

- You can use the datastore in a SQL transform and with the sql() function within DS.
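
To make the push-down idea concrete, here is a minimal Python sketch of how a simple filter-and-aggregate job could be expressed as a single HiveQL statement, so that the work runs inside the cluster rather than on the job server. This is not how Data Services generates SQL internally. The `JobSpec` class, the `to_hiveql` function, and the table and column names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class JobSpec:
    """Hypothetical description of a simple filter-and-aggregate job."""
    source_table: str
    group_by: str
    measure: str
    filter_expr: str
    target_table: str


def to_hiveql(job: JobSpec) -> str:
    """Render the whole job as one HiveQL statement so Hive does the work."""
    return (
        f"INSERT OVERWRITE TABLE {job.target_table} "
        f"SELECT {job.group_by}, SUM({job.measure}) AS total_{job.measure} "
        f"FROM {job.source_table} "
        f"WHERE {job.filter_expr} "
        f"GROUP BY {job.group_by}"
    )


# Hypothetical example job: total order amount per region for one year.
job = JobSpec("sales_orders", "region", "amount", "order_year = 2015", "sales_by_region")
print(to_hiveql(job))
```

The point of the sketch is that the filter, the aggregation, and the write to the target all end up in one statement that Hive executes, which is the essence of pushing a job down.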

2.1.2 HDFS & Pig

In DS, you can create an HDFS-based file format, which tells DS that the source is a file sitting in HDFS. When you apply transformations such as aggregation or filtering to that HDFS file format in DS, DS can convert them into a Pig job. Whether a DS job is converted to a Pig job depends on the kind of transformation applied and on whether DS knows how to convert that transformation to Pig.
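
As an illustration of what such a conversion could produce, the Python sketch below builds a small Pig Latin script that filters and aggregates a comma-delimited file in HDFS. It only shows the shape of a Pig job for a filter plus aggregation, not the script DS actually generates. The function name `to_pig_script`, the file paths, and the `region`/`amount` schema are assumptions.

```python
def to_pig_script(src_path: str, dst_path: str, min_amount: float) -> str:
    """Build a Pig Latin script that filters and aggregates a CSV file in HDFS."""
    lines = [
        # Read the delimited file and declare an (assumed) two-column schema.
        f"raw = LOAD '{src_path}' USING PigStorage(',') AS (region:chararray, amount:double);",
        # Filtering step.
        f"big = FILTER raw BY amount >= {min_amount};",
        # Aggregation step: group by region, then sum the amounts per group.
        "grouped = GROUP big BY region;",
        "totals = FOREACH grouped GENERATE group AS region, SUM(big.amount) AS total;",
        # Write the result back to HDFS.
        f"STORE totals INTO '{dst_path}' USING PigStorage(',');",
    ]
    return "\n".join(lines)


print(to_pig_script("/data/orders.csv", "/data/orders_by_region", 100.0))
```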

2.1.3 MapReduce

The Hive, HDFS, and Pig integrations are all eventually converted to MapReduce jobs and executed in the cluster. In addition, if you use the “Text Data Processing” transform within Data Services, it may also be converted to MapReduce jobs, depending on whether the source and target for the job are in Hadoop.

2.2 SAP SLT (SAP Landscape Transformation)

SAP LT Replication Server is one of SAP’s two real-time replication solutions. SAP LT can load data in batch or in real time from SAP systems as well as from some non-SAP systems. The replication mechanism is trigger based, so it can replicate incremental data very effectively. SAP LT is used primarily for loading data from SAP systems into SAP HANA. However, it is bidirectional, which means you can also use SAP HANA as a source and replicate to other systems.
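
The general idea behind trigger-based replication can be shown with a small, self-contained Python sketch. It uses an in-memory SQLite database purely as a stand-in: triggers on a source table record every change in a logging table, and a reader picks up only the changes made since its last position. The table names, trigger names, and `read_delta` function are hypothetical, and this is not SAP LT’s actual implementation.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
-- Logging table: one row per change, in the order the changes happened.
CREATE TABLE change_log (
    seq INTEGER PRIMARY KEY AUTOINCREMENT,
    op TEXT,
    row_id INTEGER
);
CREATE TRIGGER customers_ins AFTER INSERT ON customers
BEGIN
    INSERT INTO change_log (op, row_id) VALUES ('I', NEW.id);
END;
CREATE TRIGGER customers_upd AFTER UPDATE ON customers
BEGIN
    INSERT INTO change_log (op, row_id) VALUES ('U', NEW.id);
END;
CREATE TRIGGER customers_del AFTER DELETE ON customers
BEGIN
    INSERT INTO change_log (op, row_id) VALUES ('D', OLD.id);
END;
""")


def read_delta(connection, last_seq):
    """Return the changes recorded after last_seq, oldest first."""
    cur = connection.execute(
        "SELECT seq, op, row_id FROM change_log WHERE seq > ? ORDER BY seq",
        (last_seq,),
    )
    return cur.fetchall()


# Each statement below fires one trigger and adds one row to change_log.
conn.execute("INSERT INTO customers (id, name) VALUES (1, 'Acme')")
conn.execute("UPDATE customers SET name = 'Acme Corp' WHERE id = 1")
conn.execute("DELETE FROM customers WHERE id = 1")

delta = read_delta(conn, 0)
print(delta)
```

Because the reader only asks for rows with a sequence number above the last one it processed, each run moves just the incremental changes, which is why a trigger-based approach handles deltas efficiently.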

SAP LT does not support Hadoop integration out of the box. However, there are a few workarounds that you can use to load Hadoop via SLT.

Option 1: Build custom ABAP code within SAP LT Replication Server so that it reads the incremental data from the trigger and loads it into HDFS via an API call.

Option 2: SAP LT can write its data to SAP Data Services, which does have mechanisms to load that data into Hadoop via the Hive adapter or the HDFS file format.

Option 3: Most SAP LT jobs are designed to load data into SAP HANA. You can let SAP LT write data to SAP HANA and then push the data from SAP HANA to Hadoop using Sqoop with a JDBC connector.
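
For Option 3, a Sqoop import from SAP HANA might be assembled roughly as in the Python sketch below. Treat it as a sketch under assumptions: the host, port, user, table, and paths are placeholders, and the JDBC URL form (`jdbc:sap://host:port`) and driver class (`com.sap.db.jdbc.Driver`) should be verified against the SAP HANA JDBC driver you actually deploy. The helper name `sqoop_import_args` is hypothetical.

```python
import shlex


def sqoop_import_args(host, port, user, password_file, table, target_dir, mappers=1):
    """Build the argument list for a Sqoop import from SAP HANA over JDBC."""
    return [
        "sqoop", "import",
        "--connect", f"jdbc:sap://{host}:{port}",
        # Passing --driver makes Sqoop use its generic JDBC connection manager.
        "--driver", "com.sap.db.jdbc.Driver",
        "--username", user,
        # Read the password from a protected file instead of the command line.
        "--password-file", password_file,
        "--table", table,
        "--target-dir", target_dir,
        # A single mapper avoids needing a --split-by column.
        "--num-mappers", str(mappers),
    ]


# All values here are placeholders for illustration.
args = sqoop_import_args(
    "hana.example.com", 30015, "etl_user",
    "/user/etl_user/.hana_password", "SALES_ORDERS", "/data/hana/sales_orders",
)
print(shlex.join(args))
```

The list can be passed to `subprocess.run(args, check=True)` on a node where Sqoop and the HANA JDBC driver jar are installed.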

2.3 SAP Replication server

This is SAP’s true CDC product, which you can use to replicate data between SAP and/or non-SAP systems. It has many more features than SAP LT, and it also supports Hive on Linux. When performing Hive-based replication, you need to be cautious about certain limitations:

- The list of columns in the Hive table and in the table replication definition should match.

- You can only load to static partition columns, which means that you should know the value of the partition column before you load.

- You need some kind of workaround to achieve insert/update style operations on the table.

- You may run into scalability challenges with this integration technique.
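
The first two limitations can be sketched in Python as a pre-load check plus a static-partition insert. This assumes that “match” means the same column names in the same order, which you should confirm for your setup. The function names, table names, and partition column are hypothetical.

```python
def columns_match(hive_columns, repdef_columns):
    """True when both lists hold the same names in the same order (case-insensitive)."""
    return [c.lower() for c in hive_columns] == [c.lower() for c in repdef_columns]


def static_partition_insert(table, columns, partition_col, partition_value, staging_table):
    """Build a HiveQL insert into one partition whose value is known before the load."""
    col_list = ", ".join(columns)
    return (
        f"INSERT INTO TABLE {table} "
        f"PARTITION ({partition_col}='{partition_value}') "
        f"SELECT {col_list} FROM {staging_table}"
    )


hive_cols = ["order_id", "customer_id", "amount"]
repdef_cols = ["ORDER_ID", "CUSTOMER_ID", "AMOUNT"]

if columns_match(hive_cols, repdef_cols):
    # The partition value is fixed up front; that is what makes the partition static.
    print(static_partition_insert("orders", hive_cols, "load_date", "2015-10-08", "orders_staging"))
```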

3 SAP HANA and Hadoop

SAP HANA combines database, data processing, and application platform capabilities in a single in-memory platform. The platform also provides libraries for predictive, planning, text processing, spatial, and business analytics.

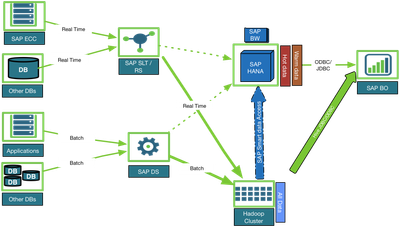

As your HANA system grows, cost or scalability concerns may lead you to offload some of the cold data to more cost-effective storage such as Hadoop. There are two primary ways to approach offloading from SAP HANA to Hadoop.

Option 1) Move only the cold data from SAP HANA to Hadoop.

Option 2) Move all data to Hadoop and let your user/query engine decide whether to go against SAP HANA for hot/warm data or against Hadoop for all data.

3.1 Offload cold data to Hadoop

There are instances when you want to offload cold data (data that is not used very often for reporting) from SAP HANA to more cost-effective storage such as Hadoop. Here are some high-level integration options for offloading data to Hadoop.

SAP also has products that you can use to pull data from HANA as a file. Here are a couple of them.

SAP Open Hub: Using SAP Open Hub, you can extract data from SAP as a file, which can then be loaded into Hadoop via NFS, WebHDFS, etc.

SAP BEx: BEx is more of a query tool. It can be used to pull data out of SAP cubes into files, which can then be loaded into Hadoop.
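
Once an extract file exists, loading it through WebHDFS starts with a `CREATE` request to the NameNode. The Python sketch below only builds that request URL, following the WebHDFS REST API’s `/webhdfs/v1/<path>?op=CREATE` form. The host name, port, path, and user are placeholders, and the helper name `webhdfs_create_url` is hypothetical.

```python
from urllib.parse import quote, urlencode


def webhdfs_create_url(namenode, port, hdfs_path, user, overwrite=False):
    """Build the WebHDFS URL for the first step of a file upload (op=CREATE)."""
    query = urlencode({
        "op": "CREATE",
        "overwrite": str(overwrite).lower(),
        # user.name is how WebHDFS identifies the caller on an unsecured cluster.
        "user.name": user,
    })
    return f"http://{namenode}:{port}/webhdfs/v1{quote(hdfs_path)}?{query}"


url = webhdfs_create_url("namenode.example.com", 50070, "/landing/sap/extract.csv", "etl_user")
print(url)
```

In the WebHDFS protocol, an HTTP `PUT` to this URL is answered with a redirect to a DataNode, and the file content is then sent with a second `PUT` to the redirect location.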

3.2 Move All data to Hadoop

Move all data to Hadoop and let your user/query engine decide whether to go against SAP HANA for hot/warm data or against Hadoop for all data. This option is more compelling when you want to avoid the performance overhead that comes with query federation, and when you do not want to build a complex process within HANA to identify the cold data that needs to be pushed to Hadoop.
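
One way to picture the routing decision is the Python sketch below: a query that only needs recent data goes to SAP HANA, and anything reaching further back goes to Hadoop, which holds everything. The 90-day hot window and the function name `choose_engine` are assumptions for illustration only.

```python
from datetime import date, timedelta


def choose_engine(query_start: date, today: date, hot_window_days: int = 90) -> str:
    """Route to HANA when the query only needs recent data, otherwise to Hadoop."""
    cutoff = today - timedelta(days=hot_window_days)
    return "hana" if query_start >= cutoff else "hadoop"


today = date(2015, 10, 8)
print(choose_engine(date(2015, 9, 1), today))   # starts inside the hot window
print(choose_engine(date(2014, 1, 1), today))   # reaches back into older data
```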

With this option you should be able to reuse many of your existing integration jobs by pointing them at Hadoop as a target alongside the existing SAP HANA target. Here is a high-level architectural diagram that depicts this option.

4 Hadoop using SAP BI and Analytics tools

Three SAP BI tools are very commonly used in the enterprise. Here are those products and the way they integrate with Hadoop.

SAP Lumira: Uses the Hive JDBC connector to read data from Hadoop.

SAP BO (Business Objects) & Crystal Reports: Both products use the Hive ODBC connector to read data from Hadoop.
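
For the JDBC path, the connection is defined by a HiveServer2 URL. The Python sketch below builds one in the standard `jdbc:hive2://host:port/database` form, with an optional `principal` parameter for Kerberos-secured clusters. The host name, realm, and helper name `hive_jdbc_url` are placeholders. ODBC connections are normally configured through a DSN in the driver manager instead and are not shown here.

```python
def hive_jdbc_url(host, port=10000, database="default", principal=None):
    """Build a HiveServer2 JDBC URL; principal is only needed on Kerberos-secured clusters."""
    url = f"jdbc:hive2://{host}:{port}/{database}"
    if principal:
        url += f";principal={principal}"
    return url


print(hive_jdbc_url("hiveserver.example.com"))
print(hive_jdbc_url("hiveserver.example.com", principal="hive/_HOST@EXAMPLE.COM"))
```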

Besides these three products, SAP recently announced a new product called SAP HANA Vora. Vora uses Apache Spark at its core, with additional features that SAP introduced to enhance query execution and to improve on the SQL capabilities that Spark SQL provides.

Vora needs to be installed on every node in the Hadoop cluster where you want to run Vora. It provides a local cache capability using native C code for map functions, along with Apache Spark’s core capabilities. On the SQL front, it provides much richer OLAP (Online Analytical Processing) features, such as hierarchical drill-down.

Vora also works with SAP HANA, but HANA is not required. You can use Vora to move data between SAP HANA and Hadoop using one of SAP’s proprietary integration techniques rather than the ODBC technique that Smart Data Access uses. You can also write federated queries that use both SAP HANA and the Hadoop cluster through Vora.

I hope you find this article useful! Please provide feedback to help make this article more accurate and complete.

Created on 10-14-2015 06:35 PM

Hi @Chakra, do you know if SAP HANA or SDA supports kerberized connections to/from Hadoop?

Created on 11-03-2015 09:10 PM

Hi @Janos, not that I am aware of.

Created on 02-08-2016 11:32 PM

Great article - many thanks for this. Option 2 for DS / SLT mentioned above can also be used to drop delta files to a filesystem which can then be copied to cloud storage instead of HDFS - e.g. S3, WASB which later versions of Hadoop can also access.

Created on 11-16-2016 07:16 AM

Very useful info...

Created on 09-13-2019 08:06 AM

Great article, wonder if you could update it.

SAP Data Services now has a new way to connect to Hadoop that does NOT require installing an SAP DS job server on the Hadoop edge node.

Also, can SLT read BW on HANA objects (ADSO, HANA views, CDS views, etc.) and connect directly to Hadoop?

Thanks

JDR