Hive LLAP deep dive

Created on 08-31-2018 03:37 PM - edited 08-17-2019 06:32 AM

Thanks to Christoph Gutsfeld, Matthias von Görbitz and Rene Pajta for all their valuable pointers for writing this article. The article provides a detailed and thorough understanding of Hive LLAP.

Understanding YARN

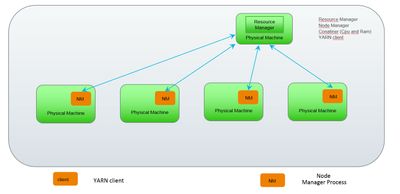

YARN is essentially a system for managing distributed applications. It consists of a central ResourceManager, which arbitrates all available cluster resources, and a per-node NodeManager, which takes direction from the ResourceManager and manages the resources available on a single node. The ResourceManager and the NodeManagers follow a master/worker relationship. YARN defines a unit of work in terms of containers, which are available on each node. The ApplicationMaster negotiates containers with the Scheduler (one of the components of the ResourceManager), and the containers are launched by the NodeManager.

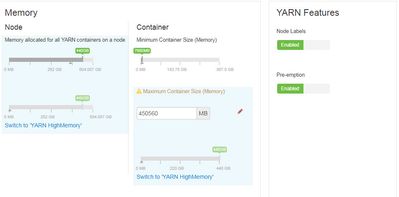

Understanding YARN Memory configuration

- Memory allocated for all YARN containers on a node: the total amount of memory that the NodeManager can use on each node for allocating containers.

- Minimum container size: the minimum amount of RAM that will be allocated to a requested container. Any requested container is allocated memory in multiples of the minimum container size.

- Maximum container size: the maximum amount of RAM that can be allocated to a single container. Maximum container size <= Memory allocated for all YARN containers on a node.

- LLAP daemons run as YARN containers, hence the LLAP daemon size should be >= Minimum container size and <= Maximum container size.
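The rules above can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not YARN's actual scheduler code: the function names `normalize_container_request` and `llap_daemon_fits` are hypothetical, and the rounding shown (round up to a multiple of the minimum, then cap at the maximum) is an assumption based on the description in the list.

```python
def normalize_container_request(requested_mb, min_mb, max_mb):
    """Round a memory request up to a multiple of the minimum container size.

    Hypothetical helper: it mirrors the rule described in the article,
    not the exact behaviour of any particular YARN scheduler.
    """
    if requested_mb > max_mb:
        raise ValueError("request exceeds the maximum container size")
    # Ceiling division, with at least one minimum-sized unit.
    units = max(1, -(-requested_mb // min_mb))
    # Never hand out more than the maximum container size.
    return min(units * min_mb, max_mb)


def llap_daemon_fits(daemon_mb, min_mb, max_mb, node_mb):
    """Check min <= daemon size <= max <= memory for all containers on a node."""
    return min_mb <= daemon_mb <= max_mb <= node_mb


# Example values (assumptions, in MB): 1 GB minimum, 8 GB maximum.
print(normalize_container_request(1500, 1024, 8192))  # 2048
print(llap_daemon_fits(8192, 1024, 8192, 16384))       # True
```

A request of 1500 MB is served as two minimum-sized units, which is why an undersized minimum container setting can waste less memory per container than a large one.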

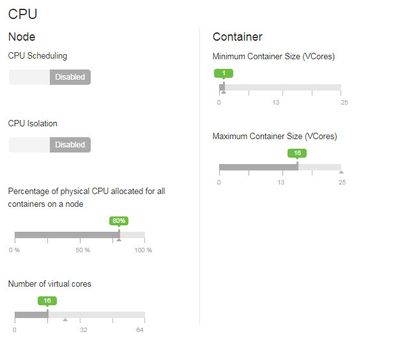

Understanding YARN CPU configuration

- Percentage of physical CPU allocated for all containers on a node: X% of the total CPU that can be used by containers. The value should never be 100%, because CPU is also needed by the DataNode, the NodeManager and the OS.

- Minimum Container Vcores: the minimum number of vcores that will be allocated to a given container.

- Maximum Container Vcores: the maximum number of vcores that can be allocated to a container.

- CPU isolation: this enables cgroups, forcing containers to use exactly the number of CPUs allocated to them. If this option is disabled, a container is free to occupy all the CPUs available on the machine.

- The LLAP daemon runs as one big YARN container, so always ensure that Maximum Container Vcores is set equal to the number of vcores available for running YARN containers (80% of the total number of CPUs available on that host).

- If CPU isolation is enabled, it becomes even more important to set Maximum Container Vcores to its appropriate value.
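As a quick illustration of the 80% rule above, the following sketch derives the number of vcores available to YARN from the physical CPU count. The function name `yarn_vcores` and the choice to round down are assumptions made for this example.

```python
def yarn_vcores(physical_cpus, cpu_percent=80):
    """Vcores available to YARN containers on one host.

    Hypothetical helper: rounds down, and rejects 100% because the
    DataNode, NodeManager and OS also need CPU.
    """
    if not 0 < cpu_percent < 100:
        raise ValueError("cpu_percent must be above 0 and below 100")
    return physical_cpus * cpu_percent // 100


# Example: on a 40-CPU host, Maximum Container Vcores would be set to this value.
print(yarn_vcores(40))  # 32
```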

Hive LLAP Architecture

https://cwiki.apache.org/confluence/display/Hive/LLAP

Also known as Live Long and Process, LLAP provides a hybrid execution model. It consists of a long-lived daemon, which replaces direct interactions with the HDFS DataNode, and a tightly integrated DAG-based framework. Functionality such as caching, pre-fetching, some query processing and access control is moved into the daemon. Small or short queries are largely processed by this daemon directly, while any heavy lifting is performed in standard YARN containers.

Similar to the DataNode, LLAP daemons can be used by other applications as well, especially if a relational view on the data is preferred over file-centric processing. The daemon is also open through optional APIs (e.g., InputFormat) that can be leveraged by other data processing frameworks as a building block.

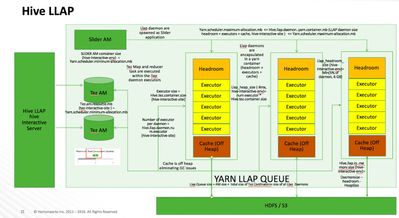

Hive LLAP consists of the following component

- Hive Interactive Server : Thrift server which provide JDBC interface to connect to the Hive LLAP.

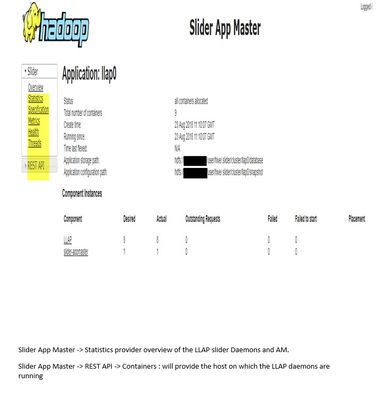

- Slider AM : The slider application which spawns, monitor and maintains the LLAP daemons.

- TEZ AM query coordinator : TEZ Am which accepts the incoming the request of the user and execute them in executors available inside the LLAP daemons (JVM).

- LLAP daemons : To facilitate caching and JIT optimization, and to eliminate most of the startup costs, a daemon runs on the worker nodes on the cluster. The daemon handles I/O, caching, and query fragment execution.

LLAP configuration in detail

| Component | Parameter | Conf Section of Hive | Rule and comments |
|---|---|---|---|
| Slider AM Size | slider_am_container_mb | hive-interactive-env | = yarn.scheduler.minimum-allocation-mb |
| Tez AM Coordinator Size | tez.am.resource.memory.mb | tez-interactive-site | = yarn.scheduler.minimum-allocation-mb |
| Number of Coordinators | hive.server2.tez.sessions.per.default.queue | Settings | Number of concurrent queries LLAP supports. This results in spawning an equal number of Tez AMs. |
| LLAP Daemon Size | hive.llap.daemon.yarn.container.mb | hive-interactive-site | yarn.scheduler.minimum-allocation-mb <= Daemon Size <= yarn.scheduler.maximum-allocation-mb. Rule of thumb: always set it to yarn.scheduler.maximum-allocation-mb. |
| Number of Daemons | num_llap_nodes_for_llap_daemons | hive-interactive-env | Number of LLAP daemons running. This determines the total cache and executors available to run any query on LLAP. |
| Executor Size | hive.tez.container.size | hive-interactive-site | 4 to 6 GB is the recommended value. For each executor you need to allocate one vCPU. |
| Number of Executors | hive.llap.daemon.num.executors | | Determined by the number of "Maximum VCore in YARN" |

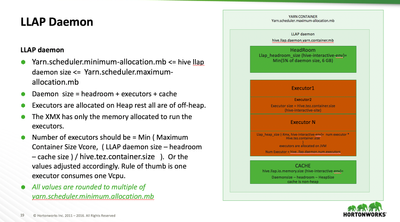
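The rules in the configuration table can be expressed as a small checker. This is a minimal sketch, not a tool shipped with Hive or Ambari: the function `check_llap_settings` is hypothetical, the dictionary keys are the parameter names from the table, and all sizes are assumed to be in MB.

```python
def check_llap_settings(conf, yarn_min_mb, yarn_max_mb, yarn_max_vcores):
    """Return warnings for settings that break the rules of thumb in the table."""
    warnings = []
    if conf["slider_am_container_mb"] != yarn_min_mb:
        warnings.append("slider_am_container_mb should equal the YARN minimum allocation")
    if conf["tez.am.resource.memory.mb"] != yarn_min_mb:
        warnings.append("tez.am.resource.memory.mb should equal the YARN minimum allocation")
    daemon_mb = conf["hive.llap.daemon.yarn.container.mb"]
    if not yarn_min_mb <= daemon_mb <= yarn_max_mb:
        warnings.append("daemon size must lie between the YARN minimum and maximum allocation")
    # 4 to 6 GB per executor is the recommended range.
    if not 4096 <= conf["hive.tez.container.size"] <= 6144:
        warnings.append("hive.tez.container.size is outside the recommended 4 to 6 GB")
    # One vcore per executor, so executors cannot exceed the maximum container vcores.
    if conf["hive.llap.daemon.num.executors"] > yarn_max_vcores:
        warnings.append("more executors than maximum container vcores")
    return warnings


# Example values are assumptions chosen only to exercise the checks.
example = {
    "slider_am_container_mb": 1024,
    "tez.am.resource.memory.mb": 1024,
    "hive.llap.daemon.yarn.container.mb": 98304,
    "hive.tez.container.size": 4096,
    "hive.llap.daemon.num.executors": 12,
}
print(check_llap_settings(example, yarn_min_mb=1024, yarn_max_mb=98304, yarn_max_vcores=12))
```

An empty list means every rule of thumb in the table is satisfied for the given values.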

LLAP Daemon configuration in detail

| Component | Parameter | Section | Rule and comments |
|---|---|---|---|
| Maximum YARN Container Size | yarn.scheduler.maximum-allocation-mb | YARN settings | This is the maximum amount of memory a container can be allocated. It is recommended to run the LLAP daemon as one big container on a node. |
| Daemon Size | hive.llap.daemon.yarn.container.mb | hive-interactive-site | yarn.scheduler.minimum-allocation-mb <= Daemon Size <= yarn.scheduler.maximum-allocation-mb. Rule of thumb: always set it to yarn.scheduler.maximum-allocation-mb. |
| Headroom | llap_headroom_space | hive-interactive-env | MIN(5% of Daemon Size, 6 GB). It is off-heap but part of the LLAP daemon. |
| Heap Size | llap_heap_size | hive-interactive-env | Number of Executors \* hive.tez.container.size |
| Cache Size | hive.llap.io.memory.size | hive-interactive-site | Daemon Size - Heap Size - Headroom. It is off-heap but part of the LLAP daemon. |
| LLAP Queue Size | | | Slider AM Size + Number of Tez Containers \* hive.tez.container.size + Size of LLAP Daemon \* Number of LLAP Daemons |

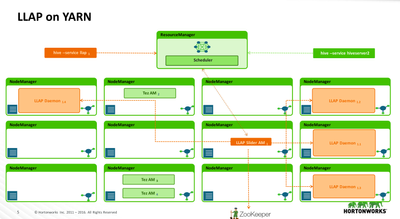
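To make the daemon sizing rules concrete, here is a short worked calculation in Python. It is a sketch that applies the formulas from the table as written; the function names, the integer rounding of the 5% headroom, and the example numbers (a 96 GB daemon, 12 executors of 4 GB, three daemons) are assumptions for illustration only.

```python
def llap_daemon_memory(daemon_mb, num_executors, executor_mb):
    """Split one LLAP daemon into headroom, heap and cache (all in MB)."""
    headroom_mb = min(daemon_mb * 5 // 100, 6 * 1024)  # MIN(5% of daemon, 6 GB)
    heap_mb = num_executors * executor_mb              # executors * hive.tez.container.size
    cache_mb = daemon_mb - heap_mb - headroom_mb       # what is left for the off-heap cache
    if cache_mb < 0:
        raise ValueError("heap plus headroom exceed the daemon size")
    return {"headroom_mb": headroom_mb, "heap_mb": heap_mb, "cache_mb": cache_mb}


def llap_queue_mb(slider_am_mb, num_tez_containers, tez_container_mb,
                  daemon_mb, num_daemons):
    """LLAP queue size, following the formula in the table as written."""
    return slider_am_mb + num_tez_containers * tez_container_mb + daemon_mb * num_daemons


# 96 GB daemon with 12 executors of 4 GB each.
print(llap_daemon_memory(98304, 12, 4096))
# {'headroom_mb': 4915, 'heap_mb': 49152, 'cache_mb': 44237}

# 1 GB Slider AM, 2 Tez containers of 4 GB, three 96 GB daemons.
print(llap_queue_mb(1024, 2, 4096, 98304, 3))  # 304128
```

Raising an error when the cache would be negative catches the common mistake of configuring more executors than the daemon container can hold.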

LLAP on YARN

LLAP Interactive Query Configuration

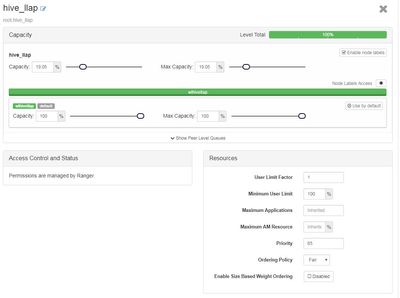

LLAP YARN Queue Configuration

Key configurations to set:

1. User Limit Factor = 1

2. Capacity and Max Capacity = 100

LLAP Daemon in Detail

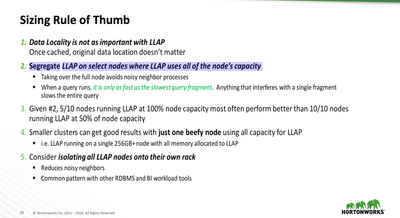

Sizing Rule of Thumb

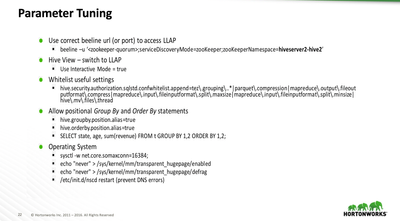

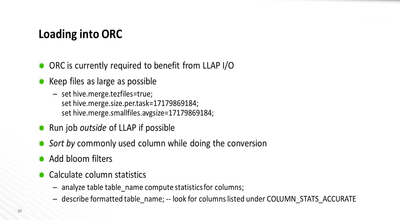

Parameter Tuning

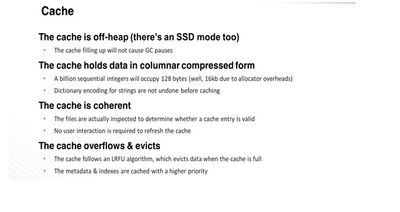

CACHE

Managing LLAP through the command-line utility (Flex)

- List Slider jobs: slider list

- Show Slider status: slider status slider-application-name (for example, llap0)

- Show the diagnostic status of a Slider app: slider diagnostics --application --name slider-application-name (llap0) --verbose

- Scale down by one LLAP daemon: slider flex slider-application-name (llap0) --component LLAP -1

- Scale up by one LLAP daemon: slider flex slider-application-name (llap0) --component LLAP +1

- Stop the Slider app: slider stop slider-application-name (llap0)

Troubleshooting

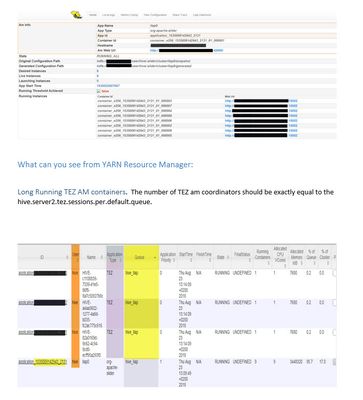

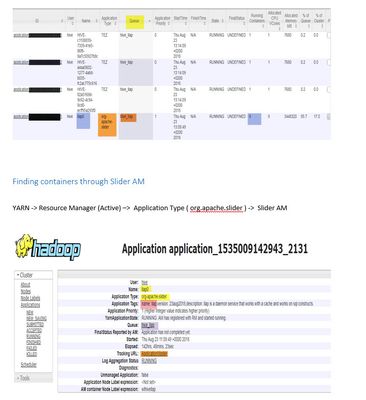

Finding which hosts the LLAP daemons are running on

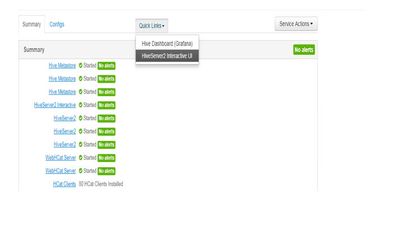

Ambari -> Hive -> HiveServer2 Interactive UI -> Running Instances

Behavior

1. In HDP 2.6.4, preemption of queries is not supported.

2. If multiple concurrent queries have exhausted the queue, any incoming query will be in a waiting state.

3. All queries running on Hive LLAP can be seen in the Tez UI.

Created on 09-17-2018 12:12 PM


Hi Kgautam,

An amazing summary article for LLAP beginners and explorers. I have a few questions about it:

1) "In HDP 2.6.4 preemption of queries is not supported": we are on the same HDP version, but Grafana shows task pre-emption times and attempts?

2) How do I enable SSD caching? (I didn't find any documentation online about enabling it for HDP 2.6.4.)

3) "Run job outside of Llap if possible": we are facing a lot of daemon failures while writing large tables (>1 TB) into partitioned ORC tables. What is your view on running ORC writes outside of LLAP?