- How to access data files stored in AWS S3 buckets ...

Created on 04-02-2016 12:22 AM - edited 08-17-2019 12:53 PM

Step 1 : Log in to AWS with your credentials

Step 2 : From the AWS console, go to the following options and create a user for the demo

Security & Identity --> Identity and Access Management --> Users --> Create New Users

Step 3 : Make note of the credentials

awsAccessKeyId = 'xxxxxxxxxxxxxxxxxxxxxxxxxxxxx';

awsSecretAccessKey = 'yyyyyyyyyyyyyyyyyyyyyyyyyyy';

Step 4 : Add the user to the Admin group: click the “User Actions” button, select the option Add Users to Group, and select your user (admin)

Step 5 : Assign the AdministratorAccess policy to the user (admin)

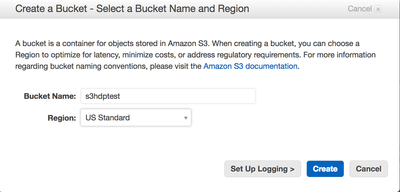

Step 6 : In the AWS Console, go to S3, create a bucket named “s3hdptest”, and pick your region

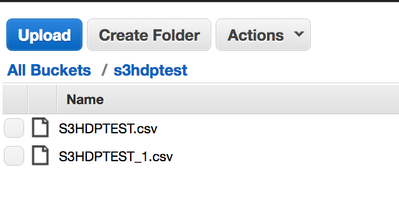

Step 7 : Upload the file manually by using the upload button. In our example we are uploading the file S3HDPTEST.csv

Step 8 : In the Hadoop environment, create a user with the same name as the user created in AWS (admin)

Step 9 : In Ambari, add the properties below to both hdfs-site.xml and hive-site.xml, using the credentials noted in Step 3 as the values

<property>
  <name>fs.s3a.access.key</name>
  <value>xxxxxxxxxxxxxxxxxxxxxxxxxxxxx</value>
  <description>AWS access key ID. Omit for Role-based authentication.</description>
</property>
<property>
  <name>fs.s3a.secret.key</name>
  <value>yyyyyyyyyyyyyyyyyyyyyyyyyyy</value>
  <description>AWS secret key. Omit for Role-based authentication.</description>
</property>
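If you prefer to generate the two property blocks rather than type them, a small shell helper can print them from the Step 3 credentials. This is only a sketch: the function name `s3a_property` and the variable names are made up for illustration, and the values are the same placeholders used in Step 3.

```shell
# Print one Hadoop <property> element.
# $1 = property name, $2 = property value (hypothetical helper, not part of Hadoop).
s3a_property() {
  printf '<property>\n  <name>%s</name>\n  <value>%s</value>\n</property>\n' "$1" "$2"
}

# Placeholder credentials from Step 3; replace them with your own.
ACCESS_KEY='xxxxxxxxxxxxxxxxxxxxxxxxxxxxx'
SECRET_KEY='yyyyyyyyyyyyyyyyyyyyyyyyyyy'

# Emit the two properties that go into hdfs-site.xml and hive-site.xml.
s3a_property fs.s3a.access.key "$ACCESS_KEY"
s3a_property fs.s3a.secret.key "$SECRET_KEY"
```

The output can be pasted into the custom properties section in Ambari. The helper does no XML escaping, so it assumes the values contain no `<` or `&` characters.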

Step 10 : Restart the Hadoop services such as HDFS and Hive, and any dependent services

Step 11 : Ensure that NTP is configured properly so that the system clock matches the AWS timestamp; follow the steps in the link below

http://www.emind.co/how-to/how-to-fix-amazon-s3-requesttimetooskewed
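The reason the clock matters: S3 rejects a request with RequestTimeTooSkewed when the request time differs from the server time by more than 15 minutes. A minimal sketch of that check, assuming you already have both times as epoch seconds (the function name `clock_skew_ok` is hypothetical):

```shell
# Maximum clock difference S3 tolerates before rejecting a request (15 minutes).
MAX_SKEW_SECONDS=900

# Return 0 if the two epoch timestamps are within the allowed skew, 1 otherwise.
# $1 = local time in epoch seconds, $2 = reference time in epoch seconds.
clock_skew_ok() {
  diff=$(( $1 - $2 ))
  # Take the absolute value of the difference.
  if [ "$diff" -lt 0 ]; then
    diff=$(( 0 - diff ))
  fi
  [ "$diff" -le "$MAX_SKEW_SECONDS" ]
}
```

For example, `clock_skew_ok "$(date -u +%s)" "$reference_epoch"` succeeds when the local clock is close enough, where `reference_epoch` would come from a time source you trust.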

Step 12 : Run the below statement from the command line to test whether we are able to view the file from S3

[root@sandbox ~]# su admin
bash-4.1$ hdfs dfs -ls s3a://s3hdptest/S3HDPTEST.csv
-rw-rw-rw- 1 188 2016-03-29 22:12 s3a://s3hdptest/S3HDPTEST.csv
bash-4.1$
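If you want the same check in a script instead of reading the listing by eye, `hdfs dfs -test -e` returns a zero exit status when the path exists. The wrapper below is a sketch; the function name `check_s3a_path` and its messages are made up for illustration.

```shell
# Report whether an s3a:// path is reachable with the configured credentials.
# $1 = full path, for example s3a://s3hdptest/S3HDPTEST.csv
check_s3a_path() {
  if hdfs dfs -test -e "$1"; then
    echo "OK: $1 is reachable"
  else
    echo "FAIL: $1 is missing or the credentials were rejected" >&2
    return 1
  fi
}
```

Run it as the admin user, for example `check_s3a_path s3a://s3hdptest/S3HDPTEST.csv`.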

Step 13: To verify the data you can use the below command

bash-4.1$ hdfs dfs -cat s3a://s3hdptest/S3HDPTEST.csv

Step 14 : Copy a file from S3 to HDFS

bash-4.1$ hadoop fs -cp s3a://s3hdptest/S3HDPTEST.csv /user/admin/S3HDPTEST.csv

Step 15 : Copy a file from HDFS to S3

bash-4.1$ hadoop fs -cp /user/admin/S3HDPTEST.csv s3a://s3hdptest/S3HDPTEST_1.csv

Step 15a : Verify whether the file has been stored in the AWS S3 Bucket
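The copy in Steps 14 and 15 and the verification in Step 15a can also be combined on the command line. The sketch below assumes the same `hadoop fs` client used above; `copy_and_verify` is a hypothetical helper name.

```shell
# Copy a file between S3 and HDFS (either direction), then confirm the
# destination exists. $1 = source path, $2 = destination path.
copy_and_verify() {
  src=$1
  dst=$2
  # -cp copies; the source file stays in place.
  hadoop fs -cp "$src" "$dst" || return 1
  # -test -e exits with status 0 only if the destination exists.
  hadoop fs -test -e "$dst"
}
```

For example, `copy_and_verify /user/admin/S3HDPTEST.csv s3a://s3hdptest/S3HDPTEST_1.csv` covers Step 15 and Step 15a in one call. For large data sets, `hadoop distcp` is the usual alternative to `-cp`.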

Step 16 : To access the data using Hive from S3:

Connect to Hive from Ambari using the Hive Views or Hive CLI

A) Create a table for the datafile in S3

hive> CREATE EXTERNAL TABLE mydata (
    >   FirstName STRING,
    >   LastName STRING,
    >   StreetAddress STRING,
    >   City STRING,
    >   State STRING,
    >   ZipCode INT)
    > ROW FORMAT DELIMITED FIELDS TERMINATED BY ','
    > LOCATION 's3a://s3hdptest/';

B) Select the file data from Hive

hive> SELECT * FROM mydata;
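One thing to watch with the table in A): LOCATION names a directory, not a file, so Hive reads the files directly under it. With the bucket root as the location, the S3HDPTEST_1.csv copy from Step 15 would, as far as I understand, be read as table data too. A sketch of a variant that points the table at a dedicated prefix instead; the `mydata/` prefix and the function name `mydata_ddl` are assumptions for illustration, not part of the original setup.

```shell
# Print the CREATE TABLE statement for a given S3 location.
# $1 = directory that holds only this table's files,
#      for example s3a://s3hdptest/mydata/ (hypothetical prefix).
mydata_ddl() {
  cat <<EOF
CREATE EXTERNAL TABLE IF NOT EXISTS mydata (
  FirstName STRING,
  LastName STRING,
  StreetAddress STRING,
  City STRING,
  State STRING,
  ZipCode INT)
ROW FORMAT DELIMITED FIELDS TERMINATED BY ','
LOCATION '$1';
EOF
}
```

After copying the CSV under that prefix, the statement can be run with `hive -e "$(mydata_ddl s3a://s3hdptest/mydata/)"`.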

Step 17 : To access the data using Pig from S3:

[root@sandbox ~]# pig -x tez
grunt> a = load 's3a://s3hdptest/S3HDPTEST.csv' using PigStorage();
grunt> dump a;

Step 18 : To store the data from Pig to S3:

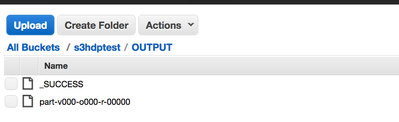

grunt> store a into 's3a://s3hdptest/OUTPUT' using PigStorage();

Checking the created data file in AWS S3 bucket
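The result can also be checked from the command line rather than the AWS console. Pig's store writes a directory of part files, not a single file, so the sketch below lists the directory and then prints the part files. The helper name `show_pig_output` is hypothetical, and it assumes the part file names start with `part-`.

```shell
# List a Pig output directory and print the data it contains.
# $1 = output directory, for example s3a://s3hdptest/OUTPUT
show_pig_output() {
  out_dir=$1
  hdfs dfs -ls "$out_dir" || return 1
  # The glob is quoted so that Hadoop, not the local shell, expands it.
  hdfs dfs -cat "$out_dir/part-*"
}
```

For the store command above, this would be called as `show_pig_output s3a://s3hdptest/OUTPUT`.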

Note: For the article related to accessing AWS S3 Bucket using Spark please refer to the below link:

Created on 04-05-2016 04:13 PM


great article!!

Created on 04-10-2016 11:21 PM


Hi, thanks very much for the step-by-step information on accessing AWS S3 files from Hadoop. I followed the same steps, and I think I am connecting to S3, but I still get an error when accessing the S3 bucket. We are using HDP 2.2, and here is the message I am getting. I get the same issue from Pig as well as from Hive. I need help resolving this issue.

java.io.IOException: org.apache.hadoop.fs.s3.S3Exception: org.jets3t.service.ServiceException: S3 Error Message. -- ResponseCode: 404, ResponseStatus: Not Found, XML Error Message: <?xml version="1.0" encoding="UTF-8"?><Error><Code>NoSuchBucket</Code><Message>The specified bucket does not exist</Message><BucketName>bucketname</BucketName><RequestId>requestid</RequestId><HostId>hostid</HostId></Error>

Created on 05-16-2016 09:27 PM


Thank you! The above posts are very helpful.

I have a question on S3 access. Please help me on this.

I want to access 2 different S3 buckets with different permissions from HDFS. What is the best way to access the data and copy it to HDFS? Is there a generic approach using IAM roles, or do we have to use only the AWS access keys and override one after the other?

Created on 11-18-2016 09:01 AM


I have a question about accessing multiple AWS S3 buckets of different accounts in Hive.

I have several S3 buckets which belong to different AWS accounts.

Following your info, I can access one of the buckets in Hive. However, I have to write fs.s3a.access.key and fs.s3a.secret.key into hive-site.xml, which means that for one instance of Hive I can only access one AWS S3 account. Is that right?

I want to use buckets from different AWS accounts in one Hive instance. Is that possible?

Created on 12-13-2016 11:52 PM


S3N is deprecated in newer versions of Hadoop, so it's better to use s3a. To use s3a, specify s3a:// in front of the path.

The following properties need to be configured first:

<property>
  <name>fs.s3a.access.key</name>
  <value>ACCESS-KEY</value>
</property>
<property>
  <name>fs.s3a.secret.key</name>
  <value>SECRET-KEY</value>
</property>

Created on 01-05-2017 05:13 PM


Newbie question: how do I add a user in the Hadoop environment (Step 8 above)? Can someone please enlighten me? Thanks

Created on 06-26-2019 12:06 AM


How do we handle S3 user-level permissions if the user runs the following command? Can we leverage Ranger HDFS policies to restrict S3 permissions if the user goes through the HDFS client?

hdfs dfs -cat s3a://s3hdptest/S3HDPTEST.csv