Created on 04-18-2016 09:28 PM - edited 08-17-2019 12:45 PM

Setting up Hortonworks Dataflow (HDF) to work with kerberized Kafka in Hortonworks Data Platform (HDP)

HDF 1.2 does not contain the same Kafka client libraries as Apache NiFi. The HDF Kafka libraries are built specifically to work with the Kafka versions supplied with HDP.

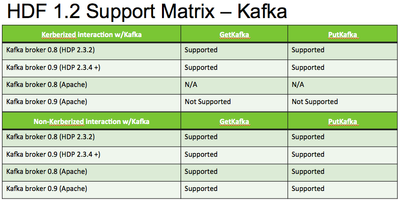

The following Kafka support matrix breaks down what is supported in each Kafka version:

*** (Apache) refers to the Kafka version downloadable from the Apache website.

In newer versions of HDF (1.1.2 and later), NiFi uses zookeeper to maintain cluster-wide state, so the following applies only if this is an HDF NiFi cluster:

1. If a NiFi cluster has been set up to use a kerberized external or internal zookeeper for state, every kerberized connection to any other zookeeper must use the same keytab and principal. For example, a kerberized embedded zookeeper in NiFi would need to be configured to use the same client keytab and principal you want to use to authenticate with, say, a Kafka zookeeper.

2. If a NiFi cluster has been set up to use a non-kerberized zookeeper for state, it cannot communicate with any other zookeeper that uses Kerberos.

3. If a NiFi cluster has been set up to use a kerberized zookeeper for state, it cannot communicate with any other non-kerberized zookeeper.
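The three rules above reduce to a small decision function. The sketch below is an illustration only, assuming Python 3; `zk_connection_allowed` and its parameters are hypothetical names, not a NiFi or HDF API.

```python
def zk_connection_allowed(state_zk_kerberized: bool,
                          target_zk_kerberized: bool,
                          same_principal_and_keytab: bool = True) -> bool:
    """Return True if a NiFi cluster node may connect to another zookeeper.

    state_zk_kerberized: whether the zookeeper NiFi uses for cluster state is kerberized.
    target_zk_kerberized: whether the zookeeper NiFi wants to reach (e.g. Kafka's) is kerberized.
    same_principal_and_keytab: whether the connection would reuse the state client's credentials.
    """
    # Rules 2 and 3: kerberized and non-kerberized zookeepers cannot be mixed.
    if state_zk_kerberized != target_zk_kerberized:
        return False
    # Rule 1: both sides kerberized, so the shared JAAS "Client" entry forces
    # the same keytab and principal for every zookeeper connection.
    if state_zk_kerberized:
        return same_principal_and_keytab
    # Neither side uses Kerberos.
    return True


if __name__ == "__main__":
    # Kerberized state zookeeper talking to a kerberized Kafka zookeeper
    print(zk_connection_allowed(True, True))   # True
    # Non-kerberized state zookeeper talking to a kerberized Kafka zookeeper
    print(zk_connection_allowed(False, True))  # False
```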

With that said, the PutKafka and GetKafka processors do not have keytab and principal properties the way the HDFS processors do. Instead, the keytab and principal are defined in the same JAAS file used when you set up HDF cluster state management. So before even trying to connect to kerberized Kafka, we need to configure NiFi state management to use either an embedded or an external kerberized zookeeper. Even if you are not clustered right now, take the above into consideration if you plan to move to a cluster later.

——————————————

NiFi Cluster Kerberized State Management:

https://nifi.apache.org/docs/nifi-docs/html/administration-guide.html#state_management

Let's assume you followed the procedure linked above to set up your NiFi cluster with an embedded zookeeper. At the end of that procedure, you will have made the following configuration changes on each of your NiFi nodes:

1. Created a zookeeper-jaas.conf file

On nodes running an embedded zookeeper, it will contain something like this:

Server {

com.sun.security.auth.module.Krb5LoginModule required

useKeyTab=true

keyTab="./conf/zookeeper-server.keytab"

storeKey=true

useTicketCache=false

principal="zookeeper/myHost.example.com@EXAMPLE.COM";

};

Client {

com.sun.security.auth.module.Krb5LoginModule required

useKeyTab=true

keyTab="./conf/nifi.keytab"

storeKey=true

useTicketCache=false

principal="nifi@EXAMPLE.COM";

};

On nodes without an embedded zookeeper, it will look something like this:

Client {

com.sun.security.auth.module.Krb5LoginModule required

useKeyTab=true

keyTab="./conf/nifi.keytab"

storeKey=true

useTicketCache=false

principal="nifi@EXAMPLE.COM";

};

2. Added a config line to the NiFi bootstrap.conf file:

java.arg.15=-Djava.security.auth.login.config=/<path>/zookeeper-jaas.conf

*** The arg number (15 in this case) must not be used by any other java.arg line in the bootstrap.conf file.

3. Added 3 additional properties to the bottom of the zookeeper.properties file you have configured per the linked procedure above:

authProvider.1=org.apache.zookeeper.server.auth.SASLAuthenticationProvider

jaasLoginRenew=3600000

requireClientAuthScheme=sasl
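If you manage many nodes, the bootstrap.conf and zookeeper.properties edits above can be scripted. Here is a minimal sketch, assuming Python 3.8+ and plain key=value files; `jaas_bootstrap_line` and `add_sasl_properties` are hypothetical helpers, and the JAAS path in the example is a placeholder for your own `/<path>/zookeeper-jaas.conf`.

```python
import re

# Active or commented-out "java.arg.N=" lines in bootstrap.conf. Commented
# lines are counted too, so re-enabling one later cannot cause a clash.
JAVA_ARG = re.compile(r"^\s*#?\s*java\.arg\.(\d+)\s*=")

# The three properties from step 3 above.
SASL_PROPS = {
    "authProvider.1": "org.apache.zookeeper.server.auth.SASLAuthenticationProvider",
    "jaasLoginRenew": "3600000",
    "requireClientAuthScheme": "sasl",
}


def jaas_bootstrap_line(bootstrap_text: str, jaas_path: str) -> str:
    """Build the bootstrap.conf line using a java.arg index no other line uses."""
    used = [int(m.group(1)) for line in bootstrap_text.splitlines()
            if (m := JAVA_ARG.match(line))]
    n = max(used, default=0) + 1
    return f"java.arg.{n}=-Djava.security.auth.login.config={jaas_path}"


def add_sasl_properties(props_text: str) -> str:
    """Append any of the SASL properties that zookeeper.properties lacks."""
    existing = set()
    for line in props_text.splitlines():
        stripped = line.strip()
        if stripped and not stripped.startswith("#") and "=" in stripped:
            existing.add(stripped.split("=", 1)[0].strip())
    # Keys already present are left untouched, whatever their value.
    missing = [f"{k}={v}" for k, v in SASL_PROPS.items() if k not in existing]
    if not missing:
        return props_text
    if props_text and not props_text.endswith("\n"):
        props_text += "\n"
    return props_text + "\n".join(missing) + "\n"


if __name__ == "__main__":
    bootstrap = "java.arg.2=-Xms512m\njava.arg.3=-Xmx512m\n#java.arg.13=-XX:+UseG1GC\n"
    # Placeholder path; substitute the real location of your JAAS file.
    print(jaas_bootstrap_line(bootstrap, "/opt/nifi/conf/zookeeper-jaas.conf"))
    print(add_sasl_properties("clientPort=2181\n"), end="")
```

Choosing one more than the highest index in use (including commented-out lines) is a conservative way to satisfy the "unused java.arg number" rule; reusing a gap in the numbering would also work.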

—————————————

Scenario 1: Kerberized Kafka setup for a NiFi cluster:

For scenario one, we assume you are running a NiFi cluster that has been set up as described above to use a kerberized zookeeper for NiFi state management.

With that in place, you have the foundation needed to add support for connecting to kerberized Kafka brokers and Kafka zookeepers.

The PutKafka processor connects to the Kafka brokers, and the GetKafka processor connects to the Kafka zookeepers. To connect to them via Kerberos, do the following:

1. Modify the zookeeper-jaas.conf file you created when setting up kerberized state management above:

You will need to add a new section to the zookeeper-jaas.conf file for the Kafka client:

If your NiFi node is running an embedded zookeeper, your zookeeper-jaas.conf file will contain:

Server {

com.sun.security.auth.module.Krb5LoginModule required

useKeyTab=true

keyTab="./conf/zookeeper-server.keytab"

storeKey=true

useTicketCache=false

principal="zookeeper/myHost.example.com@EXAMPLE.COM";

};

Client {

com.sun.security.auth.module.Krb5LoginModule required

useKeyTab=true

keyTab="./conf/nifi.keytab"

storeKey=true

useTicketCache=false

principal="nifi@EXAMPLE.COM";

};

KafkaClient {

com.sun.security.auth.module.Krb5LoginModule required

useTicketCache=true

renewTicket=true

serviceName="kafka"

useKeyTab=true

keyTab="./conf/nifi.keytab"

principal="nifi@EXAMPLE.COM";

};

*** Note that the "KafkaClient" and "Client" sections (the latter used for both the embedded zookeeper and the Kafka zookeeper) use the same principal and keytab. ***

*** The principal and keytab for the "Server" section (used by the embedded NiFi zookeeper) do not need to match those used by "KafkaClient" and "Client". ***

If your NiFi cluster node is not running an embedded zookeeper, your zookeeper-jaas.conf file will contain:

Client {

com.sun.security.auth.module.Krb5LoginModule required

useKeyTab=true

keyTab="./conf/nifi.keytab"

storeKey=true

useTicketCache=false

principal="nifi@EXAMPLE.COM";

};

KafkaClient {

com.sun.security.auth.module.Krb5LoginModule required

useTicketCache=true

renewTicket=true

serviceName="kafka"

useKeyTab=true

keyTab="./conf/nifi.keytab"

principal="nifi@EXAMPLE.COM";

};

*** Note that the KafkaClient and Client sections (the latter used for both the state-management zookeeper and the Kafka zookeeper) use the same principal and keytab. ***

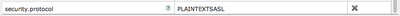

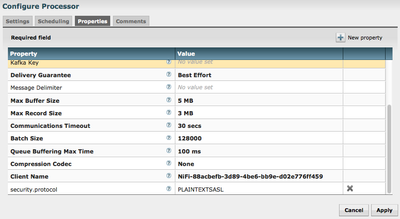

2. Add an additional property to the PutKafka and GetKafka processors:

Now all the pieces are in place, and we can start our NiFi(s) and add or modify the PutKafka and GetKafka processors.

On the Properties tab of each PutKafka and GetKafka processor, use the new property (+) icon to add one new property, security.protocol, with the value PLAINTEXTSASL.

You will use this same security.protocol (PLAINTEXTSASL) when interacting with HDP Kafka versions 0.8.2 and 0.9.0.
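Because the Client and KafkaClient sections must share one keytab and principal, generating zookeeper-jaas.conf from a single pair of credentials is one way to keep them in sync and to avoid stray curly quotes in values. A minimal sketch, assuming Python 3.7+ and the exact option sets shown in step 1; `render_jaas` is a hypothetical helper, not part of NiFi or HDF.

```python
from typing import Dict, Optional, Tuple, Union

Value = Union[bool, str]


def _fmt(value: Value) -> str:
    # Booleans are written bare (true/false); strings are wrapped in
    # straight double quotes, matching the examples above.
    if isinstance(value, bool):
        return "true" if value else "false"
    return f'"{value}"'


def _section(name: str, options: Dict[str, Value]) -> str:
    lines = [f"{name} {{", "    com.sun.security.auth.module.Krb5LoginModule required"]
    lines += [f"    {key}={_fmt(val)}" for key, val in options.items()]
    lines[-1] += ";"  # the last option closes the login module entry
    lines.append("};")
    return "\n".join(lines)


def render_jaas(keytab: str, principal: str,
                server: Optional[Tuple[str, str]] = None) -> str:
    """Render zookeeper-jaas.conf with Client and KafkaClient sharing credentials.

    server is an optional (keytab, principal) pair for the embedded zookeeper's
    Server section; it may differ from the client credentials.
    """
    sections = []
    if server is not None:
        server_keytab, server_principal = server
        sections.append(_section("Server", {
            "useKeyTab": True, "keyTab": server_keytab, "storeKey": True,
            "useTicketCache": False, "principal": server_principal}))
    sections.append(_section("Client", {
        "useKeyTab": True, "keyTab": keytab, "storeKey": True,
        "useTicketCache": False, "principal": principal}))
    sections.append(_section("KafkaClient", {
        "useTicketCache": True, "renewTicket": True, "serviceName": "kafka",
        "useKeyTab": True, "keyTab": keytab, "principal": principal}))
    return "\n\n".join(sections) + "\n"


if __name__ == "__main__":
    print(render_jaas("./conf/nifi.keytab", "nifi@EXAMPLE.COM"))
```

Pass `server=(keytab, principal)` on nodes that run an embedded zookeeper and omit it on nodes that do not; the call without `server` also matches the standalone file described in Scenario 2.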

————————————

Scenario 2: Kerberized Kafka setup for a standalone NiFi instance:

For scenario two, a standalone NiFi does not use zookeeper for state management. So rather than modifying an existing jaas.conf file, we need to create one from scratch.

The PutKafka processor connects to the Kafka brokers, and the GetKafka processor connects to the Kafka zookeepers. To connect to them via Kerberos, do the following:

1. Create a jaas.conf file somewhere on the server running your NiFi instance. The file can be named whatever you want, but to avoid confusion should you later turn your standalone NiFi deployment into a NiFi cluster deployment, I recommend naming it zookeeper-jaas.conf.

Add the following lines to this zookeeper-jaas.conf file; they will be used to communicate with the kerberized Kafka brokers and kerberized Kafka zookeeper(s):

Client {

com.sun.security.auth.module.Krb5LoginModule required

useKeyTab=true

keyTab="./conf/nifi.keytab"

storeKey=true

useTicketCache=false

principal="nifi@EXAMPLE.COM";

};

KafkaClient {

com.sun.security.auth.module.Krb5LoginModule required

useTicketCache=true

renewTicket=true

serviceName="kafka"

useKeyTab=true

keyTab="./conf/nifi.keytab"

principal="nifi@EXAMPLE.COM";

};

*** Note that the KafkaClient and Client sections use the same principal and keytab. ***

2. Add a config line to the NiFi bootstrap.conf file:

java.arg.15=-Djava.security.auth.login.config=/<path>/zookeeper-jaas.conf

*** The arg number (15 in this case) must not be used by any other java.arg line in the bootstrap.conf file.

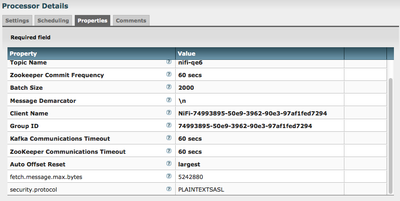

3. Add an additional property to the PutKafka and GetKafka processors:

Now all the pieces are in place, and we can start NiFi and add or modify the PutKafka and GetKafka processors.

On the Properties tab of each PutKafka and GetKafka processor, use the new property (+) icon to add one new property, security.protocol, with the value PLAINTEXTSASL.

You will use this same security.protocol (PLAINTEXTSASL) when interacting with HDP Kafka versions 0.8.2 and 0.9.0.

————————————————————

That should be all you need to get set up and running.

Let me fill you in on a few configuration recommendations for your PutKafka and GetKafka processors to achieve better throughput:

PutKafka:

1. Ignore for now what the documentation says about the Batch Size property on the PutKafka processor. It is really a measure of bytes, so jack that baby up from the default of 200 to a much larger value.

2. Kafka can be configured to accept larger messages but is much more efficient working with smaller ones. The default maximum message size accepted by Kafka is 1 MB, so try to keep individual messages smaller than that. Set the Max Record Size property to the maximum message size configured on your Kafka. Changing this value will not change what your Kafka can accept, but it will prevent NiFi from trying to send something too big.

3. Set the Max Buffer Size property to a value large enough to accommodate the FlowFiles being fed to the processor. A single NiFi FlowFile can contain many individual messages, and the Message Delimiter property can be used to split that large FlowFile's content into its smaller messages. The delimiter could be a new line or even a specific string of characters that denotes where one message ends and the next begins.

4. Leave the run schedule at 0 sec, and you may even want to give PutKafka an extra thread (Concurrent Tasks).
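The delimiter-based splitting and the Max Record Size check described in items 2 and 3 can be illustrated with a short sketch. It is not PutKafka's implementation: `split_messages` is a hypothetical helper, and dropping empty segments and raising on oversized messages are choices made for this example.

```python
from typing import List


def split_messages(content: bytes, delimiter: bytes, max_record_size: int) -> List[bytes]:
    """Split one FlowFile's content into Kafka messages on a delimiter.

    Empty segments (for example, after a trailing delimiter) are dropped, and
    any message larger than max_record_size is reported instead of sent.
    """
    messages = [part for part in content.split(delimiter) if part]
    oversized = [i for i, msg in enumerate(messages) if len(msg) > max_record_size]
    if oversized:
        raise ValueError(
            f"messages {oversized} exceed Max Record Size of {max_record_size} bytes")
    return messages


if __name__ == "__main__":
    flowfile = b"event-1\nevent-2\nevent-3\n"
    print(split_messages(flowfile, b"\n", max_record_size=1_000_000))
```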

GetKafka:

1. The Batch Size property on the GetKafka processor is described correctly in the documentation: it refers to the number of messages to batch together when pulling from a Kafka topic. The messages end up in a single output FlowFile, separated by the configured Message Demarcator (default: new line).

2. When pulling data from a Kafka topic that has been configured to allow messages larger than 1 MB, you must add an additional property to the GetKafka processor so it will pull those larger messages (the processor itself defaults to 1 MB). Add fetch.message.max.bytes and configure it to match the max allowed message size set on Kafka for the topic.

3. When using the GetKafka processor on a standalone instance of NiFi, the number of concurrent tasks should match the number of partitions on the Kafka topic. This is not the case (despite what the bulletin tells you when the processor is started) when GetKafka runs on a NiFi cluster. Let's say you have a 3-node NiFi cluster. Each node in the cluster will pull from a different partition at the same time, so if the topic has only 3 partitions, you will want to leave concurrent tasks at 1 (1 thread per NiFi node). If the topic has 6 partitions, set concurrent tasks to 2. If the topic has 4 partitions, I would still use 1 concurrent task; NiFi will still pull from all partitions, and the additional partition will be included in a round-robin fashion. If you set as many concurrent tasks as there are partitions in a NiFi cluster, you will end up with only one node pulling from every partition while your other nodes sit idle.

4. Set your run schedule to 500 ms to reduce excessive CPU utilization.
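The concurrent-task rule of thumb in item 3 and the fetch.message.max.bytes advice in item 2 can be written as two small functions. This is a sketch under stated assumptions: the function names are hypothetical, the "1 MB" default is read as 1 MiB, and the case of fewer partitions than nodes, which the article does not cover, simply keeps one task.

```python
# Assumption: the "1 MB" GetKafka default read as 1 MiB (1,048,576 bytes).
ASSUMED_DEFAULT_FETCH_BYTES = 1024 * 1024


def getkafka_concurrent_tasks(partitions: int, nifi_nodes: int = 1) -> int:
    """Concurrent tasks per node, following the rule of thumb above.

    Standalone (nifi_nodes=1): one task per partition.
    Cluster: partitions divided by nodes, rounded down, but at least 1;
    leftover partitions are picked up round robin.
    """
    if partitions < 1 or nifi_nodes < 1:
        raise ValueError("partitions and nifi_nodes must be positive")
    return max(1, partitions // nifi_nodes)


def getkafka_extra_properties(topic_max_message_bytes: int) -> dict:
    """Dynamic property needed only when the topic allows messages above the default."""
    if topic_max_message_bytes > ASSUMED_DEFAULT_FETCH_BYTES:
        return {"fetch.message.max.bytes": str(topic_max_message_bytes)}
    return {}


if __name__ == "__main__":
    for parts in (3, 4, 6):
        print(parts, "partitions on a 3-node cluster ->", getkafka_concurrent_tasks(parts, 3))
    print("standalone, 4 partitions ->", getkafka_concurrent_tasks(4))
    print(getkafka_extra_properties(5 * 1024 * 1024))
```

With a 3-node cluster this yields 1, 1, and 2 tasks for 3, 4, and 6 partitions, matching the examples in item 3.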