Cloudera Community: Community Articles

How to enable user impersonation for SH interprete...

Created on 04-28-2017 09:51 AM - edited 08-17-2019 01:07 PM

ENVIRONMENT and SETUP

I tested the solution below with:

- HDP-2.6.0.3-8

- Ambari 2.5.0.3

To configure this environment, follow the steps described for HDP 2.5 (Zeppelin 0.6) in https://community.hortonworks.com/articles/81069/how-to-enable-user-impersonation-for-sh-interprete....

Because the Zeppelin UI -> Interpreter window has changed, use the following steps for the interpreter configuration.

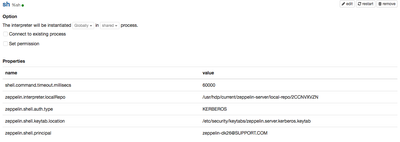

I. Default config looks like below:

II. Make the following changes:

- Edit the sh interpreter.

- Change "The interpreter will be instantiated" from Globally to Per User, and from shared to isolated.

- The User Impersonate check box then becomes available; select it.

- Also remove the following properties:
  - zeppelin.shell.auth.type
  - zeppelin.shell.keytab.location
  - zeppelin.shell.principal

III. The changed configuration looks like this:

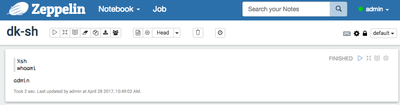

After saving the changes, run the following paragraph as admin:

```
%sh
whoami
```

We get

Created on 08-12-2018 08:21 PM

This does not work in a Kerberized cluster (at least with SSSD using LDAP + MIT Kerberos), because user impersonation in Zeppelin is implemented by running "sudo su - <user>" to switch to the requested user.

In this case the whoami above works, but if you try to execute any command that involves the Hadoop cluster, for example "hdfs dfs", it fails because your user doesn't have the required Kerberos ticket obtained with "kinit".

The "kinit" is done automatically when you log in on the console or over ssh with the user's password (the Linux host contacts the Kerberos server and obtains the ticket). But when you use "su" from root, or "sudo su" as a user listed in sudoers, you do not provide the user's password, so you have to run kinit and enter the user's password manually to get the Kerberos ticket. This is not possible with Zeppelin because it doesn't provide an interactive console.
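A minimal sketch of how to make this failure explicit inside a %sh paragraph: before running any Hadoop command, it checks for a ticket with MIT Kerberos "klist -s", which prints nothing and exits non-zero when no readable, unexpired credentials cache exists for the current user. The helper name require_ticket is hypothetical, and in Zeppelin the "%sh" directive goes on the paragraph's first line (it is not shell syntax, so it is left out of the block).

```shell
#!/usr/bin/env bash
# Sketch only: require_ticket is a hypothetical helper, not part of Zeppelin.
# In Zeppelin, put "%sh" on the first line of the paragraph, then this code.

require_ticket() {
  # "klist -s" produces no output; its exit status is 0 only when a
  # readable, unexpired credentials cache exists for the current user.
  if klist -s; then
    echo "Kerberos ticket found for $(whoami)"
  else
    echo "No valid Kerberos ticket for $(whoami); run kinit first" >&2
    return 1
  fi
}

# Usage in the paragraph (runs the HDFS command only when a ticket exists):
#   require_ticket && hdfs dfs -ls /
```

Checking first gives a short, explicit message instead of the longer authentication error the HDFS client reports when no ticket is available.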

Created on 10-02-2019 06:18 AM

@lvazquez maybe you can directly execute "kinit" in the paragraph to submit your user's credentials to the KDC and obtain the ticket.

I managed to authenticate users from AD while the cluster is Kerberized through a FreeIPA server.

Here is an example:

```
%sh
echo "password" | kinit foo@hortonworks.local
hdfs dfs -ls /
```

Output:

```
Found 12 items
drwxrwxrwt - yarn hadoop 0 2019-10-02 13:53 /app-logs
drwxr-xr-x - hdfs hdfs 0 2019-10-01 15:27 /apps
drwxr-xr-x - yarn hadoop 0 2019-10-01 14:06 /ats
drwxr-xr-x - hdfs hdfs 0 2019-10-01 14:08 /atsv2
drwxr-xr-x - hdfs hdfs 0 2019-10-01 14:06 /hdp
drwx------ - livy hdfs 0 2019-10-02 11:35 /livy2-recovery
drwxr-xr-x - mapred hdfs 0 2019-10-01 14:06 /mapred
drwxrwxrwx - mapred hadoop 0 2019-10-01 14:08 /mr-history
drwxrwxrwx - spark hadoop 0 2019-10-02 15:08 /spark2-history
drwxrwxrwx - hdfs hdfs 0 2019-10-01 15:31 /tmp
drwxr-xr-x - hdfs hdfs 0 2019-10-02 14:23 /user
drwxr-xr-x - hdfs hdfs 0 2019-10-01 15:14 /warehouse
```

I think this way is really ugly, but at least it is possible.
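One way to avoid keeping the plaintext password in the notebook is to obtain the ticket from a keytab with "kinit -kt <keytab> <principal>" (standard MIT Kerberos options: -k uses a keytab, -t names the file). This sketch assumes an administrator has already exported a keytab for the user and placed it, readable only by that user, at the hypothetical default path $HOME/<user>.keytab; kinit_from_keytab is likewise a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch only: kinit_from_keytab and the $HOME/<user>.keytab location are
# assumptions for illustration, not a Zeppelin or HDP convention.

kinit_from_keytab() {
  local principal="$1"
  # Optional second argument overrides the hypothetical default keytab path.
  local keytab="${2:-$HOME/$(whoami).keytab}"

  if [[ -z "$principal" ]]; then
    echo "usage: kinit_from_keytab <principal> [keytab]" >&2
    return 2
  fi
  if [[ ! -r "$keytab" ]]; then
    echo "keytab not readable: $keytab" >&2
    return 1
  fi

  # -k: authenticate with a keytab instead of a password; -t: keytab path.
  kinit -kt "$keytab" "$principal"
}

# Usage in a %sh paragraph, with the principal from the example above:
#   kinit_from_keytab foo@hortonworks.local && hdfs dfs -ls /
```

The keytab is as sensitive as the password itself, so it should stay readable by its owner only.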

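Do not forget to update the auth_to_local rules (the hadoop.security.auth_to_local property, which is defined in core-site rather than hdfs-site) so that principals from both realms map to short user names: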

```
RULE:[1:$1@$0](.*@HORTONWORKS.LOCAL)s/@.*//
RULE:[1:$1@$0](.*@IPA.HORTONWORKS.LOCAL)s/@.*//
```
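To see what these two rules do, here is a rough shell emulation for one-component principals such as foo@HORTONWORKS.LOCAL: "[1:$1@$0]" rebuilds the string "user@REALM" from the principal's first component ($1) and realm ($0), the parenthesized regex must match that whole string, and "s/@.*//" then strips the realm to leave the short name. It only mirrors these two rules (map_principal is a hypothetical name) and is not Hadoop's implementation; on a real cluster the mapping can be checked with "hadoop org.apache.hadoop.security.HadoopKerberosName foo@HORTONWORKS.LOCAL".

```shell
#!/usr/bin/env bash
# Rough emulation of these two auth_to_local rules (illustration only):
#   RULE:[1:$1@$0](.*@HORTONWORKS.LOCAL)s/@.*//
#   RULE:[1:$1@$0](.*@IPA.HORTONWORKS.LOCAL)s/@.*//
# map_principal is a hypothetical helper, not part of Hadoop.

map_principal() {
  local principal="$1"
  local primary="${principal%@*}"   # everything before the last '@'
  local realm="${principal##*@}"    # everything after the last '@'

  # [1:...] only applies to one-component principals (no "service/host").
  if [[ "$principal" != *@* || "$primary" == */* ]]; then
    echo "neither rule applies: $principal" >&2
    return 1
  fi

  # Format "$1@$0": first component, "@", realm.
  local base="${primary}@${realm}"

  # Each rule's regex must match the whole formatted string.
  local re1='^.*@HORTONWORKS.LOCAL$'
  local re2='^.*@IPA.HORTONWORKS.LOCAL$'
  if [[ $base =~ $re1 || $base =~ $re2 ]]; then
    # "s/@.*//" removes the realm, leaving the short user name.
    sed 's/@.*//' <<< "$base"
  else
    echo "neither rule applies: $principal" >&2
    return 1
  fi
}
```

Hadoop evaluates the rules in order and uses the first one that matches; here the two regexes match disjoint realms, so their order does not change the result.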