Created on 01-04-2016 04:54 PM - edited 08-17-2019 01:32 PM

Using NiFi to provide fault tolerance across data centers (The Multi-Zone approach)

The purpose of this document is to show how to take a NiFi cluster and add fault tolerance using multiple zones/regions. You can think of these zones/regions as physically separated data centers (they may not even be geographically co-located). The document will cover a few different configurations: one large cluster that spans multiple zones; two independent clusters (each located in a different zone) with only one zone active at a time; and finally multiple clusters (each located in a different zone) all active at the same time. Each configuration has pros and cons, which will be covered. Depending on your deployment model, one configuration may be better suited than another.

A NiFi cluster consists of a single NiFi Cluster Manager (NCM) and 1 to N NiFi Nodes. NiFi does not set a limit on the number of Nodes that can exist in a cluster. While a NiFi cluster does offer a level of fault tolerance through multiple Nodes, this document dives deeper into fault tolerance via a multiple-zone deployment approach. The role of the NCM is to provide a single point of management for all the Nodes in a given cluster. Changes a Dataflow Manager (DFM) makes on the NiFi canvas/graph, controller services or reporting tasks are pushed out to every Node. DFMs are the people who are responsible for, and have permission to, construct dataflows within NiFi by stringing together multiple NiFi processors. The connected Nodes all operate independently of each other. The Nodes all communicate with the NCM via heartbeat messages. These heartbeat messages allow the NCM to gather together everything each Node is doing and report it back to the user in a single place. While the NCM never handles actual data itself, it is a pivotal part of the cluster. The NCM plays an important role when it comes to using Remote Process Groups (RPG) to send/pull FlowFiles from one NiFi to another. The process of sending data between NiFi instances using RPGs is known as Site-To-Site (S2S). We will see later that the biggest consideration when setting up fault tolerance revolves around dealing with S2S communications.

Since the NCM (at the time of writing this) serves as a single point of failure for access to a NiFi cluster for monitoring and configuration, we will also discuss recovery of a lost NCM in this document. The loss of an NCM does not inhibit the Nodes from continuing to process their data. Its loss simply means the user cannot make changes to or monitor the cluster until the NCM is restored.

Let's take a quick high-level look at how S2S works when talking to a NiFi cluster. Source NiFi systems (which could be standalone instances of NiFi or Nodes in a NiFi cluster) add an RPG to their graph. The RPG requires a URL to the target NiFi system. Since we are talking to a NiFi cluster in this case, the URL provided would be that of the NCM. Why the NCM? After all, it does not want any of your data, nor will it accept it. The reason is that the NCM knows all about its cluster (how many Nodes are currently connected, their addresses on the network, how much data they have queued, etc.). This is a great source of information that the source system uses for smart load-balanced delivery of data. So the target NiFi NCM provides this information back to the source NiFi instance(s). The source system(s) then smartly send data directly to those Nodes via the S2S port configured on each of them.

So why is S2S so important when talking about fault tolerance? Since the source NiFi instances all communicate with a single NCM to find out about all the Nodes that data can be sent to, we have a single point of failure. How bad is that? In order to add an RPG to the graph of any source NiFi, that source NiFi must be able to communicate with the target NCM. During that time, and at a regular interval thereafter, the target NCM updates the source NiFi(s) with Node status. Should the target NCM become unreachable, the source NiFi(s) will continue to use the last status they received to keep trying to send data to the Nodes. Provided those Nodes have not also gone down or become unreachable, dataflow will continue. It is important to understand that since the source does smart data distribution to the target cluster's Nodes based on the status updates it gets, during a target NCM outage the load-balanced behavior is no longer that smart. What do I mean by this? The source will continue to distribute data to the target Nodes under the assumption that the last known strategy is still the best strategy. It will continue to make that assumption until the target NCM can be reached for new Node statuses. Ok great, my data is still flowing even without the NCM.
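The "last known status" behavior described above can be illustrated with a small toy model. This is only a sketch and not NiFi's implementation: the class name, the `fetch_status` callable, the `TargetUnreachable` exception, and the queue-based weighting are all assumptions made for illustration.

```python
import random


class TargetUnreachable(Exception):
    """Raised by the hypothetical status fetcher when the target NCM cannot be reached."""


class SourcePeerCache:
    """Toy model of a source NiFi remembering the last Node status it received."""

    def __init__(self, fetch_status):
        # fetch_status is a hypothetical callable returning
        # {node_hostname: queued_flowfile_count} or raising TargetUnreachable.
        self._fetch_status = fetch_status
        self.last_known = {}
        self.stale = True

    def refresh(self):
        """Ask the NCM for fresh status; keep the old status if it is unreachable."""
        try:
            self.last_known = dict(self._fetch_status())
            self.stale = False
        except TargetUnreachable:
            # Keep distributing with the last known status.
            self.stale = True
        return self.last_known

    def pick_node(self, rng=random):
        """Pick a Node, favoring Nodes that reported smaller queues (assumed policy)."""
        if not self.last_known:
            raise RuntimeError("no node status has ever been received")
        nodes = list(self.last_known)
        weights = [1.0 / (1 + self.last_known[n]) for n in nodes]
        return rng.choices(nodes, weights=weights, k=1)[0]
```

While `stale` is true, `pick_node` keeps using the old queue counts, which is why distribution during an NCM outage is "no longer that smart".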

Here is what the dataflow for delivery via S2S would look like on a source NiFi: one or more NiFi processors connected to an RPG.

This RPG would then load balance and smartly deliver data to one or more input ports on each of the Nodes in the destination NiFi cluster.

This single point of failure (NCM) also affects the DFM(s) of the target NiFi because without the NCM the UI cannot be reached. So no changes can be made to the graph, no monitoring can be done directly, and no provenance queries can be executed until the NCM is brought back online.

Does fault tolerance exist outside of S2S? That depends on the design of the overall dataflow. The nodes in a cluster provide a level of fault tolerance. Source systems that are not NiFis or NiFis that are exchanging data without using S2S would need to be configured to load-balance data to all the Nodes directly. The NCM has no role in negotiating those dataflow path(s). The value added with S2S is that scaling up or down a cluster is made easy. Source systems are automatically made aware of what nodes are available to them at any given time.

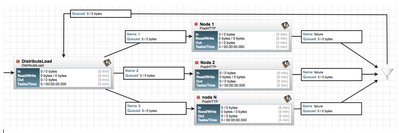

For those transfer methods other than S2S, the infrastructure needs to support having these non-NiFi sources failover data delivery between nodes in the one or more NiFi clusters. Here is an example of one such load-balanced delivery directly to a target cluster’s nodes with failover:

You can see here that if one Node should become unreachable, the data will route to failure and get redistributed to the other nodes.
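The failover idea for non-S2S delivery can be sketched in a few lines. This is a minimal illustration, not a NiFi processor: `send` is a hypothetical transport callable that raises `ConnectionError` when a Node is unreachable, and the round-robin order is an assumption.

```python
def deliver_with_failover(items, nodes, send):
    """Round-robin items across nodes; on failure, retry on the remaining nodes.

    send(node, item) is a hypothetical transport callable that raises
    ConnectionError when the node is unreachable.
    Returns a dict mapping each item to the node that accepted it.
    """
    delivered = {}
    start = 0
    for item in items:
        # Try every node once, beginning with the next one in rotation.
        for offset in range(len(nodes)):
            node = nodes[(start + offset) % len(nodes)]
            try:
                send(node, item)
            except ConnectionError:
                continue  # "route to failure", then try the next node
            delivered[item] = node
            break
        else:
            # Every node refused the item.
            raise RuntimeError(f"no node accepted {item!r}")
        start += 1
    return delivered
```

With one Node down, its share of the data ends up on the remaining Nodes, which is the redistribution the paragraph above describes.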

The examples that follow will only cover the S2S data transport mechanism because it is used to transfer data (specifically NiFi FlowFiles) between NiFi instances/clusters and the NCM plays an important role in that process. The above non-S2S delivery approach does not interface with the NCM at all.

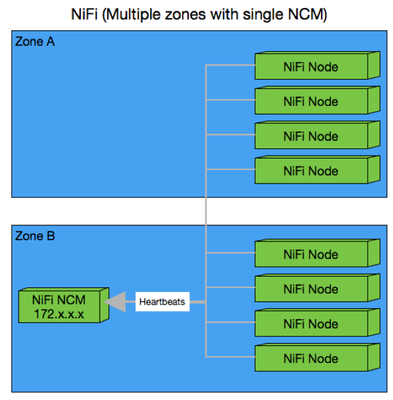

Single NCM with nodes spanning multiple zones

The first configuration we will discuss consists of a single NCM with nodes spanning multiple zones. This will be our active-active setup since the nodes in each zone will always be processing data. To provide fault tolerance each zone should be able to handle the entire load of data should the other zone(s) go down. In a two-zone scenario during normal operations, the load in either zone should not exceed 50% system resource utilization.
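The 50% figure follows from simple arithmetic, which can be generalized as a rough planning aid. The sketch below assumes equally sized zones and evenly spread load; the function name and the `surviving_zones` parameter are illustrative, not anything NiFi provides.

```python
def max_steady_state_utilization(total_zones, surviving_zones=1):
    """Highest per-zone utilization that still lets the survivors carry all the load.

    Assumes equally sized zones and evenly distributed load.
    With the default surviving_zones=1, a single zone must be able to
    absorb the entire load on its own.
    """
    if total_zones < 1 or not 1 <= surviving_zones <= total_zones:
        raise ValueError("need 1 <= surviving_zones <= total_zones")
    return surviving_zones / total_zones


# Two zones, one must carry everything: 0.5 (the 50% from the text).
print(max_steady_state_utilization(2))
```

For three zones where any single zone must be able to carry everything, the same formula gives roughly 33% per zone during normal operations.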

The NCM cannot exist in more than one zone in this setup, so we must place the NCM in one zone or the other.

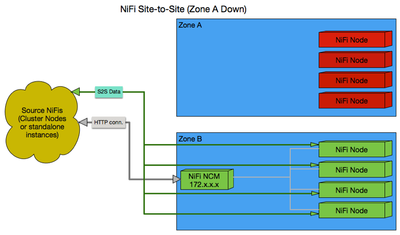

What about S2S where the NCM plays a more important role? Here is a quick look at how S2S would work for our multi-zone NiFi cluster:

Let's take a look at a couple of possible scenarios with this type of configuration.

Scenario 1: Zone A Down

Here we explore what would happen if we were to lose Zone A. The NCM is still reachable in Zone B, so the source NiFi(s) will still be able to reach it and get status on the remaining connected Nodes also in Zone B.

Zone B is now processing 100% of the data until Zone A is brought back online. Once back online, the NCM will again receive heartbeats from the Zone A Nodes and report those nodes as once again available to the source NiFi(s).

It is also possible that not all NiFi nodes in Zone A are down. If that is the case, the NCM will still report those Nodes as available and data will still be sent to them.

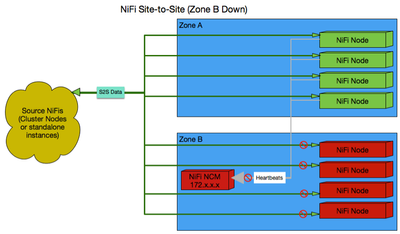

Scenario 2: Zone B Down

In this scenario the source NiFi(s) can no longer talk to the NCM to get an updated list of available nodes or status on each of the connected nodes.

The source NiFi(s) will continue to try to send data based on the last successful status they received before Zone B went down. While the source systems will continue to try to send data to Zone B Nodes, those attempts will fail and that data will fail over to the Zone A Nodes. In this scenario Zone A takes on 100% of the data until Zone B is back online.

Based on what we have learned from the preceding examples of a single NCM with nodes spanning multiple zones, what are the Pros and Cons to this configuration?

PROs:

- 1. All Nodes are always running the current version of the dataflow in every zone.

- 2. Since data is flowing through all Nodes in every zone, end-to-end functionality of the dataflows can be verified within each zone. This avoids the surprises that can accompany bringing backup systems online.

- 3. Provides better dataflow change management for clusters with constantly changing dataflows. No need to back up and transfer flow.tar to a separate backup cluster every time a change is made.

- 4. While no zone failures exist, extra resource headroom is available to support spikes in processing load.

- 5. For S2S usage, there is no complex dataflow design to manage on source NiFis.

- 6. Data from source NiFis to target NiFis using S2S will only stop if all Nodes in all zones become unavailable. (No manual intervention on source NiFis is needed to get data transferring to target Nodes that are still online.)

CONs:

- 1. DFM(s) lose access to the cluster if the zone containing the NCM goes down.

- 2. If the zone that is lost contained the NCM, source NiFi(s) will continue trying to send data to all Nodes in every zone because they are no longer receiving Node statuses from that NCM.

- 3. The original NCM must be returned to service to restore full cluster operations.

- 4. Standing up a new NCM requires reconfiguring all Nodes and restarting them so they connect to it (see the next section for how to stand up a new NCM).

- 5. Network latency between zones can affect the rate at which changes can be made to dataflows.

- 6. Network latency between zones can affect how long it takes to return queries made against the cluster (Provenance).

This configuration is typically used when dataflows are continuously changing (a development-in-production model). It allows you to make real-time changes to the dataflow across multiple zones. The dataflows on each Node are kept identical (not just in design; the component IDs for all processors and connections are identical as well).

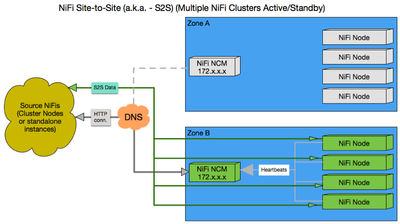

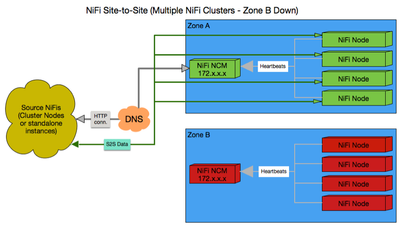

Multi-Cluster Fault Tolerance (One cluster in each zone)

(One Cluster Active at a time)

In this configuration you have two or more completely separate NiFi clusters. One cluster serves as your active cluster while the other remains off in a standby state. This type of configuration would rely on some process external to and not provided by NiFi to facilitate failover between clusters.

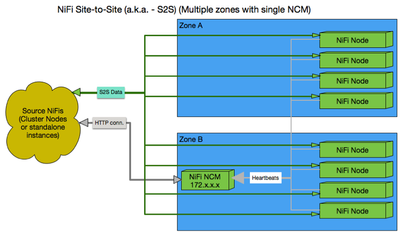

DNS plays an important role in this type of configuration because of how S2S works. We will explain why as we walk through the scenario. Here is how a two-zone setup would look:

Here we have all the source systems sending data to a NiFi cluster running in Zone B. We'll refer to this as our Active or Primary cluster for now. The diagram above shows the use of S2S to send data from source NiFis into this cluster.

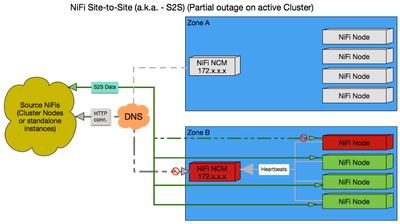

Scenario 1: Partial Outage on Active Cluster

Let's look at the simplest case where connectivity to the NCM or just a few Nodes in Zone B is lost. The loss of the NCM only affects S2S, so we will only discuss that mode of data exchange between systems here. If any of the source systems using S2S lose connectivity to the NCM in Zone B, those source systems will continue to use the last known state of the Nodes to keep sending data to them. It is also possible that, in addition to losing connectivity with the NCM, connectivity to one or more of the Nodes may be lost. In that case, the source systems will continue to try to send data to the last list of active Nodes received from the NCM.

Here we see loss of connectivity with the NCM and one Node. If the NCM went down before the Node, the list of Nodes the source systems use for delivery will still include that down Node. Attempts to send data to that down Node will fail and cause the sending systems to try to send it to one of the other Nodes from the list they are working from. In this scenario the active cluster is in a degraded state. The Nodes that are still operating are carrying the full workload.

Scenario 2: Active Cluster Completely Down

Let's say there was a complete outage of Zone B. In that case the sending NiFi(s) would be unable to send any data and would start to queue, creating a backlog of data. In order to continue processing while Zone B is down, the NiFi cluster in Zone A will need to be started, but first we need to discuss how Zone A should already have been set up.

- 1. The NCM in Zone A must be identical in configuration to the NCM in Zone B with the exception of IP address. That identical configuration must include the publicly resolvable hostname.

- a. Why is this important? The RPGs set up on the source NiFi(s) are trying to communicate with a specific NCM. If the NCM in Zone A uses the same hostname, no changes need to be made to the flow on any of the various source NiFi(s).

- 2. There is no need for the Nodes to be identical in either hostname or IP to those in the other zone. Once source NiFi(s) connect to the new NCM, they will get a current listing of the Nodes in Zone A.

- 3. All Nodes should be in a stopped state with no flow.xml.gz file or template directory located in their conf directories.

- 4. The flow.tar file located on the Zone B NCM should be copied to the conf directory of the Zone A NCM after any changes to the dataflow. How often this copy is needed depends on how often changes are made to the dataflow in Zone B.
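Keeping the standby NCM's flow.tar current is the kind of task that is easy to script. The following is a minimal sketch, assuming both conf directories are reachable as local paths (for example through a mounted share); the function names are hypothetical, and in a real deployment the copy step would more likely be scp or rsync between hosts.

```python
import hashlib
import shutil
from pathlib import Path


def file_digest(path):
    """Return the SHA-256 hex digest of a file, or None if it does not exist."""
    path = Path(path)
    if not path.is_file():
        return None
    return hashlib.sha256(path.read_bytes()).hexdigest()


def sync_flow_tar(active_conf_dir, standby_conf_dir, name="flow.tar"):
    """Copy flow.tar to the standby NCM conf directory when its content changed.

    Both directories are assumed to be local paths; swap shutil.copy2
    for a remote copy otherwise. Returns True if a copy was made.
    """
    source = Path(active_conf_dir) / name
    target = Path(standby_conf_dir) / name
    if not source.is_file():
        raise FileNotFoundError(source)
    if file_digest(source) == file_digest(target):
        return False  # standby already has the current flow
    # Copy to a temporary name first so a partial file never replaces a good one.
    temp = target.with_name(target.name + ".tmp")
    shutil.copy2(source, temp)
    temp.replace(target)
    return True
```

Run from cron on whatever schedule matches how often the dataflow in the active zone changes.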

So Zone A is now set up so that it is ready to take over running your dataflow. Let's walk through the process that would need to occur in this scenario where the complete loss of Zone B has occurred. This can be done manually, but you may benefit from automating this process:

- 1. Zone B is determined to be down or NiFi(s) running in Zone B are unreachable.

- 2. Zone A's NCM is started using the most recent version of the flow.tar from Zone B.

- 3. Zone A's Nodes are started. These Nodes connect to the NCM and obtain the flow.xml.gz and templates from the NCM.

- 4. The DNS entry must be changed to resolve Zone B's NCM hostname to the IP of Zone A's NCM. (Reminder: the Zone A NCM should be using the same hostname as the Zone B NCM, only with a different IP address.)

*** This DNS change is necessary so that none of the source systems sending data via S2S need to change their configuration.
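If you choose to automate the steps above, the control flow might look like the sketch below. NiFi provides none of this: `probe`, `start_ncm`, `start_nodes`, and `repoint_dns` are hypothetical callables you would implement for your own environment (health check, service start commands, DNS provider API).

```python
import time


def zone_is_down(probe, attempts=3, delay_seconds=0.0):
    """Declare the zone down only if every probe attempt fails.

    probe is a hypothetical callable returning True when the active
    zone's NCM (or any Node) answers.
    """
    for attempt in range(attempts):
        if probe():
            return False
        if attempt < attempts - 1:
            time.sleep(delay_seconds)
    return True


def run_failover(probe, start_ncm, start_nodes, repoint_dns,
                 attempts=3, delay_seconds=0.0):
    """Run the failover steps in order; returns the names of the steps executed."""
    if not zone_is_down(probe, attempts, delay_seconds):
        return []  # active zone still answers, nothing to do
    executed = []
    steps = (
        ("start_ncm", start_ncm),      # standby NCM with the latest flow.tar
        ("start_nodes", start_nodes),  # standby Nodes fetch the flow from the NCM
        ("repoint_dns", repoint_dns),  # NCM hostname now resolves to the standby IP
    )
    for name, step in steps:
        step()
        executed.append(name)
    return executed
```

Requiring several failed probes before acting is a design choice to avoid failing over on a brief network blip; tune `attempts` and `delay_seconds` to your tolerance for downtime versus false alarms.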

There are Pros and Cons to this configuration as well:

PROs:

- 1. The standby cluster can be turned on quickly, returning full access to the users so that monitoring and changes to the dataflow can be done.

- 2. The ability to modify dataflows is not affected by Nodes being down in the other zone. Any modifications made would need to be copied to Zone B, which is now the new standby.

- 3. After switching to the standby zone, full S2S functionality is restored and is not degraded by the lack of an NCM.

- 4. Cluster communications between Nodes and the NCM are not affected by network latency between zones.

CONs:

- 1. To avoid breaking S2S, DNS must be used to redirect traffic to the new NCM IP. This process occurs outside of NiFi.

- 2. The process of detecting a complete zone outage and switching from one zone to another is not built into NiFi.

- 3. Some downtime in dataflow will exist while transitioning from one zone to the other.

- 4. Zone B, while now the standby, will still need to be brought back online to work off any data it had in its queue when it went offline.

This configuration is typically used when high latency exists between zones. The latency does not affect dataflow, but can affect the amount of time it takes for changes to be made on the graph and the amount of time it takes for provenance and status queries to return. External scripts/cron jobs are needed to keep the backup zone in sync with the active zone.

--------------------------------------------------------------------------------------------

Part 2 of this tutorial can be found here:

https://community.hortonworks.com/articles/8631/ho...

Part 2 covers Multi-Cluster Fault Tolerance (One cluster in each zone with All Clusters Active all the time)