Community Articles

- Cloudera Community

- How to install and run Spark 2.0 on HDP 2.5 Sandbo...

Created on 08-23-2016 07:53 PM - edited 08-17-2019 10:37 AM

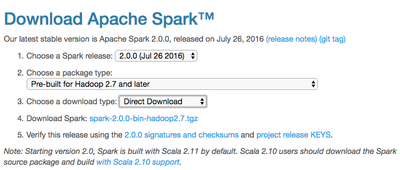

First, you should go to the Apache Spark downloads web page to download Spark 2.0.

Link to Spark downloads page:

http://spark.apache.org/downloads.html

Set your download options as shown in the screenshot, then click on the link next to "Download Spark" (i.e. "spark-2.0.0-bin-hadoop2.7.tgz").

This will download the gzipped tarball to your computer.

Next, start the HDP 2.5 Sandbox image in your virtual machine (using either VirtualBox or VMware Fusion). Once the image has booted, open a Terminal session on your laptop and copy the tarball to the VM. Here is an example using the 'scp' (secure copy) command, although you can use any file copy mechanism.

scp -P 2222 spark-2.0.0-bin-hadoop2.7.tgz root@127.0.0.1:~

This will copy the file to the 'root' user's home directory on the VM. Next, log in (via ssh) to the VM:

ssh -p 2222 root@127.0.0.1

Once logged in, extract the tarball with this command:

tar -xvzf spark-2.0.0-bin-hadoop2.7.tgz
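If the extraction fails or the download looks suspect, a quick look inside the archive can help. The following is a minimal sketch, not part of the original steps: `check_tarball` is a hypothetical helper that lists the archive without extracting it and confirms that every entry sits under the expected top-level directory.

```shell
#!/usr/bin/env bash
# Sketch: verify a gzipped tarball is readable and contains only one
# expected top-level directory. check_tarball is a hypothetical helper name.
check_tarball() {
  local archive="$1" expected_dir="$2"
  local listing entry

  # tar -tzf lists entries without extracting; it fails on a corrupt archive.
  listing="$(tar -tzf "$archive")" || return 1

  # Every entry must be the expected directory itself or a path below it.
  while IFS= read -r entry; do
    case "$entry" in
      "$expected_dir"|"$expected_dir"/*) ;;
      *)
        echo "unexpected entry in $archive: $entry" >&2
        return 1
        ;;
    esac
  done <<< "$listing"

  echo "ok: $archive contains only $expected_dir/"
}
```

For the download in this article, the call would presumably be `check_tarball ~/spark-2.0.0-bin-hadoop2.7.tgz spark-2.0.0-bin-hadoop2.7`.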

You can now navigate to the "seed" directory already created for Spark 2.0, and move the contents from the unzipped tar file into the current directory:

    cd /usr/hdp/current/spark2-client
    mv ~/spark-2.0.0-bin-hadoop2.7/* .

Next, change the ownership of the new files to match the local directory:

chown -R root:root *

Now, set up the SPARK_HOME environment variable for this session (or permanently by adding it to ~/.bash_profile):

export SPARK_HOME=/usr/hdp/current/spark2-client
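To make the setting permanent, one option is to append the export line to ~/.bash_profile only when it is not already there, so that repeating the step does not add duplicates. This is a sketch under that assumption; `persist_spark_home` is a hypothetical helper, not something shipped with the Sandbox.

```shell
#!/usr/bin/env bash
# Sketch: add the SPARK_HOME export to a profile file exactly once.
# persist_spark_home is a hypothetical helper name.
persist_spark_home() {
  local profile="${1:-$HOME/.bash_profile}"
  local line='export SPARK_HOME=/usr/hdp/current/spark2-client'

  # Create the file if it does not exist yet.
  touch "$profile"

  # grep -qxF: quiet, whole-line, fixed-string match.
  # Append the line only if no identical line is present.
  grep -qxF "$line" "$profile" || printf '%s\n' "$line" >> "$profile"
}
```

Calling `persist_spark_home` with no argument targets ~/.bash_profile; the change takes effect at the next login or after sourcing the file.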

Next, create the config files in the "conf" directory so that we can edit them to configure Spark:

    cd conf
    cp spark-env.sh.template spark-env.sh
    cp spark-defaults.conf.template spark-defaults.conf

Edit the config files with a text editor (such as vi or vim) and make sure the environment variables and parameters below are set. Add the following lines to 'spark-env.sh', then save the file:

    HADOOP_CONF_DIR=/etc/hadoop/conf
    SPARK_EXECUTOR_INSTANCES=2
    SPARK_EXECUTOR_CORES=1
    SPARK_EXECUTOR_MEMORY=512M
    SPARK_DRIVER_MEMORY=512M
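The spark-env.sh lines above carry no `export` keyword. As far as I know, Spark's launcher scripts source this file with shell auto-export switched on, which is why plain assignments are enough. The sketch below mimics that behaviour in a subshell to show which values a given spark-env.sh would hand to Spark; `show_spark_env` is a hypothetical helper and the sourcing mechanism is an assumption about Spark's scripts, not something stated in this article.

```shell
#!/usr/bin/env bash
# Sketch: print the Spark-related variables that a spark-env.sh file would
# export when sourced with auto-export enabled.
# show_spark_env is a hypothetical helper name.
show_spark_env() {
  local env_file="$1"
  (
    # set -a marks every variable assigned from here on for export.
    set -a
    . "$env_file"
    set +a
    # env prints exported variables only; keep the ones set in this article.
    env | grep -E '^(HADOOP_CONF_DIR|SPARK_(EXECUTOR|DRIVER)_[A-Z]+)=' | sort
  )
}
```

On the Sandbox this would be called as `show_spark_env /usr/hdp/current/spark2-client/conf/spark-env.sh`. Because the work happens in a subshell, the current session's environment is left untouched.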

Now, replace the lines in the "spark-defaults.conf" file to match this content, and then save the file:

    spark.driver.extraLibraryPath /usr/hdp/current/hadoop-client/lib/native
    spark.executor.extraLibraryPath /usr/hdp/current/hadoop-client/lib/native
    spark.driver.extraJavaOptions -Dhdp.version=2.5.0.0-817
    spark.yarn.am.extraJavaOptions -Dhdp.version=2.5.0.0-817
    spark.eventLog.dir hdfs:///spark-history
    spark.eventLog.enabled true
    # Required: setting this parameter to 'false' turns off ATS timeline server for Spark
    spark.hadoop.yarn.timeline-service.enabled false
    #spark.history.fs.logDirectory hdfs:///spark-history
    #spark.history.kerberos.keytab none
    #spark.history.kerberos.principal none
    #spark.history.provider org.apache.spark.deploy.history.FsHistoryProvider
    #spark.history.ui.port 18080
    spark.yarn.containerLauncherMaxThreads 25
    spark.yarn.driver.memoryOverhead 200
    spark.yarn.executor.memoryOverhead 200
    #spark.yarn.historyServer.address sandbox.hortonworks.com:18080
    spark.yarn.max.executor.failures 3
    spark.yarn.preserve.staging.files false
    spark.yarn.queue default
    spark.yarn.scheduler.heartbeat.interval-ms 5000
    spark.yarn.submit.file.replication 3
    spark.ui.port 4041
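The two `-Dhdp.version` values need to match the HDP build installed on the VM. One way to look it up, assuming the usual layout in which /usr/hdp holds one directory per installed build next to the "current" directory, is sketched below. `list_hdp_versions` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: list directory names under an HDP root that look like build
# versions (for example 2.5.0.0-817).
# list_hdp_versions is a hypothetical helper name.
list_hdp_versions() {
  local root="${1:-/usr/hdp}"
  local d name

  for d in "$root"/*/; do
    name="$(basename "$d")"
    case "$name" in
      # Four dot-separated numeric parts, a dash, then a build number.
      [0-9]*.[0-9]*.[0-9]*.[0-9]*-[0-9]*) echo "$name" ;;
    esac
  done
}
```

Running `list_hdp_versions` on the Sandbox should print the string to place after `-Dhdp.version=` in both lines of spark-defaults.conf.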

Now that your config files are set up, change directory back to your $SPARK_HOME:

cd /usr/hdp/current/spark2-client

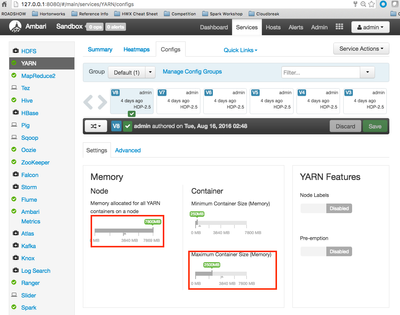

Before running a Spark application, you need to change two YARN settings so that YARN can allocate enough memory to run jobs on the Sandbox. To change them, log in to the Ambari console (http://127.0.0.1:8080/) and click on the "YARN" service along the left-hand side of the screen. Once the YARN Summary page has loaded, find the "Config" tab along the top and click on it. Scroll down to the "Settings" section (not "Advanced") and change the settings described below:

Note: Use the Edit/pencil icon to set each parameter to the exact values below.

1) Memory Node (Memory allocated for all YARN containers on a node) = 7800MB

2) Container (Maximum Container Size (Memory)) = 2500MB

Alternatively, if you click the "Advanced" tab next to Settings, here are the exact config parameter names you want to edit:

    yarn.scheduler.maximum-allocation-mb = 2500MB
    yarn.nodemanager.resource.memory-mb = 7800MB
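A rough way to see why the container limit matters: on YARN, each executor container is requested as executor memory plus the configured memory overhead. The sketch below runs that arithmetic with the values used in this article (2g executors from the spark-submit command later on, 200 MB overhead from spark-defaults.conf). It ignores YARN's rounding of requests up to its minimum allocation size, so treat the result as an approximation. `container_request_mb` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: approximate the YARN container size Spark requests per executor
# and compare it with the limits set in Ambari. Rounding by YARN is ignored.
# container_request_mb is a hypothetical helper name.
container_request_mb() {
  local executor_mb="$1" overhead_mb="$2"
  echo $(( executor_mb + overhead_mb ))
}

executor_mb=2048    # --executor-memory 2g
overhead_mb=200     # spark.yarn.executor.memoryOverhead
max_alloc_mb=2500   # yarn.scheduler.maximum-allocation-mb
node_mb=7800        # yarn.nodemanager.resource.memory-mb

request_mb="$(container_request_mb "$executor_mb" "$overhead_mb")"
echo "per-executor container request: ${request_mb} MB"

if (( request_mb <= max_alloc_mb )); then
  echo "request fits within the maximum container size"
else
  echo "request exceeds the maximum container size"
fi

# Integer division: how many containers of this size fit on the node.
echo "containers of this size per node: $(( node_mb / request_mb ))"
```

With these numbers the request comes to 2248 MB, which is under the 2500 MB maximum container size.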

After editing these parameters, click on the green "Save" button above the settings in Ambari. You will then need to restart all affected services. (Note: a yellow "Restart" icon should appear once Ambari has saved the config settings; click on it and select "Restart all affected services".) It may be faster to navigate to the Hosts page via its tab, click on the single host, and look for the "Restart" button there.

Make sure that YARN restarts successfully. The screenshot in this section shows the new YARN settings.

Finally, you are ready to run the packaged SparkPi example using Spark 2.0. In order to run SparkPi on YARN (yarn-client mode), run the command below, which switches user to "spark" and uses spark-submit to launch the precompiled SparkPi example program:

su spark --command "bin/spark-submit --class org.apache.spark.examples.SparkPi --master yarn --deploy-mode client --driver-memory 2g --executor-memory 2g --executor-cores 1 examples/jars/spark-examples*.jar 10"

You should see lots of lines of debug/stderr output, followed by a results line similar to this:

Pi is roughly 3.144799144799145
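Since the result line is easy to miss among the log output, a small filter can pull it out. This is a sketch that assumes the result line starts with "Pi is roughly", as in the sample above; `extract_pi` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: read job output on stdin and print only the first result line.
# extract_pi is a hypothetical helper name.
extract_pi() {
  # -m 1 stops after the first matching line.
  grep -m 1 '^Pi is roughly'
}
```

One way to use it is to pipe the spark-submit command through it, for example `su spark --command "..." 2>/dev/null | extract_pi`, on the assumption that Spark's log lines go to stderr while the result goes to stdout.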

Note: To run the SparkPi example in local mode, without the use of YARN, you can run this command:

./bin/run-example SparkPi

Created on 08-24-2016 01:45 PM

As far as I know, Spark 2.0 will ship as a Technical Preview (TP) in HDP 2.5, so you don't need to install it manually from the Apache community release.

Created on 08-29-2016 06:43 PM

Can it run both Spark 1.6.1 and Spark 2.0, or just Spark 2.0?

Created on 09-06-2016 03:27 AM

Yes, you can install both Spark 1.6 and 2.0 in HDP 2.5.

Created on 11-19-2017 01:15 AM

This is really great. I think the key point here is the -Dhdp.version setting, which still works for HDP version 2.6.3.0-235:

- spark.driver.extraJavaOptions -Dhdp.version=2.5.0.0-817

- spark.yarn.am.extraJavaOptions -Dhdp.version=2.5.0.0-817

Created on 01-31-2018 09:20 AM

How do I set the JAVA_HOME path?