- How to set up CI-CD workflows in Cloudera Machine ...

Created on 01-30-2024 10:03 PM - edited 01-30-2024 10:39 PM

Summary

In this article, we learn how to use Cloudera Machine Learning (CML) to automate machine learning workflows between development and production environments using an automation toolchain such as GitLab. The solution can be extended to other platforms such as GitHub (using GitHub Actions) or Atlassian (using Bitbucket Pipelines).

Some prior understanding of how a DevOps pipeline works and how it applies to machine learning is assumed here. Good resources for a deep dive are available online; the introduction below provides just enough information to follow this article.

A (very) brief Introduction to CI-CD for Machine Learning

A DevOps pipeline is a set of automated processes and tools that allows software development teams to build and deploy code to a production environment. A few of the important components of the DevOps pipeline include Continuous Integration (CI) and Continuous Delivery and Deployment (CD), where:

- Continuous Integration is the practice of making frequent commits to a common code repository.

- Continuous Delivery and Deployment is the practice of keeping the main (trunk) branch of an application's source code in a releasable state.

Integrating DevOps practices into machine learning, popularly called MLOps, allows ML teams to automate the lifecycle of a machine learning workflow.

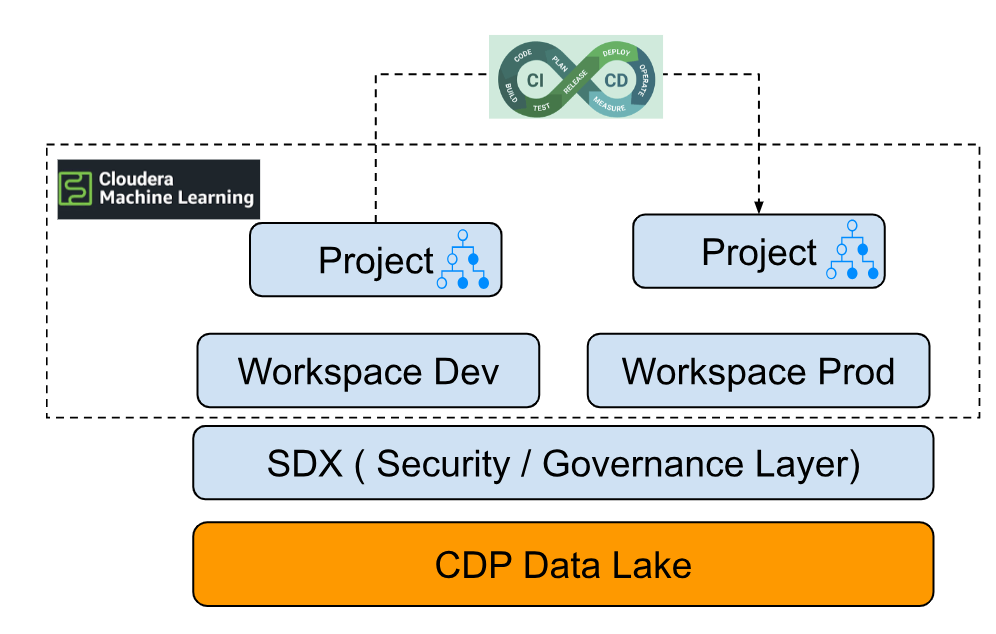

Below is an explanation of a simple pattern for setting up CI-CD in Cloudera Machine Learning:

- The CDP Data Lake is the storage layer for all formats of data that will be processed by the machine learning workloads. The SDX layer in the Cloudera platform provides fine-grained access security and establishes the framework for auditability and lineage in the data lake.

- The workspace is a managed Kubernetes (K8s) layer (a cluster or a namespace) that segregates the compute profiles of the development and production workloads.

- Projects are generally the compilation of artifacts including models, datasets, folders, and applications that will be developed to address one or many business use cases.

- In this case, we want to automate the CI/CD pipeline between the projects in the Development and Production workspace.

The Building Blocks of a Simple CI-CD Pipeline in CML

Overview:

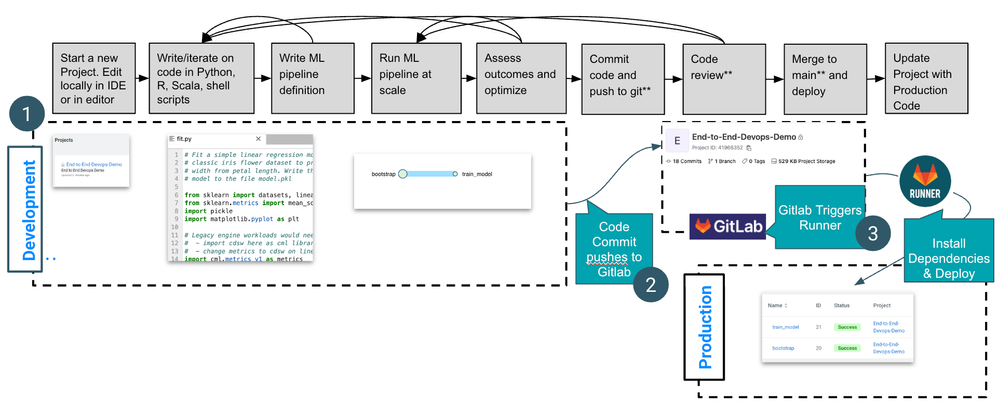

The picture above shows a simple CI-CD pipeline flow in CML. It builds on the development and production workspaces introduced earlier and adds automated deployments between the two, using GitLab as the DevOps toolchain. The three activity groups labelled in the picture are integral to this automation workflow, as explained below:

| Activity Group | Activity Description | Details |
|---|---|---|
| 1 | ML Development | Includes all iterative data science development activities, including ingestion, data munging, model training, evaluation, and test deployments in Development to ensure satisfactory performance. |
| 2 | Continuous Integration | The developer commits the code to a branch of the repository. If the commit is permitted on the main branch, it acts as the trigger for production deployments. If the commit is made on a separate branch, a pull request/merge request that is approved for merging into the main branch acts as the trigger for deployment. |
| 3 | Continuous Deployment | A DevOps pipeline toolchain then deploys the workload from the committed branch into the target project in the production workspace. |
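
The trigger rule in activity group 2 can be sketched as a small decision function. This is a minimal sketch, not part of the original workflow: it assumes the pipeline job can read GitLab's predefined `CI_COMMIT_BRANCH` and `CI_DEFAULT_BRANCH` variables, and the function name `should_deploy` is hypothetical. An approved merge request ends up as a commit on the main branch, so both cases in the table reduce to the same check.

```python
import os
from typing import Mapping


def should_deploy(env: Mapping[str, str] = os.environ) -> bool:
    """Return True when the pipeline runs for a commit on the default branch."""
    # CI_COMMIT_BRANCH is not set in merge request pipelines, so an
    # unmerged request never qualifies for a production deployment.
    branch = env.get("CI_COMMIT_BRANCH", "")
    # Falling back to "main" is an assumption for runs outside GitLab.
    default_branch = env.get("CI_DEFAULT_BRANCH", "main")
    return bool(branch) and branch == default_branch
```

Passing the environment in as a mapping keeps the rule easy to check locally with a plain dictionary before wiring it into a pipeline.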

To demonstrate the automation, we will use a simple machine learning workflow in the repository here. In this workflow, we will copy two jobs from a source project in CML.

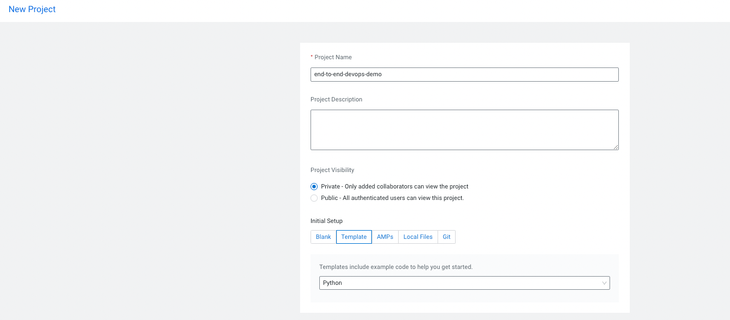

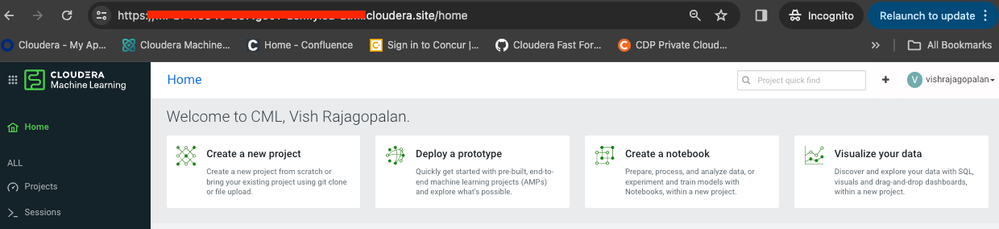

Setup CML Project

- Create 2 CML Projects as shown below, using a Python Template, in the workspaces for Development and Production.

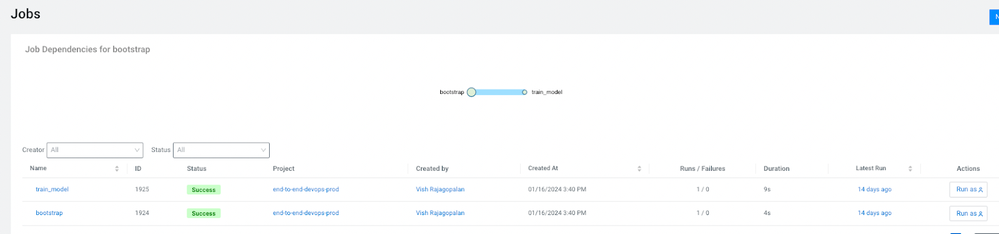

- Create two CML jobs as follows:

  - Job 1:
    - Job Name: bootstrap
    - Compute Profile: 1 vCPU, 2GB Memory
    - Type: Manual
    - File: bootstrap.py

  - Job 2:
    - Job Name: train
    - Compute Profile: 1 vCPU, 2GB Memory
    - Type: Dependent
    - File: fit.py

- After saving these two jobs, you should see them listed in the project. (Ensure that both jobs run successfully before proceeding further.)
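
The two jobs and their dependency can also be written down as data, which makes the required creation order explicit. The following is a minimal sketch: the `JobSpec` class and `creation_order` function are hypothetical helpers, not CML APIs, and the assumption that `train` depends on `bootstrap` is inferred from the pipeline's `parent_job_id` field.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set, Tuple


@dataclass(frozen=True)
class JobSpec:
    name: str
    script: str
    cpu: int
    memory_gb: int
    parent: Optional[str] = None  # name of the job this one depends on


# Values mirror the job list above; the parent link is an assumption.
JOBS = [
    JobSpec("train", "fit.py", cpu=1, memory_gb=2, parent="bootstrap"),
    JobSpec("bootstrap", "bootstrap.py", cpu=1, memory_gb=2),
]


def creation_order(jobs: List[JobSpec]) -> List[JobSpec]:
    """Order jobs so that every parent is created before its dependents."""
    by_name: Dict[str, JobSpec] = {job.name: job for job in jobs}
    ordered: List[JobSpec] = []
    done: Set[str] = set()

    def visit(job: JobSpec, path: Tuple[str, ...]) -> None:
        if job.name in done:
            return
        if job.name in path:
            raise ValueError(f"dependency cycle at job '{job.name}'")
        if job.parent is not None:
            if job.parent not in by_name:
                raise KeyError(f"unknown parent job '{job.parent}'")
            visit(by_name[job.parent], path + (job.name,))
        done.add(job.name)
        ordered.append(job)

    for job in jobs:
        visit(job, ())
    return ordered
```

A dependent job needs the identifier of its parent, so the parent has to exist first; ordering the specifications up front avoids creating a child with an empty parent reference.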

Setup Automation in GitLab:

To build our deployment automation, we will use the following components in CML and GitLab:

- CML target workspace domain name: the workspace URL of the form http://xxx.cloudera.site (see below). Save it, because it is needed later.

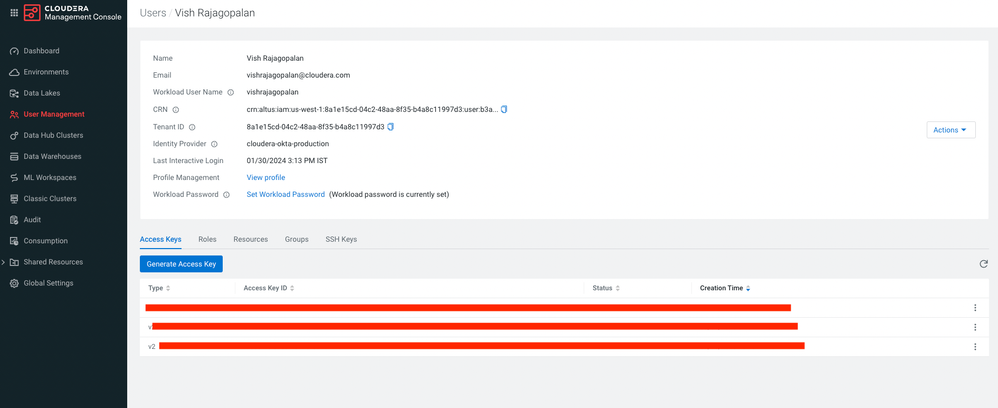

- CDP Access Keys for remotely accessing Workloads: Create a new access key and save the keys:

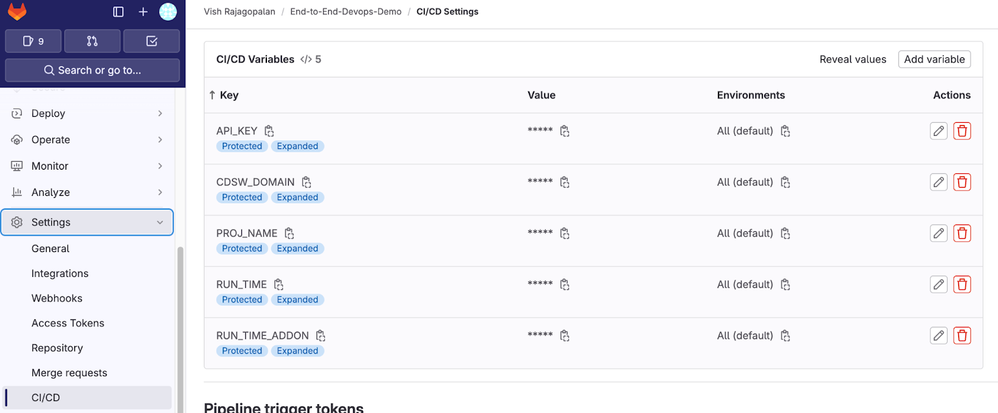

- Create variables in GitLab (available at Project → Settings → CI-CD):

  - API_KEY and CDSW_DOMAIN: the API key and the domain name that were obtained earlier.
  - PROJ_NAME: the target project name in production.
  - RUN_TIME: the runtime image. The default runtime at the time of writing this article is docker.repository.cloudera.com/cloudera/cdsw/ml-runtime-workbench-python3.9-standard:2023.08.2-b8. Use the one that fits your needs.
  - RUN_TIME_ADDON: the runtime add-on that is needed for setting up the Docker container in production: "hadoop-cli-7.2.16-hf3".

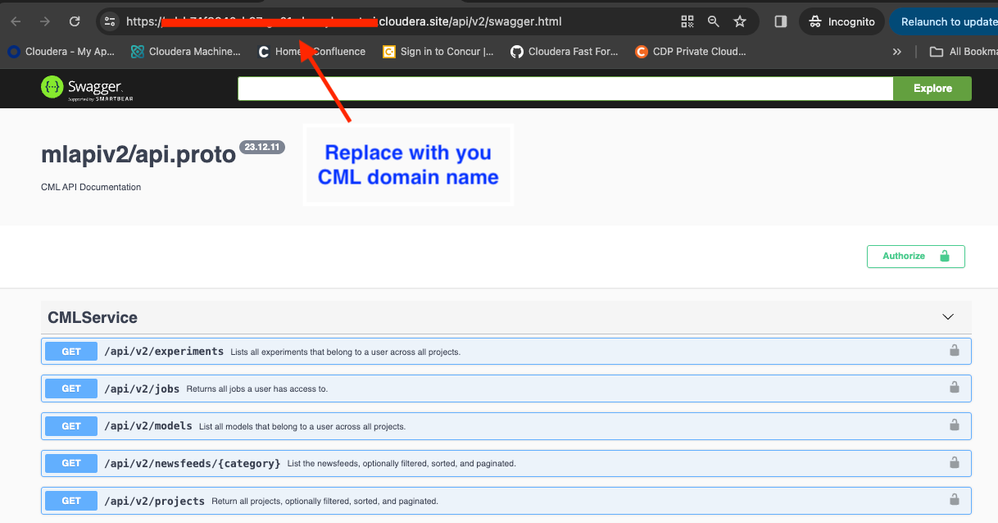
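
Before any API call is made, it can help to check that all of these variables are actually set. The sketch below is illustrative only: the function name `load_settings` is hypothetical, and it assumes the GitLab variables are exposed to the job as environment variables under the names used in this article.

```python
import os
from typing import Dict, Mapping

# Variable names as used in this article's pipeline.
REQUIRED_VARS = ("CDSW_DOMAIN", "API_KEY", "PROJ_NAME", "RUN_TIME", "RUN_TIME_ADDON")


def load_settings(env: Mapping[str, str] = os.environ) -> Dict[str, str]:
    """Collect the required CI/CD variables, failing early if any is missing."""
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise RuntimeError("missing CI/CD variables: " + ", ".join(missing))
    settings = {name: env[name] for name in REQUIRED_VARS}
    # Drop a trailing slash so that URLs built from the domain stay clean.
    settings["CDSW_DOMAIN"] = settings["CDSW_DOMAIN"].rstrip("/")
    return settings
```

Failing early with the list of missing names is easier to debug than a request sent to an empty domain or with an empty bearer token.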

- CURL commands to execute the REST APIs from GitLab. The CML API v2 details can be found at http://your-cml-domainname.cloudera.site/api/v2/swagger.html (you can see many different API calls as you scroll through the page).

- Pipeline in GitLab: Go to GitLab Project → Build → Pipeline Editor to enter the pipeline code:

      # This file is a template, and might need editing before it works on your project.
      deploy-to-prod-cluster:
        stage: deploy
        image: docker:stable
        before_script:
          # Install curl and jq in one step without keeping the apk index cache
          - apk add --no-cache curl jq
        script:
          # Search for the specific target deployment project
          - 'echo "$CDSW_DOMAIN/api/v2/projects?search_filter=%7B%22name%22%3A%22$PROJ_NAME%22%7D"'
          # Do not echo $API_KEY here: it would expose the secret in the job log
          - 'curl -X GET "$CDSW_DOMAIN/api/v2/projects?search_filter=%7B%22name%22%3A%22$PROJ_NAME%22%7D" -H "accept: application/json" -H "Authorization: Bearer $API_KEY" | jq'
          - 'PROJECT_ID=$(curl -X GET "$CDSW_DOMAIN/api/v2/projects?search_filter=%7B%22name%22%3A%22$PROJ_NAME%22%7D" -H "accept: application/json" -H "Authorization: Bearer $API_KEY" | jq -r ".projects|.[0]|.id")'
          - 'echo $PROJECT_ID'
          - 'pwd'
          # - 'ls -l'
          # - 'RUN_TIME="docker.repository.cloudera.com/cloudera/cdsw/ml-runtime-workbench-python3.7-standard:2022.04.1-b6"'
          # Create a job in the target project
          - 'JOB_ID=$(curl -X POST "$CDSW_DOMAIN/api/v2/projects/$PROJECT_ID/jobs" -H "accept: application/json" -H "Authorization: Bearer $API_KEY" -H "Content-Type: application/json" -d "{ \"arguments\": \"\", \"attachments\": [ \"\" ], \"cpu\": 2, \"environment\": { \"additionalProp1\": \"\", \"additionalProp2\": \"string\", \"additionalProp3\": \"string\" }, \"kernel\": \"\", \"kill_on_timeout\": true, \"memory\": 4, \"name\": \"bootstrap\", \"nvidia_gpu\": 0, \"parent_job_id\": \"\", \"project_id\": \"string\", \"recipients\": [ { \"email\": \"\", \"notify_on_failure\": true, \"notify_on_stop\": true, \"notify_on_success\": true, \"notify_on_timeout\": true } ], \"runtime_addon_identifiers\": [ \"$RUN_TIME_ADDON\" ], \"runtime_identifier\": \"$RUN_TIME\", \"schedule\": \"\", \"script\": \"0_bootstrap.py\", \"timeout\": 0}" | jq -r ".id")'
          - 'echo $JOB_ID'
          # Create a dependent job in the target project; it runs after the job above
          - 'CHILD_JOB_ID=$(curl -X POST "$CDSW_DOMAIN/api/v2/projects/$PROJECT_ID/jobs" -H "accept: application/json" -H "Authorization: Bearer $API_KEY" -H "Content-Type: application/json" -d "{ \"arguments\": \"\", \"attachments\": [ \"\" ], \"cpu\": 2, \"environment\": { \"additionalProp1\": \"\", \"additionalProp2\": \"string\", \"additionalProp3\": \"string\" }, \"kernel\": \"\", \"kill_on_timeout\": true, \"memory\": 4, \"name\": \"train_model\", \"nvidia_gpu\": 0, \"parent_job_id\": \"$JOB_ID\", \"project_id\": \"string\", \"recipients\": [ { \"email\": \"\", \"notify_on_failure\": true, \"notify_on_stop\": true, \"notify_on_success\": true, \"notify_on_timeout\": true } ], \"runtime_addon_identifiers\": [ \"$RUN_TIME_ADDON\" ], \"runtime_identifier\": \"$RUN_TIME\", \"schedule\": \"\", \"script\": \"fit.py\", \"timeout\": 0}" | jq -r ".id")'
          # Execute the job run
          - 'curl -X POST "$CDSW_DOMAIN/api/v2/projects/$PROJECT_ID/jobs/$JOB_ID/runs" -H "accept: application/json" -H "Authorization: Bearer $API_KEY" -H "Content-Type: application/json" -d "{ \"arguments\": \"string\", \"environment\": { \"additionalProp1\": \"string\", \"additionalProp2\": \"string\", \"additionalProp3\": \"string\" }, \"job_id\": \"string\", \"project_id\": \"string\"}" | jq'

- The above pipeline copies two batch jobs, simulating a data ingestion and a training workload, to the target workspace. Edit the pipeline if you want to copy additional artifacts to the target workspace.
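
The hand-encoded search filter and the escaped JSON bodies in the pipeline are easy to get wrong, so the same requests can be assembled programmatically. This is a hedged sketch, not the author's method: the helper names are hypothetical, only field names that already appear in the pipeline are used, and it is an assumption that the API accepts this reduced payload without the placeholder fields copied from the Swagger example.

```python
import json
from typing import Any, Dict
from urllib.parse import quote


def project_search_url(domain: str, project_name: str) -> str:
    """Build the project search URL with a percent-encoded JSON filter."""
    search_filter = json.dumps({"name": project_name}, separators=(",", ":"))
    return f"{domain}/api/v2/projects?search_filter={quote(search_filter, safe='')}"


def first_project_id(response_body: str) -> str:
    """Equivalent of jq's `.projects|.[0]|.id`, but failing on no match."""
    projects = json.loads(response_body).get("projects") or []
    if not projects:
        raise LookupError("no project matched the search filter")
    return projects[0]["id"]


def job_payload(name: str, script: str, runtime: str, addon: str,
                parent_job_id: str = "") -> Dict[str, Any]:
    """Assemble a job-creation body from fields used in the pipeline."""
    return {
        "name": name,
        "script": script,
        "cpu": 2,
        "memory": 4,
        "nvidia_gpu": 0,
        "kill_on_timeout": True,
        "timeout": 0,
        "parent_job_id": parent_job_id,  # empty for a job with no parent
        "runtime_identifier": runtime,
        "runtime_addon_identifiers": [addon],
    }
```

Compared with the shell version, `quote` also encodes project names that contain spaces or other reserved characters, and `first_project_id` raises an error where `jq -r` would return the string `null` and let the pipeline continue with an invalid project ID.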

Run the Automation Pipeline

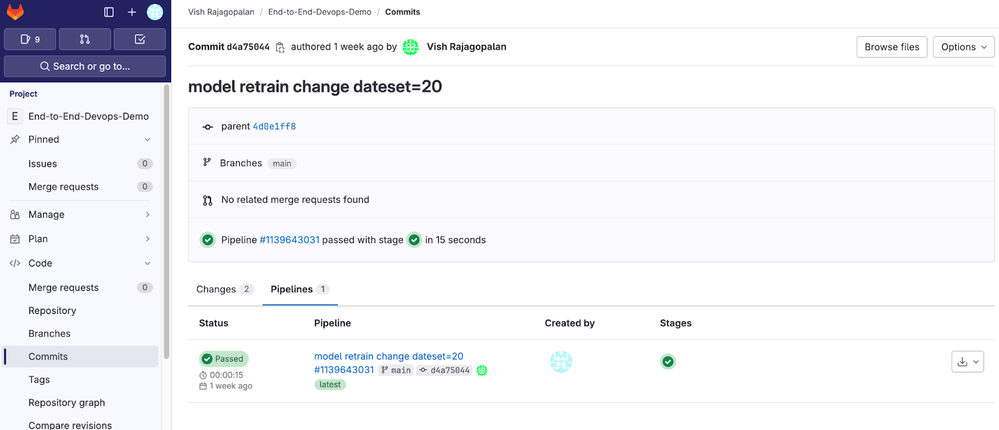

- A code commit in the source project will trigger a deployment to the Target. Your deployment pipeline should execute as below:

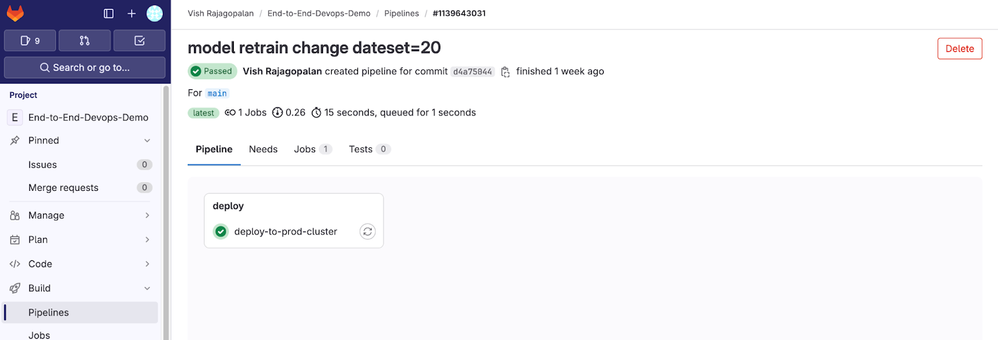

- To see the details of the actual pipeline execution, click on the pipeline as shown below:

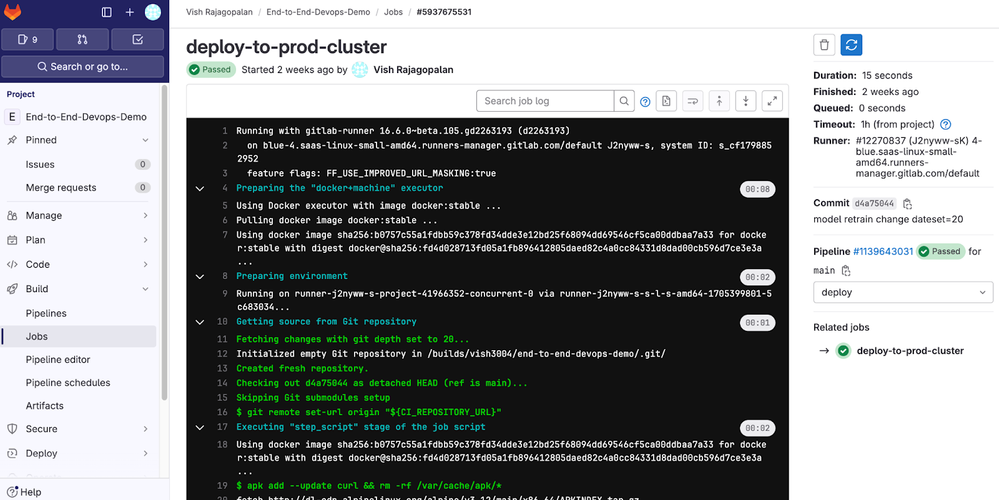

- Clicking on Deploy shows the actual pipeline deployment running in a GitLab container, where you can check the pipeline execution details and any errors.