
Created on 09-20-2021 11:58 AM - edited 09-22-2021 03:33 PM

In this example, I import encryption keys from an HDP 3.1.5 cluster into an HDP 2.6.5 cluster.

- Create the key "testkey" in Ranger KMS on the HDP 3.1.5 cluster by following the steps in List and Create Keys. In HDP 3.1.5, the current master key is:

Encryption Key:

- Create an encryption zone with "testkey":

[hdfs@c241-node3 ~]$ hdfs crypto -createZone -keyName testkey -path /testEncryptionZone

Added encryption zone /testEncryptionZone

- List the zones to confirm the zone and its key:

[hdfs@c241-node3 ~]$ hdfs crypto -listZones

/testEncryptionZone testkey
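The -listZones output above has one zone per line: the zone path followed by its key name. If you want to script this confirmation (here, or later on the HDP 2.6.5 cluster), a small filter along these lines could work. It is a minimal sketch: zone_has_key is a hypothetical helper name, and it assumes the whitespace-separated "zone key" columns shown in this output.

```shell
#!/bin/sh
# zone_has_key ZONE KEY
# Hypothetical helper: reads "hdfs crypto -listZones" output on stdin and
# exits 0 if some line lists ZONE with key KEY, 1 otherwise.
# Assumes whitespace-separated "zone key" columns, as in the output above.
#
# Example:
#   hdfs crypto -listZones | zone_has_key /testEncryptionZone testkey && echo "zone OK"
zone_has_key() {
  awk -v zone="$1" -v key="$2" '
    $1 == zone && $2 == key { found = 1 }
    END { exit !found }
  '
}
```

The same call works for /testEncryptionZone-265 once it exists on the target cluster.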

- Export the keys:

  - Log in to the KMS host.

  - Export JAVA_HOME.

  - cd /usr/hdp/current/ranger-kms

  - Run ./exportKeysToJCEKS.sh $filename

The output will look as follows:

[root@c241-node3 ranger-kms]# export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.292.b10-1.el7_9.x86_64/jre

[root@c241-node3 ranger-kms]# ./exportKeysToJCEKS.sh /tmp/hdp315keys.keystore

Enter Password for the keystore FILE :

Enter Password for the KEY(s) stored in the keystore:

Keys from Ranger KMS Database has been successfully exported into /tmp/hdp315keys.keystore

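On the HDP 2.6.5 cluster, where the keys are to be imported, first copy the exported keystore file (/tmp/hdp315keys.keystore in this example) to the KMS host, then do the following: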

- Log in to the KMS host.

- Add org.apache.hadoop.crypto.key.**; to the jceks.key.serialFilter property. Make this change only on the KMS host, in the following file:

/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.292.b10-1.el7_9.x86_64/jre/lib/security/java.security

After the change, the entry in the file should look like this:

jceks.key.serialFilter = java.lang.Enum;java.security.KeyRep;\
  java.security.KeyRep$Type;javax.crypto.spec.SecretKeySpec;org.apache.hadoop.crypto.key.**;!*
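Editing java.security by hand is fine for one host; if you need to repeat the change on several KMS hosts, a scripted version might look like the sketch below. add_hadoop_serial_filter is a hypothetical helper, not part of Ranger or the JDK. It assumes the property is laid out as in the Java 8 file above (an uncommented jceks.key.serialFilter line, optionally continued with trailing backslashes, whose last line ends in !*). It keeps a .bak copy and does nothing if the pattern is already present on a non-comment line.

```shell
#!/bin/sh
# add_hadoop_serial_filter FILE
# Hypothetical helper: inserts "org.apache.hadoop.crypto.key.**;" just before the
# final "!*" of the jceks.key.serialFilter property in a java.security file.
#
# Example (JAVA_HOME ending in /jre, as in this article):
#   add_hadoop_serial_filter "$JAVA_HOME/lib/security/java.security"
add_hadoop_serial_filter() {
  file="$1"
  # Pattern already present on a non-comment line: nothing to do.
  if grep -q '^[^#]*org\.apache\.hadoop\.crypto\.key\.\*\*' "$file"; then
    return 0
  fi
  # Keep a backup, then rewrite the file from it.
  cp -p "$file" "$file.bak" || return 1
  awk '
    # Start of the property (commented-out lines do not match).
    /^jceks\.key\.serialFilter[ \t]*=/ { inprop = 1 }
    inprop {
      # A trailing backslash means the value continues on the next line.
      if ($0 ~ /\\$/) { print; next }
      # Last line of the value: add the Hadoop pattern before the reject-all "!*".
      sub(/!\*[ \t]*$/, "org.apache.hadoop.crypto.key.**;!*")
      inprop = 0
    }
    { print }
  ' "$file.bak" > "$file"
}
```

Restoring the .bak file undoes the change if the KMS host should go back to the JDK default.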

- Export JAVA_HOME, RANGER_KMS_HOME, RANGER_KMS_CONF, and SQL_CONNECTOR_JAR.

- cd /usr/hdp/current/ranger-kms/

- Run ./importJCEKSKeys.sh $filename JCEKS

The output looks like this:

[root@c441-node3 ranger-kms]# export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.292.b10-1.el7_9.x86_64/jre

[root@c441-node3 ranger-kms]# export RANGER_KMS_HOME=/usr/hdp/2.6.5.0-292/ranger-kms

[root@c441-node3 ranger-kms]# export RANGER_KMS_CONF=/etc/ranger/kms/conf

[root@c441-node3 ranger-kms]# export SQL_CONNECTOR_JAR=/var/lib/ambari-agent/tmp/mysql-connector-java.jar

[root@c441-node3 security]# cd /usr/hdp/current/ranger-kms/

[root@c441-node3 ranger-kms]# ./importJCEKSKeys.sh /tmp/hdp315keys.keystore JCEKS

Enter Password for the keystore FILE :

Enter Password for the KEY(s) stored in the keystore:

2021-08-12 23:58:06,729 ERROR RangerKMSDB - DB Flavor could not be determined

Keys from /tmp/hdp315keys.keystore has been successfully imported into RangerDB.
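importJCEKSKeys.sh depends on the four variables exported above, so a quick pre-flight check can catch an unset variable or a mistyped path (for example the connector JAR location) before running the import. The sketch below is one way to do it; check_kms_import_env is a hypothetical helper. It only checks that each variable is set and that its path exists, not that the paths are the correct ones for your cluster.

```shell
#!/bin/sh
# check_kms_import_env
# Hypothetical pre-flight check: verifies that the variables exported before
# running importJCEKSKeys.sh are set and point to existing paths.
#
# Example:
#   check_kms_import_env && ./importJCEKSKeys.sh /tmp/hdp315keys.keystore JCEKS
check_kms_import_env() {
  status=0
  for var in JAVA_HOME RANGER_KMS_HOME RANGER_KMS_CONF SQL_CONNECTOR_JAR; do
    # Portable indirect lookup of the variable whose name is in $var.
    eval "value=\${$var:-}"
    if [ -z "$value" ]; then
      echo "check_kms_import_env: $var is not set" >&2
      status=1
    elif [ ! -e "$value" ]; then
      echo "check_kms_import_env: $var=$value does not exist" >&2
      status=1
    fi
  done
  return "$status"
}
```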

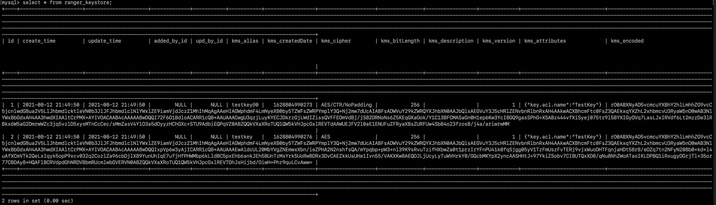

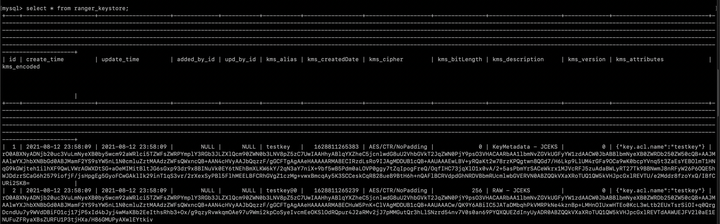

- To confirm that the encryption keys were imported, check the ranger_keystore table in the Ranger KMS database of the HDP 2.6.5 cluster for an entry for "testkey".

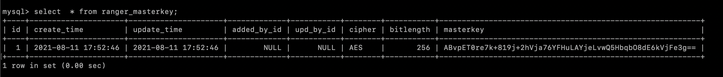

- Also, check that the master key in HDP 2.6.5 is untouched; it is still the same master key that Ranger KMS created on this cluster.

- Now create an encryption zone in HDP 2.6.5 with the imported key:

[hdfs@c441-node3 ~]$ hdfs dfs -mkdir /testEncryptionZone-265

[hdfs@c441-node3 ~]$ hdfs crypto -createZone -keyName testkey -path /testEncryptionZone-265

Added encryption zone /testEncryptionZone-265

- Confirm the zone and keys:

[hdfs@c441-node3 ~]$ hdfs crypto -listZones

/testEncryptionZone-265 testkey

- Now run distcp. Note that both the source and destination paths must be prefixed with /.reserved/raw, and that the -px option is required. The command is:

hadoop distcp -px /.reserved/raw/$encryptionZonePath/filename hdfs://destination/.reserved/raw/$encryptionZonePath/filename
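Because both sides of the copy have to go through /.reserved/raw, it can help to build those paths in one place. The sketch below is one way to do that; raw_path and raw_distcp are hypothetical helper names. raw_path only prepends /.reserved/raw to an absolute HDFS path (leaving paths that already start with it unchanged), and raw_distcp passes the results to the same hadoop distcp -px command shown above. It does not check that the paths are actually inside encryption zones.

```shell
#!/bin/sh
# raw_path PATH
# Hypothetical helper: maps an absolute HDFS path to its /.reserved/raw form.
raw_path() {
  case "$1" in
    /.reserved/raw/*) printf '%s\n' "$1" ;;               # already a raw path
    /*)               printf '/.reserved/raw%s\n' "$1" ;;  # absolute path: prefix it
    *) echo "raw_path: expected an absolute path, got '$1'" >&2; return 1 ;;
  esac
}

# raw_distcp SRC_PATH DEST_NAMENODE DEST_PATH
# Hypothetical wrapper around the distcp command above, for example:
#   raw_distcp /testEncryptionZone/text.txt hdfs://172.25.37.10:8020 /testEncryptionZone-265/
raw_distcp() {
  src=$(raw_path "$1") || return 1
  dst=$(raw_path "$3") || return 1
  hadoop distcp -px "$src" "$2$dst"
}
```

With the example arguments, raw_distcp builds the same source and target paths as the command in the output below.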

- For details on these options, see Configuring Apache HDFS Encryption.

The following is the distcp output. It is truncated but shows the copied file. Note that skipCRC is false.

[hdfs@c241-node3 ~]$ hadoop distcp -px /.reserved/raw/testEncryptionZone/text.txt hdfs://172.25.37.10:8020/.reserved/raw/testEncryptionZone-265/

ERROR: Tools helper /usr/hdp/3.1.5.0-152/hadoop/libexec/tools/hadoop-distcp.sh was not found.

21/08/13 01:52:58 INFO tools.DistCp: Input Options: DistCpOptions{atomicCommit=false, syncFolder=false, deleteMissing=false, ignoreFailures=false, overwrite=false, append=false, useDiff=false, useRdiff=false, fromSnapshot=null, toSnapshot=null, skipCRC=false, blocking=true, numListstatusThreads=0, maxMaps=20, mapBandwidth=0.0, copyStrategy='uniformsize', preserveStatus=[XATTR], atomicWorkPath=null, logPath=null, sourceFileListing=null, sourcePaths=[/.reserved/raw/testEncryptionZone/text.txt], targetPath=hdfs://172.25.37.10:8020/.reserved/raw/testEncryptionZone-265, filtersFile='null', blocksPerChunk=0, copyBufferSize=8192, verboseLog=false, directWrite=false}, sourcePaths=[/.reserved/raw/testEncryptionZone/text.txt], targetPathExists=true, preserveRawXattrsfalse

<TRUNCATED>

21/08/13 01:52:59 INFO tools.SimpleCopyListing: Paths (files+dirs) cnt = 1; dirCnt = 0

21/08/13 01:52:59 INFO tools.SimpleCopyListing: Build file listing completed.

21/08/13 01:52:59 INFO tools.DistCp: Number of paths in the copy list: 1

21/08/13 01:52:59 INFO tools.DistCp: Number of paths in the copy list: 1

<TRUNCATED>

DistCp Counters

Bandwidth in Btyes=21

Bytes Copied=21

Bytes Expected=21

Files Copied=1

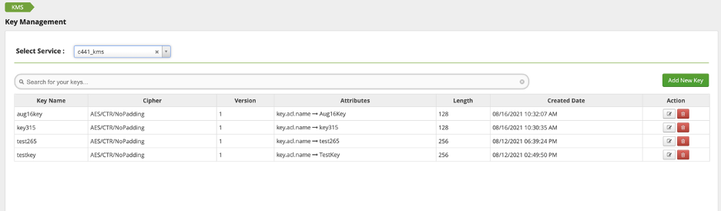

Another question that came up: what happens to the existing keys when I import a new key? The imported key is simply added to the existing keys; nothing is replaced. Here is a screenshot:
