Improve ELK Mpack for HDP & HDF 3 for Ambari 2.7

Created on 02-23-2019 04:08 PM - edited 08-17-2019 04:43 AM

This is a Work In Progress Article.

Downloads:

- HDP/HDF 3 Ambari 2.7 Mpack ELK 6.3.2 with sudo (for non root user install)

- HDP/HDF 3 Ambari 2.7 Mpack ELK 6.3.2 without sudo

In the parent article I took an existing Hortonworks ELK Mpack through a series of versions (up to 2.6) so that I could install the ELK version and components I wanted (ElasticSearch, Logstash, Kibana, FileBeats, MetricBeats) in Ambari 2.6. In this article I install the previous article's 2.6 version of the ELK Mpack on my local machine using Vagrant. With this working Test Base I will version the Mpack up to 3.0 as I change the files to allow installation into HDP & HDF 3.0. I am also going to move to the current ELK version, 6.6.1.

Starting with the 2.6 Mpack and the Ambari Quick Start Guide, I was able to get a cluster installed on my local machine very easily. For my Test Base I chose a single node, c7401.ambari.apache.org. For this Test Base I only want the most minimal install that supports the Mpack services without any issues during the Install Wizard. On this single node I install Ambari Server and Agent and the following components:

- Zookeeper

- Ambari Metrics

- ElasticSearch

- LogStash

- Kibana

- FileBeats

- MetricBeats

Terminal Commands Required In Local Machine

- git clone https://github.com/u39kun/ambari-vagrant.git

- sudo -s 'cat ambari-vagrant/append-to-etc-hosts.txt >> /etc/hosts'

- cd ambari-vagrant/centos7.4

- cp ../insecure_private_key .

- cp ~/Downloads/elasticsearch_mpack-2.6.0.0-9.tar.gz .

- vagrant up c7401

- vagrant ssh c7401

Terminal Commands Required in Vagrant Node

- wget -O /etc/yum.repos.d/ambari.repo http://public-repo-1.hortonworks.com/ambari/centos6/2.x/updates/2.5.1.0/ambari.repo

- yum --enablerepo=extras install epel-release -y

- yum install java java-devel ambari-server ambari-agent -y

- ambari-server setup -s

- ambari-server install-mpack --mpack=/vagrant/elasticsearch_mpack-2.6.0.0-9.tar.gz --verbose

- ambari-server start

- ambari-agent start

After the install there were some issues starting the services. This is okay for now, because the ELK Mpack really needs 4 nodes. Most of the versioning work is making sure the new stack versions are included, and I can test those changes without expecting any services to work.

With the Test Base complete I can now quickly spin up fresh clusters, working my way from HDP 2.6 to HDP 3.1.0 and from ELK 6.3.2 to 6.6.1. The newer ELK versions will likely require some additional configuration changes, so that upgrade will be completed in a final test base that includes HDP 3.1.0 and 4 nodes, and in which the services are expected to start.

Results From Using This Test Base HDP

It took me a few sessions working with this base to figure out that the out-of-the-box install issues for HDP 3 were related to just a few conflicts in the original Mpack parameters.

One conflict was with the config settings for user management. The following Python command was necessary:

python /var/lib/ambari-server/resources/scripts/configs.py -u admin -p admin -n DFHZ_ELK -l c7401.ambari.apache.org -t 8080 -a set -c cluster-env -k ignore_groupsusers_create -v true
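
For reference, the flags in the command above can be assembled programmatically, which makes each one easier to label. This is only a minimal sketch: `build_configs_command` is a hypothetical helper (not part of Ambari), the flag descriptions in the comments are my reading of the command, and the values are the ones used above.

```python
import shlex

CONFIGS_SCRIPT = "/var/lib/ambari-server/resources/scripts/configs.py"


def build_configs_command(user, password, cluster, host, port,
                          config_type, key, value):
    """Return the argv list for a configs.py 'set' call (hypothetical helper)."""
    return [
        "python", CONFIGS_SCRIPT,
        "-u", user,          # Ambari admin user
        "-p", password,      # Ambari admin password
        "-n", cluster,       # cluster name
        "-l", host,          # Ambari server host
        "-t", str(port),     # Ambari server port
        "-a", "set",         # action to perform
        "-c", config_type,   # config type to modify
        "-k", key,           # property name
        "-v", value,         # property value
    ]


argv = build_configs_command(
    "admin", "admin", "DFHZ_ELK", "c7401.ambari.apache.org", 8080,
    "cluster-env", "ignore_groupsusers_create", "true",
)
# Quote each part so the printed line can be pasted into a shell as-is.
command_line = " ".join(shlex.quote(part) for part in argv)
print(command_line)
```

On the Ambari server node, the same `argv` list could be handed to `subprocess.check_call(argv)` instead of being printed.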

The Mpack services' params.py also needed adjustments to get hostname and java_home from slightly different paths in the config object:

- hostname = config['agentLevelParams']['hostname']

- java64_home = config['ambariLevelParams']['java_home']
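
If the same params.py has to work on both the older and the newer Ambari, one option is to try the new paths first and fall back to the old ones. The sketch below assumes the older locations were `config['hostname']` and `config['hostLevelParams']['java_home']`; those fallback paths and the helper names `lookup` and `resolve_host_params` are my assumptions, not taken from the Mpack. It uses plain dictionaries so it can run outside of Ambari.

```python
def lookup(config, *paths):
    """Return the value at the first key path present in config, else None."""
    for path in paths:
        node = config
        for key in path:
            # Membership tests avoid relying on how missing keys are reported.
            if not isinstance(node, dict) or key not in node:
                break
            node = node[key]
        else:
            return node
    return None


def resolve_host_params(config):
    """Resolve hostname and java_home across both config layouts."""
    hostname = lookup(
        config,
        ("agentLevelParams", "hostname"),   # Ambari 2.7 path (from the article)
        ("hostname",),                      # assumed older path
    )
    java64_home = lookup(
        config,
        ("ambariLevelParams", "java_home"),  # Ambari 2.7 path (from the article)
        ("hostLevelParams", "java_home"),    # assumed older path
    )
    return hostname, java64_home


# Illustrative config objects; in a real params.py the config would come
# from Ambari rather than being written by hand.
new_style = {
    "agentLevelParams": {"hostname": "c7401.ambari.apache.org"},
    "ambariLevelParams": {"java_home": "/usr/lib/jvm/java"},
}
old_style = {
    "hostname": "c7401.ambari.apache.org",
    "hostLevelParams": {"java_home": "/usr/lib/jvm/java"},
}

print(resolve_host_params(new_style))
print(resolve_host_params(old_style))
```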

With a working install of this new Mpack in HDP 3, I can now start working on a 2 node cluster to make sure nothing else is required to get the original 4 node ELK stack installed on HDP 3. After the Cluster Install Wizard completed on the 2 node cluster, I did have to manually start some ELK services.

HDF 2 Node Test Base

Next I need to create an HDF cluster and make sure this Mpack works there. This requires a completely new test base and some changes to the mpack.json file to include the HDF stack_version.
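
As a rough illustration of the kind of mpack.json change involved, the sketch below adds a stack entry to every service in a `service_versions_map`. It assumes the layout commonly seen in add-on service Mpacks (`artifacts`, `service_versions_map`, `applicable_stacks`); the sample content, the service version, the HDF version "3.3", and the helper name `add_applicable_stack` are illustrative assumptions, not copied from the actual ELK Mpack.

```python
import copy
import json


def add_applicable_stack(mpack, stack_name, stack_version):
    """Return a copy of mpack with the stack added to every service entry."""
    updated = copy.deepcopy(mpack)
    entry = {"stack_name": stack_name, "stack_version": stack_version}
    for artifact in updated.get("artifacts", []):
        for service in artifact.get("service_versions_map", []):
            stacks = service.setdefault("applicable_stacks", [])
            if entry not in stacks:  # keep the operation idempotent
                stacks.append(dict(entry))
    return updated


# Illustrative fragment only; a real mpack.json has more fields.
sample_mpack = {
    "artifacts": [
        {
            "type": "stack-addon-service-definitions",
            "service_versions_map": [
                {
                    "service_name": "ELASTICSEARCH",
                    "service_version": "6.3.2",
                    "applicable_stacks": [
                        {"stack_name": "HDP", "stack_version": "3.0"}
                    ],
                }
            ],
        }
    ]
}

updated = add_applicable_stack(sample_mpack, "HDF", "3.3")
print(json.dumps(updated, indent=2))
```

To apply this to a real file, load it with `json.load`, pass the result through the helper, and write it back with `json.dump` before repackaging the Mpack tarball.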

Terminal Command Required in Vagrant Node for Ambari Master

wget http://public-repo-1.hortonworks.com/HDF/centos7/3.x/updates/3.3.1.0/tars/hdf_ambari_mp/hdf-ambari-m... && wget -O /etc/yum.repos.d/ambari.repo http://public-repo-1.hortonworks.com/ambari/centos7/2.x/updates/2.7.3.0/ambari.repo && yum --enablerepo=extras install epel-release -y && yum install nano java java-devel ambari-server ambari-agent -y && ambari-server setup -s && ambari-server install-mpack --mpack=/root/hdf-ambari-mpack-3.3.1.0-10.tar.gz --verbose && ambari-server install-mpack --mpack=/vagrant/elasticsearch_mpack-3.0.0.0-0.tar.gz --verbose && ambari-server start && ambari-agent start

Terminal Command Required in Vagrant Node Ambari Agent

wget -O /etc/yum.repos.d/ambari.repo http://public-repo-1.hortonworks.com/ambari/centos7/2.x/updates/2.7.3.0/ambari.repo && yum --enablerepo=extras install epel-release -y && yum install nano java java-devel ambari-agent -y && ambari-agent start

Results From Using Test Base HDF

It took me quite a few attempts to find a single solution to a large number of symptoms. Somewhere in the config object for HDF there are some non-UTF-8 characters. In some places this threw an error; in other places it silently created empty files across the ELK stack during install. I added these lines to the Python scripts:

- # encoding=utf8

- import sys

- reload(sys)

- sys.setdefaultencoding('utf8')
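
Note that `reload(sys)` followed by `sys.setdefaultencoding` only works on Python 2. A more explicit alternative, shown here purely as a sketch and not as what the Mpack does, is to convert values to UTF-8 bytes yourself before writing them, so that no implicit ASCII conversion takes place. The helper names `to_utf8_bytes` and `write_config_file` are hypothetical, and the Mpack scripts may write files through Ambari's own resources rather than `open`.

```python
import sys

# Python 2 has a separate `unicode` type; Python 3 only has `str`.
text_type = str if sys.version_info[0] >= 3 else unicode  # noqa: F821


def to_utf8_bytes(value):
    """Return value as UTF-8 bytes, replacing undecodable input bytes."""
    if isinstance(value, text_type):
        return value.encode("utf-8")
    if isinstance(value, bytes):
        # Re-decode so invalid sequences become U+FFFD instead of raising.
        return value.decode("utf-8", "replace").encode("utf-8")
    # Numbers, booleans, and so on: convert to text first.
    return text_type(value).encode("utf-8")


def write_config_file(path, content):
    """Write content in binary mode, always encoded as UTF-8."""
    with open(path, "wb") as handle:
        handle.write(to_utf8_bytes(content))
```

For example, `write_config_file("/tmp/example.yml", u"name: caf\u00e9")` writes the accented character as two UTF-8 bytes regardless of the interpreter's default encoding.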

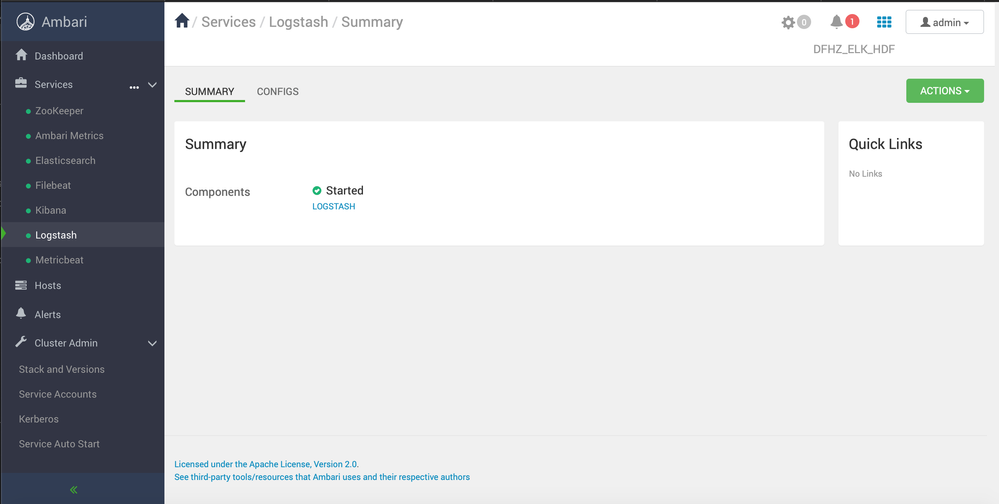

Once I identified the solution for the component Python scripts, I was able to get the stack installed without errors and running in HDF, with only some startup issues. After I started the components manually, Logstash and Kibana were reported as stopped even though they were actually running.

During my next sitting I focused on the stopped services. I set the ambari-agent log level to DEBUG and noticed some additional terminal output in the command status output from "sudo service start". After changing the Kibana and Logstash commands to just "service start", I was able to manually stop the services on the node and then start them from Ambari. I am not 100% sure the sudo was related. At any rate, the ELK Mpack is now installed and all services are running in HDF.

I am going to go through a few more complete tests to make sure I can get the cluster stable right after install without any additional work. I completed my final test with "sudo service" replaced with "service". During the Cluster Install Wizard everything installed without errors. The only issue was a warning for Check ElasticSearch, which runs before ElasticSearch has finished starting up. I came back to Ambari with ElasticSearch, Kibana, and Logstash running. I just had to manually start FileBeats and MetricBeats.

Now I will be able to focus on the last part of this article: upgrading ELK 6.3.2 to 6.6.1.
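
The service check warning described above is a timing problem: the check runs once, before the service is ready. A common remedy is to poll until the service responds or a deadline passes. The sketch below is a generic illustration, not part of the Mpack; `wait_until` is a hypothetical helper, and `check` stands in for whatever probe the service check performs.

```python
import time


def wait_until(check, timeout=60.0, interval=2.0,
               sleep=time.sleep, clock=time.time):
    """Call check() until it returns True or timeout seconds have passed."""
    deadline = clock() + timeout
    while True:
        if check():
            return True
        if clock() >= deadline:
            return False
        sleep(interval)


# Stand-in probe that only succeeds on the third attempt.
attempts = {"count": 0}


def fake_check():
    attempts["count"] += 1
    return attempts["count"] >= 3


# A no-op sleep keeps the demonstration instant.
ready = wait_until(fake_check, timeout=30.0, interval=1.0,
                   sleep=lambda seconds: None)
print(ready, attempts["count"])
```

The `sleep` and `clock` parameters exist only to make the helper easy to test; in normal use the defaults are fine.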