Community Articles

- Cloudera Community

- Support

- Community Articles

- Ingesting Golden Gate Records From Apache Kafka an...

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Created on

12-30-2017

12:05 AM

- edited on

03-06-2020

11:36 AM

by

lwang

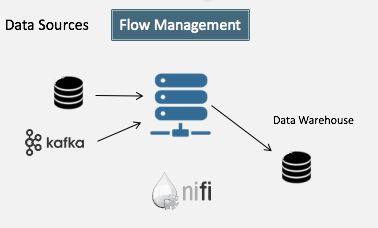

Oracle -> GoldenGate -> Apache Kafka -> Apache NiFi / Hortonworks Schema Registry -> JDBC Database

Sometimes you need to process any number of table changes sent from tools via Apache Kafka. As long as they have proper header data and records in JSON, it's really easy in Apache NiFi.

Requirements:

- Process Each Partition Separately

- Process Records in Order as each message is an Insert, Update or Delete to an existing table in our receiving JDBC store.

- Re-process if data lost

For The Main Processor for Routing, It must only run on the Primary Node.

Enforcing Order

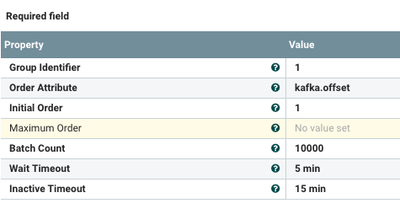

We use the Kafka.Offset to order the records, which makes sense in Apache Kafka topics.

After Insert, Update, Delete queries are built, let's confirm and enforce that strict ordering.

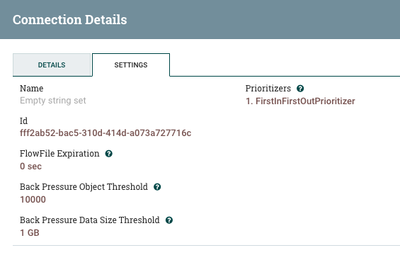

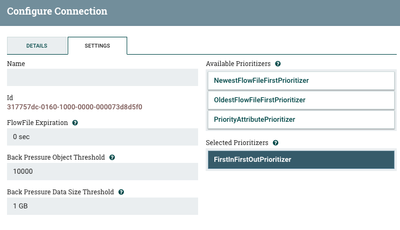

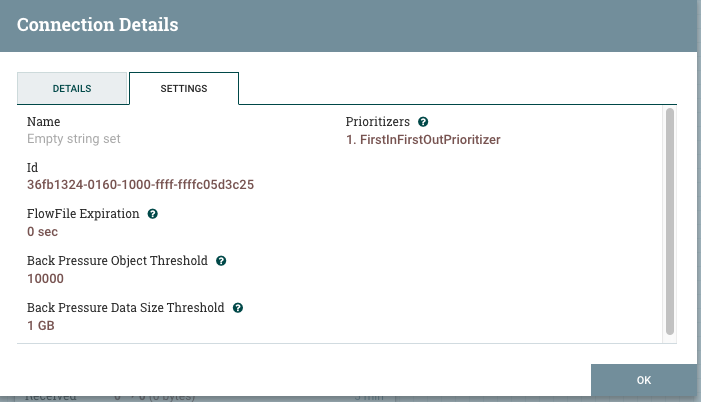

To further confirm processing in order, we make each connection in the flow FirstInFirstOutPrioritizer.

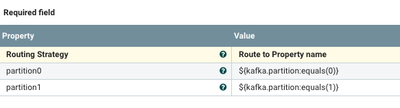

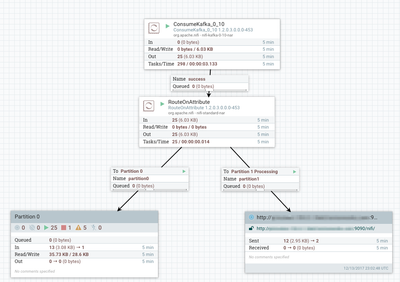

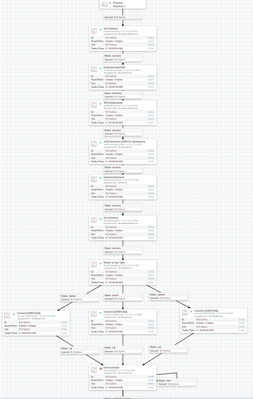

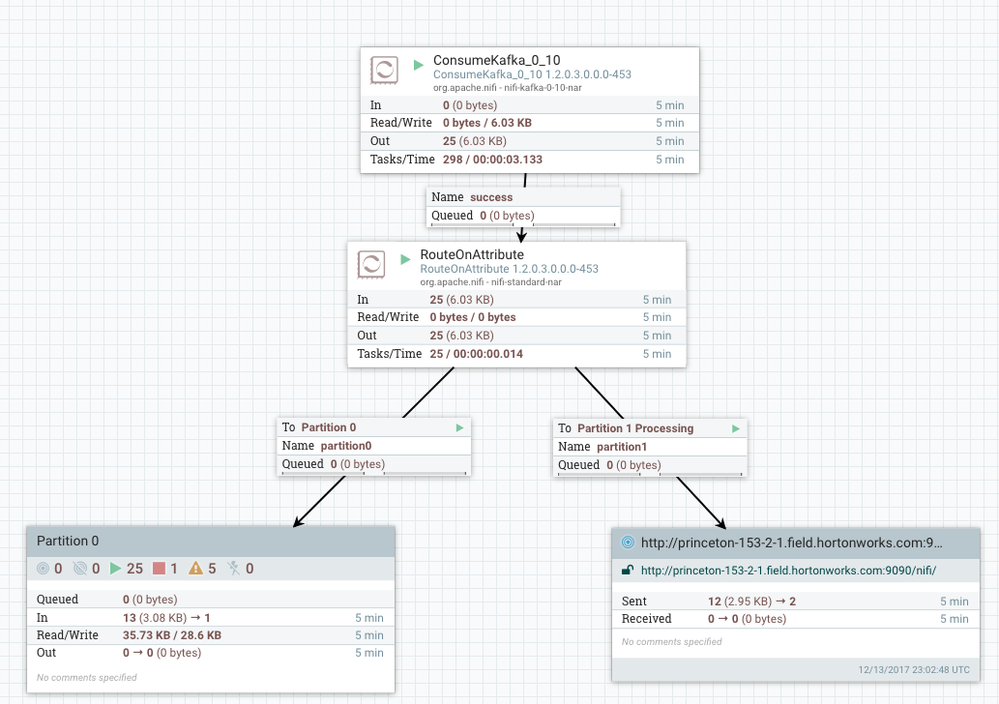

To Route, We Route Each Partition to A Different Processor Group (One Local, The Other Remote)

Let's Store Some Data in HDFS for each Table

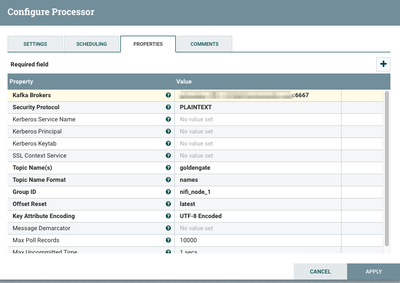

Connect To Kafka and Grab From our Topic

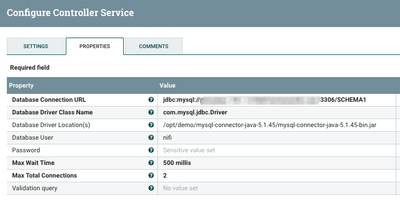

Let's Connect to our JDBC Store

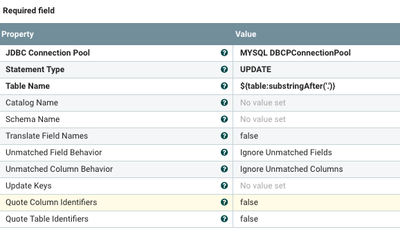

Let's do an Update (Table Name is Dynamic)

The Jolt Processor has an awesome tester for trying out Jolt

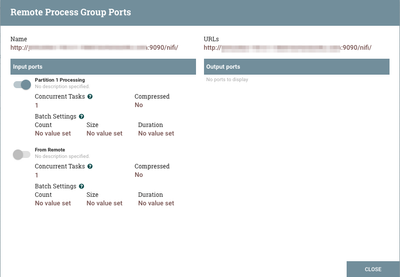

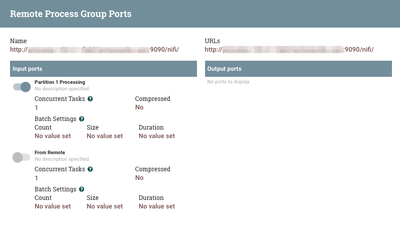

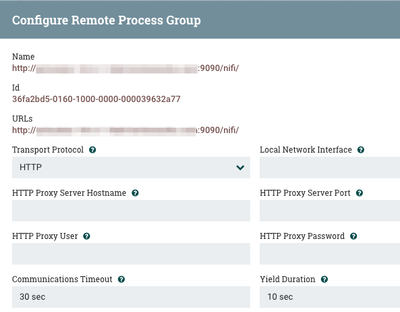

Make sure we connect our remote partitions

Routing From Routing Server (Primary Node)

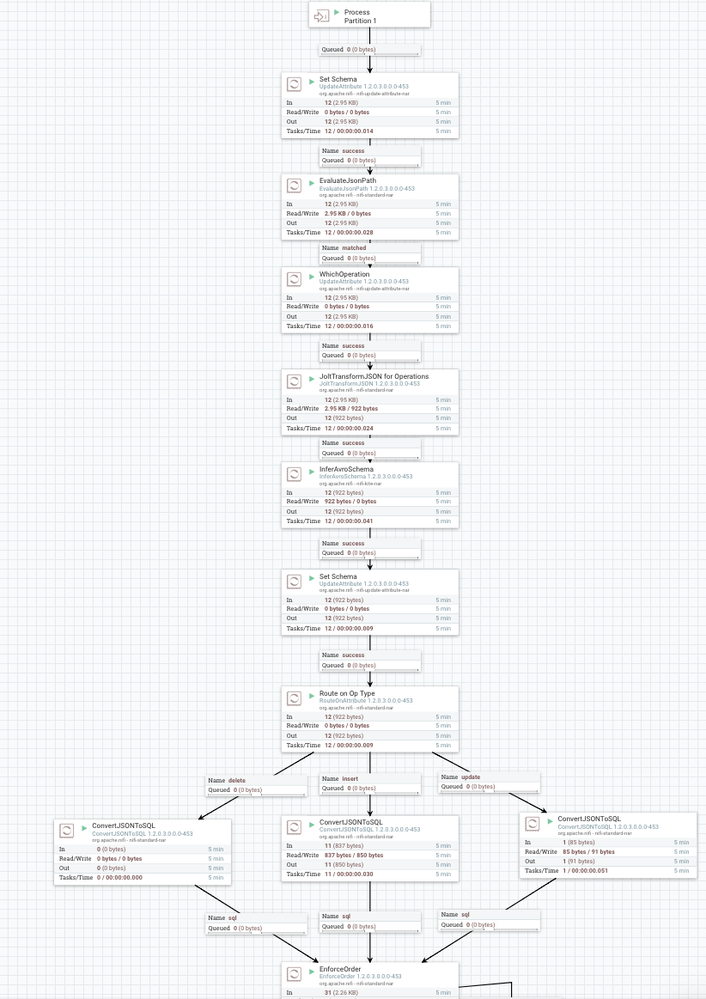

For Processing Partition 0 (Run on the Routing Server)

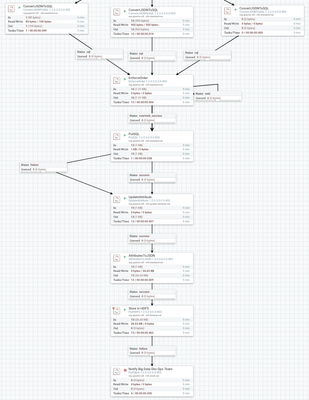

We infer the schema with our InferAvroSchema, so we don't need to know the embedded table layouts before a record arrives. In production it makes sense to know all these in advance and do integration tests and versioning of schemas. This is where Hortonworks Scheme Registry is awesome. We name the avro record after the table dynamically. We can get and store permanent schema in the Hortonworks Schema Registry.

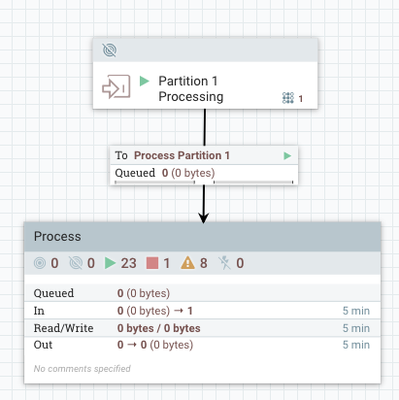

Process The Next Partition 1 .. (We can have one server or cluster per partition)

Process the Partition 1 Kafka Records from the Topic

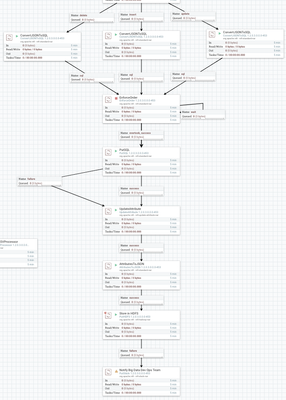

This Flow Will Convert Our Embedded JSON Table Record into New SQL

Input: {"ID":2001,"GD":"F","DPTID":2,"FIRSTNAME":"Tim","LAST":"Spann"}

Output: INSERT INTO THETABLE (ID, GD, DPTID, FIRSTNAME, LAST) VALUES (?, ?, ?, ?, ?)

sql.args.5.value Spann

sql.table THETABLE

With all the field being parameters for a SQL Injection safe parameter based insert, update or delete based on control sent.

Golden Gate Messages

{"table": "SCHEMA1.TABLE7","op_type": "I","op_ts": "2017-11-01 04:31:56.000000","current_ts": "2017-11-01T04:32:04.754000","pos": "00000000310000020884","after": {"ID":1,"CODE": "B","NAME":"STUFF","DESCR" :"Department","ACTIVE":1}}

Using a simple EvaluateJsonPath we pull out these control fields, example: $.before.

The Table Name for ConvertJSONtoSQL: ${table:substringAfter('.')}. This is to remove all leading schema / tablespace name. From the drop down for each of the three we pick either UPDATE, INSERT or DELETE based on the op_type.

We follow this with a PutSQL which will execute on our destination JDBC database sink.

After that I collect all the attributes convert them to a JSON flowfile and save that to HDFS for logging and reporting. This step could be skipped or could be in another format or sent elsewhere.

Control Fields

pos: position

table: table to update in the data warehouse

current_ts: time stamp

op_ts: time stamp

op_type: operation type (I – insert, U- update, D – delete)

Important Apache NiFi System Fields

kafka.offset

kafka.partition

kafka.topic

We can Route and process these for special handling.

To Create HDFS Directories for Changes

su hdfs <br>hdfs dfs -mkdir -p /new/T1 <br>hdfs dfs -mkdir -p /new/T2 <br>hdfs dfs -mkdir -p /poc/T3 hdfs dfs -chmod -R 777 /new <br>hdfs dfs -ls -R /new

To Create a Test Apache Kafka Topic

./bin/kafka-topics.sh --create --zookeeper localhost:2181 --replication-factor 1 --partitions 2 --topic goldengate

Creating a MYSQL Database As Recipient JDBC Server

wget https://dev.mysql.com/get/Downloads/Connector-J/mysql-connector-java-5.1.45.tar.gz mysql create database mydw; CREATE USER 'nifi'@'%' IDENTIFIED BY 'MyPassWordIsSoAwesome!!!!'; GRANT ALL PRIVILEGES ON *.* TO 'nifi'@'%' WITH GRANT OPTION; commit; SHOW GRANTS FOR 'nifi'@'%'; #Create some tables in the database for your records. create table ALOG ( AID VARCHAR(1), TIMESEC INT, SOMEVAL VARCHAR(255), PRIMARY KEY (AID, TIMESEC) );

Jolt Filter

Attribute: afterJolt

${op_type:equalsIgnoreCase("D"):ifElse("none", "after")}

Attribute: beforeJolt

${op_type:equalsIgnoreCase("D"):ifElse("before", "none")}

Jolt Script to Transform JSON

[ {

"operation": "shift",

"spec": {

"${beforeJolt}": {

"*": "&"

},

"${afterJolt}": {

"*": "&"

}

}

}, {

"operation": "shift",

"spec": {

"*": "&"

}

} ]

Primary Node Flow Template

Partition X Node Flow Template

References:

- https://www.xenonstack.com/blog/data-ingestion-using-apache-nifi-for-building-data-lakes-twitter-dat...

- https://community.hortonworks.com/questions/107536/nifi-uneven-distribution-consumekafka.html

- https://community.hortonworks.com/articles/57262/integrating-apache-nifi-and-apache-kafka.html

- https://community.hortonworks.com/articles/80284/hdf-2x-adding-a-new-nifi-node-to-an-existing-secur....

- https://dev.mysql.com/downloads/connector/j/