Community Articles

Find and share helpful community-sourced technical articles.

Turn on suggestions

Auto-suggest helps you quickly narrow down your search results by suggesting possible matches as you type.

Announcements

Now Live: Explore expert insights and technical deep dives on the new Cloudera Community Blogs — Read the Announcement

- Cloudera Community

- Support

- Community Articles

- Install HDB (HAWQ) via Ambari and use Zeppelin for...

Options

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Options

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Guru

Created on 05-19-2016 01:29 AM - edited 08-17-2019 12:21 PM

Deploy HDB (HAWQ) on HDP 2.4.2 and use Zeppelin for Visualization

Goals:

- Install a 4 node cluster running HDP 2.4.2 using Ambari 2.2.2.0 (including Zeppelin and HDB) using Ambari bootstrap via blueprints or Ambari install wizard

- Configure HAWQ for Zeppelin

- Configure Zeppelin for HAWQ

- Run HAWQ queries via Zeppelin

- Access Hive tables from HAWQ

Notes:

- HDB managed via Ambari is only supported from Ambri 2.2.2.0 onwards. Do not attempt using older versions of Ambari

- Single node setup:

- The same steps can be used to install a single node setup - just make sure to change the HAWQ master port from default (5432) - for example: to 10432. Sample script to automate bringing up a single node HDP cluster with HDB/Zeppelin installed is available here

- Prebuilt single node VM for VMWare built with HDP 2.4.2, Ambari 2.2.2.0, HDB 2.0.0.0 and Zeppelin is available for download here (size: 4.84 GB). It was setup using below so once services are up, you can access Zeppelin (on port 9995) and use the existing 'HAWQ' notebook to run HAWQ queries.

- Update (July 10th 2016): An official HDB/HDP sandbox has been posted here (SSH login: root/hawq2016)

Install Ambari 2.2.2.0 and HDB/Zeppelin service definitions

- Bring up 4 VMs imaged with RHEL/CentOS 6.x (e.g. node1-4 in this case)

- On non-ambari nodes (nodes2-4 in this case), install ambari-agents and point them to ambari node (e.g. node1 in this case). For single node setup, this step can be skipped.

export ambari_server=node1 curl -sSL https://raw.githubusercontent.com/seanorama/ambari-bootstrap/master/ambari-bootstrap.sh | sudo -E sh

- On Ambari node (e.g. node1), install ambari-server

export install_ambari_server=true curl -sSL https://raw.githubusercontent.com/seanorama/ambari-bootstrap/master/ambari-bootstrap.sh | sudo -E sh

- Install Zeppelin service definition

yum install -y git git clone https://github.com/hortonworks-gallery/ambari-zeppelin-service.git /var/lib/ambari-server/resources/stacks/HDP/2.4/services/ZEPPELIN sed -i.bak '/dependencies for all/a \ "ZEPPELIN_MASTER-START": ["NAMENODE-START", "DATANODE-START"],' /var/lib/ambari-server/resources/stacks/HDP/2.4/role_command_order.json

- Install Pivotal service definition and repo per HDB doc

- Create staging dir:

mkdir /staging chmod a+rx /staging

- Transfer (e.g. by scp) hdb-2.0.0.0-22126.tar.gz and hdb-ambari-plugin-2.0.0-448.tar.gz from Pivotal Network to /staging dir

- Setup HDB repo and Ambari service definition:

tar -xvzf /staging/hdb-2.0.0.0-*.tar.gz -C /staging/ tar -xvzf /staging/hdb-ambari-plugin-2.0.0-*.tar.gz -C /staging/ yum install -y httpd service httpd start cd /staging/hdb* ./setup_repo.sh cd /staging/hdb-ambari-plugin* ./setup_repo.sh yum install -y hdb-ambari-plugin

- At this point you should see a local repo up at http://node1/HDB/

- Restart Ambari so it now recognizes Zeppelin, HAWQ, PXF services

service ambari-server restart service ambari-agent restart

- Confirm 4 agents were registered and agent is up

curl -u admin:admin -H X-Requested-By:ambari http://localhost:8080/api/v1/hosts service ambari-agent status

Deploy vanilla HDP + Zeppelin + HDB

- Deploy cluster running latest HDP including Zeppelin, HAWQ, PXF. You can either:

- Option 1: login to Ambari UI and use Install Wizard. In this case:

- You will need to set the 'HAWQ System User Password' to any value you like

- Make sure to manually adjust the HDFS settings mentioned in HDB doc

- Make sure that the port specified in 'HAWQ master port' (by default, 5432) is not in use on the host where you will install HAWQ master

- If installing on single node or any other scenario where HAWQ master need to be installed on node where a postgres setup already exists (e.g. if installing HAWQ master on the same host where Ambari is installed) you will need to change the master port from default value (5432)

- On single node setup, 'HAWQ standby master' will not be installed

- Refer to HDB doc for full details

#install bootstrap yum install -y python-argparse cd git clone https://github.com/seanorama/ambari-bootstrap.git #decide which services to deploy and set the number of nodes in the cluster export ambari_services="HDFS MAPREDUCE2 YARN ZOOKEEPER HIVE ZEPPELIN SPARK HAWQ PXF" export host_count=4 cd ./ambari-bootstrap/deploy/

- Create configuration-custom.json with custom configs to be included in the blueprint e.g. below we are including HDFS config customizations for HAWQ. If installing HAWQ master on single node (or host where postgres/Ambari already running) change the hawq_master_address_port from 5432 (e.g. to 10432)

cat << EOF > configuration-custom.json

{

"configurations" : {

"hdfs-site": {

"dfs.allow.truncate": "true",

"dfs.block.access.token.enable": "false",

"dfs.block.local-path-access.user": "gpadmin",

"dfs.client.read.shortcircuit": "true",

"dfs.client.socket-timeout": "300000000",

"dfs.client.use.legacy.blockreader.local": "false",

"dfs.datanode.handler.count": "60",

"dfs.datanode.socket.write.timeout": "7200000",

"dfs.namenode.handler.count": "600",

"dfs.support.append": "true"

},

"hawq-site":{

"hawq_master_address_port":"5432"

},

"hawq-env":{

"hawq_password":"gpadmin"

},

"core-site": {

"ipc.client.connection.maxidletime": "3600000",

"ipc.client.connect.timeout": "300000",

"ipc.server.listen.queue.size": "3300"

}

}

}

EOF- Review the blueprint if you like, otherwise deploy the cluster creation

#optional - if you want to review the BP before deploying it #export deploy=false #./deploy-recommended-cluster.bash #more temp*/blueprint.json #generate BP including customizations and start cluster deployment export deploy=true ./deploy-recommended-cluster.bash

- This will kick off HDP cluster install, including Zeppelin, HAWQ and PXF. You can monitor the install it via Ambari at http://node1:8080

- Once complete, your cluster of HDP + HDB will be up

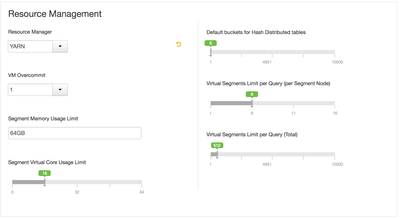

- Note on resource management:

- By default HAWQ runs in standalone resource management mode. To enable HAWQ to run on YARN:

- Ambari > HAWQ > Configs > Settings > Resource Management > Change 'Resource Manager' to YARN

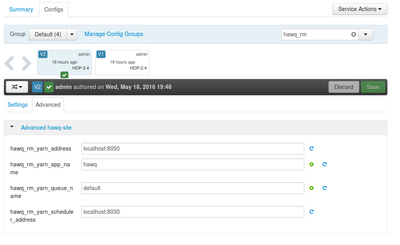

- Other configs you may want to modify when running in YARN resource management mode relate to the YARN RM and scheduler endpoints, plus the YARN queue to start HAWQ AM in:

- Note: there is currently a bug (applicable to deployment via blueprints) where the hawq_rm_yarn_address and hawq_rm_yarn_scheduler_address are not auto-populated to the correct settings for your cluster and need to be manually changed. This issue does not apply when using 'Add service' wizard to add HAWQ.

- By default HAWQ runs in standalone resource management mode. To enable HAWQ to run on YARN:

- Troubleshooting:

- If you get an error saying "Attempted to create a Blueprint which already exists, blueprint_name=recommended", most likely you already have a blueprint called 'recommended'. Try the below to delete it first and re-try:

curl -u admin:admin -H X-Requested-By:ambari -X DELETE http://localhost:8080/api/v1/blueprints/recommended

Configure HAWQ for Zeppelin

- On HAWQ master node (node3 in this exmple), run below to create a separate DB in HAWQ for Zeppelin to access:

- SSH in

- connect to HAWQ via psql shell

- create a new DB

su - gpadmin source /usr/local/hawq/greenplum_path.sh export PGPORT=5432 psql -d postgres create database contoso; \q

- Note: you only need to set PGPORT if HAWQ master was not installed on default port (5432). If you specified a different port, you will need to set this accordingly.

- Also note: if you forgot the HAWQ master password you set earlier and need to reset it, you can do this by psql shell:

ALTER ROLE gpadmin WITH PASSWORD 'gpadmin';

- On HAWQ master node, run below to add the IP of zeppelin node to HAWQ pg_hba.conf conf. This is done to allow Zeppelin to access HAWQ from a different node

- Make sure to replace 172.17.0.2 below with IP of host running Zeppelin

echo "host all all 172.17.0.2/32 trust" >> /data/hawq/master/pg_hba.conf

- Either restart HAWQ via Ambari or, to avoid restarting, you can run the below command as gpadmin user to reload HAWQ configs without starting the components. See doc for more details on the reload option

hawq stop cluster --reload

Configure Zeppelin for HAWQ

- Open Zeppelin interpreter and scroll down to section for psql and make below changes to use zeppelin user to connect to contoso DB on HAWQ master node/port:

- postgresql.url = jdbc:postgresql://node3:5432/contoso

- change the hostname to your hawq master hostname

- if installing on single node, change the port to the updated port (e.g. 10432)

- postgresql.password = gpadmin (or whatever you set it to during installation of HAWQ)

- postgresql.url = jdbc:postgresql://node3:5432/contoso

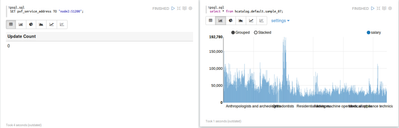

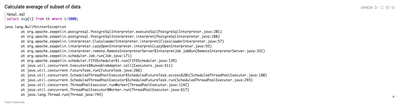

Run HAWQ queries via Zeppelin

- Create a new note in Zeppelin with below cells to create/populate a test table and calculate avg of subset:

%psql.sql create table tt (i int); insert into tt select generate_series(1,1000000);

%psql.sql select avg(i) from tt where i>5000;

Access Hive tables via HAWQ

- It's possible to access Hive tables from HAWQ (via PXF) using both the command-line as well as through Zeppelin.

- First let's import some sample data into Hive

- On Hive host, run below to download csv and create Hive table

#Import sample tables cd /tmp wget https://raw.githubusercontent.com/abajwa-hw/security-workshops/master/data/sample_07.csv beeline -u "jdbc:hive2://localhost:10000/default" use default; CREATE TABLE `sample_07` ( `code` string , `description` string , `total_emp` int , `salary` int ) ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' STORED AS TextFile; load data local inpath '/tmp/sample_07.csv' into table sample_07;

- To access Hive table from HAWQ via psql shell you can run below (replace node2 with host where Hive is running):

su - gpadmin source /usr/local/hawq/greenplum_path.sh export PGPORT=5432 psql -d postgres SET hcatalog_enable = true; SET pxf_service_address TO "node2:51200"; select count(*) from hcatalog.default.sample_07;

- Using Zeppelin you can run similar steps (replace node2 with host where Hive is running):

%psql.sql SET hcatalog_enable = true; SET pxf_service_address TO "node2:51200";

%psql.sql select description, salary from hcatalog.default.sample_07;

(Optional) - Run queries on retail sample data

A retail dataset with sample queries can be found at https://github.com/pivotalsoftware/pivotal-samples

- To import the data and create the hive tables, run below from your host running Hive

cd /tmp git clone https://github.com/pivotalsoftware/pivotal-samples.git cd /tmp/pivotal-samples/sample-data/ sudo -u hdfs ./load_data_to_HDFS.sh sudo -u hdfs hdfs dfs -chmod -R 777 /retail_demo hive -f /tmp/pivotal-samples/hive/create_hive_tables.sql

- Now you can run the sample queries via Zeppelin:

- Top 10 categories in terms of items sold for all time

%psql.sql

SELECT product_id

, product_category_id

, product_count

, category_rank

FROM (SELECT product_id, product_category_id

, SUM(item_quantity::int) AS product_count

, row_number() OVER (PARTITION BY product_category_id ORDER BY SUM(item_quantity::int) DESC) AS category_rank

FROM hcatalog.retail_demo.order_lineitems_hive

GROUP BY product_id, product_category_id

) AS lineitems

WHERE category_rank <= 10

ORDER BY product_category_id, category_rank

;- Customers first and last order ID/date

%psql.sql

SELECT CASE WHEN order_datetime::timestamp < timestamp '2010-10-08' THEN date_trunc('day', order_datetime::timestamp + interval ' 1 week')

ELSE date_trunc('day', order_datetime::timestamp)

END::date AS order_day

, SUM(CASE WHEN order_datetime >= timestamp '2010-01-08' THEN 1 ELSE 0 END) AS num__orders_current

, SUM(CASE WHEN order_datetime < timestamp '2010-01-08' THEN 1 ELSE 0 END) AS num__orders_last_week

FROM hcatalog.retail_demo.order_lineitems_hive

WHERE order_datetime BETWEEN timestamp '2010-10-01' AND timestamp '2010-10-15 23:59:59'

GROUP BY 1

ORDER BY 1

;- Top 10 categories in terms of items sold for all time

%psql.sql

SELECT product_id

, product_category_id

, product_count

, category_rank

FROM (SELECT product_id, product_category_id

, SUM(item_quantity::int) AS product_count

, row_number() OVER (PARTITION BY product_category_id ORDER BY SUM(item_quantity::int) DESC) AS category_rank

FROM hcatalog.retail_demo.order_lineitems_hive

GROUP BY product_id, product_category_id

) AS lineitems

WHERE category_rank <= 10

ORDER BY product_category_id, category_rank

;Troubleshooting

- When trying to run HAWQ queries from Zeppelin, if you get java.lang.NullPointerException as below:

- Double check the psql interpreter settings as mentioned above and restart the interpreter

- Make sure postgresql.user and postgresql.password are correct (if needed, reset the gpadmin password using command mentioned above)

- Make sure postgresql.url is referencing

- correct hostname and port for HAWQ master

- valid database

- Double check the psql interpreter settings as mentioned above and restart the interpreter

- If running in YARN mode and the HAWQ queries are hanging, it's possible that there that the HAWQ YARN Application master does not have sufficient resources.

- You can confirm this by opening the resource manager UI (Ambari > YARN > Quicklinks) and see if any HAWQ jobs are sitting in 'Accepted' state (instead of 'Running').

- You can also confirm by checking the latest HAWQ master log (under /data/hawq/master/pg_log/hawq*.log): it may show messages like "fail to allocate from YARN RM after retry several times"

- If there are existing jobs running on YARN (e.g. Spark, Hive etc), you may want to wait for them to complete or kill them and re-try

5,699 Views

Comments

Master Mentor

Created on 05-20-2016 03:52 AM

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content