- Installing and Configuring HBase Indexer in a Kerb...

Created on 08-10-2016 01:41 AM - edited 08-17-2019 10:52 AM

Author: Gerdan Rezende dos Santos - https://community.hortonworks.com/users/12342/gerdan.html

Co-author: Pedro Drummond - https://community.hortonworks.com/users/10987/pedrodrummond.html

1 - PREREQUISITES

Before you begin, you need to have:

1. HDP 2.4 kerberized cluster;

2. Configured repos for HDPSearch;

3. Java + JCE installed with defined JAVA_HOME;

4. Knowledge of your Kerberos REALM.

2 - KERBEROS CONFIGURATION

2.1 - “Principal” Definition

A principal is a Kerberos identity: an address that names a user or a service.

This address is commonly divided into 3 parts:

The first part contains the username or the service name;

The second part contains the hostname when defining a service, and is left blank when defining a user;

The third part contains the REALM.

Example: user1/solr.community.hortonworks.com@LOCAL.COM
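The three-part layout described above can be sketched with plain shell parameter expansion. The function name split_principal is hypothetical and exists only for this illustration; it is not part of any Kerberos tool.

```shell
#!/bin/sh
# Split a Kerberos principal (primary[/instance]@REALM) into its three parts.
# split_principal is a hypothetical helper written for this example.
split_principal() {
  principal=$1
  realm=${principal##*@}          # text after the last "@"
  name=${principal%@*}            # text before the last "@"
  primary=${name%%/*}             # text before the first "/"
  case $name in
    */*) instance=${name#*/} ;;   # service principals carry a hostname
    *)   instance= ;;             # user principals leave this part empty
  esac
  printf 'primary=%s instance=%s realm=%s\n' "$primary" "$instance" "$realm"
}

split_principal "user1/solr.community.hortonworks.com@LOCAL.COM"
split_principal "user1@LOCAL.COM"
```

The first call prints the hostname as the instance part, while the second leaves it empty, which mirrors the difference between a service principal and a user principal.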

2.2 - Installing Kerberos Clients for HDPSearch Host

For RedHat or CentOS:

yum -y install krb5-libs krb5-auth-dialog krb5-workstation

Then copy the file /etc/krb5.conf from the Kerberos server to the client host.
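Once krb5.conf is in place, it can be useful to confirm which REALM the client will use by default. The following is a minimal sketch that assumes the file contains a default_realm entry; default_realm_of is a hypothetical helper name.

```shell
#!/bin/sh
# Print the default_realm value from a krb5.conf-style file.
# default_realm_of is a hypothetical helper written for this example.
default_realm_of() {
  awk -F= '/^[[:space:]]*default_realm[[:space:]]*=/ {
    gsub(/[[:space:]]/, "", $2)   # strip spaces around the value
    print $2
    exit                          # the first match is enough
  }' "$1"
}

# Usage on the HDPSearch host:
#   default_realm_of /etc/krb5.conf
```

In the examples of this article the printed value would be expected to be LOCAL.COM.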

2.3 - Creating the Principal WITH Keytab (Authentication through keytab) as Root

Authenticate at the kadmin console with a user that has administrative permission. In this example we’re using “root/admin@LOCAL.COM”.

[root@host ~] kadmin -p root/admin@LOCAL.COM
Authenticating as principal root/admin@LOCAL.COM with password.
Password for root/admin@LOCAL.COM: [enter password]

At this point you’ll be at the kadmin console. To add a user, use the command add_principal or its short version addprinc. Specify the option -randkey when creating the user: a password serves no purpose because authentication will go through the keytab generated for that user.

Now create the hbase and HTTP principals for the host where HDPSearch is located, then export both of them to the same keytab file. In this example our host is solr.community.hortonworks.com.

kadmin: addprinc -randkey hbase/solr.community.hortonworks.com@LOCAL.COM
WARNING: no policy specified for hbase/solr.community.hortonworks.com@LOCAL.COM; defaulting to no policy
Principal "hbase/solr.community.hortonworks.com@LOCAL.COM" created.
kadmin: addprinc -randkey HTTP/solr.community.hortonworks.com@LOCAL.COM
WARNING: no policy specified for HTTP/solr.community.hortonworks.com@LOCAL.COM; defaulting to no policy
Principal "HTTP/solr.community.hortonworks.com@LOCAL.COM" created.
#STILL AT THE KADMIN CONSOLE, EXPORT BOTH KEYTABS TO THE SAME FILE.
kadmin: xst -k /etc/security/keytabs/http.hbase.keytab HTTP/solr.community.hortonworks.com hbase/solr.community.hortonworks.com
Entry for principal HTTP/solr.community.hortonworks.com with kvno 8, encryption type aes256-cts-hmac-sha1-96 added to keytab WRFILE:/etc/security/keytabs/http.hbase.keytab.
Entry for principal HTTP/solr.community.hortonworks.com with kvno 8, encryption type aes128-cts-hmac-sha1-96 added to keytab WRFILE:/etc/security/keytabs/http.hbase.keytab.
Entry for principal HTTP/solr.community.hortonworks.com with kvno 8, encryption type des3-cbc-sha1 added to keytab WRFILE:/etc/security/keytabs/http.hbase.keytab.
Entry for principal HTTP/solr.community.hortonworks.com with kvno 8, encryption type arcfour-hmac added to keytab WRFILE:/etc/security/keytabs/http.hbase.keytab.
Entry for principal HTTP/solr.community.hortonworks.com with kvno 8, encryption type des-hmac-sha1 added to keytab WRFILE:/etc/security/keytabs/http.hbase.keytab.
Entry for principal HTTP/solr.community.hortonworks.com with kvno 8, encryption type des-cbc-md5 added to keytab WRFILE:/etc/security/keytabs/http.hbase.keytab.
Entry for principal hbase/solr.community.hortonworks.com with kvno 5, encryption type aes256-cts-hmac-sha1-96 added to keytab WRFILE:/etc/security/keytabs/http.hbase.keytab.
Entry for principal hbase/solr.community.hortonworks.com with kvno 5, encryption type aes128-cts-hmac-sha1-96 added to keytab WRFILE:/etc/security/keytabs/http.hbase.keytab.
Entry for principal hbase/solr.community.hortonworks.com with kvno 5, encryption type des3-cbc-sha1 added to keytab WRFILE:/etc/security/keytabs/http.hbase.keytab.
Entry for principal hbase/solr.community.hortonworks.com with kvno 5, encryption type arcfour-hmac added to keytab WRFILE:/etc/security/keytabs/http.hbase.keytab.
Entry for principal hbase/solr.community.hortonworks.com with kvno 5, encryption type des-hmac-sha1 added to keytab WRFILE:/etc/security/keytabs/http.hbase.keytab.
Entry for principal hbase/solr.community.hortonworks.com with kvno 5, encryption type des-cbc-md5 added to keytab WRFILE:/etc/security/keytabs/http.hbase.keytab.
kadmin:

To generate the keytab, use the command xst as shown above. The option -k sets the path of the file where the keytab(s) will be written, and the parameters that follow name the principal(s) exported to that keytab.
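After the export, one way to confirm that both principals ended up in the same file is to list the keytab with klist -kt and reduce the output to distinct principal names. This is a sketch that assumes the plain -kt output, where the principal is the last field on each entry line; keytab_principals is a hypothetical helper name.

```shell
#!/bin/sh
# List the distinct principals found in `klist -kt` output read from stdin.
# Assumes the principal (name@REALM) is the last field of each entry line.
keytab_principals() {
  awk '$NF ~ /@/ { print $NF }' | sort -u
}

# Usage:
#   klist -kt /etc/security/keytabs/http.hbase.keytab | keytab_principals
```

For the keytab created above, the list would be expected to contain one HTTP/ principal and one hbase/ principal for solr.community.hortonworks.com.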

3 - HDPSEARCH INSTALLATION

yum -y install lucidworks-hdpsearch
chown -R solr:solr /opt/lucidworks-hdpsearch

The next steps must be executed with the solr user.

su solr

4 - CONFIGURING SOLR TO STORE INDEX FILES AT HDFS (Optional)

For the lab we’ll use the schemaless setup that comes with Solr;

· A schemaless setup is a set of Solr attributes that allows indexing documents without defining a schema in advance.

· An example of this setup can be found at /opt/lucidworks-hdpsearch/solr/server/solr/configsets/data_driven_schema_configs.

Create the necessary directories at HDFS:

sudo -u hdfs hadoop fs -mkdir /user/solr
sudo -u hdfs hadoop fs -chown solr /user/solr

Edit the following file:

vi /opt/lucidworks-hdpsearch/solr/server/solr/configsets/data_driven_schema_configs_hdfs/conf/solrconfig.xml

Replace the section:

<directoryFactory name="DirectoryFactory"

class="${solr.directoryFactory:solr.NRTCachingDirectoryFactory}">

</directoryFactory>

with:

<directoryFactory name="DirectoryFactory" class="solr.HdfsDirectoryFactory">
  <str name="solr.hdfs.home">hdfs://sandbox.hortonworks.com/user/solr</str>
  <bool name="solr.hdfs.blockcache.enabled">true</bool>
  <int name="solr.hdfs.blockcache.slab.count">1</int>
  <bool name="solr.hdfs.blockcache.direct.memory.allocation">false</bool>
  <int name="solr.hdfs.blockcache.blocksperbank">16384</int>
  <bool name="solr.hdfs.blockcache.read.enabled">true</bool>
  <bool name="solr.hdfs.blockcache.write.enabled">false</bool>
  <bool name="solr.hdfs.nrtcachingdirectory.enable">true</bool>
  <int name="solr.hdfs.nrtcachingdirectory.maxmergesizemb">16</int>
  <int name="solr.hdfs.nrtcachingdirectory.maxcachedmb">192</int>
</directoryFactory>

Change the lockType to:

<lockType>hdfs</lockType>

Save the file and exit.
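Before moving on, a quick text check can confirm that both edits were saved. The sketch below only looks for the two literal strings used in this section; uses_hdfs_index is a hypothetical helper name, and the check does not validate the XML itself.

```shell
#!/bin/sh
# Succeed only if the given solrconfig.xml selects HdfsDirectoryFactory
# and sets the hdfs lock type, as described in this section.
uses_hdfs_index() {
  grep -q 'class="solr.HdfsDirectoryFactory"' "$1" &&
    grep -q '<lockType>hdfs</lockType>' "$1"
}

# Usage:
#   uses_hdfs_index /opt/lucidworks-hdpsearch/solr/server/solr/configsets/data_driven_schema_configs_hdfs/conf/solrconfig.xml \
#     && echo "HDFS index storage configured"
```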

5 - INITIALIZE SOLR IN CLOUD MODE

mkdir -p ~/solr-cores/core1
mkdir -p ~/solr-cores/core2
cp /opt/lucidworks-hdpsearch/solr/server/solr/solr.xml ~/solr-cores/core1
cp /opt/lucidworks-hdpsearch/solr/server/solr/solr.xml ~/solr-cores/core2
/opt/lucidworks-hdpsearch/solr/bin/solr start -cloud -p 8983 -z zookeeper.hortonworks.com:2181 -s ~/solr-cores/core1
/opt/lucidworks-hdpsearch/solr/bin/solr restart -cloud -p 8984 -z zookeeper.hortonworks.com:2181 -s ~/solr-cores/core2

6 - CREATE AND VALIDATE A COLLECTION NAMED “LABS” WITH 2 SHARDS AND REPLICATION FACTOR OF 2

/opt/lucidworks-hdpsearch/solr/bin/solr create -c labs -d /opt/lucidworks-hdpsearch/solr/server/solr/configsets/data_driven_schema_configs_hdfs/conf -n labs -s 2 -rf 2

Using the browser, visit http://solr.hortonworks.com:8983/solr/#/~cloud. You should see the collection “labs” with 2 shards, each one of them with a replication factor of 2.
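The same validation can be done from a terminal through the Solr Collections API, whose CLUSTERSTATUS action reports shards and replicas per collection. The sketch below only builds the request URL; clusterstatus_url is a hypothetical helper name, and the host and port are the ones assumed in this article.

```shell
#!/bin/sh
# Build the Collections API CLUSTERSTATUS URL for one collection.
# $1 = Solr host:port, $2 = collection name
clusterstatus_url() {
  printf 'http://%s/solr/admin/collections?action=CLUSTERSTATUS&collection=%s&wt=json\n' "$1" "$2"
}

# Usage:
#   curl -s "$(clusterstatus_url solr.hortonworks.com:8983 labs)"
```

The JSON response would be expected to list two shards for “labs”, each with two replicas.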

7 - HBASE & HBASE-INDEXER CONFIGURATION

To point the HBase-Indexer at ZooKeeper, we need to configure the hbase-indexer-site.xml file.

At the HBase-Indexer host, open the file located at:

vi /opt/lucidworks-hdpsearch/hbase-indexer/conf/hbase-indexer-site.xml

This is an example of how it should look:

<?xml version="1.0"?>
<configuration>
  <property>
    <name>hbaseindexer.zookeeper.connectstring</name>
    <value>zookeeperHOST1:2181,zookeeperHOST2:2181</value>
  </property>
  <property>
    <name>hbase.zookeeper.quorum</name>
    <value>zookeeperHOST1,zookeeperHOST2</value>
  </property>
</configuration>
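Note that the two values above use different formats: the connect string carries a port on every host, while the quorum is a bare host list. A small sketch for producing both from the same host names follows; the function names are hypothetical and the host names are the placeholders from the example.

```shell
#!/bin/sh
# Join ZooKeeper hosts as "host:port,host:port" (connect string format).
# $1 = port, remaining arguments = host names
zk_connect_string() {
  port=$1
  shift
  out=
  for host in "$@"; do
    out="${out:+$out,}$host:$port"
  done
  printf '%s\n' "$out"
}

# Join ZooKeeper hosts as "host,host" (quorum format, no ports).
zk_quorum() {
  out=
  for host in "$@"; do
    out="${out:+$out,}$host"
  done
  printf '%s\n' "$out"
}

zk_connect_string 2181 zookeeperHOST1 zookeeperHOST2   # value for hbaseindexer.zookeeper.connectstring
zk_quorum zookeeperHOST1 zookeeperHOST2                # value for hbase.zookeeper.quorum
```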

Copy the libraries required to activate replication to the HBase server:

scp user@HOST_INDEXER:/opt/lucidworks-hdpsearch/hbase-indexer/lib/hbase-sep* user@HOST_HBASE:/usr/hdp/current/hbase-master/lib/

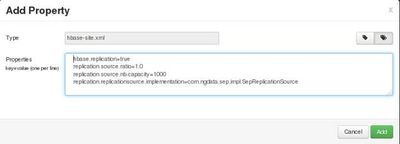

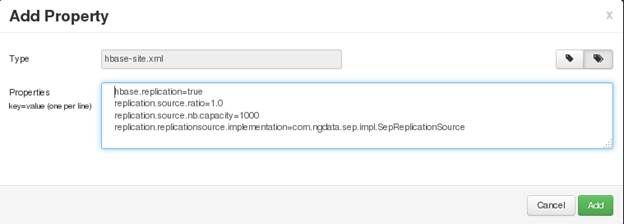

In the Ambari user interface (version 2.2.2.0), go to HBase -> Configs -> Custom hbase-site, click “Add Property ...” and add the following properties:

hbase.replication=true
replication.source.ratio=1.0
replication.source.nb.capacity=1000
replication.replicationsource

Click the “Save” button. Once the above properties are entered, it should look like the screen below:

Restart the services requested by Ambari.

Copy the hbase-site.xml file to the HBase-Indexer configuration directory:

cp /etc/hbase/conf/hbase-site.xml /opt/lucidworks-hdpsearch/hbase-indexer/conf/

8 - Start the HBase-Indexer with the kerberized Cluster

Before anything else, you’ll need to replace the zkcli.sh file that comes with hbase-indexer. The bundled script prevents connecting to the kerberized HBase; once it is replaced, you can listen to the replication stream and thus generate JSON documents for Solr.

cp /usr/hdp/2.4.2.0-258/zookeeper/bin/zkCli.sh /opt/lucidworks-hdpsearch/solr/server/scripts/cloud-scripts/zkcli.sh

Create a JAAS file that specifies the Kerberos principal and keytab used to authenticate:

vim /opt/lucidworks-hdpsearch/hbase-indexer/conf/hbase_indexer.jaas

Client {
  com.sun.security.auth.module.Krb5LoginModule required
  useKeyTab=true
  storeKey=true
  useTicketCache=false
  // user's keytab
  keyTab="/etc/security/keytabs/hbase.service.keytab"
  // user (principal)
  principal="hbase/All_FQDN@LOCAL.COM";
};

Alter the new zkcli.sh to use this JAAS file:

vim /opt/lucidworks-hdpsearch/solr/server/scripts/cloud-scripts/zkcli.sh
#Add the following JVM option to the script so that it authenticates with the JAAS file
-Djava.security.auth.login.config=/opt/lucidworks-hdpsearch/hbase-indexer/conf/hbase_indexer.jaas

The result is:

All set! Now initialize the HBase-Indexer service:

/opt/lucidworks-hdpsearch/hbase-indexer/bin/hbase-indexer server

9 - Create an Index and Test the Solution

Access HBase and create a table:

hbase(main):001:0> create 'indexdemo-user', { NAME => 'info', REPLICATION_SCOPE => '1' }

Create an XML file to build the bridge between the contents of the HBase table and what we’re indexing in Solr. You can find further examples and a read-me file at /opt/lucidworks-hdpsearch/hbase-indexer/demo.

vi /opt/lucidworks-hdpsearch/hbase-indexer/indexdemo-indexer.xml

<?xml version="1.0"?>
<indexer table="indexdemo-user">
  <field name="firstname_s" value="info:firstname"/>
  <field name="lastname_s" value="info:lastname"/>
  <field name="age_i" value="info:age" type="int"/>
</indexer>

Add an index:

/opt/lucidworks-hdpsearch/hbase-indexer/bin/hbase-indexer add-indexer -n indexLab -c /opt/lucidworks-hdpsearch/hbase-indexer/indexdemo-indexer.xml -cp solr.zk=zk01.hortonworks.com,zk02.hortonworks.com,zk03.hortonworks.com -cp solr.collection=labs -z zk01.hortonworks.com:2181

Check if it was created and if it runs:

/opt/lucidworks-hdpsearch/hbase-indexer/bin/hbase-indexer list-indexers -z zk01.hortonworks.com:2181

indexLab
  + Lifecycle state: ACTIVE
  + Incremental indexing state: SUBSCRIBE_AND_CONSUME
  + Batch indexing state: INACTIVE
  + SEP subscription ID: Indexer_indexLab
  + SEP subscription timestamp: 2016-08-05T16:14:12.305-03:00
  + Connection type: solr
  + Connection params:
    + solr.zk = zk01.hortonworks.com,zk02.hortonworks.com,zk03.hortonworks.com
    + solr.collection = labs
  + Indexer config:
      218 bytes, use -dump to see content
  + Indexer component factory: com.ngdata.hbaseindexer.conf.DefaultIndexerComponentFactory
  + Additional batch index CLI arguments:
      (none)
  + Default additional batch index CLI arguments:
      (none)
  + Processes
    + 1 running processes
    + 0 failed processes
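The two lines worth checking in that report are the lifecycle state and the failed process count. As a rough sketch, the check can be scripted by piping the report into a small filter; indexer_healthy is a hypothetical helper name, and the check is coarse when several indexers are listed at once.

```shell
#!/bin/sh
# Read `hbase-indexer list-indexers` output on stdin and succeed only if it
# shows an ACTIVE lifecycle state and reports 0 failed processes.
indexer_healthy() {
  report=$(cat)
  printf '%s\n' "$report" | grep -q 'Lifecycle state: ACTIVE' &&
    printf '%s\n' "$report" | grep -q '[^0-9]0 failed processes'
}

# Usage:
#   /opt/lucidworks-hdpsearch/hbase-indexer/bin/hbase-indexer list-indexers \
#     -z zk01.hortonworks.com:2181 | indexer_healthy && echo "indexLab looks healthy"
```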

Insert some records into HBase so that you can verify them in Solr:

put 'indexdemo-user', 'row1', 'info:firstname', 'Gerdan'
put 'indexdemo-user', 'row1', 'info:lastname', 'Santos'
put 'indexdemo-user', 'row2', 'info:firstname', 'Pedro'
put 'indexdemo-user', 'row2', 'info:lastname', 'Drummond'
put 'indexdemo-user', 'row3', 'info:firstname', 'Cleire'
put 'indexdemo-user', 'row3', 'info:lastname', 'Ritfield'
put 'indexdemo-user', 'row4', 'info:firstname', 'Horton'
put 'indexdemo-user', 'row4', 'info:lastname', 'Works'

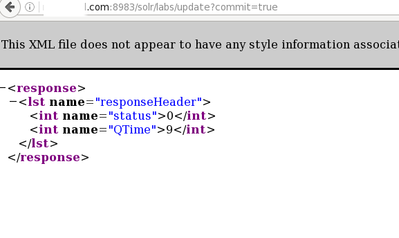

Perform a commit in Solr via terminal (very important):

#Via terminal
curl "http://solr.hortonworks.com:8983/solr/labs/update?commit=true"

Or via browser:

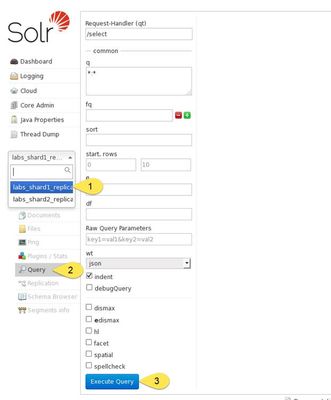

Verify in Solr that the indexed data appears:
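From a terminal, one way to do this is to query the “labs” collection for all documents and read the numFound value from the JSON response. The sketch below assumes the standard select handler with wt=json; num_found is a hypothetical helper name.

```shell
#!/bin/sh
# Extract the numFound value from a Solr JSON select response read on stdin.
num_found() {
  # Drop spaces and newlines first so indented responses parse the same way.
  tr -d ' \n' | sed -n 's/.*"numFound":\([0-9][0-9]*\).*/\1/p'
}

# Usage:
#   curl -s "http://solr.hortonworks.com:8983/solr/labs/select?q=*:*&wt=json" | num_found
```

If all four rows inserted above were indexed and committed, the command would be expected to print 4.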

There you go! The HBase indexer with a kerberized cluster is now working:

10 - References

https://web.mit.edu/kerberos/krb5-1.12/doc/admin/admin_commands/kadmin_local.html

Created on 10-23-2017 08:43 AM


In HDP 2.5, users will have to uncomment two lines in bin/hbase-indexer to make it work!

HBASE_INDEXER_OPTS="$HBASE_INDEXER_OPTS -Dlww.jaas.file=/opt/lucidworks-hdpsearch/hbase-indexer/conf/hbase_indexer.jaas"
HBASE_INDEXER_OPTS="$HBASE_INDEXER_OPTS -Dlww.jaas.appname=Client" #Client is the name given in this tutorial to the JAAS section

Created on 12-29-2017 02:35 AM


Tip! 🙂

Please make sure to add the line below to hbase-indexer-env.sh in order to avoid the error org.apache.zookeeper.KeeperException$NoAuthException: KeeperErrorCode = NoAuth for /hbase-secure/...

HBASE_INDEXER_OPTS="$HBASE_INDEXER_OPTS -Djava.security.auth.login.config=<path-of-indexer-jaas-file>"