Created on 01-02-2020 10:43 PM - edited 09-16-2022 01:45 AM

Introduction to Hive and LLAP

Hadoop is the backbone of big data applications. Its hallmark is the ability to process large volumes of data efficiently on a cluster of commodity servers. Apache Hive is the open-source data warehouse system built on top of Hadoop for querying and analyzing large datasets stored in Hadoop files. One of the greatest challenges Hive faced was its perceived slow performance. Hive on Tez was a leap in the right direction: it performed better than its predecessor, the MapReduce (MR) execution engine. LLAP, which stands for Live Long and Process, is essentially the next-generation execution engine, with better performance and response times. LLAP is very similar to Hive; the main difference is that the worker tasks run inside LLAP daemons, in small computational units called executors, whereas in plain Hive these tasks are run by containers. The LLAP daemons are persistent and execute fragments of Hive queries.

What are the core components of Hive LLAP?

Hiveserver2Interactive Process: In HDP 2.6, the code of the Hiveserver2Interactive process is based on Hive2 and differs in some major ways from the codebase of HiveServer2, which is based on Hive1. From HDP 3.0 onward, HiveServer2Interactive and HiveServer2 are the same.

SliderAM: Slider Application Master

LLAP Daemons: These are big fat Yarn containers with an application ID.

YARN Resources: LLAP needs a specially allocated Yarn queue to run. In the example shown below, we are using a dedicated queue called LLAP. The queue is created in Yarn Queue Manager, and suitable resources are allocated to it.

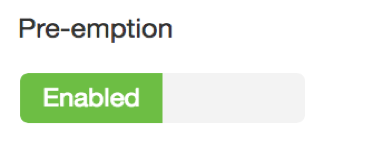

We will have to enable Yarn Pre-emption for LLAP to work.

Ambari → Yarn

Fig 1

Yarn preemption is enabled to make sure that the queue has a guaranteed level of cluster resources.

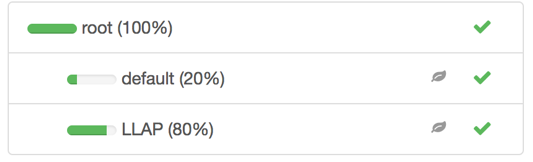

In the present example, as illustrated below, we are allocating 80% of the cluster resources to the queue LLAP.

Screenshot from Yarn Queue Manager.

Fig 2

There is no universally right number for the resource allocation; we need to weigh the resources LLAP will need against the maximum resources available in the cluster. The author's test cluster runs no other applications and serves only as a test box for LLAP, so the queue allocation is intentionally skewed toward LLAP. (It should also be noted that the memory configurations demonstrated in this document may not be optimal. Readers are advised to test and adjust the configurations to suit their environment and workload.) LLAP is, in general, a resource-intensive service, and there is no single answer to the question of how many resources it needs in a given cluster.

Yarn Min and Max Containers and its Impact on LLAP Daemons.

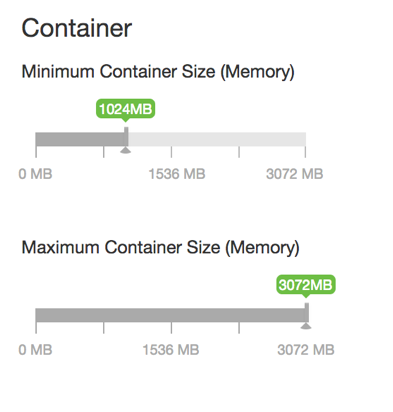

Please navigate to Ambari → Yarn → Configs.

Fig 3

As illustrated in Fig 3, we have set the Yarn Minimum Container Size to 1GB and the Yarn Maximum Container Size to 3GB. The LLAP daemon size should be set between the Yarn minimum and maximum container sizes. Failure to do so results in an error similar to the one below, which a few customers have reported.

--------------------------------------------------------------------------------------------------------------------------------

WARN cli.LlapServiceDriver: Ignoring unknown llap server parameter: [hive.aux.jars.path]

Failed: Working memory (Xmx=60.80GB) + cache size (42.00GB) has to be smaller than the container sizing (64.00GB)

java.lang.IllegalArgumentException: Working memory (Xmx=60.80GB) + cache size (42.00GB) has to be smaller than the container sizing (64.00GB)

at com.google.common.base.Preconditions.checkArgument(Preconditions.java:92)

at org.apache.hadoop.hive.llap.cli.LlapServiceDriver.run(LlapServiceDriver.java:256)

at org.apache.hadoop.hive.llap.cli.LlapServiceDriver.main(LlapServiceDriver.java:113)

--------------------------------------------------------------------------------------------------------------------------------

Fig 4

The error stack above also shows a second constraint: the heap size (Xmx) plus the cache size must be smaller than the container size. In this message, 60.80GB of heap plus 42.00GB of cache exceeds the 64.00GB container.
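The two sizing rules described in this section can be checked before starting LLAP. The following is a minimal sketch in Python, not the actual logic of LlapServiceDriver; the function name `check_llap_sizing` and its parameters are hypothetical, and the Yarn bounds in the example call are assumed values chosen only so that the heap and cache figures from the error message can be tested.

```python
def check_llap_sizing(container_gb, xmx_gb, cache_gb, yarn_min_gb, yarn_max_gb):
    """Return a list of sizing problems; an empty list means the sizes are consistent."""
    problems = []
    # Rule 1: the daemon container must fit between the Yarn min and max container sizes.
    if container_gb < yarn_min_gb:
        problems.append("daemon container is smaller than the Yarn minimum container size")
    if container_gb > yarn_max_gb:
        problems.append("daemon container is larger than the Yarn maximum container size")
    # Rule 2: heap (Xmx) plus cache must be smaller than the daemon container.
    if xmx_gb + cache_gb >= container_gb:
        problems.append("heap (Xmx) + cache must be smaller than the daemon container")
    return problems


# Heap, cache and container values come from the error message above;
# the Yarn bounds (1 GB and 64 GB) are assumptions for illustration.
for problem in check_llap_sizing(64.0, 60.8, 42.0, yarn_min_gb=1.0, yarn_max_gb=64.0):
    print(problem)
```

With the values from the error message, only the second rule is reported, which matches the exception text.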

Queue Size Calculation for the YARN Queue LLAP:

Size of Slider AM + (n * Size of LLAP Daemons) + Tez-AM Coordinators
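As a worked example of the formula above, here is a small Python sketch. The function name and all the sizes in the example call are hypothetical, and the sketch assumes that the "Tez-AM Coordinators" term means the number of coordinators multiplied by the size of each one.

```python
def llap_queue_size_mb(slider_am_mb, daemon_mb, num_daemons, tez_am_mb, num_tez_ams):
    """Minimum queue size: Slider AM + (n * LLAP daemon size) + Tez AM coordinators."""
    return slider_am_mb + num_daemons * daemon_mb + num_tez_ams * tez_am_mb


# Illustrative sizes only: a 1 GB Slider AM, two 3 GB daemons, two 1 GB Tez AMs.
print(llap_queue_size_mb(1024, 3072, 2, 1024, 2))  # 9216
```

The queue capacity configured in Yarn Queue Manager should be at least this large, otherwise some of the containers cannot be scheduled.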

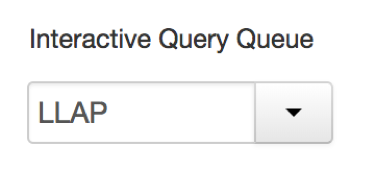

Upon successfully allocating the queue resources for the LLAP queue, we need to make sure that the LLAP applications are run in the LLAP queue. This is a manual process and should be done via Ambari.

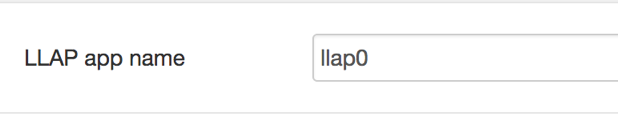

Screenshot from Ambari → Hive.

Fig 5

NB: This will specify that the LLAP daemons and the container will be running in the LLAP queue.

Fig 6

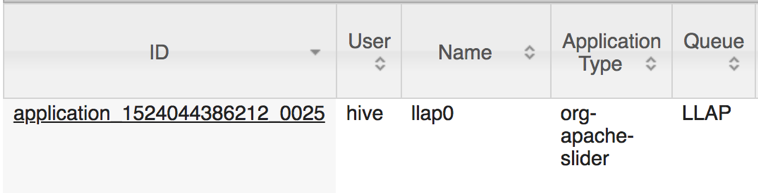

LLAP daemons are launched as Yarn Applications with the aid of Apache Slider.

A screenshot from Resource Manager UI:

Fig 7

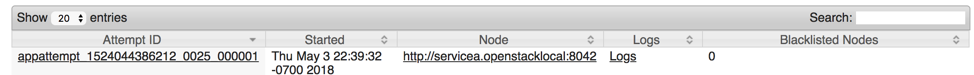

The application shown above is the Slider process that runs the LLAP daemons. Clicking the application ID opens the following page.

Fig 8

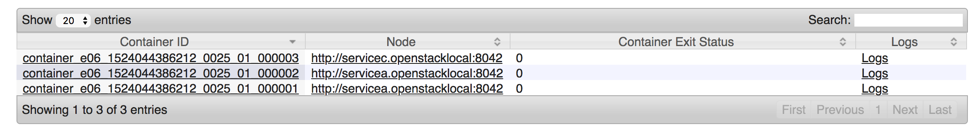

Upon double-clicking the appattempt, a new page opens up. For the scope of the document, only certain sections of the RM UI page will be extracted and presented.

Fig 9

We see 3 containers here.

- container_e06_1524044386212_0025_01_000001

- container_e06_1524044386212_0025_01_000002

- container_e06_1524044386212_0025_01_000003

The first container belongs to the Slider AM. The size of the Slider AM is determined by the configuration shown in the picture below.

Fig 10. A

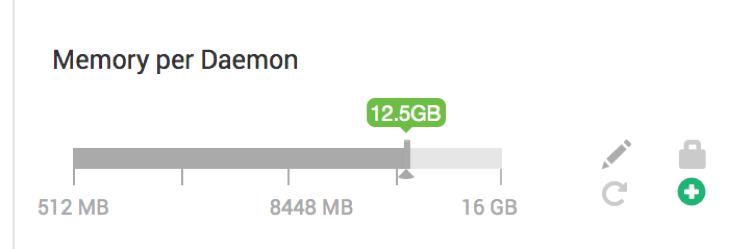

Setting LLAP Daemon size:

It is very important to keep the Yarn Node Manager size in mind when we design the LLAP daemon size. The daemon size cannot be bigger than yarn.scheduler.maximum-allocation-mb and cannot be smaller than yarn.scheduler.minimum-allocation-mb. The executors live inside the daemon. Typically, each executor needs 4 GB of memory. Executor memory is shared between join hash table loading, map-side aggregation hash tables, PTF operator buffering, and shuffle and sort buffers. If we give the executors too little memory, some queries can slow down; for example, hash joins can be converted into sort-merge joins. However, configuring more memory can be counterproductive too. It leaves more room for hash joins, but query performance may suffer when all the executors load huge tables, resulting in GC pauses.
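The rule of thumb of 4 GB per executor gives a quick upper bound on how many executors fit in a daemon. The sketch below is only an illustration: the function name is hypothetical, and it assumes the whole memory figure passed in is available to executors, which ignores any headroom a real daemon needs.

```python
def max_executors(executor_memory_gb, per_executor_gb=4.0):
    """Upper bound on executors, using the article's 4 GB per executor rule of thumb."""
    if per_executor_gb <= 0:
        raise ValueError("per_executor_gb must be positive")
    return int(executor_memory_gb // per_executor_gb)


print(max_executors(32))  # 8
print(max_executors(30))  # 7
```

Treat the result as a starting point for testing rather than a recommended setting, since the right value depends on the workload.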

In the RM UI (Fig 9), we can see 2 more containers, ending in 000002 and 000003. These are the LLAP daemons. The size of each LLAP daemon is controlled by the configuration parameter hive.llap.daemon.yarn.container.mb, as shown in Fig 10.B.

Fig 10.B

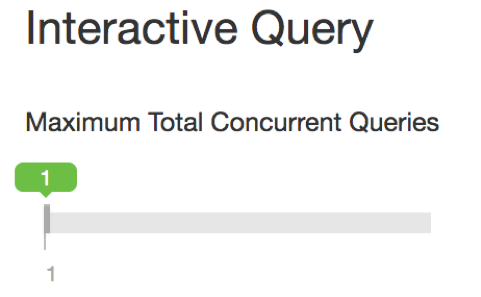

Fig 11

The setting above corresponds to the Tez AM coordinators. The number of Query Coordinators spun up limits the number of concurrent queries. It is these Tez AMs that speak to the executors inside the LLAP daemons. The ideal number of concurrent queries depends on system resources and the workload. Both the LLAP daemons and the Tez AM coordinators run on Yarn.

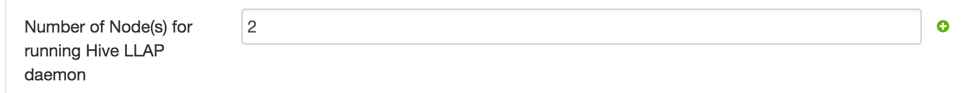

Number of LLAP Daemons

Fig 12

We can regulate the number of LLAP daemons using the configuration shown above. With this setting, we will see 2 Hive LLAP daemons. To confirm the number of LLAP daemons, refer to the RM UI (Fig 9). (NB: Watch out for the containers ending in 000002 and 000003.)

Ports Used for Hive LLAP

Fig 13

Hiveserver2Interactive Heap Size

Fig 14

Hiveserver2Interactive has its own heap size, which is separate from the heap size of HiveServer2. We connect to Hiveserver2Interactive using beeline, and the queries run via beeline. The Hiveserver2Interactive process uses the heap size specified in the configuration above. Note that LLAP can be reached only via beeline; there is no Hive CLI access.

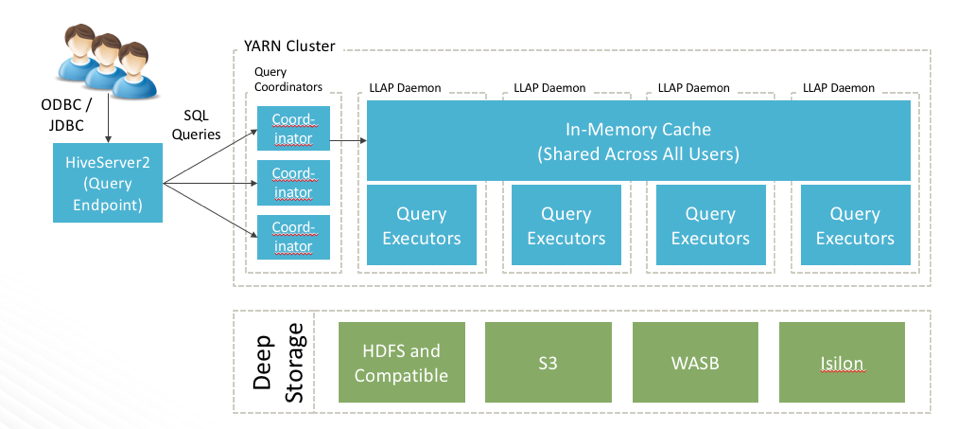

Execution Flow of LLAP

- Users can connect to LLAP via beeline.

- Users can use the Zookeeper string to connect to Hiveserver2Interactive.

- The JDBC connection connects to Hiveserver2Interactive which is the query endpoint.

- The query is compiled by HiveServer2Interactive.

- The compiled form of the query will be sent to the Query Coordinators for execution.

- Each of the coordinators will take one query and will execute it end to end.

- The number of Query Coordinators spun up limits the number of concurrent queries.

- The Query Coordinators will send fragments of the query to the executors, along with the data the executors need to work on.

- The executors will work on the tiny fragments of the query.

- Upon finishing the query, the Tez Query Coordinators will return the results to the user.
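The first two steps of the flow above, connecting through beeline with a ZooKeeper string, can be sketched as a small helper that builds the JDBC URL. This is an illustration under assumptions: the host names are placeholders, and the namespace `hiveserver2-interactive` is assumed here, so it should be checked against the ZooKeeper namespace configured for Hiveserver2Interactive in your cluster.

```python
def hs2_interactive_jdbc_url(zk_hosts, namespace="hiveserver2-interactive", zk_port=2181):
    """Build a ZooKeeper service-discovery JDBC URL for Hiveserver2Interactive."""
    if not zk_hosts:
        raise ValueError("at least one ZooKeeper host is required")
    # The ZooKeeper quorum is a comma-separated list of host:port pairs.
    quorum = ",".join(f"{host}:{zk_port}" for host in zk_hosts)
    return (
        f"jdbc:hive2://{quorum}/;"
        f"serviceDiscoveryMode=zooKeeper;zooKeeperNamespace={namespace}"
    )


# Placeholder host names; replace them with the ZooKeeper quorum of your cluster.
print(hs2_interactive_jdbc_url(["zk1.example.com", "zk2.example.com"]))
```

The resulting string can be passed to beeline with the `-u` option, quoted so that the shell does not interpret the semicolons.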

Fig 15

LLAP daemons are compute nodes made up of 2 parts.

- IO subsystem, which has a shared cache.

- Query executors: A multi-threaded query execution engine that can run multiple fragments at the same time.

Limitations of Hive-LLAP:

- Hive-LLAP does not support impersonation. Hence hive.server2.enable.doAs should be set to false.

- There is no support for Hive-LLAP HA in HDP 2.6.5.

- The compiler depends on table, partition and column statistics to generate an optimal query plan. Hence keeping these statistics up to date is very important for the LLAP compiler.
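For the statistics point above, a small helper can generate the Hive ANALYZE TABLE statements that refresh table and column statistics. This is a sketch, not part of the original setup: the function name and the table name `sales` are hypothetical, and the generated statements would be run through beeline.

```python
def stats_statements(table, partition_spec=None):
    """Return ANALYZE TABLE statements for basic and column statistics."""
    # An optional partition spec such as "ds='2020-01-01'" narrows the scope.
    target = table if partition_spec is None else f"{table} PARTITION ({partition_spec})"
    return [
        f"ANALYZE TABLE {target} COMPUTE STATISTICS;",
        f"ANALYZE TABLE {target} COMPUTE STATISTICS FOR COLUMNS;",
    ]


# "sales" is a placeholder table name.
for statement in stats_statements("sales"):
    print(statement)
```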

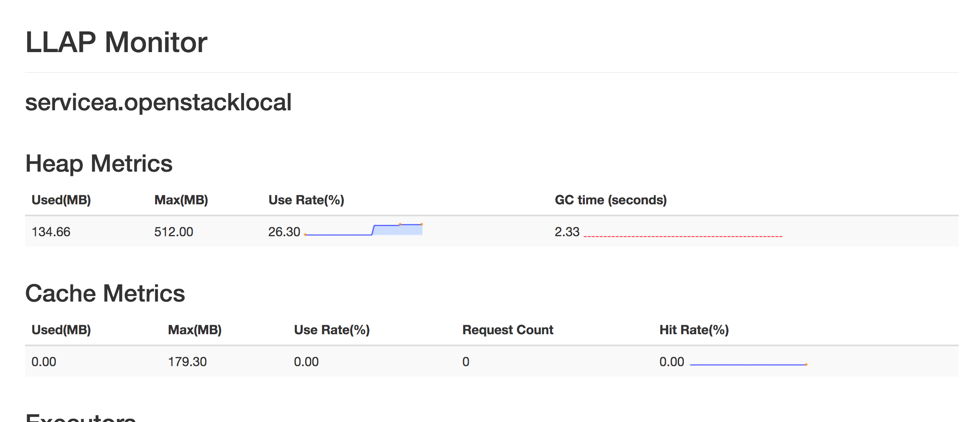

More graphics for Hive LLAP:

LLAP does have some inbuilt graphical representations.

Navigate to

Fig 16

Some other helpful metrics:

- /jmx – shows various useful metrics, such as (1) executor status, (2) daemon.log.dir, and (3) ExecutorMemoryPerInstance

- /stacks – shows daemon stacks; the threads that process query fragments are TezTR-* and IO-Elevator-Thread*

- /conf – shows LLAP configuration (the values actually in use)

- /iomem (only in 3.0) – shows debug information about cache contents and usage

- /peers – shows all LLAP nodes in the cluster

- /conflog (only in 3.0) – allows one to see and add/change logger and log level for this daemon without restart
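The endpoints listed above can also be queried from a script. The sketch below builds the URLs and fetches one of them using only the Python standard library. The host and port are placeholders, not values from this article; use the address of one of your LLAP daemons and the web UI port configured for it.

```python
from urllib.request import urlopen

# Endpoints from the list above that do not depend on the 3.0 release.
ENDPOINTS = ("jmx", "stacks", "conf", "peers")


def llap_ui_urls(host, port):
    """Map each endpoint name to its URL on the given LLAP daemon web UI."""
    return {name: f"http://{host}:{port}/{name}" for name in ENDPOINTS}


def fetch(url, timeout=5):
    """Return the body of the given URL as text."""
    with urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


# Placeholder host and port; replace with a real daemon address before fetching.
urls = llap_ui_urls("llap-node.example.com", 15002)
print(urls["jmx"])
```

Calling `fetch(urls["jmx"])` against a running daemon returns the metrics as text, which can then be searched for fields such as ExecutorMemoryPerInstance.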