- Kafka in SSL+KERBEROS, and Spark2 connecting SSL S...

Created on 11-05-2017 12:17 PM - edited on 02-24-2020 07:52 AM by SumitraMenon

The HDP documentation presents detailed steps for configuring SSL for each service component. More often than not, however, services and their applications run in a composite security environment that combines authentication with wire encryption, such as Kerberos + SSL. An earlier blog post provided a solution for Kafka once it is SSL-secured: import the Kafka brokers' certificates into the JDK truststore.

Here I want to go a step further. We will discuss a solution that keeps the Kafka brokers' certificates outside the JDK truststore, and ways to verify it. We will also cover how a Kafka client Java application works with the SSL configuration, and how Spark Streaming connects to SSL-secured Kafka brokers. Before those topics, we need to walk through the SSL configuration for the Kafka broker. The environment is HDP 2.6.3.0 kerberized with FreeIPA, Spark 2.2.0, and Kafka 0.10.1 secured with SSL.

1. Configure SSL for Kafka

1.1 On each broker, create keystore files, certificates, and truststore files.

a. Create a keystore file:

keytool -genkey -alias <host> -keyalg RSA -keysize 1024 -dname "CN=<host>,OU=hw,O=hw,L=paloalto,ST=ca,C=us" -keypass <KeyPassword> -keystore <keystore_file> -storepass <storePassword>

b. Create a certificate:

keytool -export -alias <host> -keystore <keystore_file> -rfc -file <cert_file> -storepass <storePassword>

c. Create a truststore file:

keytool -import -noprompt -alias <host> -file <cert_file> -keystore <truststore_file> -storepass <truststorePassword>

1.2 Create one truststore file that contains the public keys from all certificates.

a. Log on to one host and import the certificate file for that host:

keytool -import -noprompt -alias <hostname> -file <cert_file> -keystore <all_jks> -storepass <allTruststorePassword>

b. Copy the <all_jks> file to the other nodes in your Kafka cluster, and repeat the keytool command on each broker server.
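The steps in 1.2 can be sketched as a small loop. This is a minimal sketch, not the article's own script: it assumes every broker certificate has already been copied into one directory and is named <host>.crt. The function name import_all_certs and the KEYTOOL override are hypothetical helpers introduced here for illustration.

```shell
# Sketch: import every broker certificate found in a directory into one
# shared truststore, using the same keytool flags as step 1.2.
# Assumptions (not from the article): certificates are named <host>.crt,
# and KEYTOOL may be overridden (for example KEYTOOL=echo for a dry run).
import_all_certs() {
  local cert_dir="$1" truststore="$2" storepass="$3"
  local keytool="${KEYTOOL:-keytool}"
  local cert host
  for cert in "$cert_dir"/*.crt; do
    [ -e "$cert" ] || continue            # skip when the glob matches nothing
    host="$(basename "$cert" .crt)"       # alias = host name taken from the file name
    "$keytool" -import -noprompt -alias "$host" -file "$cert" \
      -keystore "$truststore" -storepass "$storepass" || return 1
  done
}

# Example call (placeholders as in the article):
# import_all_certs /temp/ssl <all_jks> <allTruststorePassword>
```

Running it once with KEYTOOL=echo prints the keytool commands without touching any truststore, which is a cheap way to review the aliases before the real import.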

2. Configure SSL for Kafka Broker

Suppose we have the keystore and truststore files in the following path on each broker:

[root@cheny0 ssl]# ls -l

total 12

-rw-r--r-- 1 root root  731 Nov  5 04:24 cheny0.field.hortonworks.com.crt

-rw-r--r-- 1 root root 1283 Nov  5 04:22 cheny0.field.hortonworks.com.jks

-rw-r--r-- 1 root root  577 Nov  5 04:24 cheny0.field.hortonworks.com_truststore.jks

[root@cheny0 ssl]# pwd

/temp/ssl

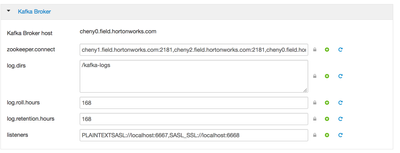

Then we configure Kafka in Ambari->Kafka->Configuration as follows. We want plaintext communication among brokers, so we configure the SSL port as 6668 and the plaintext port as 6667.

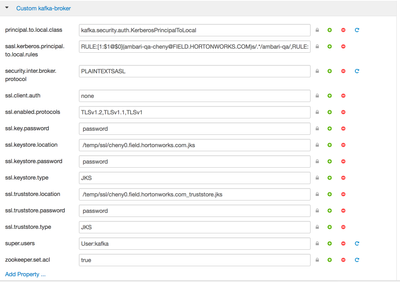

For the other SSL settings, we need to add custom properties.

Save and restart Kafka for the configuration to take effect, then run 'netstat -pan | grep 6668' to check that the SSL port is really listening. Alternatively, look for a line like the following in /var/log/kafka/server.log:

[2017-11-05 04:34:15,789] INFO Registered broker 1001 at path /brokers/ids/1001 with addresses: PLAINTEXTSASL -> EndPoint(cheny0.field.hortonworks.com,6667,PLAINTEXTSASL),SASL_SSL -> EndPoint(cheny0.field.hortonworks.com,6668,SASL_SSL) (kafka.utils.ZkUtils)
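The server.log check above can be scripted so it is easy to repeat on every broker. A minimal sketch, assuming the registration line keeps the format shown above; the function name broker_registered_ssl is hypothetical.

```shell
# Sketch: succeed if the broker log contains a "Registered broker" line
# that advertises a SASL_SSL endpoint on the given port.
# Assumption: the log line format matches the sample line in this article.
broker_registered_ssl() {
  local log_file="$1" port="$2"
  grep -Eq "Registered broker .*SASL_SSL -> EndPoint\([^,]+,${port},SASL_SSL\)" "$log_file"
}

# Example call:
# broker_registered_ssl /var/log/kafka/server.log 6668 && echo "SASL_SSL listener registered"
```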

To test the SSL port, we can use the command

openssl s_client -debug -connect localhost:6668 -tls1

It should respond with the certificate of the connected broker, as shown below:

Server certificate -----BEGIN CERTIFICATE----- MIIB6DCCAVGgAwIBAgIEaSkk1DANBgkqhkiG9w0BAQsFADAnMSUwIwYDVQQDExxj aGVueTAuZmllbGQuaG9ydG9ud29ya3MuY29tMB4XDTE3MTEwNDIwMjIyMFoXDTE4 MDIwMjIwMjIyMFowJzElMCMGA1UEAxMcY2hlbnkwLmZpZWxkLmhvcnRvbndvcmtz LmNvbTCBnzANBgkqhkiG9w0BAQEFAAOBjQAwgYkCgYEAp9KsltYleQQ7KE8ZG4LN lb5Jlj6kJuuYDDgOJftqMlelbGqnAEbn6cpvD13GP710jwOC1kINMANu6Y4cRNFs 3s4EuWC/hFA4SDzUnAaqvhBbAHPqDx1WonSg4P6333/O1v1Or5vHNaQiaEY7EWcx shU5PrtDwudo9s38L2HYsMcCAwEAAaMhMB8wHQYDVR0OBBYEFI5OYMK1mbL14ypD gcxRtxFI3eZ8MA0GCSqGSIb3DQEBCwUAA4GBAAcP33K8Ld3ezJxN2ss0BeW83ouw DUvAuZ6VU+TtSdo6x5uwkVlIIvITcoKcVd4O75idhIAxPajIBspzojsKg1rgGjlc 2G4pYjUbkH/P+Afs/YMWRoH91BKdAzhA4/OhiCq2xV+s+AKTgXUVmHnRkBtaTvX8 6Oe7jmXIouzJkiU/ -----END CERTIFICATE-----

So far, this only proves that port 6668 is listening and is SSL-secured. It does not tell us whether the SSL configuration actually works end to end. For that, we need to test further with the Kafka tools.

3. Enable Kafka Tools after SSL Configured

3.1 First, check that the plaintext port works

The following commands test whether Kafka works on plaintext port 6667. They use the Kerberos configuration in kafka_client_jaas.conf and kafka_jaas.conf under /usr/hdp/current/kafka-broker/conf/. If Kerberos/GSSAPI errors appear, check the JAAS configuration.

//log in as user 'kafka'

[root@cheny0 bin]# kinit -kt /etc/security/keytabs/kafka.service.keytab kafka/cheny0.field.hortonworks.com@FIELD.HORTONWORKS.COM

//move to the path that holds the Kafka tool scripts

[root@cheny0 ~]# cd /usr/hdp/current/kafka-broker/bin

//launch producer

[root@cheny0 bin]# ./kafka-console-producer.sh --broker-list cheny0.field.hortonworks.com:6667 --topic test --security-protocol PLAINTEXTSASL

//launch consumer

[root@cheny0 bin]# ./kafka-console-consumer.sh --bootstrap-server cheny0.field.hortonworks.com:6667 --topic test --from-beginning --security-protocol PLAINTEXTSASL

3.2 Check that the SSL port 6668 works

The key point is to configure the SSL properties in the consumer.properties and producer.properties files under /usr/hdp/current/kafka-broker/config/. If problems occur here, first proofread the properties we configured, and then check whether the keystore or truststore is correct.

//log in as user 'kafka'

[root@cheny0 bin]# kinit -kt /etc/security/keytabs/kafka.service.keytab kafka/cheny0.field.hortonworks.com@FIELD.HORTONWORKS.COM

//move to the path that holds the Kafka tool scripts

[root@cheny0 ~]# cd /usr/hdp/current/kafka-broker/bin

//add the following lines to /usr/hdp/current/kafka-broker/config/producer.properties and consumer.properties

ssl.keystore.location = /temp/ssl/cheny0.field.hortonworks.com.jks

ssl.keystore.password = password

ssl.key.password = password

ssl.truststore.location = /temp/ssl/cheny0.field.hortonworks.com_truststore.jks

ssl.truststore.password = password

//launch producer

[root@cheny0 bin]# ./kafka-console-producer.sh --broker-list cheny0.field.hortonworks.com:6668 --topic test --security-protocol SASL_SSL --producer.config /usr/hdp/current/kafka-broker/config/producer.properties

//launch consumer

[root@cheny0 bin]# ./kafka-console-consumer.sh --new-consumer --bootstrap-server cheny0.field.hortonworks.com:6668 --topic test --from-beginning --security-protocol SASL_SSL --consumer.config /usr/hdp/current/kafka-broker/config/consumer.properties
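The five SSL properties are identical for the producer and the consumer, so they could also be generated into one file instead of being edited by hand twice. A minimal sketch, assuming the keystore key password equals the store password as in the example above; the function name write_client_ssl_props and the output path are hypothetical.

```shell
# Sketch: write the five SSL client properties used in this article to a file.
# Assumptions (not from the article): one password is shared by the keystore,
# the key, and the truststore; the output file is created with owner-only
# permissions because it contains passwords.
write_client_ssl_props() {
  local out="$1" keystore="$2" truststore="$3" password="$4"
  (
    umask 077
    cat > "$out" <<EOF
ssl.keystore.location=$keystore
ssl.keystore.password=$password
ssl.key.password=$password
ssl.truststore.location=$truststore
ssl.truststore.password=$password
EOF
  )
}

# Example call (paths from this article, output path hypothetical):
# write_client_ssl_props /temp/ssl/client-ssl.properties \
#   /temp/ssl/cheny0.field.hortonworks.com.jks \
#   /temp/ssl/cheny0.field.hortonworks.com_truststore.jks password
```

The resulting file can then be passed to both console tools through --producer.config and --consumer.config, in the same way as the files shown above.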

If the above succeeds, your Kafka SSL configuration works. Next, we look at how Kafka Java applications work with it.

4. Enable Kafka Java Applications after SSL Configured

For the Kafka performance tool, if we launch ProducerPerformance with the 'java' command, the point is that we can use the --producer.config option to reference the same SSL properties file we configured in the previous section.

//launch the Java application to produce test messages

java -Djava.security.auth.login.config=/usr/hdp/current/kafka-broker/conf/kafka_jaas.conf -Djava.security.krb5.conf=/etc/krb5.conf -Djavax.security.auth.useSubjectCredsOnly=true -cp /usr/hdp/current/kafka-broker/libs/scala-library-2.11.8.jar:/usr/hdp/current/kafka-broker/libs/*:/temp/kafka-tools-1.0.0-SNAPSHOT.jar org.apache.kafka.tools.ProducerPerformance --num-records 500000 --record-size 1000 --topic chen --throughput 100000 --num-threads 2 --value-bound 5000 --print-metrics --producer-props bootstrap.servers=cheny0:6668 compression.type=gzip max.in.flight.requests.per.connection=1 linger.ms=10 security.protocol=SASL_SSL --producer.config=/usr/hdp/current/kafka-broker/config/producer.properties

//launch a consumer to receive the test messages

[root@cheny0 bin]# ./kafka-console-consumer.sh --new-consumer --bootstrap-server cheny0.field.hortonworks.com:6668 --topic chen --security-protocol SASL_SSL --consumer.config /usr/hdp/current/kafka-broker/config/consumer.properties

For a general Java application that uses the Kafka client, we set these properties in the Java code itself. We show this in the next section, where a Spark application connects to Kafka with SSL + Kerberos.

5. Spark Streaming Connects SSL-secured Kafka in Kerberos Environment

Because we launch Spark or Spark Streaming on a YARN cluster, the Spark (Streaming) job is first uploaded to the HDFS path /user/kafka.. and then distributed to each executor. So if the job connects to Kafka, it must carry the Kafka credentials, i.e., the JAAS file and the keytab, in order to reach the Kafka brokers. I previously wrote an article on this topic; those who are interested can refer to the following link.

The following uses the same Spark Streaming Java program introduced in that article. The part that deserves the most attention in the snippets below is the Java code that sets the Kafka SSL properties. If the job fails to run, first proofread that configuration code.

//on first run, create the kafka path in HDFS

[root@cheny0 temp]# kinit -kt /etc/security/keytabs/hdfs.headless.keytab hdfs-cheny@FIELD.HORTONWORKS.COM

[root@cheny0 temp]# hdfs dfs -mkdir /user/kafka

[root@cheny0 temp]# hdfs dfs -chown kafka:hdfs /user/kafka

//change the benchmark Java code (needs imports of java.util.HashMap and org.apache.kafka.common.serialization.StringDeserializer)

HashMap<String, Object> kafkaParams = new HashMap<String, Object>();

kafkaParams.put( "bootstrap.servers", "cheny0.field.hortonworks.com:6668" );

kafkaParams.put( "group.id", "test_sparkstreaming_kafka" );

kafkaParams.put( "auto.offset.reset", "latest" );

kafkaParams.put( "security.protocol", "SASL_SSL" );

kafkaParams.put("key.deserializer", StringDeserializer.class);

kafkaParams.put("value.deserializer", StringDeserializer.class);

kafkaParams.put("ssl.keystore.location","/temp/ssl/cheny0.field.hortonworks.com.jks" );

kafkaParams.put("ssl.keystore.password","password" );

kafkaParams.put("ssl.key.password","password" );

kafkaParams.put("ssl.truststore.location","/temp/ssl/cheny0.field.hortonworks.com_truststore.jks" );

kafkaParams.put("ssl.truststore.password", "password" );

//build the application jar and copy it to the broker at cheny0.field.hortonworks.com:/temp

sh-3.2# cd /Users/chen.yang/workspace/kafkaproducer

sh-3.2# mvn package

sh-3.2# cd target

sh-3.2# scp kafka-producer-1.0-SNAPSHOT.jar cheny0:/temp

//copy the keytab and JAAS file to /temp

[root@cheny0 temp]# scp /usr/hdp/current/kafka-broker/conf/kafka_jaas.conf /temp/

[root@cheny0 temp]# scp /etc/security/keytabs/kafka.service.keytab ./

//revise kafka_jaas.conf

KafkaClient {

com.sun.security.auth.module.Krb5LoginModule required

useKeyTab=true

doNotPrompt=true

principal="kafka/cheny0.field.hortonworks.com@FIELD.HORTONWORKS.COM"

keyTab="kafka.service.keytab"

useTicketCache=true

renewTicket=true

serviceName="kafka";

};

Client {

com.sun.security.auth.module.Krb5LoginModule required

useKeyTab=true

doNotPrompt=true

principal="kafka/cheny0.field.hortonworks.com@FIELD.HORTONWORKS.COM"

keyTab="kafka.service.keytab"

useTicketCache=true

renewTicket=true

serviceName="kafka";

};

//copy /temp to the other YARN cluster nodes

[root@cheny0 temp]# scp -r /temp cheny1:/

[root@cheny0 temp]# scp -r /temp cheny2:/

[root@cheny0 bin]# kinit -kt /etc/security/keytabs/kafka.service.keytab kafka/cheny0.field.hortonworks.com@FIELD.HORTONWORKS.COM

[root@cheny0 temp]# spark-submit --master=yarn --deploy-mode=cluster --files /temp/kafka_jaas.conf,/temp/kafka.service.keytab --conf "spark.driver.extraJavaOptions=-Djava.security.auth.login.config=kafka_jaas.conf" --conf "spark.executor.extraJavaOptions=-Djava.security.auth.login.config=kafka_jaas.conf" --jars /usr/hdp/current/spark2-client/jars/spark-streaming_2.11-2.2.0.2.6.3.0-235.jar,/usr/hdp/current/spark2-client/jars/spark-streaming-kafka-0-10_2.11-2.2.0.2.6.3.0-235.jar,/usr/hdp/current/kafka-broker/libs/kafka-clients-0.10.1.2.6.3.0-235.jar,/usr/hdp/current/spark2-client/examples/jars/spark-examples_2.11-2.2.0.2.6.3.0-235.jar --class spark2.example.Spark2Benchmark /temp/kafka-producer-1.0-SNAPSHOT.jar

//kill the job to save yarn resource

[root@cheny0 temp]# yarn application -kill application_1509825587944_0012

17/11/05 15:41:00 INFO client.RMProxy: Connecting to ResourceManager at cheny1.field.hortonworks.com/xxx.xx.xxx.243:8050

17/11/05 15:41:01 INFO client.AHSProxy: Connecting to Application History server at cheny1.field.hortonworks.com/xxx.xx.xxx.xxx:10200

Killing application application_1509825587944_0012

17/11/05 15:41:01 INFO impl.YarnClientImpl: Killed application application_1509825587944_0012
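Because the Java code points at keystore and truststore files under /temp, and the job may land on any YARN node, it can help to confirm on each node that the files exist before submitting. A minimal sketch under that assumption; the function name missing_files is hypothetical.

```shell
# Sketch: print each path that is missing or unreadable and return
# non-zero if any path fails the check.
missing_files() {
  local rc=0 f
  for f in "$@"; do
    if [ ! -r "$f" ]; then
      printf 'missing: %s\n' "$f"
      rc=1
    fi
  done
  return "$rc"
}

# Example call (paths from this article), to be run on each YARN node:
# missing_files /temp/kafka_jaas.conf /temp/kafka.service.keytab \
#   /temp/ssl/cheny0.field.hortonworks.com.jks \
#   /temp/ssl/cheny0.field.hortonworks.com_truststore.jks
```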

6. Summary

Checking and enabling the Kafka commands, scripts, and applications to work after SSL is configured is no easier than doing so for Kerberos. So we should verify at each step to make sure we do not have to backtrack. That is how I learned. I hope you succeed as well. For any suggestions or questions, please feel free to leave a comment below. Have a good day.

Created on 11-06-2017 11:46 PM


Could you please confirm whether SASL_SSL also works with Spark 1.6?