Monitor Apache NiFi with Apache NiFi

Created on 04-02-2017 08:58 PM - edited 08-17-2019 01:25 PM

Monitoring Apache NiFi

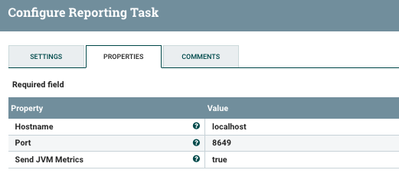

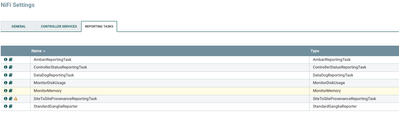

It's really important to pick some Reporting Tasks to let you know what's happening on your Apache NiFi servers. The Ambari reporting task (AmbariReportingTask) sends NiFi metrics to your HDF Ambari, which shows the results in nice Grafana graphs, charts and tables.

You can also monitor disk usage and memory, and send metrics to DataDog, Ganglia and other servers. It's also easy to write your own Reporting Task if you need a different one.

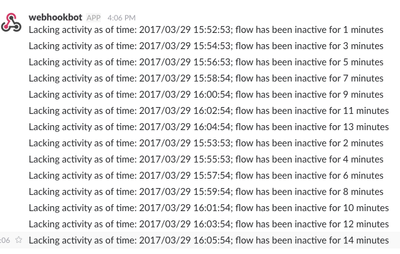

One of the ways to monitor your Apache NiFi Data Flows is to use the MonitorActivity processor which will create messages that can be sent to your Operations Dashboard, Console or elsewhere.

For people doing ChatOps, you can easily push these messages to Slack with the PutSlack processor. You could also send a REST call to HipChat or other chat tools. It's pretty easy to wrap that up in a custom processor as well.
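For chat tools without a dedicated processor, the REST call amounts to an HTTP POST of a small JSON body. Here is a minimal sketch in Python, assuming a Slack-style incoming webhook that accepts a JSON object with a `text` field. The webhook URL, flow name and function names are hypothetical placeholders; inside NiFi itself the same request would more likely be made with a processor such as InvokeHTTP than with a script.

```python
import json
import urllib.request


def build_alert_payload(flow_name, message):
    """Build the JSON body for a chat webhook (assumes a `text` field)."""
    return {"text": f"[NiFi] {flow_name}: {message}"}


def post_alert(webhook_url, payload, timeout=10):
    """POST the payload as JSON and return the HTTP status code."""
    data = json.dumps(payload).encode("utf-8")
    request = urllib.request.Request(
        webhook_url,
        data=data,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status


if __name__ == "__main__":
    payload = build_alert_payload("ingest-flow", "no activity for 5 minutes")
    print(json.dumps(payload))
    # Hypothetical URL; replace with your own webhook before uncommenting.
    # post_alert("https://hooks.example.com/your-webhook", payload)
```

Building the payload separately from sending it keeps the message format easy to check without touching the network.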

Other Things to Monitor

REST Endpoints

server:port/nifi-api/system-diagnostics

See: https://nifi.apache.org/docs/nifi-docs/rest-api/
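As an illustration, the system-diagnostics endpoint can be polled from a small script. This is a sketch only: the JSON field names (`systemDiagnostics`, `aggregateSnapshot`, `heapUtilization`) are assumed from the REST API documentation linked above and should be verified against your NiFi version. The base URL, threshold and sample values are hypothetical, and a secured instance would also need authentication, which is not shown.

```python
import json
import urllib.request


def fetch_system_diagnostics(base_url, timeout=10):
    """GET <base_url>/nifi-api/system-diagnostics and return the parsed JSON."""
    url = f"{base_url}/nifi-api/system-diagnostics"
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.load(response)


def heap_utilization_percent(diagnostics):
    """Extract heap utilization as a float (assumes a string like '45.0%')."""
    snapshot = diagnostics["systemDiagnostics"]["aggregateSnapshot"]
    return float(snapshot["heapUtilization"].rstrip("%").strip())


def heap_alert(diagnostics, threshold_percent=80.0):
    """Return True when heap utilization meets or exceeds the threshold."""
    return heap_utilization_percent(diagnostics) >= threshold_percent


if __name__ == "__main__":
    # Made-up sample response so the parsing can be tried without a server.
    sample = {
        "systemDiagnostics": {"aggregateSnapshot": {"heapUtilization": "45.0%"}}
    }
    print(heap_utilization_percent(sample), heap_alert(sample))
    # diagnostics = fetch_system_diagnostics("http://server:port")  # placeholder
```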

Logs

...nifi/logs/nifi-app.log and

..nifi/logs/nifi-user.log

These can be ingested with Apache NiFi for detailed log processing.

You can filter and send some messages to SumoLogic or elsewhere via Apache NiFi.
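The filtering step can be sketched outside NiFi to show the idea. The example below assumes the default log layout (timestamp, level, thread name in brackets, logger, message); if logback.xml has been customized, the pattern needs adjusting. The function name and the sample lines are made up for illustration. Inside NiFi the same effect would typically come from tailing the file and routing on the content.

```python
import re

# Assumed layout: "2017-04-02 20:58:05,456 ERROR [thread] logger message"
LOG_LINE = re.compile(
    r"^(?P<timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3}) "
    r"(?P<level>[A-Z]+) \[(?P<thread>.*?)\] (?P<logger>\S+) (?P<message>.*)$"
)


def filter_log_lines(lines, levels=("WARN", "ERROR")):
    """Yield parsed entries whose level is in `levels`.

    Lines that do not match the layout (for example stack-trace
    continuation lines) are skipped.
    """
    for line in lines:
        match = LOG_LINE.match(line.rstrip("\n"))
        if match and match.group("level") in levels:
            yield match.groupdict()


# Synthetic sample lines, not real NiFi output.
SAMPLE = [
    "2017-04-02 20:58:00,123 INFO [main] com.example.Demo sample startup message",
    "2017-04-02 20:58:05,456 ERROR [Worker Thread-1] com.example.Demo sample failure message",
    "    at com.example.Demo.run(Demo.java:1)",
]

if __name__ == "__main__":
    for entry in filter_log_lines(SAMPLE):
        print(entry["timestamp"], entry["level"], entry["message"])
```

Only the matching entries would then be forwarded to the log service, which keeps the volume down.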

Created on 04-03-2017 12:18 PM

PutSlack was such a good addition!

Be careful ingesting nifi-app.log though! I've tried this before and it quickly spirals out of control as each read of the log also generates log entries which then get picked up and generate more log entries.

Created on 04-03-2017 06:27 PM

@Sebastian Carroll IMHO you would need a separate NiFi instance for that purpose. The same goes if you want to archive Provenance events from the NiFi instance. Another option would be to send the logs to Splunk or a similar tool for log processing and for any analytics on top of that (dashboards, alerts, etc.).

Created on 03-14-2018 11:52 AM

Yes, much safer to have another instance you can use for reporting and such. Even if it's just one node.

Created on 08-20-2019 11:29 AM

Created on 11-20-2025 11:06 PM

Hi everyone, I hope you’re doing well.

I am working on a dataflow in Apache NiFi 1.18, and I need to retrieve the queue size information (flowfile count and content size) directly within NiFi itself, not via an external script or Postman. I know that the NiFi REST API provides this data, and I can access it successfully using external tools.

However, my goal is to access queue metrics from inside NiFi, for example through processors like InvokeScriptedProcessor, QueryNiFiReportingTask, or any other built-in mechanism, without sending an external REST API request from outside NiFi.

Is there a recommended approach, processor, or reporting task that allows NiFi to read its own queue sizes internally? If not, what would be the best practice to achieve this? Any guidance or examples would be greatly appreciated.

Thank you in advance!