Community Articles

- Cloudera Community

- Support

- Community Articles

- Monitoring Energy Usage Utilizing Apache NiFi, Pyt...

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Created on 12-22-2017 08:49 PM - edited 08-17-2019 09:45 AM

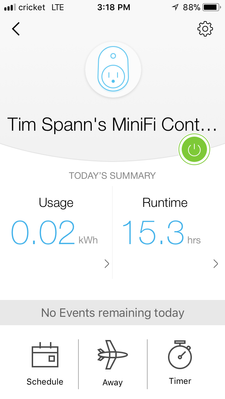

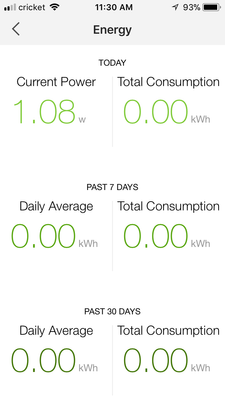

In the Holidays, it's nice to know how much energy you are using. So one small step is I bought a low-end inexpensive TPLink Energy Monitoring plug for one device. I have been monitoring phone charging and my Apple monitor.

Let's read the data and do some queries in Apache Hive and Apache Spark 2 SQL.

Processing Live Energy Feeds in The Cloud

Monitor Energy From a Local OSX

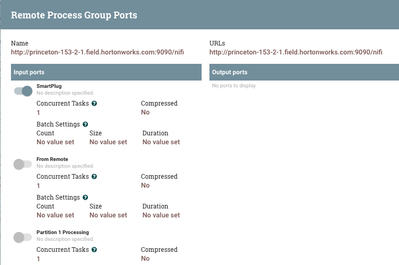

If your local instance does not have access to Apache Hive, you will need to send the data via Site-to-Site to a Remote Apache NiFi / HDF server/cluster that can.

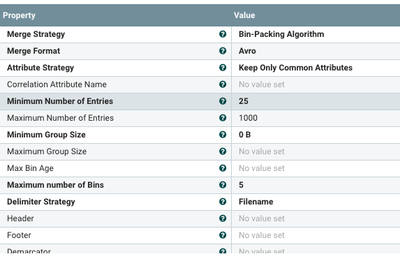

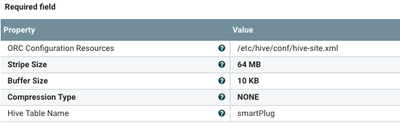

For Apache Hive Usage, Please Convert to Apache ORC Files

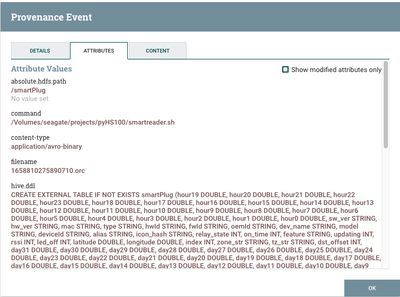

To Create Your New Table, Grab the hive.ddl

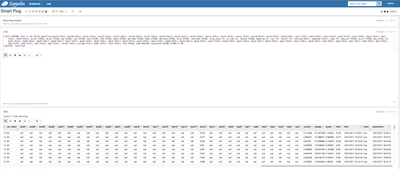

Inside of Apache Zeppelin, we can create our table based on the above DDL. We could have also let Apache NiFi create the table for us. I like to keep my DDL with my notebook. Just a personal choice.

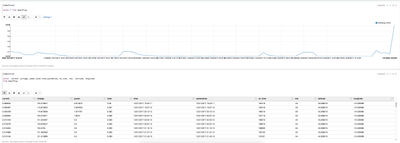

We can then query our table in Apache Zeppelin utilizing Apache Spark 2 SQL and Apache Hive QL.

Overview

Step 1: Purchase an inexpensive energy monitoring plug

Step 2: Connect it to a Phone App via WIFI

Step 3: Once Configured, you can now access via Python

Step 4: Install the HS100 Python Library in Python 3.x

Step 5: Fork My Github and Use My Shell Script and Python Script

Step 6: Add the Local Apache NiFi Flow which will call that Script

Step 7: Add a Remote Apache NiFi Flow for Processing into Apache Hadoop

Step 8: Create Your Table

Step 9: Query with Apache Hive and Apache Spark SQL via Apache Zeppelin or Other UI

Step 10: Turn that extra stuff off and save money!

The Open Source Code and Artefacts

Shell Script (smartreader.sh)

python3 meterreader.py

Python Code (meterreader.py)

from pyHS100 import SmartPlug, SmartBulb

#from pprint import pformat as pf

import json

import datetime

plug = SmartPlug("192.168.1.200")

row = { }

emeterdaily = plug.get_emeter_daily(year=2017, month=12)

for k, v in emeterdaily.items():

row["hour%s" % k] = v

hwinfo = plug.hw_info

for k, v in hwinfo.items():

row["%s" % k] = v

sysinfo = plug.get_sysinfo()

for k, v in sysinfo.items():

row["%s" % k] = v

timezone = plug.timezone

for k, v in timezone.items():

row["%s" % k] = v

emetermonthly = plug.get_emeter_monthly(year=2017)

for k, v in emetermonthly.items():

row["day%s" % k] = v

realtime = plug.get_emeter_realtime()

for k, v in realtime.items():

row["%s" % k] = v

row['alias'] = plug.alias

row['time'] = plug.time.strftime('%m/%d/%Y %H:%M:%S')

row['ledon'] = plug.led

row['systemtime'] = datetime.datetime.now().strftime('%m/%d/%Y %H:%M:%S')

json_string = json.dumps(row)

print(json_string)The code is basically a small tweak on the example code provided with the pyHS100 code. This code allows you to access the HS110 that I have. My PC and my smart meter are on the same WiFi which can't be 5G.

Example Data

{"hour19": 0.036, "hour20": 0.021, "hour21": 0.017, "sw_ver": "1.1.1 Build 160725 Rel.164033", "hw_ver": "1.0", "mac": "50:C7:BF:B1:95:D5", "type": "IOT.SMARTPLUGSWITCH", "hwId": "60FF6B258734EA6880E186F8C96DDC61", "fwId": "060BFEA28A8CD1E67146EB5B2B599CC8", "oemId": "FFF22CFF774A0B89F7624BFC6F50D5DE", "dev_name": "Wi-Fi Smart Plug With Energy Monitoring", "model": "HS110(US)", "deviceId": "8006ECB1D454C4428953CB2B34D9292D18A6DB0E", "alias": "Tim Spann's MiniFi Controller SmartPlug - Desk1", "icon_hash": "", "relay_state": 1, "on_time": 161599, "active_mode": "schedule", "feature": "TIM:ENE", "updating": 0, "rssi": -32, "led_off": 0, "latitude": 40.268216, "longitude": -74.529088, "index": 18, "zone_str": "(UTC-05:00) Eastern Daylight Time (US & Canada)", "tz_str": "EST5EDT,M3.2.0,M11.1.0", "dst_offset": 60, "day12": 0.074, "current": 0.04011, "voltage": 122.460974, "power": 1.8772, "total": 0.074, "time": "12/21/2017 13:21:52", "ledon": true, "systemtime": "12/21/2017 13:21:53"}As you can see we only get the hours and days where we had usage. Since this is new, I don't have them all.

I created my schema to handle all the days of a month and all the hours of a day.

We are going to have a sparse table. If I was monitoring millions of devices, I would put this in Apache HBase. I may do that later.

Let's create an HDFS directory for Loading Apache ORC Files

hdfs dfs -mkdir -p /smartPlug

hdfs dfs -chmod -R 777 /smartPlug

Table DDL

CREATE EXTERNAL TABLE IF NOT EXISTS smartPlug (hour19 DOUBLE, hour20 DOUBLE, hour21 DOUBLE, hour22 DOUBLE, hour23 DOUBLE, hour18 DOUBLE, hour17 DOUBLE, hour16 DOUBLE, hour15 DOUBLE, hour14 DOUBLE, hour13 DOUBLE, hour12 DOUBLE, hour11 DOUBLE, hour10 DOUBLE, hour9 DOUBLE, hour8 DOUBLE, hour7 DOUBLE, hour6 DOUBLE, hour5 DOUBLE, hour4 DOUBLE, hour3 DOUBLE, hour2 DOUBLE, hour1 DOUBLE, hour0 DOUBLE, sw_ver STRING, hw_ver STRING, mac STRING, type STRING, hwId STRING, fwId STRING, oemId STRING, dev_name STRING, model STRING, deviceId STRING, alias STRING, icon_hash STRING, relay_state INT, on_time INT, feature STRING, updating INT, rssi INT, led_off INT, latitude DOUBLE, longitude DOUBLE, index INT, zone_str STRING, tz_str STRING, dst_offset INT, day31 DOUBLE, day30 DOUBLE, day29 DOUBLE, day28 DOUBLE, day27 DOUBLE, day26 DOUBLE, day25 DOUBLE, day24 DOUBLE, day23 DOUBLE, day22 DOUBLE, day21 DOUBLE, day20 DOUBLE, day19 DOUBLE, day18 DOUBLE, day17 DOUBLE, day16 DOUBLE, day15 DOUBLE, day14 DOUBLE, day13 DOUBLE, day12 DOUBLE, day11 DOUBLE, day10 DOUBLE, day9 DOUBLE, day8 DOUBLE, day7 DOUBLE, day6 DOUBLE, day5 DOUBLE, day4 DOUBLE, day3 DOUBLE, day2 DOUBLE, day1 DOUBLE, current DOUBLE, voltage DOUBLE, power DOUBLE, total DOUBLE, time STRING, ledon BOOLEAN, systemtime STRING) STORED AS ORC LOCATION '/smartPlug'

A Simple Query on Some of the Variables

select `current`,voltage, power,total,time,systemtime, on_time, rssi, latitude, longitude from smartPlug

Note that current is a special word in SQL so we tick it.

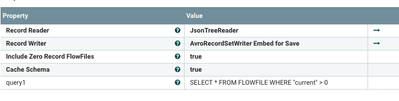

An Apache Calcite Query Inside Apache NiFi

SELECT * FROM FLOWFILE WHERE "current" > 0

With the Python API I can turn it off, so don't monitor then. In an updated article I will add a few smart plugs and turn them on and off based on things occurring. Perhaps turn off a light when no motion detected. We can do anything with Apache NiFi, Apache MiniFi and Python. The API also allows for turning the green LED light on the plug on and off.

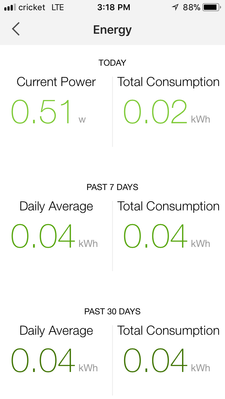

The Screen Prints above are from the IoS version of the TPLink KASA app, which let's you configure and monitor your plug. For many people that's good enough, but not for me.

Resources

https://github.com/GadgetReactor/pyHS100

https://pypi.python.org/pypi/pyHS100

pip3 install pyhs100