Created on 02-22-2021 07:07 AM - edited on 02-23-2021 09:13 PM by subratadas

New Features of Apache NiFi 1.13.0

Download today: Apache NiFi Downloads

Release Notes: Release Notes (Apache NiFi)

Migration: Migration Guidance

New Features

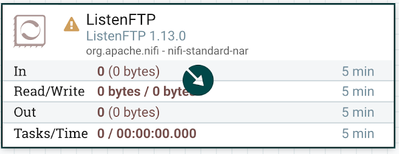

- ListenFTP

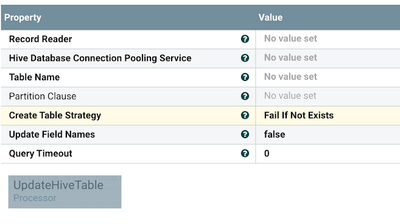

- UpdateHiveTable - applies Hive DDL changes so a table's schema can follow incoming data as it drifts (Hive schema migration)!

- SampleRecord - samples records using different approaches (Interval Sampling, Probabilistic Sampling, Reservoir Sampling)

- CDC updates

- Kudu updates

- AMQP and MQTT integration upgrades
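The three SampleRecord strategies correspond to well-known sampling techniques. The Python sketch below illustrates the techniques themselves, not NiFi's Java implementation; the function names, the `interval`/`probability`/`k` parameters, and the injected `random.Random` are assumptions made for illustration, and NiFi's exact interval semantics may differ.

```python
import random
from typing import Iterable, List, TypeVar

T = TypeVar("T")


def interval_sample(records: Iterable[T], interval: int) -> List[T]:
    """Interval sampling: keep every `interval`-th record (the Nth, 2Nth, ...)."""
    if interval < 1:
        raise ValueError("interval must be >= 1")
    return [r for i, r in enumerate(records, start=1) if i % interval == 0]


def probabilistic_sample(records: Iterable[T], probability: float, rng: random.Random) -> List[T]:
    """Probabilistic sampling: keep each record independently with the given probability."""
    return [r for r in records if rng.random() < probability]


def reservoir_sample(records: Iterable[T], k: int, rng: random.Random) -> List[T]:
    """Reservoir sampling (Algorithm R): a uniform sample of up to k records
    from a stream whose length is not known in advance, using O(k) memory."""
    reservoir: List[T] = []
    for i, r in enumerate(records):
        if i < k:
            reservoir.append(r)  # fill the reservoir with the first k records
        else:
            j = rng.randint(0, i)  # uniform over 0..i inclusive
            if j < k:
                reservoir[j] = r  # replace a slot with probability k / (i + 1)
    return reservoir


if __name__ == "__main__":
    readings = list(range(1, 21))
    print(interval_sample(readings, 5))  # [5, 10, 15, 20]
    print(len(reservoir_sample(readings, 3, random.Random(42))))  # 3
```

Reservoir sampling is the one to reach for when a FlowFile holds more records than you want to buffer, since it never keeps more than `k` records in memory.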

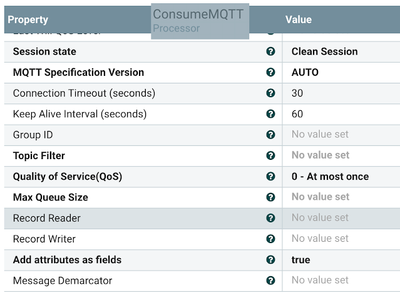

- ConsumeMQTT - record readers and writers added

- HTTP access to NiFi is now configured by default to accept connections on 127.0.0.1/localhost only. If you want to allow broader HTTP access for some reason, and you understand the security implications, you can still control that as always through the 'nifi.web.http.host' property in nifi.properties. That said, take the time to configure proper HTTPS; we offer detailed instructions and tooling to assist.


- Work continues on the combined MiNiFi/NiFi code base, including the ability to run NiFi with no GUI.

- Support for Kudu dates

- Updated gRPC versions

- Apache Calcite update

- PutDatabaseRecord update
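To check whether an existing install exposes plain HTTP beyond the local machine, a small script can read nifi.properties and inspect the web host and port. This is a hypothetical helper, not part of NiFi. It assumes that a blank `nifi.web.http.port` disables HTTP and that a blank `nifi.web.http.host` binds to all interfaces, and its parser ignores Java properties escapes and line continuations.

```python
import ipaddress
from typing import Dict


def parse_properties(text: str) -> Dict[str, str]:
    """Minimal key=value parser: skips blank lines and #/! comments (no escapes or continuations)."""
    props: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith(("#", "!")):
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def http_exposure(props: Dict[str, str]) -> str:
    """Classify plain-HTTP exposure as 'http-disabled', 'localhost-only', or 'exposed'."""
    if not props.get("nifi.web.http.port", ""):
        return "http-disabled"  # assumption: no HTTP port means no HTTP listener
    host = props.get("nifi.web.http.host", "")
    if host == "localhost":
        return "localhost-only"
    try:
        if host and ipaddress.ip_address(host).is_loopback:
            return "localhost-only"  # e.g. 127.0.0.1 or ::1
    except ValueError:
        pass  # a hostname other than localhost: treat as reachable
    return "exposed"  # blank host (assumed all interfaces), 0.0.0.0, or a routable address


if __name__ == "__main__":
    sample = "nifi.web.http.host=127.0.0.1\nnifi.web.http.port=8080\n"
    print(http_exposure(parse_properties(sample)))  # localhost-only
```

In practice you would pass the contents of your own conf/nifi.properties; anything reported as "exposed" is a good prompt to move to HTTPS instead.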

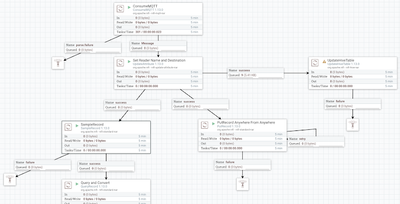

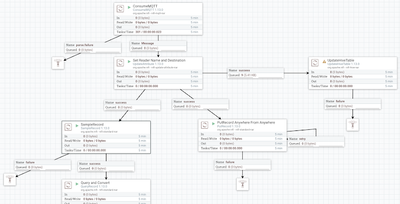

Here is an example NiFi 1.13.0 ETL flow:

- ConsumeMQTT: now with readers

- UpdateAttribute: set record.sink.name to kafka and recordreader.name to json.

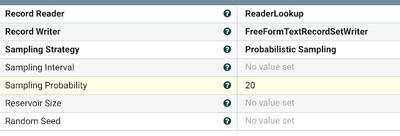

- SampleRecord: sample a few of the records

- PutRecord: uses a record reader and a destination service (RecordSinkService)

- UpdateHiveTable: new sink
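To make the hand-off between these steps concrete, here is a toy, in-memory model of the flow. `FlowFile`, `READERS`, `SINKS`, and `run_flow` are hypothetical stand-ins rather than NiFi APIs, the MQTT payload is assumed to be a JSON array of objects, and the UpdateHiveTable branch is left out.

```python
import json
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Record = Dict[str, object]


@dataclass
class FlowFile:
    """Toy FlowFile: raw content plus string attributes."""
    content: bytes
    attributes: Dict[str, str] = field(default_factory=dict)


# Hypothetical registries standing in for NiFi controller services.
READERS: Dict[str, Callable[[bytes], List[Record]]] = {"json": json.loads}  # expects a JSON array
SINKS: Dict[str, List[Record]] = {"kafka": []}  # in-memory stand-in for a Kafka topic


def update_attribute(ff: FlowFile) -> FlowFile:
    # UpdateAttribute step: name the sink and reader that later steps should use.
    ff.attributes.update({"record.sink.name": "kafka", "recordreader.name": "json"})
    return ff


def run_flow(mqtt_payload: bytes) -> int:
    """ConsumeMQTT -> UpdateAttribute -> SampleRecord -> PutRecord, in miniature."""
    ff = update_attribute(FlowFile(mqtt_payload))
    records = READERS[ff.attributes["recordreader.name"]](ff.content)
    sampled = records[::2]  # SampleRecord stand-in: keep the 1st, 3rd, 5th, ... record
    SINKS[ff.attributes["record.sink.name"]].extend(sampled)  # PutRecord stand-in
    return len(sampled)


if __name__ == "__main__":
    print(run_flow(b'[{"id": 1}, {"id": 2}, {"id": 3}]'))  # 2
```

The point of the model is that only the attributes decide which reader and sink are used; the later steps never hard-code a format or destination.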

Consume from MQTT, then read and write the data as records.

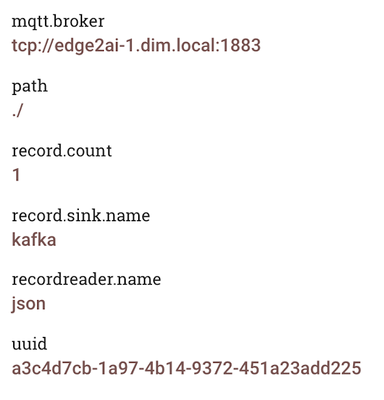

Some example attributes from a running flow:

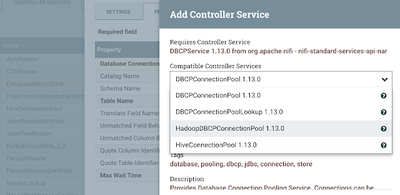

Connection pools for DatabaseRecordSinks can be JDBC, Hadoop, and Hive.

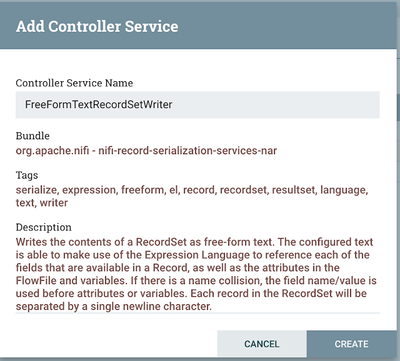

FreeFormTextRecordSetWriter is great for writing any format.

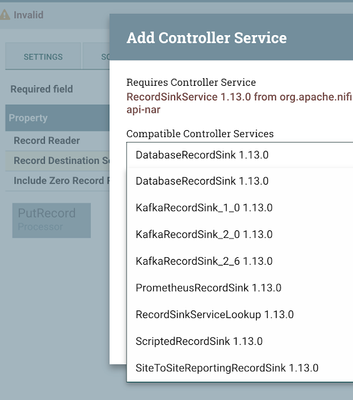

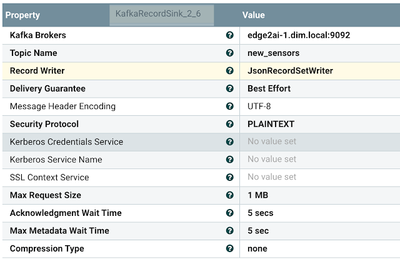

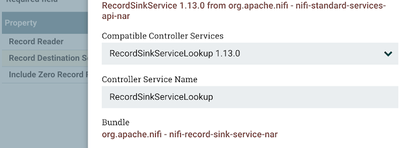

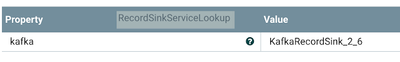

For the RecordSinkService, we pick Kafka as our destination.

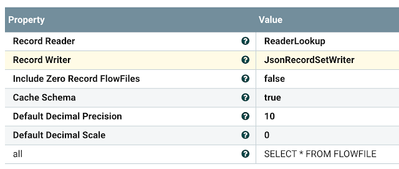

In our example, the reader resolves to JSON because of the attribute set in UpdateAttribute; we can change this dynamically as data streams in.

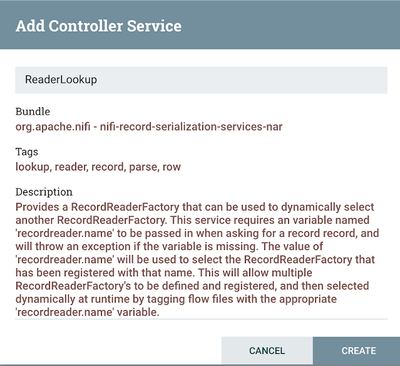

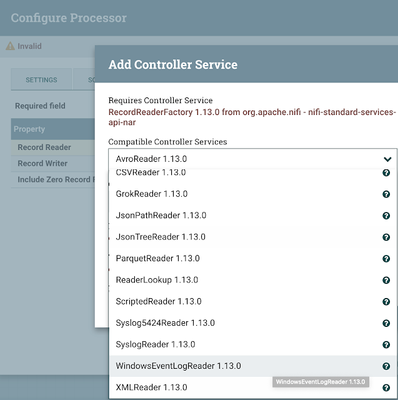

ReaderLookup - lets you pick a reader based on an attribute.

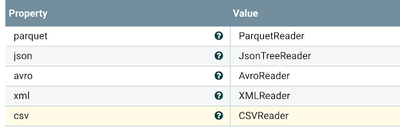

We have defined readers for Parquet, JSON, Avro, XML, and CSV, so no matter the type, the flow can automagically read it. This is great for reusing code and for cases like the new ListenFTP, where you may be sent tons of different files to process. Use one FLOW!
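The ReaderLookup idea (register several readers, then choose one per FlowFile from the `recordreader.name` attribute that UpdateAttribute sets) can be sketched with Python's standard library. This is an illustration only: `ReaderLookupSketch`, `read_json`, and `read_csv` are hypothetical names, and only JSON and CSV are covered because the other formats need third-party parsers.

```python
import csv
import io
import json
from typing import Callable, Dict, List, Mapping

Record = Dict[str, object]
Reader = Callable[[bytes], List[Record]]


def read_json(data: bytes) -> List[Record]:
    parsed = json.loads(data)
    return parsed if isinstance(parsed, list) else [parsed]  # accept one object or an array


def read_csv(data: bytes) -> List[Record]:
    # Header row becomes the field names; all values stay strings.
    return list(csv.DictReader(io.StringIO(data.decode("utf-8"))))


class ReaderLookupSketch:
    """Picks a registered reader using the FlowFile's 'recordreader.name' attribute."""

    def __init__(self, readers: Mapping[str, Reader]) -> None:
        self._readers = dict(readers)

    def read(self, content: bytes, attributes: Mapping[str, str]) -> List[Record]:
        name = attributes.get("recordreader.name")
        if name is None:
            raise KeyError("FlowFile has no 'recordreader.name' attribute")
        if name not in self._readers:
            raise KeyError(f"no reader registered under {name!r}")
        return self._readers[name](content)


if __name__ == "__main__":
    lookup = ReaderLookupSketch({"json": read_json, "csv": read_csv})
    print(lookup.read(b"id,name\n1,a\n", {"recordreader.name": "csv"}))  # [{'id': '1', 'name': 'a'}]
```

Adding a format means registering one more reader; the flow that calls `read` does not change.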

RecordSinkService can help make your flows generic, so you can drop in different sinks/destinations for your writers based on the incoming data. This is a big step forward for code reuse.

We can write our output in a custom format that could look like a document, HTML, fixed-width, a form letter, weird delimiter, or whatever you need.
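As we understand it, FreeFormTextRecordSetWriter evaluates its text template once per record, with the record's fields available to Expression Language. The sketch below imitates that idea using Python's `string.Template`, which happens to share the `${field}` placeholder syntax; it does not implement NiFi Expression Language functions, and `write_free_form` is a hypothetical name.

```python
from string import Template
from typing import Iterable, Mapping


def write_free_form(records: Iterable[Mapping[str, object]], text: str, separator: str = "\n") -> str:
    """Render each record through a ${field} template and join the results.

    safe_substitute leaves unknown placeholders untouched instead of raising.
    """
    template = Template(text)
    return separator.join(template.safe_substitute(r) for r in records)


if __name__ == "__main__":
    rows = [{"name": "Ada", "temp": 21.5}, {"name": "Lin", "temp": 19.0}]
    # A form letter...
    print(write_free_form(rows, "Dear $name, the sensor read ${temp}C."))
    # ...or a weird delimiter.
    print(write_free_form(rows, "${name}~|~${temp}"))
```

The same records can feed any number of layouts just by changing the template string.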

We use RecordSinkServiceLookup to change our sink destination dynamically; we pass in an attribute (record.sink.name) to choose Kafka.
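The sink side follows the same pattern: every destination implements one small interface, and a lookup delegates to whichever sink the `record.sink.name` attribute names. This Python sketch is illustrative; `RecordSink`, `ListSink`, `LogSink`, and `RecordSinkLookupSketch` are hypothetical, and `ListSink` only stands in for Kafka by collecting JSON strings in memory.

```python
import io
import json
from typing import Dict, List, Mapping, Protocol

Record = Dict[str, object]


class RecordSink(Protocol):
    def send(self, records: List[Record], attributes: Mapping[str, str]) -> int: ...


class ListSink:
    """In-memory stand-in for a Kafka topic: one JSON message per record."""

    def __init__(self) -> None:
        self.messages: List[str] = []

    def send(self, records: List[Record], attributes: Mapping[str, str]) -> int:
        self.messages.extend(json.dumps(r, sort_keys=True) for r in records)
        return len(records)


class LogSink:
    """Writes one line per record, prefixed with the FlowFile's filename attribute."""

    def __init__(self, stream: io.StringIO) -> None:
        self.stream = stream

    def send(self, records: List[Record], attributes: Mapping[str, str]) -> int:
        for r in records:
            self.stream.write(f"{attributes.get('filename', '?')}: {r}\n")
        return len(records)


class RecordSinkLookupSketch:
    def __init__(self, sinks: Mapping[str, RecordSink]) -> None:
        self._sinks = dict(sinks)

    def send(self, records: List[Record], attributes: Mapping[str, str]) -> int:
        # Delegate to whichever sink the 'record.sink.name' attribute names.
        return self._sinks[attributes["record.sink.name"]].send(records, attributes)
```

Swapping Kafka for another destination then only means registering another sink and changing the attribute upstream.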

As another output, UpdateHiveTable can update our Hive table from the incoming data, changing the table schema as needed.
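Conceptually, handling data drift means comparing the incoming record schema with the table's existing columns and emitting DDL for whatever is new. The sketch below shows only that comparison for added fields; it is not UpdateHiveTable's logic, it ignores type changes and table creation, and `drift_ddl` plus the `HIVE_TYPES` mapping are hypothetical.

```python
from typing import Dict, Optional

# Hypothetical mapping from simple record field types to Hive column types.
HIVE_TYPES = {"string": "STRING", "int": "INT", "long": "BIGINT", "double": "DOUBLE", "boolean": "BOOLEAN"}


def drift_ddl(table: str, existing: Dict[str, str], record_schema: Dict[str, str]) -> Optional[str]:
    """Return an ALTER TABLE statement adding record fields missing from the table, or None.

    Raises KeyError for field types not listed in HIVE_TYPES.
    """
    known = {c.lower() for c in existing}  # Hive column names are case-insensitive
    new_cols = [
        f"`{name}` {HIVE_TYPES[ftype]}"
        for name, ftype in record_schema.items()
        if name.lower() not in known
    ]
    if not new_cols:
        return None  # no drift: the table already covers every field
    return f"ALTER TABLE `{table}` ADD COLUMNS ({', '.join(new_cols)})"


if __name__ == "__main__":
    print(drift_ddl("sensors", {"id": "INT"}, {"id": "int", "temp": "double"}))
    # ALTER TABLE `sensors` ADD COLUMNS (`temp` DOUBLE)
```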

Straight from the Release Notes: New Features

- [NIFI-7386] - AzureStorageCredentialsControllerService should also connect to storage emulator

- [NIFI-7429] - Add Status History capabilities for system-level metrics

- [NIFI-7549] - Adding Hazelcast based implementation for DistributedMapCacheClient

- [NIFI-7624] - Build a ListenFTP processor

- [NIFI-7745] - Add a SampleRecord processor

- [NIFI-7796] - Add Prometheus metrics for total bytes received and bytes sent for components

- [NIFI-7801] - Add acknowledgment check to Splunk

- [NIFI-7821] - Create a Cassandra implementation of DistributedMapCacheClient

- [NIFI-7879] - Create record path function for UUID v5

- [NIFI-7906] - Add graph processor with the flexibility to query graph database conditioned on flowfile content and attributes

- [NIFI-7989] - Add Hive "data drift" processor

- [NIFI-8136] - Allow State Management to be tied to Process Session

- [NIFI-8142] - Add "on conflict do nothing" feature to PutDatabaseRecord

- [NIFI-8146] - Allow RecordPath to be used for specifying operation type and data fields when using PutDatabaseRecord

- [NIFI-8175] - Add a WindowsEventLogReader
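NIFI-8142's "on conflict do nothing" means rows that would violate a unique key are skipped instead of failing the insert. The SQL PutDatabaseRecord actually generates depends on the configured database type, so this sketch only demonstrates the semantics, using SQLite's `ON CONFLICT ... DO NOTHING` clause (SQLite 3.24+) and a hypothetical `readings` table.

```python
import sqlite3
from typing import Dict, List


def put_records_skip_conflicts(conn: sqlite3.Connection, records: List[Dict[str, object]]) -> int:
    """Insert into the hypothetical `readings` table, skipping rows whose id already exists.

    Returns the number of rows actually inserted.
    """
    count_sql = "SELECT COUNT(*) FROM readings"
    before = conn.execute(count_sql).fetchone()[0]
    conn.executemany(
        "INSERT INTO readings (id, temp) VALUES (:id, :temp) ON CONFLICT (id) DO NOTHING",
        records,
    )
    conn.commit()
    return conn.execute(count_sql).fetchone()[0] - before


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE readings (id TEXT PRIMARY KEY, temp REAL)")
    print(put_records_skip_conflicts(conn, [{"id": "s1", "temp": 20.0}, {"id": "s2", "temp": 21.0}]))  # 2
    print(put_records_skip_conflicts(conn, [{"id": "s2", "temp": 99.0}, {"id": "s3", "temp": 22.0}]))  # 1
```

The existing row for `s2` keeps its original value; the duplicate is silently dropped rather than routed to failure.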

An update on Cloudera Flow Management!

Cloudera Flow Management on DataHub Public Cloud

This minor update has some Schema Registry and Atlas integration updates.

If that wasn't enough, a new version of MiNiFi C++ Agent!

Cloudera Edge Manager 1.2.2 Release

February 15, 2021

CEM MiNiFi C++ Agent - 1.21.01 release includes:

- Support for JSON output in the Consume Windows Event Log processor

- Full Expression Language support on Windows

- Full S3 support (List, Fetch, Get, Put)

Remember when you are done.
