Community Articles

- Cloudera Community

- Support

- Community Articles

- NiFi ETL: Removing columns, filtering rows, changi...

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Created on 11-16-2016 08:10 PM - edited 08-17-2019 07:54 AM

Introduction

In this article I demonstrate how to use NiFi to manipulate with data records structured in columns, by showing how to perform the following three ETL operations in one flow against a dataset:

- Remove one or more columns (fields) from the dataset

- Filter out rows based on one or more field values

- Change field values (e.g convert all nulls to empty characters)

These operations are based strongly on use of regular expressions in native NiFi processors. I assume you have basic knowledge of regular expressions and point out the key regular expression pattern needed to work with data structured in columns (e.g to operate on the 3rd , 5th and 6th columns of a delimited file).

For transformations more complex than shown here, you use the same flow and regex pattern but more complex expressions inside of the regex pattern. Note that if NiFi starts to feel limited as an ETL tool (extreme complexity of transformations or volumes), consider pig with UDFs or 3rd party ETL tools. Also keep in mind that if you are using NiFi to land data in Hadoop, it is a best practice to land and persist the data raw and then transform from there (pig is a good tool). So, be sure to ask yourself whether transformations should be done in NiFi or elsewhere.

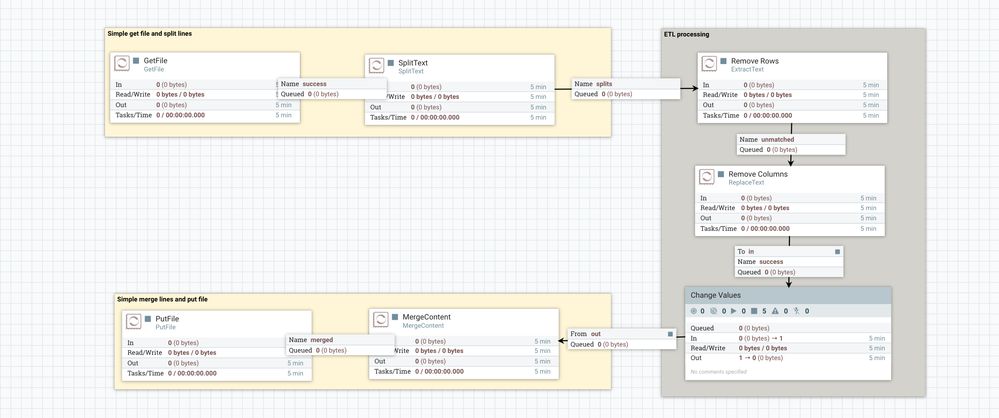

The Overall Flow

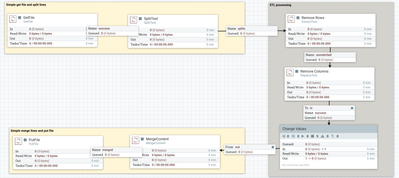

The overall flow is shown below, with the processors in gray doing the transformations.

Basic flow is:

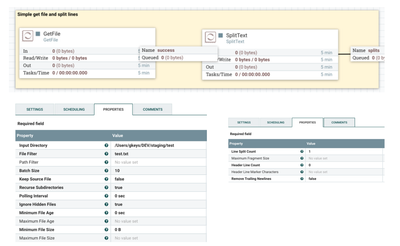

- GetFile and SplitText feed records of a delimited file (e.g. csv) into the ETL processors.

- ExtractText filters out records (in my flow I match records to discard and flow the unmatched records)

- ReplaceText removes the same column(s) from the filtered records

- A processing group of ReplaceText processors changes values of fields in the record, based on specified condition(s)

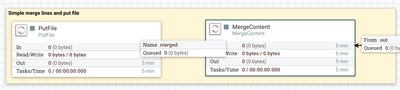

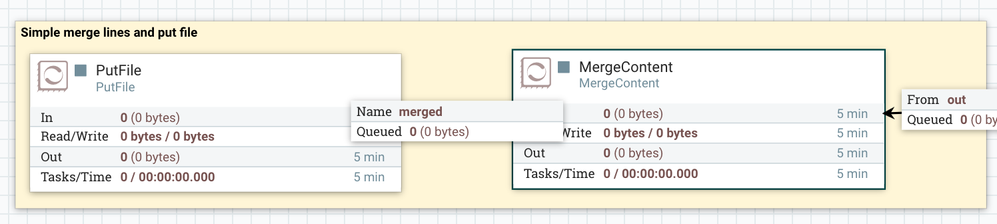

- MergeContent and PutFile append the result into a single file.

Note that the data can be gotten from any source (not necessarily a file as shown here) and put to any source. The only requirement is that the lines inputted to the ETL processing subflow are delimited into the same number of fields (comma-separated, tab-separated, etc).

The Regular Expression Pattern to Work with Columns (Fields)

The key regex pattern to work with data in columns is shown below. I will use the example of a comma-separated file.

((?:.*,){n}) represents any n consecutive fields, where .* represents any value of a field (including empty value) and commas are field delimiters^ represents the beginning of a line (record)

So, each record can be represented as:

^((?:.*,){n})(.*) where((?:.*,){n}) are the first n fields and (.*) is the last fieldNote that each outer () represents expression groups. So:

^((?:.*,){n})(.*) In regex $1 will represent the first expression group ((?:.*,){n}) which are the first n fields and $2 will represent the second expression group (.*) which is the last field^((?:.*,){2})(.*,)((?:.*,){6})(.*) $1 represents the first 2 fields, $2 represents the 3rd field, $3 represents fields 4-9 and $4 represents the last field.The usefulness of this regular expression pattern should be clear as shown below.

Example ETL

I am going to use the following simple data set where the first column is referred to as column 1.

0001,smith,joe,garbage field,10-09-2015,null,6.8,T 0002,gupta,kaya,junk feild,08-01-2014,A,7.9,A 0003,harrison,audrey,no comment,01-17-2016,T,5.1,A 0004,chen,wen,useless words,12-21-2015,B,8.1,A 0005,abad,zaid,*65 fr @abc.com,03-21-2014,A,7.8,null

and perform the following transformations:

- Filter out all rows with value T in column 6

- Remove column 4 from all records

- Convert all null to empty characters

The Flow

Extract the data and feed to transform processing

I get the data from a file (you could fetch from another type of data store) and split the lines into a sequence of flow files fed to the transformations.

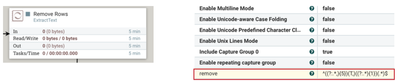

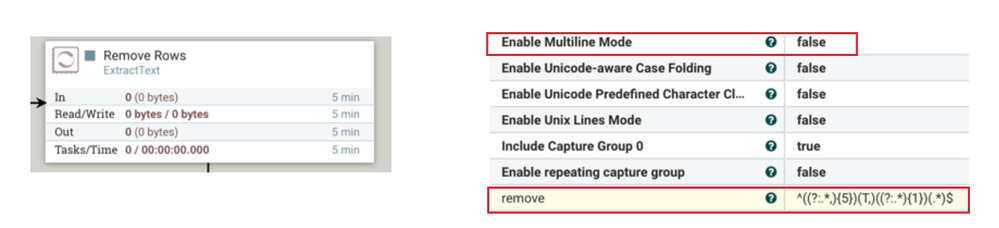

Filter Records

Records are filtered with the ExtractText processor. Recall that SplitText is feeding each record to this processor as a sequence of single files.

Key setting is the attribute "remove" that I have added. Any line that matches this regular expression will not be sent to the next processor (because the connection is to send unmatched). Note that if you want filter based on more conditions you can either expand the regex shown or added more attributes (which is an OR logic, any record satisfying one or more of the attributes added is considered matched).

As explained above, the regex shown will match any record with T as value in the 6th field.

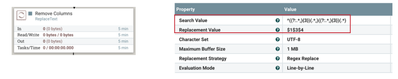

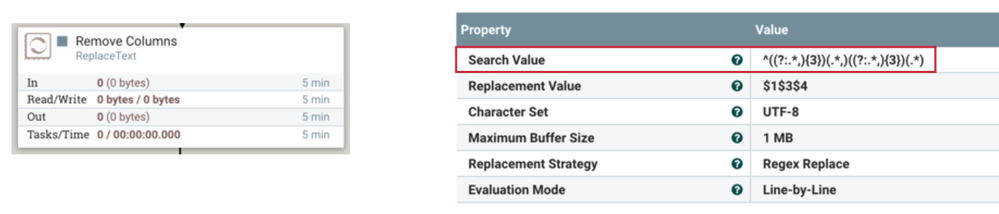

Remove Columns

Columns are removed with the ReplaceText processor.

As explained earlier, the Search Value regular expression defines 4 expression groups (the first 3 columns, the 4th column, the next 3 columns, and the last column. The Replacement Value says to keep the 1st, 3rd and 4 expression groups, thus dropping the 4th column.

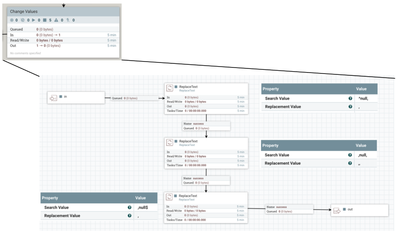

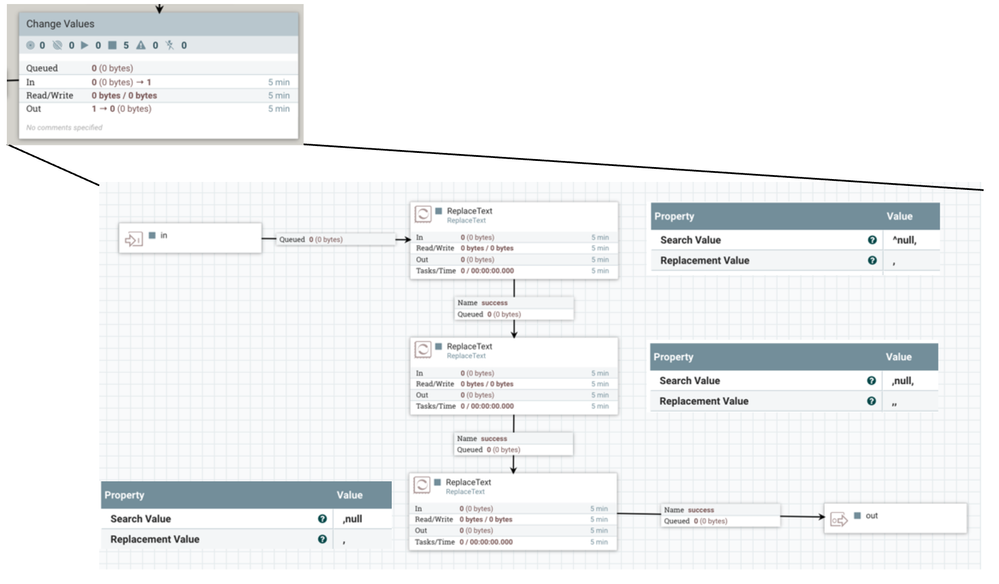

Replace Values

I use three ReplaceText Processors to replace field values for the first field (no delim before value), middle fields (delim before and after value) and last field (no delim after value). I use this to replace null with empty character. I also use a processor group to organize these conveniently.

Load

I put the data to a file but you could load to any target. I use the default settings for these processors, except for the path data I specify for the target file.

Result

0001,smith,joe,10-09-2015,,6.8,T 0005,abad,zaid,03-21-2014,A,7.8, 0004,chen,wen,12-21-2015,B,8.1,A 0002,gupta,kaya,08-01-2014,A,7.9,A

Conclusion

Using NiFi to transforming fields of data (remove columns, change field values) is fairly straightforward if you are strong in regular expressions. For data structured as columns, the key regular expression concept to leverage is repeated expression groups to represent column positions as described earlier in the article. Beyond this, the more skilled you are in regular expressions the greater you will be able to leverage more complex transformations using NiFi. And as stated in the introduction, be sure to ask if transformations are more apropriate in NiFi or elsewhere.

Resources

- http://hortonworks.com/apache/nifi

- https://nifi.apache.org/docs/nifi-docs

- http://hortonworks.com/hadoop-tutorial/learning-ropes-apache-nifi

- https://nifi.apache.org/docs/nifi-docs/html/getting-started.html

- https://nifi.apache.org/docs/nifi-docs/html/nifi-in-depth.html

- https://community.hortonworks.com/articles/7999/apache-nifi-part-1-introduction.html

- http://www.regular-expressions.info

- http://shop.oreilly.com/product/9780596528126.do

Created on 06-27-2017 08:37 PM

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Hi @Greg Keys,

Thank you for this document.

May I ask if I would like to search for datetime type(YYY-MM-DD HH:MM:SS) in a row and convert it to unix timestamp type.

Any idea on it?

Thanks.

Created on 11-03-2017 07:42 PM

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Hi @Greg Keys,

Thanks for the post. Row filtering works based on the column values which is not in the end. But I am not sure how to filter the rows based on the last column value. Can you please let me know.

Thanks