Optimizing HBase I/O for Large Scale Hadoop Implem...

Created on 12-27-2016 05:06 PM - edited 08-17-2019 06:36 AM

The distributed nature of HBase, coupled with the concepts of an ordered write log and a log-structured merge tree, makes HBase a great database for large scale data processing. Over the years, HBase has proven itself to be a reliable storage mechanism when you need random, realtime read/write access to your Big Data.

HBase delivers this performance by prioritizing memory and caching structures over disk I/O. The memory store, implemented as the MemStore, accumulates data edits as they’re received, buffering them in memory (1). The block cache, an implementation of the BlockCache interface, keeps data blocks resident in memory after they’re read.

OVERVIEW OF HBASE I/O

MemStore

The MemStore is important for accessing recent edits. Without the MemStore, accessing that data as it was written into the write log would require reading and deserializing entries back out of that file, at least an O(n) operation. Instead, the MemStore maintains a skiplist structure, which has an O(log n) access cost and requires no disk I/O. The MemStore contains just a tiny piece of the data stored in HBase, however.
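To make the cost difference concrete, here is a minimal Python sketch. It is not HBase code: a sorted list searched with `bisect` stands in for the skiplist (lookups are O(log n), although inserting into a Python list is O(n), which a real skiplist avoids), and a plain list of tuples stands in for the write log. The names `find_in_log` and `SortedBuffer` are hypothetical.

```python
import bisect

def find_in_log(log, key):
    """Scan an append-only log from newest to oldest entry: O(n)."""
    for k, v in reversed(log):
        if k == key:
            return v
    return None

class SortedBuffer:
    """Keeps keys sorted so a lookup can binary-search: O(log n)."""

    def __init__(self):
        self._keys = []
        self._values = []

    def put(self, key, value):
        i = bisect.bisect_left(self._keys, key)
        if i < len(self._keys) and self._keys[i] == key:
            self._values[i] = value          # newer edit replaces the older one
        else:
            self._keys.insert(i, key)
            self._values.insert(i, value)

    def get(self, key):
        i = bisect.bisect_left(self._keys, key)
        if i < len(self._keys) and self._keys[i] == key:
            return self._values[i]
        return None

# The same edits go to both structures, as they would on a write.
log = []
buf = SortedBuffer()
for k, v in [("row3", "c"), ("row1", "a"), ("row2", "b"), ("row1", "a2")]:
    log.append((k, v))
    buf.put(k, v)
```

Both `find_in_log(log, "row1")` and `buf.get("row1")` return the latest edit, but only the first has to walk the log entry by entry.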

Caching Options

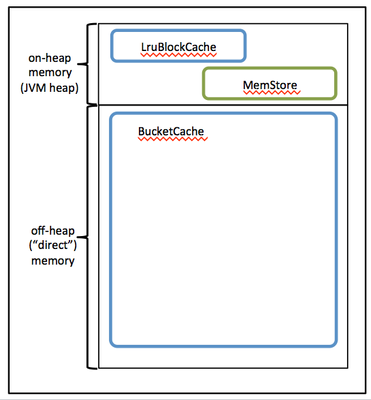

HBase provides two different BlockCache implementations: the default on-heap LruBlockCache and the BucketCache, which is (usually) off-heap. This section discusses benefits and drawbacks of each implementation, how to choose the appropriate option, and configuration options for each.

The following table describes several concepts related to HBase file operations and memory (RAM) caching.

| Component | Description |
| --- | --- |
| HFile | An HFile contains table data, indexes over that data, and metadata about the data. |
| Block | An HBase block is the smallest unit of data that can be read from an HFile. Each HFile consists of a series of blocks. (Note: an HBase block is different than an HDFS block or other underlying file system blocks.) |
| BlockCache | BlockCache is the main HBase mechanism for low-latency random read operations. BlockCache is one of two memory cache structures maintained by HBase. When a block is read from HDFS, it is cached in BlockCache. Frequent access to rows in a block causes the block to be kept in cache, improving read performance. |
| MemStore | MemStore ("memory store") is the second of two cache structures maintained by HBase. MemStore improves write performance. It accumulates data until it is full, and then writes ("flushes") the data to a new HFile on disk. MemStore serves two purposes: it increases the total amount of data written to disk in a single operation, and it retains recently written data in memory for subsequent low-latency reads. |
| Write Ahead Log (WAL) | The WAL is a log file that records all changes to data until the data is successfully written to disk (MemStore is flushed). This protects against data loss in the event of a failure before MemStore contents are written to disk. |

BlockCache and MemStore reside in random-access memory (RAM); HFiles and the Write Ahead Log are persisted to HDFS.

The read and write operations for any out-of-the-box HBase implementation can be described as follows:

- During write operations, HBase writes to the WAL and the MemStore. Data is flushed from the MemStore to disk according to size limits and flush interval.

- During read operations, HBase reads the block from BlockCache or MemStore if it is available in those caches. Otherwise, it reads from disk and stores a copy in BlockCache.
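The two paths above can be sketched as a toy model in Python. This is an illustration under heavy simplifications, not how HBase is implemented: the class `ToyRegionServer` and its fields are hypothetical, dictionaries stand in for HFiles and cache blocks, and a flush is triggered by an entry count instead of HBase's size limits and flush interval.

```python
class ToyRegionServer:
    def __init__(self, flush_threshold=3):
        self.wal = []            # append-only record of edits not yet flushed
        self.memstore = {}       # recent edits held in memory
        self.block_cache = {}    # data kept in memory after a disk read
        self.hfiles = []         # flushed, immutable "files", newest last
        self.flush_threshold = flush_threshold
        self.disk_reads = 0

    def put(self, key, value):
        # Write path: log the edit first, then buffer it in memory.
        self.wal.append((key, value))
        self.memstore[key] = value
        if len(self.memstore) >= self.flush_threshold:
            self.flush()

    def flush(self):
        # Persist the buffered edits as a new file, then reset the buffer.
        self.hfiles.append(dict(self.memstore))
        for key in self.memstore:
            # Toy simplification: drop cached values the new file supersedes.
            self.block_cache.pop(key, None)
        self.memstore.clear()
        self.wal.clear()         # flushed edits no longer need the log

    def get(self, key):
        # Read path: memory first, disk only on a miss.
        if key in self.memstore:
            return self.memstore[key]
        if key in self.block_cache:
            return self.block_cache[key]
        for hfile in reversed(self.hfiles):
            if key in hfile:
                self.disk_reads += 1
                self.block_cache[key] = hfile[key]   # keep a copy for next time
                return hfile[key]
        return None

rs = ToyRegionServer(flush_threshold=2)
rs.put("a", 1)
from_memstore = rs.get("a")      # served from the MemStore, no disk read
rs.put("b", 2)                   # reaches the threshold and triggers a flush
first_read = rs.get("a")         # miss in both caches: one disk read
second_read = rs.get("a")        # now served from the block cache
```

After the second read of `"a"`, `rs.disk_reads` is still 1, which is the effect the BlockCache is there to provide.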

Caching Structure

By default, BlockCache resides in an area of RAM that is managed by the Java Virtual Machine ("JVM") Garbage Collector; this area of memory is known as "on-heap" memory or the "Java heap." The BlockCache implementation that manages on-heap cache is called LruBlockCache.

If you have stringent read latency requirements and more than 20 GB of RAM available on your servers for use by HBase RegionServers, consider configuring BlockCache to use both on-heap and off-heap memory, as the real-world example later in this article does. The associated BlockCache implementation is called BucketCache. Read latencies for BucketCache tend to be less erratic than for LruBlockCache under large cache loads, because BucketCache (not JVM garbage collection) manages block cache allocation.

The figure below illustrates HBase's caching structure:

How to choose?

There are two reasons to consider enabling one of the alternative BlockCache implementations. The first is simply the amount of RAM you can dedicate to the region server. Community wisdom recognizes the upper limit of the JVM heap, as far as the region server is concerned, to be somewhere between 14GB and 31GB. The precise limit usually depends on a combination of hardware profile, cluster configuration, the shape of data tables, and application access patterns.

The other time to consider an alternative cache is when response latency really matters. Keeping the heap down around 8-12GB allows the CMS collector to run very smoothly, which has measurable impact on the 99th percentile of response times. Given this restriction, the only choices are to explore an alternative garbage collector or take one of these off-heap implementations for a spin.

Let's take a real world example and go from there.

REAL WORLD SCENARIO

In the example below, I had three HBase RegionServer nodes, each with ~24 TB of SSD-based storage and 512 GB of RAM. The setup was as follows:

- 256 GB allocated to the RegionServer process (the remaining memory is available for other HDP processes)

- Workload of 50% reads and 50% writes

- HBase heap size of 20 GB (20480 MB)

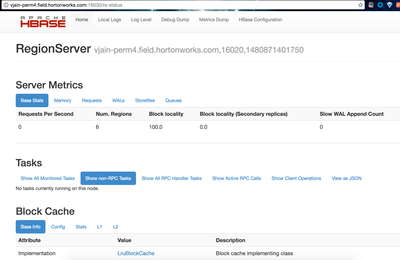

1. The best way to monitor HBase is through the UI that comes with every HBase installation, known as the HBase Master UI. By default, it is available at http://<HBASE_MASTER_HOST>:16010/master-status.

2. Click on the individual region server to view the stats for that server. See below:

3. Click on the stats and you will have all that you need to see about Block cache.

BlockCache Issues

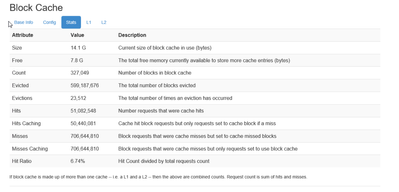

In this particular example, we experienced a number of GC pauses, and RegionTooBusyException errors had started flooding the logs. So I followed the steps above to see whether there was an issue with the way HBase's block cache was behaving.

The LruBlockCache is an LRU cache that contains three levels of block priority to allow for scan-resistance and in-memory ColumnFamilies:

- Single access priority: The first time a block is loaded from HDFS it normally has this priority and it will be part of the first group to be considered during evictions. The advantage is that scanned blocks are more likely to get evicted than blocks that are getting more usage.

- Multi access priority: If a block in the previous priority group is accessed again, it upgrades to this priority. It is thus part of the second group considered during evictions.

- In-memory access priority: If the block’s family was configured to be "in-memory", it will be part of this priority disregarding the number of times it was accessed. Catalog tables are configured like this. This group is the last one considered during evictions.
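The three priority groups can be illustrated with a small Python sketch. It is a simplified model, not the LruBlockCache implementation: the class `PriorityLruCache` is hypothetical, capacity is counted in entries instead of bytes, and eviction simply empties the lowest-priority group first, without modeling how the real cache sizes each group.

```python
from collections import OrderedDict

SINGLE, MULTI, IN_MEMORY = 0, 1, 2

class PriorityLruCache:
    def __init__(self, capacity):
        self.capacity = capacity
        # One LRU-ordered dict per priority group; oldest entry comes first.
        self.groups = {p: OrderedDict() for p in (SINGLE, MULTI, IN_MEMORY)}
        self.evicted = 0

    def _find(self, key):
        for priority, group in self.groups.items():
            if key in group:
                return priority
        return None

    def get(self, key):
        priority = self._find(key)
        if priority is None:
            return None                        # cache miss
        value = self.groups[priority].pop(key)
        if priority == SINGLE:
            priority = MULTI                   # accessed again: promote
        self.groups[priority][key] = value     # re-insert as most recently used
        return value

    def put(self, key, value, in_memory=False):
        old = self._find(key)
        if old is not None:
            del self.groups[old][key]
        self.groups[IN_MEMORY if in_memory else SINGLE][key] = value
        while sum(len(g) for g in self.groups.values()) > self.capacity:
            self._evict_one()

    def _evict_one(self):
        # Consider single-access blocks first, in-memory blocks last.
        for priority in (SINGLE, MULTI, IN_MEMORY):
            if self.groups[priority]:
                self.groups[priority].popitem(last=False)   # least recently used
                self.evicted += 1
                return

cache = PriorityLruCache(capacity=3)
cache.put("hot", "h")
cache.get("hot")                          # second access promotes "hot" to MULTI
cache.put("meta", "m", in_memory=True)    # e.g. a block from an in-memory family
for i in (1, 2, 3):
    cache.put(f"scan{i}", f"s{i}")        # a scan touching each block once
```

In this run the scan pushes out only its own earlier blocks: `"hot"` and `"meta"` survive, which is the scan resistance the priority levels are meant to provide.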

As the stats show, a large number of blocks had been evicted. Eviction is generally fine, simply because the cache needs to be freed up as you continue to write data to your region server. However, in my case there was plenty of room in the cache, and technically HBase should have continued to cache the data rather than evicting any blocks.

The second problem was a very large number of cache misses (~70 million). A cache miss occurs when the requested data is not found in the cache. It delays the request, because the data then has to be fetched from a slower tier; for the BlockCache, that means reading the block from an HFile on disk.

So clearly, we had to address the following in order to get better performance from our HBase cluster:

- Reduce the number of evicted blocks

- Reduce the number of eviction occurrences

- Reduce the number of block requests that were cache misses and set to use the block cache

- Maximize the hit ratio
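For reference, the hit ratio in the list above is simply the share of block requests served from the cache. A minimal Python sketch follows; the function name is hypothetical and the counter values are illustrative placeholders, not measurements from this cluster.

```python
def hit_ratio(hits, misses):
    """Fraction of cache lookups that were served from the cache."""
    total = hits + misses
    return hits / total if total else 0.0

# Placeholder counters, chosen only to show the calculation.
before = hit_ratio(hits=25, misses=75)
after = hit_ratio(hits=90, misses=10)
```

Because every miss adds to the denominator, cutting misses raises the ratio even when the number of hits stays the same.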

Issue Resolution

In order to resolve the issues mentioned above, we need to configure and enable BucketCache. To do this, note the memory specifications and values, plus additional values shown in the following table:

| Item | Description | Value or Formula | Example |
| --- | --- | --- | --- |
| A | Total physical memory for RegionServer operations: on-heap plus off-heap ("direct") memory (MB) | (hardware dependent) | 262144 |
| B | HBASE_HEAPSIZE (-Xmx): maximum size of JVM heap (MB) | Recommendation: 20480 | 20480 |
| C | -XX:MaxDirectMemorySize: amount of off-heap ("direct") memory to allocate to HBase (MB) | A - B | 262144 - 20480 = 241664 |
| Dp | hfile.block.cache.size: proportion of maximum JVM heap size (HBASE_HEAPSIZE, -Xmx) to allocate to BlockCache. The sum of this value plus hbase.regionserver.global.memstore.size must not exceed 0.8. | (proportion of reads) * 0.8 | 0.50 * 0.8 = 0.4 |
| Dm | Maximum amount of JVM heap to allocate to BlockCache (MB) | B * Dp | 20480 * 0.4 = 8192 |
| Ep | hbase.regionserver.global.memstore.size: proportion of maximum JVM heap size (HBASE_HEAPSIZE, -Xmx) to allocate to MemStore. The sum of this value plus hfile.block.cache.size must be less than or equal to 0.8. | 0.8 - Dp | 0.8 - 0.4 = 0.4 |
| F | Amount of off-heap memory to reserve for other uses (DFSClient; MB) | Recommendation: 1024 to 2048 | 2048 |
| G | Amount of off-heap memory to allocate to BucketCache (MB) | C - F | 241664 - 2048 = 239616 |
| | hbase.bucketcache.size: total amount of memory to allocate to BucketCache, on- and off-heap (MB) | Dm + G | 8192 + 239616 = 247808 |
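The arithmetic in the table is easy to get wrong by hand, so here is a short Python sketch that applies the formulas as written in the "Value or Formula" column. The function name and dictionary keys are hypothetical; the 0.8 ceiling and the 2048 MB reserve are the values given in the table.

```python
def bucketcache_sizing(total_mb, heap_mb, read_fraction, reserved_offheap_mb=2048):
    """Apply the table's formulas; every size is in MB."""
    direct_mb = total_mb - heap_mb                                  # C = A - B
    block_cache_fraction = round(read_fraction * 0.8, 4)            # Dp
    block_cache_heap_mb = round(heap_mb * block_cache_fraction)     # Dm = B * Dp
    memstore_fraction = round(0.8 - block_cache_fraction, 4)        # Ep = 0.8 - Dp
    offheap_bucket_mb = direct_mb - reserved_offheap_mb             # G = C - F
    return {
        "C": direct_mb,
        "Dp": block_cache_fraction,
        "Dm": block_cache_heap_mb,
        "Ep": memstore_fraction,
        "G": offheap_bucket_mb,
        "bucketcache_size": block_cache_heap_mb + offheap_bucket_mb,  # Dm + G
    }

# Inputs from the example column: A = 262144, B = 20480, 50% reads, F = 2048.
sizing = bucketcache_sizing(total_mb=262144, heap_mb=20480, read_fraction=0.5)
```

With these inputs, C comes to 241664 MB, G to 239616 MB, and Dm + G to 247808 MB.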

Enable BucketCache

After working out the values in the table above, follow these steps to configure BucketCache.

- In the hbase-env.sh file for each RegionServer, or in the hbase-env.sh file supplied to Ambari, set the -XX:MaxDirectMemorySize argument for HBASE_REGIONSERVER_OPTS to the amount of direct memory you wish to allocate to HBase. In the configuration for the example discussed above, the value would be 241664m. (-XX:MaxDirectMemorySize accepts a number followed by a unit indicator; m indicates megabytes.) The line below sets the flag through HBASE_OPTS, which applies it to every HBase process started with this file; to limit it to RegionServers, set it through HBASE_REGIONSERVER_OPTS instead.

  ```
  HBASE_OPTS="$HBASE_OPTS -XX:MaxDirectMemorySize=241664m"
  ```

- In the hbase-site.xml file, specify BucketCache size and percentage. In the example discussed above, the values would be 247808 (Dm + G) and 0.96694215 (G divided by that total), respectively. If you choose to round the proportion, round up. This will allocate space related to rounding error to the (larger) off-heap memory area.

  ```xml
  <property>
    <name>hbase.bucketcache.size</name>
    <value>247808</value>
  </property>
  ```

- In the hbase-site.xml file, set hbase.bucketcache.ioengine to offheap. This enables BucketCache.

  ```xml
  <property>
    <name>hbase.bucketcache.ioengine</name>
    <value>offheap</value>
  </property>
  ```

- Restart (or rolling restart) the cluster. It can take a minute or more to allocate BucketCache, depending on how much memory you are allocating. Check logs for error messages.
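If you maintain these settings for several RegionServers, it can help to generate the property entries instead of typing them by hand. The following Python sketch renders name/value pairs in the `<property>` layout that hbase-site.xml uses. The helper `render_properties` is hypothetical, and the size passed in is assumed to be the Dm + G result from the sizing table.

```python
import xml.etree.ElementTree as ET

def render_properties(props):
    """Render a dict of settings as hbase-site.xml style <property> entries."""
    config = ET.Element("configuration")
    for name, value in props.items():
        prop = ET.SubElement(config, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = str(value)
    if hasattr(ET, "indent"):        # pretty-printing is available on Python 3.9+
        ET.indent(config)
    return ET.tostring(config, encoding="unicode")

xml_text = render_properties({
    "hbase.bucketcache.ioengine": "offheap",
    "hbase.bucketcache.size": 247808,     # assumed: Dm + G from the sizing table
})
```

The resulting text can be reviewed and merged into the existing hbase-site.xml; the sketch does not touch any file on its own.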

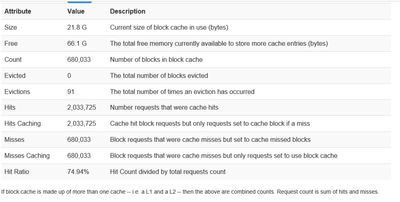

Results

And Voila!

- 100% reduction in the number of evicted blocks

- 99% reduction in the number of evictions

- A reduction of 90% in the number of cache misses

- Hit ratio went up 10x

Created on 01-17-2021 12:41 PM


Hi @vjain ,

To configure the BucketCache, the description mentions two JVM properties. Which one should be used: HBASE_OPTS or HBASE_REGIONSERVER_OPTS?

In the hbase-env.sh file for each RegionServer, or in the hbase-env.sh file supplied to Ambari, set the -XX:MaxDirectMemorySize argument for HBASE_REGIONSERVER_OPTS to the amount of direct memory you wish to allocate to HBase. In the configuration for the example discussed above, the value would be 241664m. (-XX:MaxDirectMemorySize accepts a number followed by a unit indicator; m indicates megabytes.)

HBASE_OPTS="$HBASE_OPTS -XX:MaxDirectMemorySize=241664m"

Thanks,

Helmi KHALIFA