- Practice on using ansible 2.4 to deploy HDP 2.6.4....

Created on 04-14-2018 07:06 PM - edited 08-17-2019 07:50 AM

Ambari Server version: 2.5.2.0-298

HDP version: 2.6.4.0-91

Ansible version: 2.4

OS version: centos7

Several deployment tools can automate an HDP installation. One reason Ansible is popular is that it is lightweight and relies entirely on SSH connections, with no agent to install on the managed nodes. Ansible scripts have their own organizational structure, which we will not cover in detail here; readers who are not familiar with its syntax and execution logic can refer to this quick tutorial:

https://serversforhackers.com/c/an-ansible2-tutorial

When you dig into the full set of scripts for the HDP installation, though, you should keep this document at hand:

https://docs.ansible.com/ansible/2.4/porting_guide_2.4.html

Be aware that writing the full set of Ansible scripts from scratch is tedious. To save time, you can build on existing scripts. For an HDP installation we can start from the following repository, although it still needs tailoring to fit your case:

https://github.com/hortonworks/ansible-hortonworks

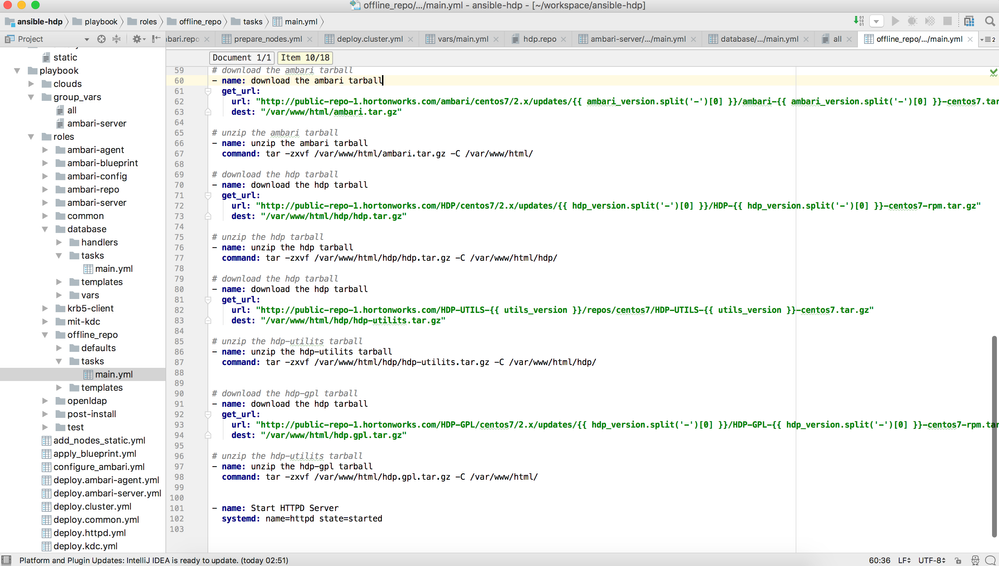

A good script editor improves your efficiency. I recommend writing the scripts in IntelliJ IDEA, which gives you a neat, clear layout for keeping everything in its place. So we now have a project set up in IntelliJ IDEA.

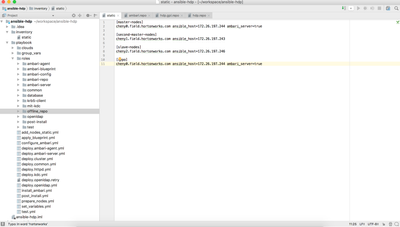

Next, here is the full picture of this Ansible project's structure. First we need to define the cluster layout, so we have an inventory file named 'static', as shown above. To divide the installation into a sequence of macro steps, or phases, we then define a set of roles, each of which organizes related tasks and data into one coherent structure. Roles have a standard directory structure; here we use only part of it: defaults, tasks, templates, and vars.

The defaults and tasks directories each contain a main.yml: defaults/main.yml holds default variable values, and tasks/main.yml strings a sequence of micro-steps together as a series of tasks. Template files can contain template variables and are rendered by Python's Jinja2 template engine. Files in the templates directory should end in .j2 but can otherwise have any name. In our project, we use templates to generate the blueprint and the local repository files. The vars directory contains YAML files that simply list the variables we use in the roles.
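To make the layout concrete, here is a minimal Python sketch that scaffolds the four role subdirectories described above inside a temporary directory. The helper name `scaffold_role` and the role name "common" used in the demo are illustrative assumptions; the real project may hold additional files (for example, OS-specific files under vars).

```python
import tempfile
from pathlib import Path
from typing import List

# The four role subdirectories used in this article.
ROLE_DIRS = ("defaults", "tasks", "templates", "vars")


def scaffold_role(project_root: Path, role_name: str) -> List[str]:
    """Create roles/<role_name>/ with the subdirectories above.

    Returns the created paths relative to project_root. A main.yml is
    created in every directory except templates, which holds *.j2 files.
    (Hypothetical helper, for illustration only.)
    """
    role_root = project_root / "roles" / role_name
    created = []
    for sub in ROLE_DIRS:
        directory = role_root / sub
        directory.mkdir(parents=True, exist_ok=True)
        if sub == "templates":
            created.append(directory.relative_to(project_root).as_posix() + "/")
        else:
            main_file = directory / "main.yml"
            main_file.touch()
            created.append(main_file.relative_to(project_root).as_posix())
    return created


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        for path in scaffold_role(Path(tmp), "common"):
            print(path)
```

Ansible's own `ansible-galaxy init` command generates a fuller skeleton; the sketch only mirrors the subset this project uses.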

Now let me walk you through the installation process. The script "deploy.cluster.yml" lays out the installation workflow as follows:

# Will Prepare the Nodes
- include: "prepare_nodes.yml"

# Install Repository
- include: "deploy.httpd.yml"

# Install Ambari Agent
- include: "install_ambari.yml"
  tags: install_ambari

# Register local repository to Ambari Server
- include: "configure_ambari.yml"

# Generate blueprint
- include: "apply_blueprint.yml"
  tags: blueprint

- include: "post_install.yml"

Let's say we start from a clean OS with only password-less SSH configured, so the very first step is to install some basic packages and apply a few OS settings.

packages:
  - python-httplib2   # required for calling Ambari API from Ansible
  - openssh-clients   # scp required by Ambari
  - curl              # curl required by Ambari
  - unzip             # unzip required by Ambari
  - tar               # tar required by Ambari
  - wget              # wget required by Ambari
  - openssl           # openssl required by Ambari
  - chrony            # ntp required by Hadoop

ntp_service_name: chronyd

firewall_service_name: firewalld

update_grub_command: 'grub2-mkconfig -o "$(readlink -n /etc/grub2.cfg)"'

This preparation is handled by the "common" role. Next we configure the repositories for Ambari and HDP. In most cases the cluster has limited access to the internet, so it is better to download the repositories and configure local ones for the cluster nodes to use. To this end, we need to do two things: first, set up an HTTP server and put the repositories under its root directory; second, generate the repo files and distribute them across the cluster.

The following snippet is from the tasks of the "offline-repo" role. It downloads each tarball and extracts it into the HTTP server's root directory.

# download the ambari tarball
- name: download the ambari tarball
  get_url:
    url: "http://public-repo-1.hortonworks.com/ambari/centos7/2.x/updates/{{ ambari_version.split('-')[0] }}/ambari-{{ ambari_version.split('-')[0] }}-centos7.tar.gz"
    dest: "/var/www/html/ambari.tar.gz"

# unzip the ambari tarball
- name: unzip the ambari tarball
  command: tar -zxvf /var/www/html/ambari.tar.gz -C /var/www/html/

# download the hdp tarball
- name: download the hdp tarball
  get_url:
    url: "http://public-repo-1.hortonworks.com/HDP/centos7/2.x/updates/{{ hdp_version.split('-')[0] }}/HDP-{{ hdp_version.split('-')[0] }}-centos7-rpm.tar.gz"
    dest: "/var/www/html/hdp/hdp.tar.gz"

# unzip the hdp tarball
- name: unzip the hdp tarball
  command: tar -zxvf /var/www/html/hdp/hdp.tar.gz -C /var/www/html/hdp/

# download the hdp-utils tarball
- name: download the hdp-utils tarball
  get_url:
    url: "http://public-repo-1.hortonworks.com/HDP-UTILS-{{ utils_version }}/repos/centos7/HDP-UTILS-{{ utils_version }}-centos7.tar.gz"
    dest: "/var/www/html/hdp/hdp-utilits.tar.gz"

# unzip the hdp-utils tarball
- name: unzip the hdp-utils tarball
  command: tar -zxvf /var/www/html/hdp/hdp-utilits.tar.gz -C /var/www/html/hdp/

# download the hdp-gpl tarball
- name: download the hdp-gpl tarball
  get_url:
    url: "http://public-repo-1.hortonworks.com/HDP-GPL/centos7/2.x/updates/{{ hdp_version.split('-')[0] }}/HDP-GPL-{{ hdp_version.split('-')[0] }}-centos7-rpm.tar.gz"
    dest: "/var/www/html/hdp.gpl.tar.gz"

# unzip the hdp-gpl tarball
- name: unzip the hdp-gpl tarball
  command: tar -zxvf /var/www/html/hdp.gpl.tar.gz -C /var/www/html/
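The download URLs in these tasks depend on the Jinja2 expression `split('-')[0]`, which strips the build suffix from a version string. The following Python sketch reproduces that logic so you can check which URLs a given set of versions would produce. The function names are illustrative, and the `utils_version` value in the demo is an assumed example, since the article does not state it; whether the URLs are still reachable is not checked.

```python
REPO_HOST = "http://public-repo-1.hortonworks.com"


def base_version(full_version: str) -> str:
    """Mirror the Jinja2 expression {{ version.split('-')[0] }}.

    Drops the build suffix, e.g. "2.5.2.0-298" -> "2.5.2.0".
    """
    return full_version.split("-")[0]


def tarball_urls(ambari_version: str, hdp_version: str, utils_version: str) -> dict:
    """Build the four tarball URLs used by the download tasks above."""
    ambari = base_version(ambari_version)
    hdp = base_version(hdp_version)
    return {
        "ambari": f"{REPO_HOST}/ambari/centos7/2.x/updates/{ambari}/ambari-{ambari}-centos7.tar.gz",
        "hdp": f"{REPO_HOST}/HDP/centos7/2.x/updates/{hdp}/HDP-{hdp}-centos7-rpm.tar.gz",
        "hdp-utils": f"{REPO_HOST}/HDP-UTILS-{utils_version}/repos/centos7/HDP-UTILS-{utils_version}-centos7.tar.gz",
        "hdp-gpl": f"{REPO_HOST}/HDP-GPL/centos7/2.x/updates/{hdp}/HDP-GPL-{hdp}-centos7-rpm.tar.gz",
    }


if __name__ == "__main__":
    # Ambari and HDP versions are the ones listed at the top of the article.
    # "1.1.0.22" is an ASSUMED utils_version, used only for illustration.
    for name, url in tarball_urls("2.5.2.0-298", "2.6.4.0-91", "1.1.0.22").items():
        print(f"{name}: {url}")
```

Note that the HDP-UTILS URL uses `utils_version` unchanged, exactly as the task does, while the other three use only the part before the hyphen.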

Then we generate the repo files from the repo templates. This is the template for the Ambari repo; the placeholder ambari_version is substituted with the exact version number that we give in the vars file "all" in the group_vars directory:

[AMBARI-{{ ambari_version }}]

name=AMBARI Version - AMBARI-{{ ambari_version }}

baseurl=http://{{ groups['repo'][0] }}/ambari/centos7

gpgcheck=1

gpgkey=http://{{ groups['repo'][0] }}/ambari/centos7/RPM-GPG-KEY/RPM-GPG-KEY-Jenkins

enabled=1

priority=1

And this is the template for the HDP repo; the placeholder hdp_version is substituted with the exact version number that we give in the vars file "all" in the group_vars directory:

#VERSION_NUMBER={{ hdp_version }}

[HDP-{{ hdp_version }}]

name=HDP Version - HDP-{{ hdp_version }}

baseurl=http://{{ groups['repo'][0] }}/hdp/HDP/centos7/{{ hdp_version }}

gpgcheck=1

gpgkey=http://{{ groups['repo'][0] }}/hdp/HDP/centos7/{{ hdp_version }}/RPM-GPG-KEY/RPM-GPG-KEY-Jenkins

enabled=1

priority=1

[HDP-UTILS-{{ utils_version }}]

name=HDP-UTILS Version - HDP-UTILS-{{ utils_version }}

baseurl=http://{{ groups['repo'][0] }}/hdp

gpgcheck=1

gpgkey=http://{{ groups['repo'][0] }}/hdp/HDP/centos7/{{ hdp_version }}/RPM-GPG-KEY/RPM-GPG-KEY-Jenkins

enabled=1

priority=1

After the repo files are deployed across the cluster nodes, we install the Ambari server and agents via the roles "ambari-server" and "ambari-agent" respectively. Ambari Server relies on a database to store the metadata it uses to manage the cluster and track its health. Let's say we use MySQL for this; the "database" role then installs MySQL and configures the databases and users as follows:

- block:
    - name: Create the ambari database (mysql)
      mysql_db:
        name: "{{ database_options.ambari_db_name }}"
        state: present

    - name: Create the ambari database user and host based access (mysql)
      mysql_user:
        name: "{{ database_options.ambari_db_username }}"
        host: "{{ hostvars[inventory_hostname]['ansible_fqdn'] }}"
        priv: "{{ database_options.ambari_db_name }}.*:ALL"
        password: "{{ database_options.ambari_db_password }}"
        state: present

    - name: Create the ambari database user and IP based access (mysql)
      mysql_user:
        name: "{{ database_options.ambari_db_username }}"
        host: "{{ hostvars[inventory_hostname]['ansible_'~hostvars[inventory_hostname].ansible_default_ipv4.alias]['ipv4']['address'] }}"
        priv: "{{ database_options.ambari_db_name }}.*:ALL"
        password: "{{ database_options.ambari_db_password }}"
        state: present

    - name: Create the hive database (mysql)
      mysql_db:
        name: "{{ database_options.hive_db_name }}"
        state: present
      when: hiveserver_hosts is defined and hiveserver_hosts|length > 0

    - name: Create the hive database user and host based access (mysql)
      mysql_user:
        name: "{{ database_options.hive_db_username }}"
        host: "{{ hostvars[item]['ansible_fqdn'] }}"
        priv: "{{ database_options.hive_db_name }}.*:ALL"
        password: "{{ database_options.hive_db_password }}"
        state: present
      with_items: "{{ hiveserver_hosts }}"
      when: hiveserver_hosts is defined and hiveserver_hosts|length > 0

    - name: Create the hive database user and IP based access (mysql)
      mysql_user:
        name: "{{ database_options.hive_db_username }}"
        host: "{{ hostvars[item]['ansible_'~hostvars[item].ansible_default_ipv4.alias]['ipv4']['address'] }}"
        priv: "{{ database_options.hive_db_name }}.*:ALL"
        password: "{{ database_options.hive_db_password }}"
        state: present
      with_items: "{{ hiveserver_hosts }}"
      when: hiveserver_hosts is defined and hiveserver_hosts|length > 0

    - name: Create the oozie database (mysql)
      mysql_db:
        name: "{{ database_options.oozie_db_name }}"
        state: present
      when: oozie_hosts is defined and oozie_hosts|length > 0

    - name: Create the oozie database user and host based access (mysql)
      mysql_user:
        name: "{{ database_options.oozie_db_username }}"
        host: "{{ hostvars[item]['ansible_fqdn'] }}"
        priv: "{{ database_options.oozie_db_name }}.*:ALL"
        password: "{{ database_options.oozie_db_password }}"
        state: present
      with_items: "{{ oozie_hosts }}"
      when: oozie_hosts is defined and oozie_hosts|length > 0

    - name: Create the oozie database user and IP based access (mysql)
      mysql_user:
        name: "{{ database_options.oozie_db_username }}"
        host: "{{ hostvars[item]['ansible_'~hostvars[item].ansible_default_ipv4.alias]['ipv4']['address'] }}"
        priv: "{{ database_options.oozie_db_name }}.*:ALL"
        password: "{{ database_options.oozie_db_password }}"
        state: present
      with_items: "{{ oozie_hosts }}"
      when: oozie_hosts is defined and oozie_hosts|length > 0

    - name: Create the ranger admin database (mysql)
      mysql_db:
        name: "{{ database_options.rangeradmin_db_name }}"
        state: present
      when: rangeradmin_hosts is defined and rangeradmin_hosts|length > 0

    - name: Create the ranger admin database user and host based access (mysql)
      mysql_user:
        name: "{{ database_options.rangeradmin_db_username }}"
        host: "{{ hostvars[item]['ansible_fqdn'] }}"
        priv: "{{ database_options.rangeradmin_db_name }}.*:ALL"
        password: "{{ database_options.rangeradmin_db_password }}"
        state: present
      with_items: "{{ rangeradmin_hosts }}"
      when: rangeradmin_hosts is defined and rangeradmin_hosts|length > 0

    - name: Create the ranger admin database user and IP based access (mysql)
      mysql_user:
        name: "{{ database_options.rangeradmin_db_username }}"
        host: "{{ hostvars[item]['ansible_'~hostvars[item].ansible_default_ipv4.alias]['ipv4']['address'] }}"
        priv: "{{ database_options.rangeradmin_db_name }}.*:ALL"
        password: "{{ database_options.rangeradmin_db_password }}"
        state: present
      with_items: "{{ rangeradmin_hosts }}"
      when: rangeradmin_hosts is defined and rangeradmin_hosts|length > 0

    - name: Create the registry database (mysql)
      mysql_db:
        name: "{{ database_options.registry_db_name }}"
        state: present
      when: registry_hosts is defined and registry_hosts|length > 0

    - name: Create the registry database user and host based access (mysql)
      mysql_user:
        name: "{{ database_options.registry_db_username }}"
        host: "{{ hostvars[item]['ansible_fqdn'] }}"
        priv: "{{ database_options.registry_db_name }}.*:ALL"
        password: "{{ database_options.registry_db_password }}"
        state: present
      with_items: "{{ registry_hosts }}"
      when: registry_hosts is defined and registry_hosts|length > 0

    - name: Create the registry database user and IP based access (mysql)
      mysql_user:
        name: "{{ database_options.registry_db_username }}"
        host: "{{ hostvars[item]['ansible_'~hostvars[item].ansible_default_ipv4.alias]['ipv4']['address'] }}"
        priv: "{{ database_options.registry_db_name }}.*:ALL"
        password: "{{ database_options.registry_db_password }}"
        state: present
      with_items: "{{ registry_hosts }}"
      when: registry_hosts is defined and registry_hosts|length > 0

    - name: Create the streamline database (mysql)
      mysql_db:
        name: "{{ database_options.streamline_db_name }}"
        state: present
      when: streamline_hosts is defined and streamline_hosts|length > 0

    - name: Create the streamline database user and host based access (mysql)
      mysql_user:
        name: "{{ database_options.streamline_db_username }}"
        host: "{{ hostvars[item]['ansible_fqdn'] }}"
        priv: "{{ database_options.streamline_db_name }}.*:ALL"
        password: "{{ database_options.streamline_db_password }}"
        state: present
      with_items: "{{ streamline_hosts }}"
      when: streamline_hosts is defined and streamline_hosts|length > 0

    - name: Create the streamline database user and IP based access (mysql)
      mysql_user:
        name: "{{ database_options.streamline_db_username }}"
        host: "{{ hostvars[item]['ansible_'~hostvars[item].ansible_default_ipv4.alias]['ipv4']['address'] }}"
        priv: "{{ database_options.streamline_db_name }}.*:ALL"
        password: "{{ database_options.streamline_db_password }}"
        state: present
      with_items: "{{ streamline_hosts }}"
      when: streamline_hosts is defined and streamline_hosts|length > 0

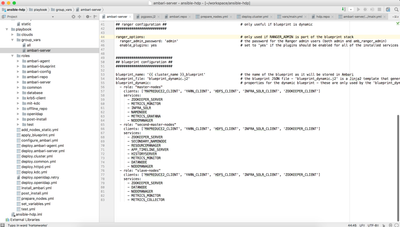

when: database == "mysql" or database == "mariadb"<br>After ambari configured, we then start hdp installation by uploading blueprint. For generating the blueprint we need beforehand to give which compoents should install on which cluster node, and that we already define in vars file as shown in the following picture.

The role "ambari-blueprint" then parses the definition in the variable "blueprint_dynamic" and applies a template to generate the corresponding blueprint file:

{

"configurations" : [

{

"hadoop-env" : {

"dtnode_heapsize" : "1024m",

"namenode_heapsize" : "2048m",

"namenode_opt_maxnewsize" : "384m",

"namenode_opt_newsize" : "384m"

}

},

{

"hdfs-site" : {

"dfs.datanode.data.dir" : "/hadoop/hdfs/data",

"dfs.datanode.failed.volumes.tolerated" : "0",

"dfs.replication" : "3"

}

},

{

"yarn-site" : {

"yarn.client.nodemanager-connect.retry-interval-ms" : "10000"

}

},

{

"hive-site" : {

"javax.jdo.option.ConnectionDriverName": "com.mysql.jdbc.Driver",

"javax.jdo.option.ConnectionURL": "jdbc:mysql://cheny0.field.hortonworks.com:3306/hive",

"javax.jdo.option.ConnectionUserName": "hive",

"javax.jdo.option.ConnectionPassword": "hive",

"hive.metastore.failure.retries" : "24"

}

},

{

"hiveserver2-site" : {

"hive.metastore.metrics.enabled" : "true"

}

},

{

"hive-env" : {

"hive_database": "Existing MySQL / MariaDB Database",

"hive_database_type": "mysql",

"hive_database_name": "hive",

"hive_user" : "hive"

}

},

{

"oozie-site" : {

"oozie.service.JPAService.jdbc.driver": "com.mysql.jdbc.Driver",

"oozie.service.JPAService.jdbc.url": "jdbc:mysql://cheny0.field.hortonworks.com:3306/oozie",

"oozie.db.schema.name": "oozie",

"oozie.service.JPAService.jdbc.username": "oozie",

"oozie.service.JPAService.jdbc.password": "oozie",

"oozie.action.retry.interval" : "30"

}

},

{

"oozie-env" : {

"oozie_database": "Existing MySQL / MariaDB Database",

"oozie_user" : "oozie"

}

},

{

"hbase-site" : {

"hbase.client.retries.number" : "35"

}

},

{

"core-site": {

"fs.trash.interval" : "360"

}

},

{

"storm-site": {

"storm.zookeeper.retry.intervalceiling.millis" : "30000"

}

},

{

"kafka-broker": {

"zookeeper.session.timeout.ms" : "30000"

}

},

{

"zoo.cfg": {

"clientPort" : "2181"

}

}

],

"host_groups" : [

{

"name" : "master-nodes",

"configurations" : [ ],

"components" : [

{ "name" : "MAPREDUCE2_CLIENT" },

{ "name" : "YARN_CLIENT" },

{ "name" : "HDFS_CLIENT" },

{ "name" : "INFRA_SOLR_CLIENT" },

{ "name" : "ZOOKEEPER_CLIENT" },

{ "name" : "ZOOKEEPER_SERVER" },

{ "name" : "METRICS_MONITOR" },

{ "name" : "INFRA_SOLR" },

{ "name" : "NAMENODE" },

{ "name" : "METRICS_GRAFANA" },

{ "name" : "NODEMANAGER" }

]

},

{

"name" : "second-master-nodes",

"configurations" : [ ],

"components" : [

{ "name" : "MAPREDUCE2_CLIENT" },

{ "name" : "YARN_CLIENT" },

{ "name" : "HDFS_CLIENT" },

{ "name" : "INFRA_SOLR_CLIENT" },

{ "name" : "ZOOKEEPER_CLIENT" },

{ "name" : "ZOOKEEPER_SERVER" },

{ "name" : "SECONDARY_NAMENODE" },

{ "name" : "RESOURCEMANAGER" },

{ "name" : "APP_TIMELINE_SERVER" },

{ "name" : "HISTORYSERVER" },

{ "name" : "METRICS_MONITOR" },

{ "name" : "DATANODE" },

{ "name" : "NODEMANAGER" }

]

},

{

"name" : "slave-nodes",

"configurations" : [ ],

"components" : [

{ "name" : "MAPREDUCE2_CLIENT" },

{ "name" : "YARN_CLIENT" },

{ "name" : "HDFS_CLIENT" },

{ "name" : "INFRA_SOLR_CLIENT" },

{ "name" : "ZOOKEEPER_CLIENT" },

{ "name" : "ZOOKEEPER_SERVER" },

{ "name" : "DATANODE" },

{ "name" : "NODEMANAGER" },

{ "name" : "METRICS_MONITOR" },

{ "name" : "METRICS_COLLECTOR" }

]

}

],

"Blueprints" : {

"stack_name" : "HDP",

"stack_version" : "2.6"

}

}

As is well known, a blueprint lets Ambari Server install HDP automatically, without manual operations. The underlying procedure implemented in the Ansible scripts is the same as the standard blueprint installation described at the following link:

https://community.hortonworks.com/content/kbentry/47171/automate-hdp-installation-using-ambari-bluep...
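For readers who have not used blueprints before, the standard procedure comes down to two calls to the Ambari REST API: register the blueprint, then create a cluster from it with a template that maps host groups to hosts. The Python sketch below only builds those two requests and does not send them. The server address, credentials, blueprint and cluster names, and host names are all placeholder assumptions, and the helper functions are illustrative rather than taken from the author's scripts.

```python
import base64
import json
import urllib.request


def ambari_post(base_url, path, payload, user, password):
    """Build (but do not send) a POST request to the Ambari REST API."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url=f"{base_url}{path}",
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Basic {token}",
            # Ambari rejects modifying requests that lack this header.
            "X-Requested-By": "ambari",
        },
    )


def cluster_template(blueprint_name, hosts_by_group):
    """Map each blueprint host group to the FQDNs that should join it."""
    return {
        "blueprint": blueprint_name,
        "host_groups": [
            {"name": group, "hosts": [{"fqdn": fqdn} for fqdn in fqdns]}
            for group, fqdns in hosts_by_group.items()
        ],
    }


if __name__ == "__main__":
    base = "http://ambari.example.com:8080"  # ASSUMED Ambari server address
    # Trimmed stand-in for the generated blueprint file shown earlier.
    blueprint = {
        "configurations": [],
        "host_groups": [{"name": "master-nodes", "components": [{"name": "NAMENODE"}]}],
        "Blueprints": {"stack_name": "HDP", "stack_version": "2.6"},
    }
    # Hypothetical names and hosts, for illustration only.
    template = cluster_template("demo_blueprint", {"master-nodes": ["master1.example.com"]})

    register = ambari_post(base, "/api/v1/blueprints/demo_blueprint", blueprint, "admin", "admin")
    create = ambari_post(base, "/api/v1/clusters/demo_cluster", template, "admin", "admin")
    for request in (register, create):
        print(request.get_method(), request.full_url)
    # Passing each request to urllib.request.urlopen(), in this order,
    # would actually submit it to the server.
```

Keeping request construction separate from sending makes it easy to inspect the payloads before anything touches a live Ambari server.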

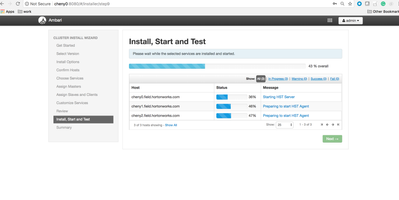

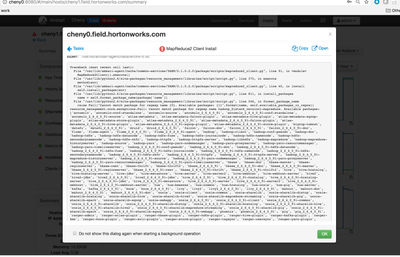

Now, when you open the Ambari server's home page in a web browser, you'll see the following installation progress bar:

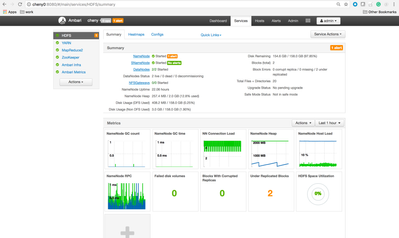

And finally, we get a fully installed HDP running on the cluster.

Now I need to explain why I don't use the latest version, 2.6.1.5-3, of Ambari server: when I tried it together with HDP 2.6.4.0-91, the installation repeatedly failed on the master node.

The error says that no package can be matched for hadoop_${stack_version}-mapreduce when Ambari tries to install the MapReduce2 client. I also tried installing HDP manually first, which succeeded, then exporting the blueprint and using that same blueprint to trigger the installation, but it got stuck at the same failure.

I've checked that this package is actually installed on the master node during the Ambari server installation, so it should not come up again when installing HDP. It might be a bug, but confirming that would take more effort digging into the specific Ambari Python scripts. A workaround is to downgrade Ambari server, which is why we use Ambari server 2.5.* here.

One more important thing that I almost forgot: my full set of Ansible scripts. Leave your email address below and I can share it; sorry that, for the time being, I cannot share it in the cloud.

Created on 07-11-2018 07:07 PM

Hi Chen,

Can you please share the full deck of Ansible scripts?

My mail id - gvfriend2003@gmail.com

Greatly appreciate your effort in creating the Ansible scripts for cluster automation.

Thanks,

Venkat