Community Articles (Cloudera Community)

Running Hive LLAP on specific Nodes using YARN Nod...

Created on 02-06-2018 04:59 PM - edited 08-17-2019 09:10 AM

In this tutorial, we walk through the steps to enable YARN node labels so that we can run LLAP on a specific set of nodes in the cluster (stack version: HDP 2.6.3).

First, we check whether any node labels already exist on the cluster:

[$] yarn cluster --list-node-labels

18/02/05 10:32:29 INFO client.RMProxy: Connecting to ResourceManager at stocks.hdp/192.168.1.200:8050

18/02/05 10:32:31 INFO client.AHSProxy: Connecting to Application History server at stocks.hdp/192.168.1.200:10200

Node Labels:

The empty Node Labels: list shows that no node labels are configured on the cluster yet.
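If you script this check, the label names can be pulled out of the command's output. The sketch below is a hypothetical helper (node_label_names is not part of YARN) and assumes the labels are printed on a single Node Labels: line as <name:exclusivity=...> entries, which is the form the output in this article shows. It prints nothing when the list is empty.

```shell
# Hypothetical helper: print one label name per line from the output of
# `yarn cluster --list-node-labels`, read on stdin.
# Assumption: all labels appear on one "Node Labels:" line as
# comma-separated <name:exclusivity=...> entries.
node_label_names() {
  # Keep only the text after "Node Labels:" (INFO log lines are ignored),
  # put each comma-separated entry on its own line, strip the angle
  # brackets and the ":exclusivity=..." suffix, and drop empty lines.
  sed -n 's/^Node Labels:[[:space:]]*//p' |
    tr ',' '\n' |
    sed -e 's/^<//' -e 's/>$//' -e 's/:.*$//' -e '/^$/d'
}
```

For example, `yarn cluster --list-node-labels 2>/dev/null | node_label_names` prints nothing on this cluster at this point; the parser skips the INFO log lines whether or not they are redirected.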

Next, we try to add a new node label. We will assign this label to the LLAP queue so that LLAP runs only on the labeled node(s).

We keep the node label exclusive, so that only applications in the LLAP queue can use these nodes.

[$] yarn rmadmin -addToClusterNodeLabels "interactive(exclusive=true)"

18/02/05 10:33:25 INFO client.RMProxy: Connecting to ResourceManager at stocks.hdp/192.168.1.200:8141

addToClusterNodeLabels: java.io.IOException: Node-label-based scheduling is disabled. Please check yarn.node-labels.enabled

The command fails because node-label-based scheduling is disabled. Whether you see this error depends on your cluster configuration; if you do, set the yarn.node-labels.enabled property to true in yarn-site.xml.

(Note: if you don't specify "(exclusive=…)", the label is exclusive by default.)

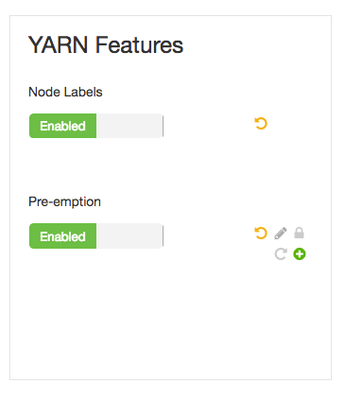

In Ambari, you can enable node labels in the YARN configs; while we are at it, we will also enable preemption. With preemption enabled, higher-priority applications do not have to wait just because lower-priority applications have taken up the available capacity: under-served queues can begin to claim their allocated cluster resources almost immediately, without waiting for other queues' applications to finish running.
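To confirm what a node's configuration file actually says after the change, a small check can help. This is a minimal sketch under assumptions: site_prop and yarn_label_settings are hypothetical helpers, the parsing is line-based awk rather than a real XML parser, and /etc/hadoop/conf/yarn-site.xml is assumed to be the client config path. yarn.node-labels.enabled is the property named in the error above, and yarn.resourcemanager.scheduler.monitor.enable is the YARN property that turns on the scheduler monitor used for Capacity Scheduler preemption.

```shell
# Hypothetical helper: print the <value> of one property from a Hadoop
# *-site.xml file. Line-based sketch, not an XML parser: it assumes <name>
# and <value> each appear on a line, as in Ambari-generated files.
site_prop() {
  # $1 = path to the XML file, $2 = property name
  awk -v want="$2" '
    index($0, "<name>" want "</name>") { found = 1 }
    found && /<value>/ {
      v = $0
      sub(/.*<value>/, "", v)      # drop everything up to <value>
      sub(/<\/value>.*/, "", v)    # drop </value> and the rest
      print v
      exit
    }
  ' "$1"
}

# Hypothetical helper: report the node-label and preemption settings.
# The default path is an assumption (the usual HDP client config location).
yarn_label_settings() {
  conf=${1:-/etc/hadoop/conf/yarn-site.xml}
  for p in yarn.node-labels.enabled yarn.resourcemanager.scheduler.monitor.enable; do
    printf '%s=%s\n' "$p" "$(site_prop "$conf" "$p")"
  done
}
```

Both lines should read `=true` once the change is saved; the ResourceManager only picks the new values up after it is restarted.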

Once the configuration change is saved and YARN is restarted, we can create the node labels:

[yarn@stocks ~]$ yarn rmadmin -addToClusterNodeLabels "interactive(exclusive=true)"

18/02/05 17:00:42 INFO client.RMProxy: Connecting to ResourceManager at stocks.hdp/192.168.1.200:8141

[yarn@stocks ~]$ yarn rmadmin -addToClusterNodeLabels "high-mem(exclusive=true)"

18/02/05 17:00:54 INFO client.RMProxy: Connecting to ResourceManager at stocks.hdp/192.168.1.200:8141

[yarn@stocks ~]$ yarn rmadmin -addToClusterNodeLabels "balanced(exclusive=true)"

18/02/05 17:02:37 INFO client.RMProxy: Connecting to ResourceManager at stocks.hdp/192.168.1.200:8141
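The three calls above can also be made as one, because yarn rmadmin -addToClusterNodeLabels accepts a comma-separated list of labels. A minimal sketch, assuming a POSIX shell; add_exclusive_labels is a hypothetical helper, and setting YARN=echo prints the command instead of running it.

```shell
# Hypothetical helper: create several exclusive node labels with a single
# rmadmin call (rmadmin accepts a comma-separated label list).
# Set YARN=echo to print the command instead of running it.
add_exclusive_labels() {
  [ "$#" -gt 0 ] || { echo "usage: add_exclusive_labels LABEL..." >&2; return 2; }
  spec=""
  for label in "$@"; do
    # Append "label(exclusive=true)", comma-separated after the first entry.
    spec="${spec:+$spec,}${label}(exclusive=true)"
  done
  ${YARN:-yarn} rmadmin -addToClusterNodeLabels "$spec"
}
```

Running `add_exclusive_labels interactive high-mem balanced` issues a single command equivalent to the three shown above.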

We list the node labels again:

[yarn@stocks ~]$ yarn cluster --list-node-labels

18/02/06 09:41:34 INFO client.RMProxy: Connecting to ResourceManager at stocks.hdp/192.168.1.200:8050

18/02/06 09:41:35 INFO client.AHSProxy: Connecting to Application History server at stocks.hdp/192.168.1.200:10200

Node Labels: <high-mem:exclusivity=true>,<interactive:exclusivity=true>,<balanced:exclusivity=true>

We now have a high-mem node label, which we will use for Spark jobs; the interactive label will be used for LLAP, and balanced for all other jobs.

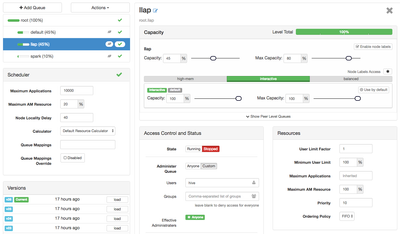

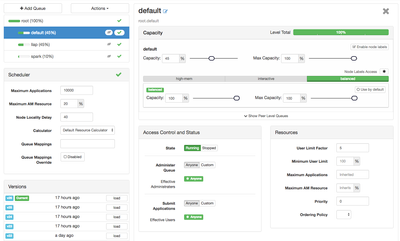

Back in Ambari, the YARN Queue Manager now shows the node labels, and they can be assigned to YARN queues.

We assign the interactive label to the LLAP queue, high-mem to the Spark queue, and balanced to the default queue, giving each queue 100% of its label's capacity (the labels are exclusive; adjust these percentages to your workload, or if several queues share a label).

- Node Label Assignment for LLAP Queue

- Node Label Assignment for Spark Queue

- Node Label Assignment for Default Queue
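The same assignments can be expressed as Capacity Scheduler properties, for example to review what the Queue Manager saves. This is a sketch under assumptions: queue_label_props is hypothetical, the queue paths root.llap, root.spark and root.default are assumed to match the queues in this article, and each queue gets 100% of its label unless a third argument says otherwise. It also sets default-node-label-expression, so that jobs submitted to the queue without an explicit label are placed on the queue's labeled nodes; without it, such jobs can only use unlabeled nodes.

```shell
# Hypothetical helper: print Capacity Scheduler key=value lines that give a
# queue access to one node label, a share of that label's capacity
# (default 100%), and make the label the queue's default.
# Queue names (llap, spark, default) are assumptions; use your own queues.
queue_label_props() {
  [ "$#" -ge 2 ] || { echo "usage: queue_label_props QUEUE LABEL [CAPACITY]" >&2; return 2; }
  queue=$1 label=$2 cap=${3:-100}
  p=yarn.scheduler.capacity.root
  # root's share of the label; the children's shares of a label sum to 100.
  printf '%s.accessible-node-labels.%s.capacity=100\n' "$p" "$label"
  printf '%s.%s.accessible-node-labels=%s\n' "$p" "$queue" "$label"
  printf '%s.%s.accessible-node-labels.%s.capacity=%s\n' "$p" "$queue" "$label" "$cap"
  # Without this, jobs that do not request a label run only on unlabeled
  # nodes, and in this setup every node carries a label.
  printf '%s.%s.default-node-label-expression=%s\n' "$p" "$queue" "$label"
}
```

For this article's layout that would be `queue_label_props llap interactive`, `queue_label_props spark high-mem` and `queue_label_props default balanced`.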

To remove node labels, use the following command:

[yarn@stocks ~]$ yarn rmadmin -removeFromClusterNodeLabels "<Node Label Name1>,<Node Label Name2>"

Make sure the node labels are not assigned to any queue; otherwise you will get an exception like "cannot remove nodes label=<label>, because queue=<queue> is using this label. Please remove label on queue before remove the label".
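Because removal fails while a queue still references the label, it can help to find those queues first. A minimal sketch, assuming the Capacity Scheduler settings are available as key=value lines (the form Ambari's Capacity Scheduler text box uses) saved to a file; queues_using_label is a hypothetical helper and only checks the accessible-node-labels and default-node-label-expression settings.

```shell
# Hypothetical helper: list queues whose Capacity Scheduler settings still
# reference a label, so they can be cleaned up before
# `yarn rmadmin -removeFromClusterNodeLabels`. Reads key=value lines;
# it does not parse capacity-scheduler.xml.
queues_using_label() {
  # $1 = file with key=value lines, $2 = label name
  awk -F= -v label="$2" '
    $1 ~ /\.(accessible-node-labels|default-node-label-expression)$/ {
      v = $2
      gsub(/[ \t]/, "", v)                       # tolerate "a, b" spacing
      n = split(v, labels, ",")
      for (i = 1; i <= n; i++) {
        if (labels[i] == label) {
          q = $1
          sub(/^yarn\.scheduler\.capacity\./, "", q)   # root.llap.<setting>
          sub(/\.[^.]*$/, "", q)                      # root.llap
          print q
        }
      }
    }
  ' "$1" | sort -u
}
```

For example, `queues_using_label capacity-scheduler.properties high-mem` (the file name is hypothetical) should print nothing before you run the removal command.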

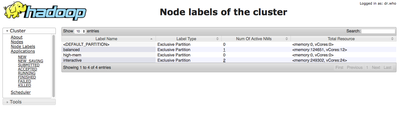

The YARN ResourceManager UI now shows the node labels as well.

Note that none of the node labels has any active NodeManagers assigned yet, so each shows no resources (<memory:0, vCores:0>). Let's assign some nodes to the labels and revisit the YARN UI.

Since this environment has only 3 nodes, we assign two of them (dn1.stocks.hdp and stocks.hdp) to interactive for LLAP and one (dn2.stocks.hdp) to balanced; high-mem gets no node here. Decide how many nodes to assign to each label based on the memory and vCores each type of workload needs.

[yarn@stocks ~]$ yarn rmadmin -replaceLabelsOnNode "dn1.stocks.hdp=interactive"

18/02/06 10:10:26 INFO client.RMProxy: Connecting to ResourceManager at stocks.hdp/192.168.1.200:8141

[yarn@stocks ~]$ yarn rmadmin -replaceLabelsOnNode "dn2.stocks.hdp=balanced"

18/02/06 10:10:44 INFO client.RMProxy: Connecting to ResourceManager at stocks.hdp/192.168.1.200:8141

[yarn@stocks ~]$ yarn rmadmin -replaceLabelsOnNode "stocks.hdp=interactive"

18/02/06 10:10:55 INFO client.RMProxy: Connecting to ResourceManager at stocks.hdp/192.168.1.200:8141
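The node assignments can be batched too: -replaceLabelsOnNode takes space-separated host=label mappings and replaces whatever label each node already had. A minimal sketch, assuming a POSIX shell; label_nodes is a hypothetical helper, and YARN=echo prints the command instead of running it.

```shell
# Hypothetical helper: put one label on several nodes with a single
# rmadmin call, e.g. "dn1.stocks.hdp=interactive stocks.hdp=interactive".
# Set YARN=echo to print the command instead of running it.
label_nodes() {
  [ "$#" -ge 2 ] || { echo "usage: label_nodes LABEL HOST..." >&2; return 2; }
  label=$1
  shift
  spec=""
  for host in "$@"; do
    # Mappings are separated by spaces inside the single quoted argument.
    spec="${spec:+$spec }${host}=${label}"
  done
  ${YARN:-yarn} rmadmin -replaceLabelsOnNode "$spec"
}
```

With this helper, the three commands above become `label_nodes interactive dn1.stocks.hdp stocks.hdp` followed by `label_nodes balanced dn2.stocks.hdp`.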

When we revisit the YARN UI, we can see the resources assigned to each node label.

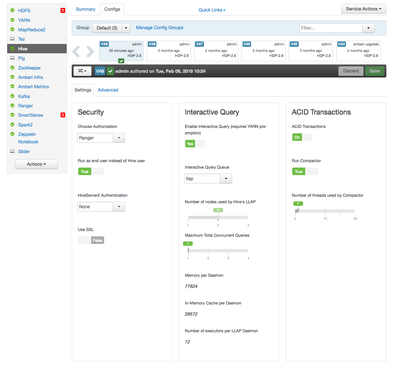

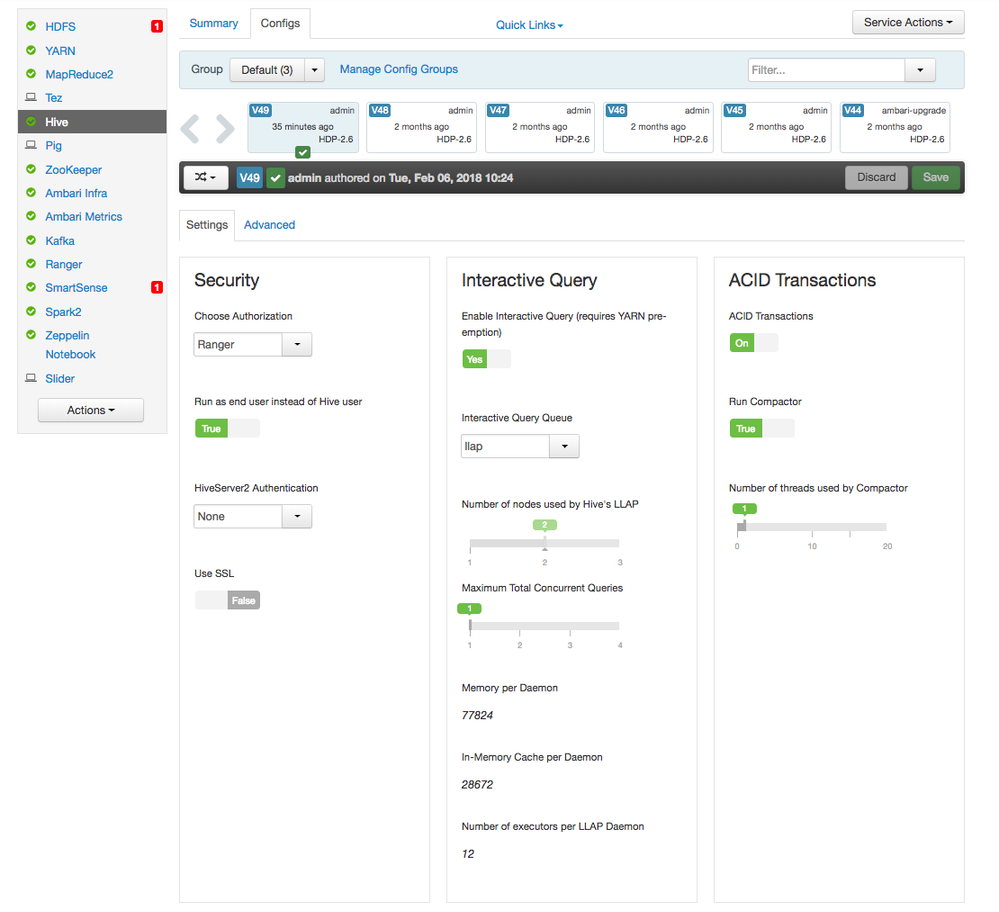

We now start LLAP on the cluster with the following settings: the llap queue (which has access to the two nodes labeled interactive), memory per daemon ~78 GB, in-memory cache per daemon ~29 GB, and 12 executors per daemon.

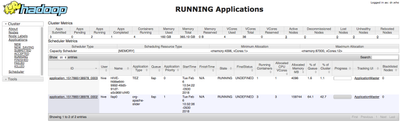

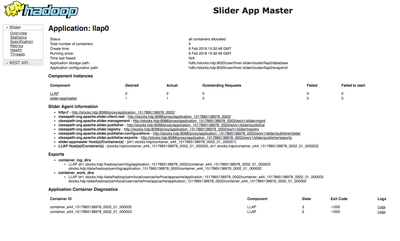

The Running Applications view in YARN now shows 1 Tez AM container, 1 Slider App Master, and 2 LLAP daemons running.

Created on 04-03-2019 07:56 PM

According to the documentation at this link:

https://docs.hortonworks.com/HDPDocuments/HDP3/HDP-3.1.0/data-operating-system/content/partition_a_c...

the information in this article is wrong: if you assign all the nodes to 3 different exclusive node label partitions AND you do not set the default node label on the default queue, no resources will be available to run jobs submitted to the default queue without an explicit label.

Created on 07-27-2020 11:04 PM - edited 07-27-2020 11:07 PM

@mqadri

Thank you for sharing very useful information.

I tried configuring Hive LLAP daemons with YARN node labels in HDP 3 by following your post, but the LLAP daemons do not run on the labeled nodes. Instead, they run on other nodes that are not part of the node label (there were no errors in the RM or the HiveInteractive server).

Does HDP 3 need a different node label setup for LLAP daemons? Could you please guide me?

Thanks in advance!