
Set up cross-realm trust between two MIT KDCs


Created on 08-11-2016 07:16 AM

This post shows how to set up cross-realm trust between two MIT KDCs. Once the trust is set up correctly, users in one cluster can access data in the other cluster and copy data between the two.

In our example, we have two clusters running the same HDP version (2.4.2.0) and Ambari version (2.2.2.0).

Cluster 1:

172.26.68.47 hwx-1.hwx.com hwx-1

172.26.68.46 hwx-2.hwx.com hwx-2

172.26.68.45 hwx-3.hwx.com hwx-3

Cluster 2:

172.26.68.48 support-1.support.com support-1

172.26.68.49 support-2.support.com support-2

172.26.68.50 support-3.support.com support-3

Below are the steps:

Step 1: Make sure both clusters are kerberized with an MIT KDC. An automated script can be used to configure Kerberos on HDP.

Step 2: Configure the /etc/hosts file on every node of both clusters so that it contains the IP <-> hostname mappings for the hosts of both clusters.

Example:

On both clusters, the /etc/hosts file should look like this:

172.26.68.47 hwx-1.hwx.com hwx-1

172.26.68.46 hwx-2.hwx.com hwx-2

172.26.68.45 hwx-3.hwx.com hwx-3

172.26.68.48 support-1.support.com support-1

172.26.68.49 support-2.support.com support-2

172.26.68.50 support-3.support.com support-3
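One way to confirm that a node's hosts file covers both clusters is a small check such as the one below. This is a minimal Python sketch and not part of the original procedure; the function names `parse_hosts` and `missing_hosts` and the inline `sample` text are illustrative assumptions.

```python
def parse_hosts(text):
    """Map every hostname and alias in hosts-file text to its IP address."""
    mapping = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        ip, *names = line.split()
        for name in names:
            mapping[name] = ip
    return mapping


def missing_hosts(text, expected):
    """Return the expected hostnames that are absent or mapped to another IP."""
    found = parse_hosts(text)
    return sorted(h for h, ip in expected.items() if found.get(h) != ip)


# Hosts of both clusters, taken from the example above.
EXPECTED = {
    "hwx-1.hwx.com": "172.26.68.47",
    "hwx-2.hwx.com": "172.26.68.46",
    "hwx-3.hwx.com": "172.26.68.45",
    "support-1.support.com": "172.26.68.48",
    "support-2.support.com": "172.26.68.49",
    "support-3.support.com": "172.26.68.50",
}

# Hypothetical hosts file in which one Cluster 2 entry was forgotten.
sample = """
172.26.68.47 hwx-1.hwx.com hwx-1
172.26.68.46 hwx-2.hwx.com hwx-2
172.26.68.45 hwx-3.hwx.com hwx-3
172.26.68.48 support-1.support.com support-1
172.26.68.49 support-2.support.com support-2
"""

print(missing_hosts(sample, EXPECTED))  # ['support-3.support.com']
```

On a real node, the text passed in would be the contents of /etc/hosts rather than the inline sample.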


Step 3: Configure krb5.conf:

3.1 Configure the [realms] section to add the other cluster's KDC server details. This is required so that the KDC can be found when authenticating a user who belongs to the other cluster.

Example on Cluster1:

[realms]

HWX.COM = {

admin_server = hwx-1.hwx.com

kdc = hwx-1.hwx.com

}

SUPPORT.COM = {

admin_server = support-1.support.com

kdc = support-1.support.com

}

3.2 Configure the [domain_realm] section to add the other cluster's domain <-> realm mapping.

[domain_realm]

.hwx.com = HWX.COM

hwx.com = HWX.COM

.support.com = SUPPORT.COM

support.com = SUPPORT.COM

3.3 Configure the [capaths] section to add the other cluster's realm.

[capaths]

HWX.COM = {

SUPPORT.COM = .

}

On Cluster 1, the krb5.conf should look like this:

[libdefaults]

renew_lifetime = 7d

forwardable = true

default_realm = HWX.COM

ticket_lifetime = 24h

dns_lookup_realm = false

dns_lookup_kdc = false

#default_tgs_enctypes = aes des3-cbc-sha1 rc4 des-cbc-md5

#default_tkt_enctypes = aes des3-cbc-sha1 rc4 des-cbc-md5

[logging]

default = FILE:/var/log/krb5kdc.log

admin_server = FILE:/var/log/kadmind.log

kdc = FILE:/var/log/krb5kdc.log

[realms]

HWX.COM = {

admin_server = hwx-1.hwx.com

kdc = hwx-1.hwx.com

}

SUPPORT.COM = {

admin_server = support-1.support.com

kdc = support-1.support.com

}

[domain_realm]

.hwx.com = HWX.COM

hwx.com = HWX.COM

.support.com = SUPPORT.COM

support.com = SUPPORT.COM

[capaths]

HWX.COM = {

SUPPORT.COM = .

}

Note – Copy the modified /etc/krb5.conf to all the nodes in Cluster 1.

Similarly, on Cluster 2, the krb5.conf should look like this:

[libdefaults]

renew_lifetime = 7d

forwardable = true

default_realm = SUPPORT.COM

ticket_lifetime = 24h

dns_lookup_realm = false

dns_lookup_kdc = false

#default_tgs_enctypes = aes des3-cbc-sha1 rc4 des-cbc-md5

#default_tkt_enctypes = aes des3-cbc-sha1 rc4 des-cbc-md5

[logging]

default = FILE:/var/log/krb5kdc.log

admin_server = FILE:/var/log/kadmind.log

kdc = FILE:/var/log/krb5kdc.log

[realms]

SUPPORT.COM = {

admin_server = support-1.support.com

kdc = support-1.support.com

}

HWX.COM = {

admin_server = hwx-1.hwx.com

kdc = hwx-1.hwx.com

}

[domain_realm]

.hwx.com = HWX.COM

hwx.com = HWX.COM

.support.com = SUPPORT.COM

support.com = SUPPORT.COM

[capaths]

SUPPORT.COM = {

HWX.COM = .

}

Note – Copy the modified /etc/krb5.conf to all the nodes in Cluster 2.
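The three cross-realm sections differ between the two clusters only in which realm is local. The following Python sketch renders them for a direct trust between two realms. It is an illustration rather than part of the original procedure: the function name `cross_realm_sections` is hypothetical, and it assumes that each DNS domain is simply the lower-cased realm name, as in this example.

```python
def cross_realm_sections(local, remote):
    """Render [realms], [domain_realm] and [capaths] for a direct trust.

    `local` and `remote` are (realm, kdc_host) tuples. Assumption: the DNS
    domain of each realm is the lower-cased realm name.
    """
    lines = ["[realms]"]
    for realm, kdc in (local, remote):
        lines += [
            f"  {realm} = {{",
            f"    admin_server = {kdc}",
            f"    kdc = {kdc}",
            "  }",
        ]
    lines.append("[domain_realm]")
    for realm, _ in (local, remote):
        domain = realm.lower()
        lines += [f"  .{domain} = {realm}", f"  {domain} = {realm}"]
    # "." means the remote realm is reached directly, with no intermediate realm.
    lines += ["[capaths]", f"  {local[0]} = {{", f"    {remote[0]} = .", "  }"]
    return "\n".join(lines)


# Sections for Cluster 2, whose local realm is SUPPORT.COM.
print(cross_realm_sections(("SUPPORT.COM", "support-1.support.com"),
                           ("HWX.COM", "hwx-1.hwx.com")))
```

Swapping the two arguments gives the sections for Cluster 1. The [libdefaults] and [logging] sections are left out because the trust setup does not change them.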

Step 4: Set the property below in hdfs-site.xml on the cluster from which you want to run the distcp command (specifically, on the client side):

dfs.namenode.kerberos.principal.pattern=*
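For a client whose hdfs-site.xml is maintained by hand, the property can be added programmatically. The Python sketch below is an illustration only; the function name `set_property` is hypothetical. On an Ambari-managed cluster the property would normally be set through Ambari's HDFS configuration rather than by editing the file directly.

```python
import xml.etree.ElementTree as ET


def set_property(xml_text, name, value):
    """Return Hadoop-style configuration XML with property `name` set to `value`."""
    root = ET.fromstring(xml_text)
    for prop in root.findall("property"):
        if prop.findtext("name") == name:
            break  # reuse the existing <property> element
    else:
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
    value_el = prop.find("value")
    if value_el is None:
        value_el = ET.SubElement(prop, "value")
    value_el.text = value
    return ET.tostring(root, encoding="unicode")


# Start from an empty configuration document for the demonstration.
updated = set_property("<configuration></configuration>",
                       "dfs.namenode.kerberos.principal.pattern", "*")
print(updated)
```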


Step 5: Add the common trust principals to both KDCs. Each principal must have the same password in both KDCs.

On Cluster 1 and Cluster 2, execute the commands below in the kadmin utility:

addprinc krbtgt/HWX.COM@SUPPORT.COM

addprinc krbtgt/SUPPORT.COM@HWX.COM
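The two principal names follow a fixed pattern, so they can be derived from the realm names. The Python sketch below only builds the kadmin command text and does not run kadmin; the function name `trust_principals` is a hypothetical helper.

```python
def trust_principals(realm_a, realm_b):
    """Return the krbtgt principals for a two-way trust between two realms.

    krbtgt/B@A lets principals in realm A obtain tickets for services in
    realm B, so a two-way trust needs one principal per direction.
    """
    return [f"krbtgt/{realm_a}@{realm_b}", f"krbtgt/{realm_b}@{realm_a}"]


# Print the commands to type into kadmin on both KDCs.
for principal in trust_principals("HWX.COM", "SUPPORT.COM"):
    print(f"addprinc {principal}")
```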


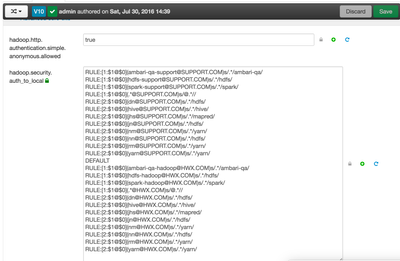

Step 6: Configure auth_to_local rules on both clusters:

On Cluster 1, append the auth_to_local rules from Cluster 2.

Example on Cluster 1:

RULE:[1:$1@$0](ambari-qa-hadoop@HWX.COM)s/.*/ambari-qa/

RULE:[1:$1@$0](hdfs-hadoop@HWX.COM)s/.*/hdfs/

RULE:[1:$1@$0](spark-hadoop@HWX.COM)s/.*/spark/

RULE:[1:$1@$0](.*@HWX.COM)s/@.*//

RULE:[2:$1@$0](dn@HWX.COM)s/.*/hdfs/

RULE:[2:$1@$0](hive@HWX.COM)s/.*/hive/

RULE:[2:$1@$0](jhs@HWX.COM)s/.*/mapred/

RULE:[2:$1@$0](jn@HWX.COM)s/.*/hdfs/

RULE:[2:$1@$0](nm@HWX.COM)s/.*/yarn/

RULE:[2:$1@$0](nn@HWX.COM)s/.*/hdfs/

RULE:[2:$1@$0](rm@HWX.COM)s/.*/yarn/

RULE:[2:$1@$0](yarn@HWX.COM)s/.*/yarn/

DEFAULT

RULE:[1:$1@$0](ambari-qa-support@SUPPORT.COM)s/.*/ambari-qa/

RULE:[1:$1@$0](hdfs-support@SUPPORT.COM)s/.*/hdfs/

RULE:[1:$1@$0](spark-support@SUPPORT.COM)s/.*/spark/

RULE:[1:$1@$0](.*@SUPPORT.COM)s/@.*//

RULE:[2:$1@$0](dn@SUPPORT.COM)s/.*/hdfs/

RULE:[2:$1@$0](hive@SUPPORT.COM)s/.*/hive/

RULE:[2:$1@$0](jhs@SUPPORT.COM)s/.*/mapred/

RULE:[2:$1@$0](jn@SUPPORT.COM)s/.*/hdfs/

RULE:[2:$1@$0](nm@SUPPORT.COM)s/.*/yarn/

RULE:[2:$1@$0](nn@SUPPORT.COM)s/.*/hdfs/

RULE:[2:$1@$0](rm@SUPPORT.COM)s/.*/yarn/

RULE:[2:$1@$0](yarn@SUPPORT.COM)s/.*/yarn/

On Cluster 2, append the auth_to_local rules from Cluster 1.

Example on Cluster 2:

RULE:[1:$1@$0](ambari-qa-support@SUPPORT.COM)s/.*/ambari-qa/

RULE:[1:$1@$0](hdfs-support@SUPPORT.COM)s/.*/hdfs/

RULE:[1:$1@$0](spark-support@SUPPORT.COM)s/.*/spark/

RULE:[1:$1@$0](.*@SUPPORT.COM)s/@.*//

RULE:[2:$1@$0](dn@SUPPORT.COM)s/.*/hdfs/

RULE:[2:$1@$0](hive@SUPPORT.COM)s/.*/hive/

RULE:[2:$1@$0](jhs@SUPPORT.COM)s/.*/mapred/

RULE:[2:$1@$0](jn@SUPPORT.COM)s/.*/hdfs/

RULE:[2:$1@$0](nm@SUPPORT.COM)s/.*/yarn/

RULE:[2:$1@$0](nn@SUPPORT.COM)s/.*/hdfs/

RULE:[2:$1@$0](rm@SUPPORT.COM)s/.*/yarn/

RULE:[2:$1@$0](yarn@SUPPORT.COM)s/.*/yarn/

DEFAULT

RULE:[1:$1@$0](ambari-qa-hadoop@HWX.COM)s/.*/ambari-qa/

RULE:[1:$1@$0](hdfs-hadoop@HWX.COM)s/.*/hdfs/

RULE:[1:$1@$0](spark-hadoop@HWX.COM)s/.*/spark/

RULE:[1:$1@$0](.*@HWX.COM)s/@.*//

RULE:[2:$1@$0](dn@HWX.COM)s/.*/hdfs/

RULE:[2:$1@$0](hive@HWX.COM)s/.*/hive/

RULE:[2:$1@$0](jhs@HWX.COM)s/.*/mapred/

RULE:[2:$1@$0](jn@HWX.COM)s/.*/hdfs/

RULE:[2:$1@$0](nm@HWX.COM)s/.*/yarn/

RULE:[2:$1@$0](nn@HWX.COM)s/.*/hdfs/

RULE:[2:$1@$0](rm@HWX.COM)s/.*/yarn/

RULE:[2:$1@$0](yarn@HWX.COM)s/.*/yarn/
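To see what these rules do to a principal from the other realm, the Python sketch below is a simplified model of how an auth_to_local rule list is evaluated. It is not Hadoop's implementation: the function name `short_name` is hypothetical, only the rule forms used in this article are parsed, and DEFAULT is modelled as matching only principals in the local default realm.

```python
import re

# RULE:[<component count>:<format>](<match regex>)s/<from>/<to>/[g]
RULE_RE = re.compile(r"RULE:\[(\d+):([^\]]*)\]\(([^)]*)\)s/([^/]*)/([^/]*)/(g?)$")


def short_name(principal, rules, default_realm):
    """Return the local short name for a principal, or None if no rule matches."""
    name, _, realm = principal.partition("@")
    components = name.split("/")
    for rule in rules:
        if rule == "DEFAULT":
            # Simplified: DEFAULT only applies to the local default realm.
            if realm == default_realm:
                return components[0]
            continue
        parsed = RULE_RE.match(rule)
        if parsed is None:
            raise ValueError(f"unsupported rule syntax: {rule}")
        count, fmt, match, sed_from, sed_to, global_flag = parsed.groups()
        if int(count) != len(components):
            continue  # the rule targets principals with a different shape
        values = [realm] + components  # $0 is the realm, $1.. the components
        base = re.sub(r"\$(\d)", lambda m: values[int(m.group(1))], fmt)
        if re.fullmatch(match, base) is None:
            continue
        # Without the "g" flag only the first occurrence is replaced.
        return re.sub(sed_from, sed_to, base, count=0 if global_flag else 1)
    return None


# A shortened version of the Cluster 1 rule list (default realm HWX.COM).
CLUSTER1_RULES = [
    "RULE:[1:$1@$0](hdfs-hadoop@HWX.COM)s/.*/hdfs/",
    "RULE:[1:$1@$0](.*@HWX.COM)s/@.*//",
    "RULE:[2:$1@$0](nn@HWX.COM)s/.*/hdfs/",
    "DEFAULT",
    "RULE:[1:$1@$0](hdfs-support@SUPPORT.COM)s/.*/hdfs/",
    "RULE:[1:$1@$0](.*@SUPPORT.COM)s/@.*//",
    "RULE:[2:$1@$0](nn@SUPPORT.COM)s/.*/hdfs/",
]

for p in ("kuldeepk@SUPPORT.COM",
          "hdfs-support@SUPPORT.COM",
          "nn/support-1.support.com@SUPPORT.COM"):
    print(p, "->", short_name(p, CLUSTER1_RULES, "HWX.COM"))
```

In this model a SUPPORT.COM principal falls through DEFAULT and is mapped by the appended rules, so `kuldeepk@SUPPORT.COM` becomes `kuldeepk` on Cluster 1.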

Step 7: Log in to a node in Cluster 2, run kinit as a local user, and try to access the HDFS files of Cluster 1.

Example:

hdfs dfs -ls hdfs://hwx-2.hwx.com:8020/tmp

Found 8 items

drwx------ - ambari-qa hdfs 0 2016-07-29 23:24 hdfs://hwx-2.hwx.com:8020/tmp/ambari-qa

drwxr-xr-x - hdfs hdfs 0 2016-07-29 22:02 hdfs://hwx-2.hwx.com:8020/tmp/entity-file-history

drwx-wx-wx - ambari-qa hdfs 0 2016-07-29 23:25 hdfs://hwx-2.hwx.com:8020/tmp/hive

-rwxr-xr-x 3 hdfs hdfs 1414 2016-07-29 23:50 hdfs://hwx-2.hwx.com:8020/tmp/id1aac2d44_date502916

-rwxr-xr-x 3 ambari-qa hdfs 1414 2016-07-29 23:26 hdfs://hwx-2.hwx.com:8020/tmp/idtest.ambari-qa.1469834803.19.in

-rwxr-xr-x 3 ambari-qa hdfs 957 2016-07-29 23:26 hdfs://hwx-2.hwx.com:8020/tmp/idtest.ambari-qa.1469834803.19.pig

drwxr-xr-x - ambari-qa hdfs 0 2016-07-29 23:53 hdfs://hwx-2.hwx.com:8020/tmp/tezsmokeinput

Note – hwx-2.hwx.com is the Active Namenode of Cluster 1.

You can also try copying a file from Cluster 1 to Cluster 2 using distcp, run from a node in Cluster 2.

Example:

[kuldeepk@support-1 root]$ hadoop distcp hdfs://hwx-1.hwx.com:8020/tmp/test.txt /tmp/

16/07/30 22:03:27 INFO tools.DistCp: Input Options: DistCpOptions{atomicCommit=false, syncFolder=false, deleteMissing=false, ignoreFailures=false, maxMaps=20, sslConfigurationFile='null', copyStrategy='uniformsize', sourceFileListing=null, sourcePaths=[hdfs://hwx-1.hwx.com:8020/tmp/test.txt], targetPath=/tmp, targetPathExists=true, preserveRawXattrs=false}

16/07/30 22:03:27 INFO impl.TimelineClientImpl: Timeline service address: http://support-3.support.com:8188/ws/v1/timeline/

16/07/30 22:03:27 INFO client.RMProxy: Connecting to ResourceManager at support-3.support.com/172.26.68.50:8050

16/07/30 22:03:28 INFO hdfs.DFSClient: Created HDFS_DELEGATION_TOKEN token 20 for kuldeepk on 172.26.68.47:8020

16/07/30 22:03:28 INFO security.TokenCache: Got dt for hdfs://hwx-1.hwx.com:8020; Kind: HDFS_DELEGATION_TOKEN, Service: 172.26.68.47:8020, Ident: (HDFS_DELEGATION_TOKEN token 20 for kuldeepk)

16/07/30 22:03:29 INFO impl.TimelineClientImpl: Timeline service address: http://support-3.support.com:8188/ws/v1/timeline/

16/07/30 22:03:29 INFO client.RMProxy: Connecting to ResourceManager at support-3.support.com/172.26.68.50:8050

16/07/30 22:03:29 INFO hdfs.DFSClient: Created HDFS_DELEGATION_TOKEN token 24 for kuldeepk on ha-hdfs:support

16/07/30 22:03:29 INFO security.TokenCache: Got dt for hdfs://support; Kind: HDFS_DELEGATION_TOKEN, Service: ha-hdfs:support, Ident: (HDFS_DELEGATION_TOKEN token 24 for kuldeepk)

16/07/30 22:03:29 INFO mapreduce.JobSubmitter: number of splits:1

16/07/30 22:03:29 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1469916118318_0003

16/07/30 22:03:29 INFO mapreduce.JobSubmitter: Kind: HDFS_DELEGATION_TOKEN, Service: 172.26.68.47:8020, Ident: (HDFS_DELEGATION_TOKEN token 20 for kuldeepk)

16/07/30 22:03:29 INFO mapreduce.JobSubmitter: Kind: HDFS_DELEGATION_TOKEN, Service: ha-hdfs:support, Ident: (HDFS_DELEGATION_TOKEN token 24 for kuldeepk)

16/07/30 22:03:30 INFO impl.YarnClientImpl: Submitted application application_1469916118318_0003

16/07/30 22:03:31 INFO mapreduce.Job: The url to track the job: http://support-3.support.com:8088/proxy/application_1469916118318_0003/

16/07/30 22:03:31 INFO tools.DistCp: DistCp job-id: job_1469916118318_0003

16/07/30 22:03:31 INFO mapreduce.Job: Running job: job_1469916118318_0003

16/07/30 22:03:43 INFO mapreduce.Job: Job job_1469916118318_0003 running in uber mode : false

16/07/30 22:03:43 INFO mapreduce.Job: map 0% reduce 0%

16/07/30 22:03:52 INFO mapreduce.Job: map 100% reduce 0%

16/07/30 22:03:53 INFO mapreduce.Job: Job job_1469916118318_0003 completed successfully

16/07/30 22:03:53 INFO mapreduce.Job: Counters: 32

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=142927

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=346

HDFS: Number of bytes written=45

HDFS: Number of read operations=12

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Other local map tasks=1

Total time spent by all maps in occupied slots (ms)=14324

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=7162

Total vcore-seconds taken by all map tasks=7162

Total megabyte-seconds taken by all map tasks=7333888

Map-Reduce Framework

Map input records=1

Map output records=1

Input split bytes=118

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=77

CPU time spent (ms)=1210

Physical memory (bytes) snapshot=169885696

Virtual memory (bytes) snapshot=2337554432

Total committed heap usage (bytes)=66584576

File Input Format Counters

Bytes Read=228

File Output Format Counters

Bytes Written=45

org.apache.hadoop.tools.mapred.CopyMapper$Counter

BYTESSKIPPED=0

SKIP=1

Note – hwx-1.hwx.com is the Active Namenode of Cluster 1.

Please comment if you have any feedback/questions/suggestions. Happy Hadooping!! 🙂

References:

https://community.hortonworks.com/articles/18686/kerberos-cross-realm-trust-for-distcp.html

Created on 08-11-2016 12:25 PM


In the auth-to-local rule set examples, DEFAULT should be the last rule.

Also, this is a bit more than setting up the trust relationship between two MIT KDCs. It also includes some details about allowing two clusters to access each other's data. To do this, I believe that there are a few more steps. See https://community.hortonworks.com/articles/18686/kerberos-cross-realm-trust-for-distcp.html

Created on 08-12-2016 05:17 AM - edited 08-17-2019 10:50 AM


@Robert Levas - DEFAULT in the middle worked when I tried this setup.

I checked the given article, and I agree that modifying dfs.namenode.kerberos.principal.pattern was missed while writing this article. I will add that missing step now.

Thank you! 🙂