Cloudera Community: Community Articles

Spark Remote Debugging
Created on 02-07-2016 11:28 AM - edited 08-17-2019 01:18 PM

In the big data and distributed systems world, you may have done a world-class job in development and unit testing, but chances are you did it on sample data. Moreover, in development you probably did not run on a truly distributed system, but on a single machine simulating distribution. On the production cluster you can rely on logs, but sometimes you would also like to connect a remote debugger and the other tools you are used to. In this blog post I will go over an example in Java and Eclipse, partly because I use those and partly because I mostly see Scala examples, so let's give Java a little love.

Setting up

I have installed a Hortonworks Sandbox for this exercise; you can download it here. You will also need to open a port for the debugger to bind to. In my last post I used port 7777, so I'll stick with it here as well.

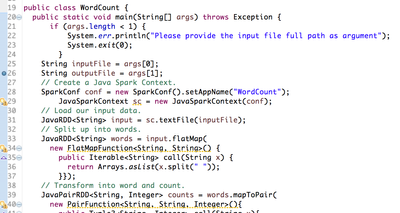

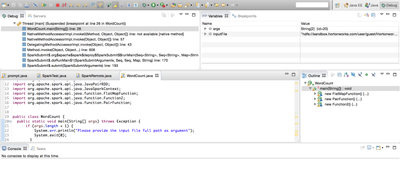
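Before launching, it can help to confirm that nothing else is already listening on the debug port on the machine that will run the Spark driver, since the JDWP agent must be able to bind it. The sketch below uses only `java.net.ServerSocket`; the class name `DebugPortCheck` is hypothetical. It checks local binding only, not whether the sandbox's port forwarding or firewall lets your IDE reach the port, and it is best effort: another process could take the port right after the probe releases it.

```java
import java.io.IOException;
import java.net.ServerSocket;

/** Hypothetical helper: checks whether a TCP port can be bound on this machine. */
final class DebugPortCheck {
    private DebugPortCheck() {}

    /**
     * Returns true if the port could be bound, i.e. nothing is listening on it,
     * so a JDWP agent started afterwards should be able to use it.
     */
    static boolean isPortFree(int port) {
        try (ServerSocket probe = new ServerSocket(port)) {
            return true;   // bind succeeded; try-with-resources releases the port
        } catch (IOException e) {
            return false;  // typically a BindException: the port is already in use
        }
    }

    public static void main(String[] args) {
        int port = args.length > 0 ? Integer.parseInt(args[0]) : 7777;
        System.out.println("Port " + port + (isPortFree(port) ? " is free" : " is already in use"));
    }
}
```

Run it on the node that will host the driver (the sandbox, in yarn-client mode) with the port you intend to use as the only argument.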

First, we will use the standard word count example found in most Spark tutorials. Set up a quick Maven project to bundle your jar and any dependent libraries you might be using. Notice the breakpoint on line 26; it will make sense later on.
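The word count code itself is not reproduced in the text. As a hedged sketch, the core split-and-count logic can be kept in plain Java so it is unit-testable without a cluster; `WordCountLogic` and `countWords` are hypothetical names, not the author's code. In a Spark Java job the same steps typically map to `flatMap` (lines to words), `mapToPair` (word to `(word, 1)`) and `reduceByKey` (summing the counts).

```java
import java.util.Arrays;
import java.util.Map;
import java.util.TreeMap;
import java.util.function.Function;
import java.util.stream.Collectors;
import java.util.stream.Stream;

/** Hypothetical plain-Java version of the word count logic, for local unit tests. */
final class WordCountLogic {
    private WordCountLogic() {}

    /** Splits each line on whitespace and counts occurrences of each word, sorted by word. */
    static Map<String, Long> countWords(Stream<String> lines) {
        return lines
                .flatMap(line -> Arrays.stream(line.trim().split("\\s+"))) // like Spark's flatMap
                .filter(word -> !word.isEmpty())                           // empty lines yield ""
                .collect(Collectors.groupingBy(Function.identity(),         // like mapToPair + reduceByKey
                        TreeMap::new, Collectors.counting()));
    }

    public static void main(String[] args) {
        System.out.println(countWords(Stream.of("to be or", "not to be")));
    }
}
```

Running `main` prints `{be=2, not=1, or=1, to=2}`. Keeping this logic separate from the Spark context is a design choice that lets the same assertions run in a plain JUnit test before you ever deploy.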

Once your code is done, your unit tests have passed, and you are ready to deploy, go ahead and build the jar and push it out to the cluster.

Spark deployment mode

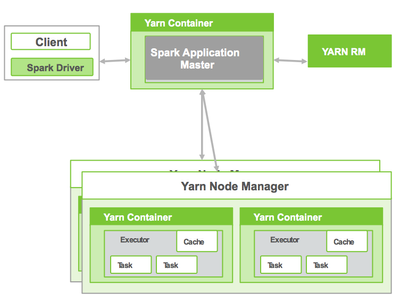

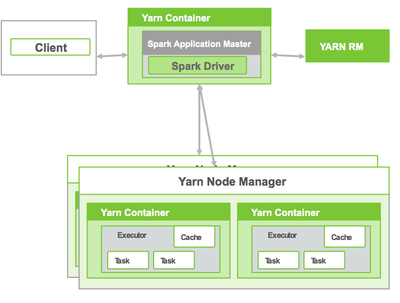

Spark can run on three cluster managers: Standalone, YARN, and Mesos. Most examples talk about Standalone, so I will focus on YARN. In YARN, every application has an application master process, started in the application's first container; it is responsible for requesting resources for the application and driving the application as a whole.

For Spark, this means you get to choose whether the YARN application master runs the whole application, and hence the Spark driver (yarn-cluster mode), or whether your client stays active and keeps the Spark driver (yarn-client mode).

Remote debug launch

Now that your jar is on the cluster and you are ready to debug, you need to submit your Spark job with debug options so your IDE can attach to it. Depending on your Spark version there are different ways to go about this; I am on Spark > 1.0, so I will use:

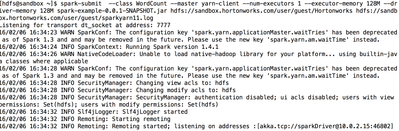

Notice address=7777, the port I mentioned earlier, and suspend=y, which makes the process wait for my IDE to attach. Let's now launch our normal spark-submit command.

Looking at the command a little closer, I have specified low memory settings suited to my sandbox, and given Hortonworks as the input file and sparkyarn11.log as the output file. Notice that we are using the --master yarn-client deployment mode here. As the prompt shows, the process is now waiting on port 7777 for the IDE to attach.
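The debug launch can be sketched in Java as well. The exact command only appears in a screenshot, so the main class `com.example.WordCount`, the jar `wordcount.jar` and the `512m` memory sizes below are hypothetical placeholders; the input `Hortonworks`, the output `sparkyarn11.log`, `--master yarn-client` and port 7777 come from the text. It assumes the debug options travel through the `SPARK_SUBMIT_OPTS` environment variable, which the spark-submit launcher adds to the JVM it starts; in yarn-client mode that JVM hosts the Spark driver. `SubmitCommand` and its methods are hypothetical names.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;

/** Hypothetical sketch of the yarn-client debug launch; class, jar and memory values are placeholders. */
final class SubmitCommand {
    private SubmitCommand() {}

    /** JDWP agent option: server=y listens on the port, suspend=y waits for the debugger. */
    static String agentOption(int port, boolean suspend) {
        if (port < 1 || port > 65535) {
            throw new IllegalArgumentException("port out of range: " + port);
        }
        // The transport name is dt_socket, with an underscore.
        return "-agentlib:jdwp=transport=dt_socket,server=y,suspend="
                + (suspend ? "y" : "n") + ",address=" + port;
    }

    /** spark-submit arguments for the word count job in yarn-client mode. */
    static List<String> command(String input, String output) {
        return new ArrayList<>(Arrays.asList(
                "spark-submit",
                "--class", "com.example.WordCount",   // hypothetical main class
                "--master", "yarn-client",            // Spark 1.x client deploy mode
                "--driver-memory", "512m",            // hypothetical low-memory settings
                "--executor-memory", "512m",
                "wordcount.jar",                      // hypothetical application jar
                input,
                output));
    }

    /** Sets SPARK_SUBMIT_OPTS only when debugging; otherwise removes any inherited value. */
    static void configureDebug(Map<String, String> env, boolean debug) {
        if (debug) {
            env.put("SPARK_SUBMIT_OPTS", agentOption(7777, true));
        } else {
            env.remove("SPARK_SUBMIT_OPTS");
        }
    }

    public static void main(String[] args) throws Exception {
        boolean debug = args.length > 0 && args[0].equals("--debug");
        ProcessBuilder pb = new ProcessBuilder(command("Hortonworks", "sparkyarn11.log"));
        configureDebug(pb.environment(), debug);
        pb.inheritIO();  // show spark-submit output, including the wait-for-debugger prompt
        System.exit(pb.start().waitFor());
    }
}
```

Passing `--debug` turns debugging on; without it the variable is removed, so the same job runs normally (note this also drops any other options you had in `SPARK_SUBMIT_OPTS`). The transport name must be spelled `dt_socket`; a hyphen makes the JVM abort with "transport library not found". On JDK 9 and later, a bare `address=7777` listens on localhost only, so use `address=*:7777` if the IDE runs on another machine.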

IDE

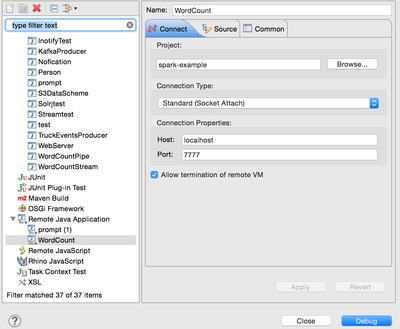

Back in the IDE, we use the remote debug function (in Eclipse, a Remote Java Application debug configuration) to attach to our Spark driver.

Once you launch the debug configuration, the prompt on your cluster will show that the IDE has attached, and you can now drive the job from Eclipse directly.

Back in Eclipse, you will be switched to the Debug perspective and can step from breakpoint to breakpoint. Remember the breakpoint I pointed out in the code: your IDE is stopped on it, waiting to move on. In the Variables panel on the right you can see the input file variable we get from the command line; it is set to hdfs://sandbox.hortonworks.com/user/guest/Hortonworks, exactly matching our command-line input.
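Under the hood, the IDE's remote debug launch attaches to the JDWP socket that the Spark driver opened. As an illustrative sketch of that step, the JDK's own JDI API can perform the same attach programmatically. Eclipse ships its own JDI implementation, so this is not the code Eclipse runs, but both speak JDWP. JDI lives in the `jdk.jdi` module on JDK 9 and later (in `lib/tools.jar` on JDK 8). `RemoteAttach` is a hypothetical name; the host `sandbox.hortonworks.com` and port 7777 come from the article.

```java
import com.sun.jdi.Bootstrap;
import com.sun.jdi.VirtualMachine;
import com.sun.jdi.connect.AttachingConnector;
import com.sun.jdi.connect.Connector;
import com.sun.jdi.connect.IllegalConnectorArgumentsException;
import java.io.IOException;
import java.util.Map;

/** Sketch: attach to a JVM started with -agentlib:jdwp=transport=dt_socket,server=y,... */
final class RemoteAttach {
    private RemoteAttach() {}

    /** Finds the JDK's socket attaching connector. */
    static AttachingConnector socketAttach() {
        for (AttachingConnector c : Bootstrap.virtualMachineManager().attachingConnectors()) {
            if (c.name().equals("com.sun.jdi.SocketAttach")) {
                return c;
            }
        }
        throw new IllegalStateException("SocketAttach connector not available");
    }

    /** Connects to host:port, the same target an IDE remote debug configuration uses. */
    static VirtualMachine attach(String host, int port)
            throws IOException, IllegalConnectorArgumentsException {
        AttachingConnector connector = socketAttach();
        Map<String, Connector.Argument> arguments = connector.defaultArguments();
        arguments.get("hostname").setValue(host);
        arguments.get("port").setValue(Integer.toString(port));
        return connector.attach(arguments);
    }

    public static void main(String[] args) throws Exception {
        VirtualMachine vm = attach("sandbox.hortonworks.com", 7777);
        System.out.println("Attached to " + vm.name() + " " + vm.version());
        vm.resume();   // with suspend=y the target waits until the debugger lets it run
        vm.dispose();  // detach and let the Spark driver continue
    }
}
```

The hostname and port arguments correspond to the Host and Port fields of an Eclipse Remote Java Application configuration.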

Great, we have just set up our first remote debugging session on Spark. Go ahead and try it with --master yarn-cluster and see what changes.
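One difference to expect in yarn-cluster mode: the driver no longer runs in the spark-submit JVM but inside the YARN application master container on a cluster node, so `SPARK_SUBMIT_OPTS` only affects the local client process. A hedged sketch of handing the same JDWP agent to the driver through Spark's `spark.driver.extraJavaOptions` property instead; `ClusterModeSubmit`, the main class `com.example.WordCount` and the jar `wordcount.jar` are hypothetical placeholders.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Hypothetical sketch: yarn-cluster launch with the JDWP agent on the driver JVM. */
final class ClusterModeSubmit {
    private ClusterModeSubmit() {}

    static List<String> command(int port) {
        String agent = "-agentlib:jdwp=transport=dt_socket,server=y,suspend=y,address=" + port;
        return new ArrayList<>(Arrays.asList(
                "spark-submit",
                "--class", "com.example.WordCount",   // hypothetical main class
                "--master", "yarn-cluster",           // Spark 1.x cluster deploy mode
                // The driver runs in the application master, so the agent is passed
                // as a driver JVM option rather than through SPARK_SUBMIT_OPTS.
                "--conf", "spark.driver.extraJavaOptions=" + agent,
                "wordcount.jar",                      // hypothetical application jar
                "Hortonworks",
                "sparkyarn11.log"));
    }

    public static void main(String[] args) {
        System.out.println(String.join(" ", command(7777)));
    }
}
```

The IDE then has to attach to the node running the application master (visible in the YARN ResourceManager UI) rather than to the machine where you ran spark-submit, and the debug port must be free and reachable on that node.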

Created on 12-01-2016 03:38 PM


@nmaillard When I run the spark-submit command in the sandbox after setting SPARK_SUBMIT_OPTS as specified above, I get the error below:

************

ERROR: transport library not found: dt-socket
ERROR: JDWP Transport dt-socket failed to initialize, TRANSPORT_LOAD(509)
JDWP exit error AGENT_ERROR_TRANSPORT_LOAD(196): No transports initialized [../../../src/share/back/debugInit.c:750]
FATAL ERROR in native method: JDWP No transports initialized, jvmtiError=AGENT_ERROR_TRANSPORT_LOAD(196)
Aborted

***********

Can you please help me resolve the issue?


Created on 02-28-2017 04:37 PM


Thanks for the tutorial. I was able to set up debugging in standalone mode. A few questions: I tried many other ports, but it worked only on 7777; with the others it said "no transports initialized". Next, is there any way to control whether I run in debug mode or regular mode? Sorry if it's a very beginner's question. Thanks again.