Community Articles




Community Manager

Created on 12-10-2019 03:42 AM, edited on 01-04-2021 05:01 AM by K23

This video explains how to configure Ambari Metrics System (AMS) High Availability.

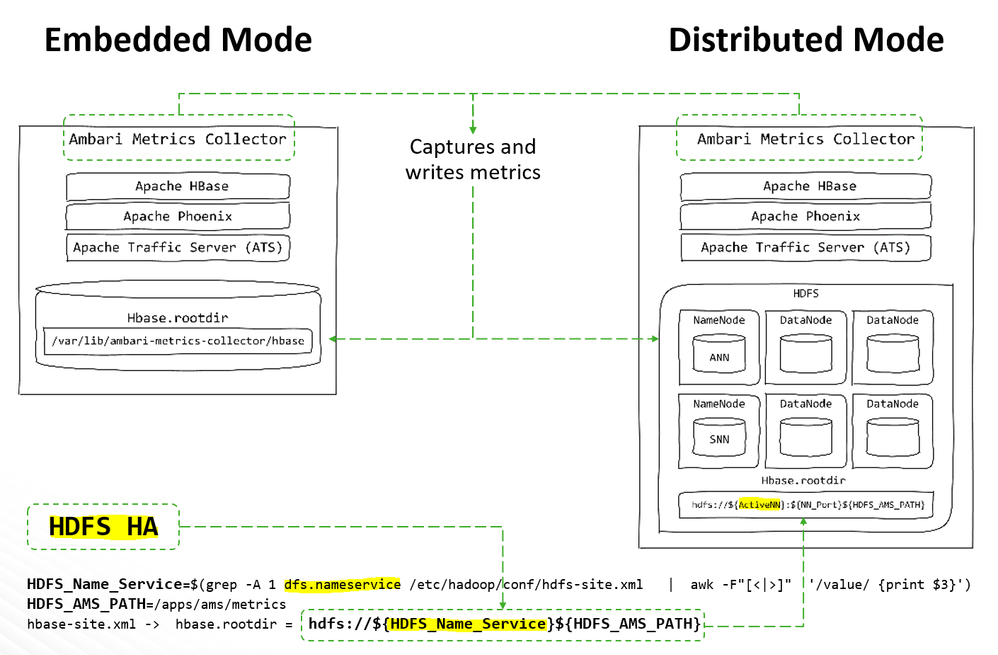

To enable AMS high availability, the Metrics Collector must be configured to run in distributed mode. In distributed mode, the Collector writes metrics to HDFS instead of the local file system, and its components run as separate distributed processes, which helps manage CPU and memory usage.

The following steps assume a cluster configured with a highly available NameNode. In that setup:

- Set the HBase root directory value to use the HDFS name service instead of a NameNode hostname, so that the path stays valid after a NameNode failover.

- Migrate existing data from the local store to HDFS before switching to distributed mode.
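As a minimal sketch of the first point, the helpers below read `dfs.nameservices` from an `hdfs-site.xml` file and build the HBase root directory URL from it. The function names are hypothetical, and the XML parsing assumes each `<name>` and its `<value>` sit on separate lines, as is typical for Hadoop configuration files.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: build the AMS HBase root directory from the HDFS
# name service rather than from a NameNode hostname.

# Print the <value> of property $2 from Hadoop-style XML file $1.
# Assumption: <name> and <value> are on separate lines.
get_xml_property() {
  local file="$1" key="$2"
  awk -v key="$key" '
    index($0, "<name>" key "</name>") { found = 1; next }
    found && /<value>/ {
      sub(/.*<value>/, "")
      sub(/<\/value>.*/, "")
      print
      exit
    }
  ' "$file"
}

# Print hdfs://<nameservice><path>. If dfs.nameservices lists several
# comma-separated services, only the first one is used.
ams_hdfs_rootdir() {
  local hdfs_site="$1" ams_path="$2" ns
  ns="$(get_xml_property "$hdfs_site" dfs.nameservices)"
  ns="${ns%%,*}"
  if [ -z "$ns" ]; then
    echo "dfs.nameservices not found in ${hdfs_site}" >&2
    return 1
  fi
  printf 'hdfs://%s%s\n' "$ns" "$ams_path"
}

# Example:
# ams_hdfs_rootdir /etc/hadoop/conf/hdfs-site.xml /apps/ams/metrics
```

Because the URL names the service rather than a host, HDFS clients resolve the currently active NameNode themselves.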

To switch the Metrics Collector from embedded mode to distributed mode, update the Metrics Service operation mode and the location where the metrics are being stored. In summary, the following steps are required:

- Stop Ambari Metrics System

- Prepare the environment to migrate from the local file system to HDFS

- Migrate Collector data to HDFS

- Configure distributed mode using Ambari

- Restart all affected services and monitor the Metrics Collector log

- Stop all the services associated with the AMS component using Ambari

AmbariUI / Services / Ambari Metrics / Summary / Action / Stop
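The same stop can also be scripted against the Ambari REST API, which helps when these steps are automated. This is a sketch: the Ambari URL, cluster name, and credentials are placeholders, and the function names are hypothetical. In the Ambari API, a service state of `INSTALLED` means stopped and `STARTED` means running.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: change the Ambari Metrics service state through the
# Ambari REST API. URL, cluster, and credentials are placeholders.

# Print the request body that sets a service's desired state.
# "INSTALLED" = stopped, "STARTED" = running.
service_state_payload() {
  local state="$1" context="$2"
  printf '{"RequestInfo":{"context":"%s"},"Body":{"ServiceInfo":{"state":"%s"}}}\n' \
    "$context" "$state"
}

# PUT the desired state for AMBARI_METRICS; Ambari requires the X-Requested-By header.
set_ams_state() {
  local ambari_url="$1" cluster="$2" auth="$3" state="$4"
  curl -s -u "$auth" -H 'X-Requested-By: ambari' -X PUT \
    -d "$(service_state_payload "$state" "Set AMS to ${state}")" \
    "${ambari_url}/api/v1/clusters/${cluster}/services/AMBARI_METRICS"
}

# Example (placeholder values), stopping AMS:
# set_ams_state http://ambari.example.com:8080 mycluster admin:admin INSTALLED
```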

- Prepare the environment to migrate from the local file system to HDFS

```shell
AMS_User=ams
AMS_Group=hadoop
# Local HBase root directory of the embedded collector (hbase.rootdir without the file:// prefix)
AMS_Embedded_RootDir=$(grep -C 2 hbase.rootdir /etc/ambari-metrics-collector/conf/hbase-site.xml | awk -F"[<|>|:]" '/value/ {print $4}' | sed 's|//||1')
# Host and RPC port of the currently active NameNode
ActiveNN=$(su -l hdfs -c "hdfs haadmin -getAllServiceState | awk -F '[:| ]' '/active/ {print \$1}'")
NN_Port=$(su -l hdfs -c "hdfs haadmin -getAllServiceState | awk -F '[:| ]' '/active/ {print \$2}'")
# HDFS name service, used for the HBase root directory in distributed mode
HDFS_Name_Service=$(grep -A 1 dfs.nameservice /etc/hadoop/conf/hdfs-site.xml | awk -F"[<|>]" '/value/ {print $3}')
HDFS_AMS_PATH=/apps/ams/metrics
```

- Create the folder for Collector data in HDFS

```shell
su -l hdfs -c "hdfs dfs -mkdir -p ${HDFS_AMS_PATH}"
su -l hdfs -c "hdfs dfs -chown ${AMS_User}:${AMS_Group} ${HDFS_AMS_PATH}"
```

- Update permissions so that the collector data can be copied from the local file system to HDFS

```shell
# List the permissions of every component of the path, then make the staging directory readable
namei -l ${AMS_Embedded_RootDir}/staging
chmod +rx ${AMS_Embedded_RootDir}/staging
```

- Copy collector data from the local file system to HDFS

```shell
# Note: no space between the NameNode host and ":port" in the HDFS URL
su -l hdfs -c "hdfs dfs -copyFromLocal ${AMS_Embedded_RootDir} hdfs://${ActiveNN}:${NN_Port}${HDFS_AMS_PATH}"
su -l hdfs -c "hdfs dfs -chown -R ${AMS_User}:${AMS_Group} ${HDFS_AMS_PATH}"
```

- Configure the collector for distributed mode using Ambari:

```
AmbariUI / Services / Ambari Metrics / Configs / Metrics Service operation mode = distributed
AmbariUI / Services / Ambari Metrics / Configs / Advanced ams-hbase-site / hbase.cluster.distributed = true
AmbariUI / Services / Ambari Metrics / Configs / Advanced ams-hbase-site / HBase root directory = hdfs://AMSHA/apps/ams/metrics
AmbariUI / Services / HDFS / Configs / Custom core-site
    hadoop.proxyuser.hdfs.groups = *
    hadoop.proxyuser.root.groups = *
    hadoop.proxyuser.hdfs.hosts = *
    hadoop.proxyuser.root.hosts = *
AmbariUI / Services / HDFS / Configs / Advanced hdfs-site / HDFS Short-circuit read = true (checked)
AmbariUI -> Restart All Required
```

Here, AMSHA is the HDFS name service of the example cluster (the value captured in ${HDFS_Name_Service}), so the HBase root directory does not depend on which NameNode is active.
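For reference, the Ambari fields above map to the configuration properties printed by the sketch below, shown as `<config-type>/<key>=<value>`. It only prints them and changes nothing; the function name is hypothetical, and the root directory is passed in so it can be built from the name service.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the properties behind the Ambari fields used to
# switch AMS to distributed mode. Read-only; nothing is applied to the cluster.
ams_distributed_settings() {
  local rootdir="$1"   # e.g. hdfs://<nameservice>/apps/ams/metrics
  cat <<EOF
ams-site/timeline.metrics.service.operation.mode=distributed
ams-hbase-site/hbase.cluster.distributed=true
ams-hbase-site/hbase.rootdir=${rootdir}
hdfs-site/dfs.client.read.shortcircuit=true
EOF
}

# Example:
# ams_distributed_settings hdfs://AMSHA/apps/ams/metrics
```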

Note: Impersonation is the ability of a service user to securely access data in Hadoop on behalf of another user. When a proxy user is configured, any access through the proxy is executed with the impersonated user's existing privilege levels rather than with those of a superuser such as hdfs. The proxy host settings work the same way: they limit the hosts from which impersonated connections are allowed. For this article and for testing purposes, all users and all hosts are allowed.
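The Custom core-site entries end up as `<property>` elements in `core-site.xml`. The minimal sketch below renders them for one proxy user, defaulting hosts and groups to `*` as in this article; passing explicit values shows how the same entries could be narrowed. The function name and the example host and group are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print core-site.xml properties for proxy user $1.
# $2 = allowed hosts, $3 = allowed groups (both default to "*", as in this article).
proxyuser_properties() {
  local user="$1" hosts="${2:-*}" groups="${3:-*}"
  cat <<EOF
<property>
  <name>hadoop.proxyuser.${user}.hosts</name>
  <value>${hosts}</value>
</property>
<property>
  <name>hadoop.proxyuser.${user}.groups</name>
  <value>${groups}</value>
</property>
EOF
}

# Examples:
# proxyuser_properties hdfs                                  # allow all hosts and groups
# proxyuser_properties root c3132-node2.user.local hadoop    # narrower, hypothetical values
```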

Additionally, one of the key principles behind Apache Hadoop is that moving computation is cheaper than moving data. With short-circuit local reads, when the client and the data are on the same node, the DataNode does not need to be in the data path: the client reads the data directly from the local disk, which improves performance.
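To confirm that the setting took effect, a small check can query the client configuration with `hdfs getconf -confKey`. Short-circuit local reads also require `dfs.domain.socket.path` to be set, so the sketch checks both keys. The function name and the `HDFS_CMD` variable are hypothetical; `HDFS_CMD` only exists so the `hdfs` command can be swapped out when testing.

```shell
#!/usr/bin/env bash
# Hypothetical check: are short-circuit local reads configured on this client?
# HDFS_CMD is an assumption for testability; it defaults to the real hdfs CLI.
HDFS_CMD="${HDFS_CMD:-hdfs}"

check_shortcircuit() {
  local enabled socket
  enabled="$("$HDFS_CMD" getconf -confKey dfs.client.read.shortcircuit 2>/dev/null)"
  socket="$("$HDFS_CMD" getconf -confKey dfs.domain.socket.path 2>/dev/null)"
  # The feature needs the flag set to true and an absolute domain socket path.
  if [ "$enabled" = "true" ] && [ "${socket:0:1}" = "/" ]; then
    echo "short-circuit reads enabled (socket: ${socket})"
  else
    echo "short-circuit reads not fully configured (enabled=${enabled:-unset}, socket=${socket:-unset})" >&2
    return 1
  fi
}

# Example:
# check_shortcircuit
```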


Once the AMS is up and running, the following message is displayed in the Metrics Collector Log:

```
2018-12-12 01:21:12,132 INFO org.eclipse.jetty.server.Server: Started @14700ms
2018-12-12 01:21:12,132 INFO org.apache.hadoop.yarn.webapp.WebApps: Web app timeline started at 6188
2018-12-12 01:21:40,633 INFO org.apache.ambari.metrics.core.timeline.availability.MetricCollectorHAController:
######################### Cluster HA state ########################
CLUSTER: ambari-metrics-cluster
RESOURCE: METRIC_AGGREGATORS
PARTITION: METRIC_AGGREGATORS_0 c3132-node2.user.local_12001 ONLINE
PARTITION: METRIC_AGGREGATORS_1 c3132-node2.user.local_12001 ONLINE
##################################################
```

According to the above message, the cluster has only one collector (c3132-node2.user.local), which owns both aggregator partitions. The next logical step is to add a second Collector from Ambari Server, as follows:
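Rather than scrolling through the whole log, the most recent HA state block can be extracted with a short awk filter. This sketch assumes the default log location `/var/log/ambari-metrics-collector/ambari-metrics-collector.log` and that each entry of the block sits on its own line, ending with a line made only of `#` characters. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the most recent "Cluster HA state" block from the
# Metrics Collector log. The default path is an assumption; pass another file as $1.
ams_ha_state() {
  local log="${1:-/var/log/ambari-metrics-collector/ambari-metrics-collector.log}"
  awk '
    /Cluster HA state/ { block = $0 ORS; inblock = 1; next }  # header starts a new block
    inblock            { block = block $0 ORS }               # collect the block lines
    inblock && /^#+$/  { inblock = 0; last = block }          # closing line of # ends it
    END                { printf "%s", last }                  # print only the latest block
  ' "$log"
}

# Example:
# ams_ha_state
```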

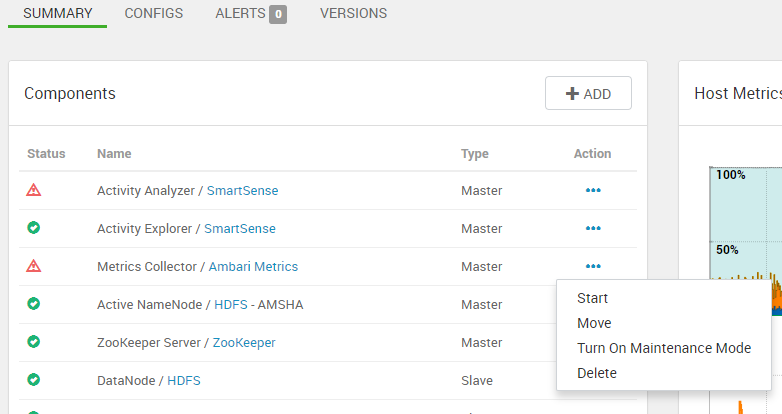

AmbariUI / Hosts / c3132-node3.user.local / Summary -> +ADD -> Metrics Collector

Note: c3132-node3.user.local is the node where you will be adding the Collector.
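The same addition can be done through the Ambari REST API: create the host component, then ask Ambari to install it. This is a sketch only; the Ambari URL, cluster name, and credentials are placeholders and the function names are hypothetical. The install request runs asynchronously, so the collector should be started only after Ambari reports the installation as complete.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: add a Metrics Collector to a host via the Ambari REST API.
# URL, cluster name, and credentials are placeholders.

# Print the REST URL of a host component.
host_component_url() {
  local ambari_url="$1" cluster="$2" host="$3" component="$4"
  printf '%s/api/v1/clusters/%s/hosts/%s/host_components/%s\n' \
    "$ambari_url" "$cluster" "$host" "$component"
}

# Register METRICS_COLLECTOR on the host, then request its installation.
# $4 is "user:password" for Ambari basic authentication.
add_metrics_collector() {
  local ambari_url="$1" cluster="$2" host="$3" auth="$4" url
  url="$(host_component_url "$ambari_url" "$cluster" "$host" METRICS_COLLECTOR)"
  curl -s -u "$auth" -H 'X-Requested-By: ambari' -X POST "$url" || return 1
  curl -s -u "$auth" -H 'X-Requested-By: ambari' -X PUT \
    -d '{"HostRoles":{"state":"INSTALLED"}}' "$url"
}

# Example (placeholder URL, cluster, and credentials):
# add_metrics_collector http://ambari.example.com:8080 mycluster c3132-node3.user.local admin:admin
```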

Since distributed mode is already enabled, after adding the collector, start the service.

Once the AMS is up and running, the following message is displayed in the Metrics Collector Log:

```
2018-12-12 01:34:56,060 INFO org.apache.ambari.metrics.core.timeline.availability.MetricCollectorHAController:
######################### Cluster HA state ########################
CLUSTER: ambari-metrics-cluster
RESOURCE: METRIC_AGGREGATORS
PARTITION: METRIC_AGGREGATORS_0 c3132-node2.user.local_12001 ONLINE
PARTITION: METRIC_AGGREGATORS_1 c3132-node3.user.local_12001 ONLINE
##################################################
```

According to the above message, the cluster now has two collectors: the aggregator partitions are split between c3132-node2.user.local and c3132-node3.user.local.
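To check this without reading the block by eye, the PARTITION lines can be reduced to the set of distinct collectors that are ONLINE. This is a minimal sketch that assumes each PARTITION entry sits on its own line with the collector (`host_port`) and the state as its last two fields; the function name is hypothetical. Given the block above, it should print 2.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read a "Cluster HA state" block on stdin and print how
# many distinct collectors (host_port) are ONLINE for at least one partition.
count_online_collectors() {
  awk '
    /^PARTITION:/ && $NF == "ONLINE" { online[$(NF - 1)] = 1 }  # remember each ONLINE collector
    END { n = 0; for (c in online) n++; print n }                # number of distinct collectors
  '
}

# Example:
# count_online_collectors < ha_state.txt
```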