Tackling Data Science challenges with Hortonworks ...

Created on 02-03-2018 04:47 AM - edited 09-16-2022 01:42 AM

In this article, we will see how an organization can tackle a data science challenge for an Internet of Things (IoT) use case. It shows how a trucking company can use data and analytics to solve its logistics problem, combining Hortonworks Data Platform (HDP), Apache NiFi, and IBM’s Data Science Experience (DSX) to build a real-time data science solution that predicts driver violation events.

IBM DSX is a data science platform that brings popular data science toolkits together in one place. DSX and HDP address some of the major pain points of running data science at scale: ease of deploying and maintaining machine learning models, scaling developed models to big data, ease of using different open source tools, and collaboration. DSX comes in multiple flavors: cloud, desktop, and local. DSX can run on top of your HDP cluster to provide advanced analytics capabilities. The following diagram shows the major stages of a data science project:

Stage 1: Problem Definition

Imagine a trucking company that dispatches trucks across the country. The trucks are outfitted with sensors that collect data such as the driver's location, weather conditions, and recent events like speeding, the truck weaving out of its lane, or following too closely. This data is generated once per second and streamed back to the company’s servers. The company needs a way to process this stream and analyze it to make sure trucks are traveling safely and that drivers are not likely to commit any violations soon. All of this has to happen in real time.
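To make the incoming stream concrete, here is a minimal Python sketch of one sensor reading and a generator that emits one reading per simulated second. The field names, event labels, and probabilities are assumptions made for illustration; the article does not give the demo's actual schema.

```python
import json
import random
from dataclasses import asdict, dataclass

# Illustrative event labels based on the examples above; not the demo's actual values.
EVENT_TYPES = ("Normal", "Speeding", "Lane Departure", "Following Too Closely")


@dataclass
class TruckEvent:
    """One sensor reading from a truck (hypothetical field names)."""
    timestamp: int      # seconds since the start of the trip
    truck_id: int
    driver_id: int
    latitude: float
    longitude: float
    foggy: bool
    rainy: bool
    windy: bool
    event_type: str


def simulate_trip(truck_id, driver_id, seconds, seed=0):
    """Yield one simulated event per second of driving (no real-time sleeping)."""
    rng = random.Random(seed)
    lat, lon = 41.88, -87.63  # arbitrary starting point
    for t in range(seconds):
        lat += rng.uniform(-0.001, 0.001)
        lon += rng.uniform(-0.001, 0.001)
        yield TruckEvent(
            timestamp=t,
            truck_id=truck_id,
            driver_id=driver_id,
            latitude=round(lat, 5),
            longitude=round(lon, 5),
            foggy=rng.random() < 0.1,
            rainy=rng.random() < 0.2,
            windy=rng.random() < 0.15,
            # Most readings are uneventful; the weights are made up.
            event_type=rng.choices(EVENT_TYPES, weights=(85, 5, 5, 5))[0],
        )


def to_json(event):
    """Serialize an event as it might be streamed back to the company's servers."""
    return json.dumps(asdict(event))
```

For example, `[to_json(e) for e in simulate_trip(7, 42, 5)]` produces five JSON messages, one per simulated second. A fixed seed keeps the simulated trip reproducible between runs.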

Stage 2: ETL and Feature Extraction

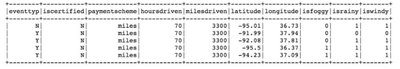

To solve this problem, I am creating a Jupyter notebook in Python, which DSX provides out of the box; DSX also offers Scala and R. This saves me time on installation and on preparing my work environment. To predict violation events, we first need to decide which features of the gathered data are important. We are using historical data that has been gathered about the trucks. With DSX, we don't need to worry about fetching data: DSX offers connectors that let users fetch data from multiple sources, such as HDFS, Hive, DB2, or any other source. Our data resides in HDFS and includes features such as location, miles driven by each driver, and weather conditions. We perform feature engineering to create new features, and exploratory analysis to find correlations between features. DSX supports open source libraries such as Matplotlib and Seaborn, and also offers IBM visualization libraries such as Brunel and PixieDust.
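As a minimal sketch of the feature engineering and correlation step, the code below derives two hypothetical features and computes the Pearson correlation of each feature with the violation label, in plain Python so it runs without a cluster. The records, field names, and derived features are illustrative assumptions; in a DSX notebook, pandas `DataFrame.corr()` would give the same Pearson coefficients for data already loaded into a DataFrame.

```python
import math

# Hypothetical historical records: miles driven, hours driven, weather flags, and
# whether a violation followed (1) or not (0). Not the demo's real data or schema.
RECORDS = [
    {"miles": 3300, "hours": 70, "foggy": 1, "rainy": 0, "windy": 0, "violation": 1},
    {"miles": 2100, "hours": 60, "foggy": 0, "rainy": 0, "windy": 0, "violation": 0},
    {"miles": 4000, "hours": 75, "foggy": 1, "rainy": 1, "windy": 0, "violation": 1},
    {"miles": 1800, "hours": 55, "foggy": 0, "rainy": 0, "windy": 1, "violation": 0},
    {"miles": 2900, "hours": 68, "foggy": 0, "rainy": 1, "windy": 0, "violation": 1},
    {"miles": 2000, "hours": 62, "foggy": 0, "rainy": 0, "windy": 0, "violation": 0},
]


def engineer(record):
    """Return a copy of one raw record with two derived (hypothetical) features added."""
    out = dict(record)
    out["miles_per_hour"] = record["miles"] / record["hours"]
    out["bad_weather"] = int(bool(record["foggy"] or record["rainy"] or record["windy"]))
    return out


def pearson(xs, ys):
    """Pearson correlation coefficient; NaN when either input has zero variance."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    # Root sums of squared deviations; their product normalizes the covariance.
    norm_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    norm_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if norm_x == 0 or norm_y == 0:
        return float("nan")
    return cov / (norm_x * norm_y)


def correlations_with_label(rows, label="violation"):
    """Correlate every feature column with the binary label column."""
    ys = [row[label] for row in rows]
    return {
        name: pearson([row[name] for row in rows], ys)
        for name in rows[0]
        if name != label
    }
```

Calling `correlations_with_label([engineer(r) for r in RECORDS])` returns one coefficient per feature; values near +1 or -1 indicate features that move with the label. Six rows are far too few to mean anything statistically; the sketch only shows the mechanics.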

Stage 3: Machine Learning

Once the data is cleaned and ready, we build a binary classification model. In this demo, we use the SparkML Random Forest classifier to classify whether a driver is likely to commit a violation (T) or not (F). We split the data 80:20 into training and test sets and train the model on the training set. After training, we evaluate the model on the test data for accuracy, precision, recall, and area under the ROC curve. Once we are satisfied with the model's performance, we save the model and deploy it.

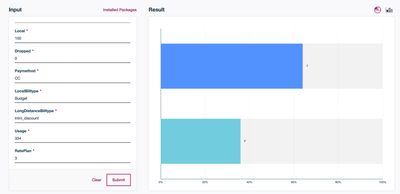
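The article trains a SparkML Random Forest classifier; the sketch below does not reproduce that model. Instead, it shows in plain Python what the 80:20 split and the evaluation metrics compute, treating T (violation) as the positive class. In Spark itself, `DataFrame.randomSplit([0.8, 0.2], seed)` performs the split and `BinaryClassificationEvaluator` reports area under the ROC curve; the helper names below are hypothetical.

```python
import random


def train_test_split(rows, train_fraction=0.8, seed=42):
    """Shuffle a copy of rows and split it into training and test sets (80:20 by default)."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


def confusion_counts(labels, predictions):
    """Count TP, FP, TN, FN with 'T' (violation) as the positive class."""
    pairs = list(zip(labels, predictions))
    tp = sum(1 for y, p in pairs if y == "T" and p == "T")
    fp = sum(1 for y, p in pairs if y == "F" and p == "T")
    tn = sum(1 for y, p in pairs if y == "F" and p == "F")
    fn = sum(1 for y, p in pairs if y == "T" and p == "F")
    return tp, fp, tn, fn


def accuracy_precision_recall(labels, predictions):
    """Accuracy over all rows; precision and recall for the 'T' class (0.0 if undefined)."""
    tp, fp, tn, fn = confusion_counts(labels, predictions)
    accuracy = (tp + tn) / len(labels)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall


def roc_auc(labels, scores):
    """Area under the ROC curve: the probability that a random 'T' example
    scores higher than a random 'F' example, counting ties as half."""
    pos = [s for y, s in zip(labels, scores) if y == "T"]
    neg = [s for y, s in zip(labels, scores) if y == "F"]
    if not pos or not neg:
        raise ValueError("ROC AUC needs at least one T and one F example")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))
```

For example, with labels `["T", "F", "T", "F", "F"]`, predictions `["T", "F", "F", "F", "T"]`, and violation probabilities `[0.9, 0.2, 0.4, 0.3, 0.6]`, `accuracy_precision_recall` returns `(0.6, 0.5, 0.5)` and `roc_auc` returns 5/6 (about 0.83). AUC is computed from the probabilities rather than the T/F labels, which is why it can differ from accuracy.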

Stage 4: Model Deployment and Management

DSX deploys models as REST endpoints, which lets developers easily access the deployed models and integrate them into their applications. This approach can drastically reduce the time needed to build, deploy, and integrate new models: the only change required in existing code is the REST endpoint it calls. Deployed models can be tested through the DSX UI or by making RESTful API calls from a terminal.

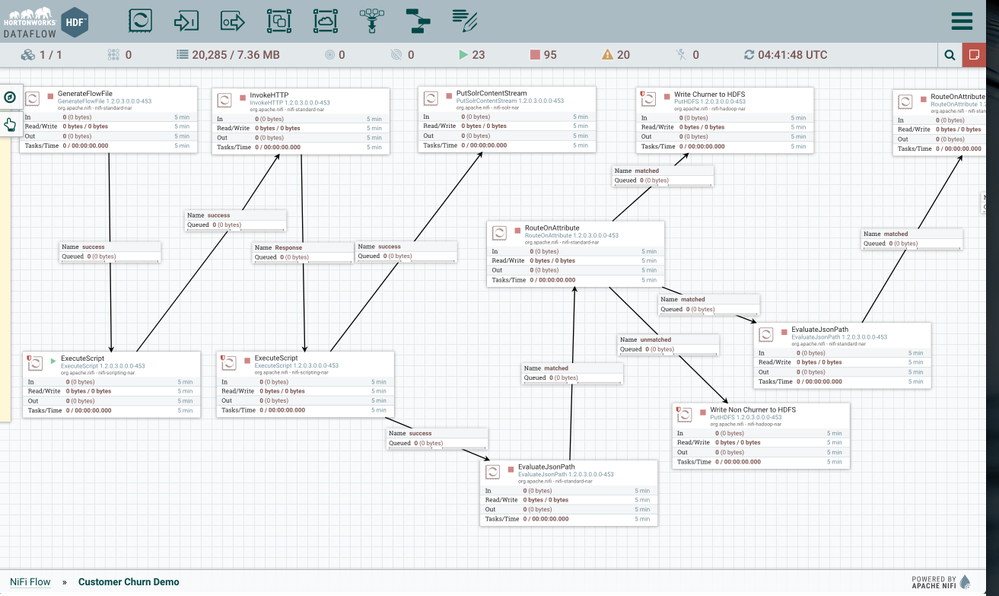

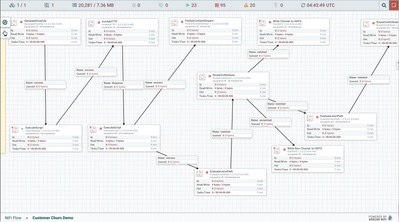
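Calling a deployed model from a terminal or an application comes down to an HTTP POST with a JSON body. The sketch below uses only Python's standard library; the URL, the bearer token, and the `fields`/`values` payload shape are placeholders and assumptions, so take the real endpoint, authentication method, and request format from your deployment's details in DSX.

```python
import json
import urllib.request

# Placeholder endpoint and token (assumptions): copy the real values from DSX.
SCORING_URL = "https://dsx.example.com/path/to/scoring/endpoint"
TOKEN = "<access-token>"


def build_scoring_request(fields, values, url=SCORING_URL, token=TOKEN):
    """Build (but do not send) a POST request carrying rows to score as JSON.

    The {"fields": [...], "values": [[...]]} payload shape is an assumption;
    check your deployment's API documentation for the exact format.
    """
    body = json.dumps({"fields": fields, "values": values}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",
        },
    )


def score(fields, values, timeout=10):
    """Send the request and return the decoded JSON response (needs a live endpoint)."""
    request = build_scoring_request(fields, values)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

For example, `build_scoring_request(["foggy", "rainy", "windy", "miles"], [[1, 0, 0, 3300]])` prepares a request for one row. Keeping request construction separate from `score` makes it easy to inspect the payload before pointing it at a real deployment, and swapping in a new model only means changing `SCORING_URL`.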

Besides being easy to deploy, models are also easy to manage once deployed. DSX can schedule evaluations of a deployed model, so a user can continuously monitor its performance and retrain it if the current model falls short of the expected level. Now that the model is deployed, we can integrate it into Apache NiFi to demonstrate real-time event scoring. We simulate an end-to-end flow in NiFi, shown in the End-To-End Application Flow diagram.
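The retraining decision behind scheduled evaluations can be sketched as a simple rule. This is a hypothetical policy, not DSX's implementation: it raises a warning when the latest evaluation falls below the expected level and recommends retraining only when the last few evaluations all do, so one noisy evaluation does not trigger a retrain. The threshold and window size are assumptions to tune per model.

```python
def check_evaluations(scores, threshold, window=3):
    """Classify a deployed model's status from its scheduled evaluation scores.

    `scores` is ordered oldest first (e.g. area under ROC from each run).
    Returns "retrain" if the last `window` scores are all below `threshold`,
    "warning" if only the latest score is below it, and "ok" otherwise.
    """
    if not scores:
        raise ValueError("no evaluations recorded yet")
    if window < 1:
        raise ValueError("window must be at least 1")
    recent = scores[-window:]
    if len(recent) == window and all(s < threshold for s in recent):
        return "retrain"
    if scores[-1] < threshold:
        return "warning"
    return "ok"
```

With a threshold of 0.8, the history `[0.9, 0.88, 0.91]` is `"ok"`, `[0.9, 0.79]` is a `"warning"`, and `[0.9, 0.78, 0.77, 0.76]` calls for `"retrain"`.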

End-To-End Application Flow.

We generate simulated events with one of the NiFi processors and send them to the model hosted at the REST endpoint. The model returns T or F, based on the probability that the incoming event is a violation. Based on the prediction, we create a subsequent flow that can alert the driver.
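The decision logic in this flow can be sketched in Python. NiFi processors such as GenerateFlowFile (simulated events), InvokeHTTP (calling the model's REST endpoint), and RouteOnAttribute (branching on the prediction) could play these roles, but the article does not name the processors it uses. Here `toy_model` is a hypothetical stand-in for the REST-hosted model, the 0.5 probability threshold is an assumption, and `alert_driver` only builds a message string.

```python
def label_from_probability(p_violation, threshold=0.5):
    """Map the model's violation probability to the T/F label used for routing."""
    return "T" if p_violation >= threshold else "F"


def route_events(events, predict_proba, threshold=0.5):
    """Score each event, tag it with its prediction, and split alerts (T) from normal events (F)."""
    alerts, normal = [], []
    for event in events:
        label = label_from_probability(predict_proba(event), threshold)
        tagged = {**event, "prediction": label}
        (alerts if label == "T" else normal).append(tagged)
    return alerts, normal


def toy_model(event):
    """Hypothetical stand-in for the REST-hosted model: a hand-made risk score in [0, 1]."""
    risk = 0.1 + 0.3 * event["foggy"] + 0.4 * event["speeding"]
    return min(risk, 1.0)


def alert_driver(event):
    """Hypothetical alert action; a real flow might send this to a messaging system instead."""
    return f"ALERT driver {event['driver_id']}: high probability of a violation"
```

For example, routing `[{"driver_id": 1, "foggy": 0, "speeding": 0}, {"driver_id": 2, "foggy": 1, "speeding": 1}]` through `route_events(..., toy_model)` puts driver 2 (risk 0.8) on the alert branch and driver 1 (risk 0.1) on the normal branch. Passing the model in as a function keeps the routing logic independent of how scoring is done, whether locally or over REST.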

Summary

A natural next step for this demo is to build a dashboard that visualizes the results, improves performance monitoring, and triggers alerts for the trucking fleet. These alerts would be useful both to trucking management and to individual drivers, who could take corrective action to reduce the probability of a violation.

For further reading and a more detailed demo, please refer to this article: IOT AND DATA SCIENCE – A TRUCKING DEMO ON DSX LOCAL WITH APACHE NIFI