TensorFlow Serving with Docker on YARN

Created on 01-16-2019 12:07 PM - edited 08-17-2019 05:12 AM

Abstract

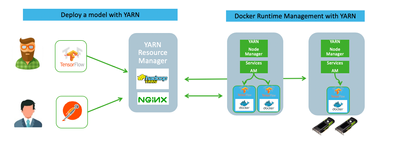

This article shows how to run TensorFlow Serving with Apache Hadoop 3.1 and YARN resource management. YARN starts, controls, and destroys the TensorFlow Serving Docker container in a Hadoop cluster. Client applications use RESTful API calls to communicate with the ML application, e.g. to make predictions.

Infrastructure Setup

Allocate a CentOS 7.4 virtual machine and install:

- Docker CE (install it and start the Docker service)

- The required packages: amber, curl, wget, unzip, git

- The HDP Sandbox (download and install it as shown below; see the article Docker on YARN - Sandbox)

wget https://github.com/SamHjelmfelt/Amber/archive/master.zip
unzip master.zip
cd Amber-master
./amber.sh createFromPrebuiltSample samples/yarnquickstart/yarnquickstart-sample.ini

Output snippet:

...
Verifying ambari-agent process status...
Ambari Agent successfully started
Agent PID at: /run/ambari-agent/ambari-agent.pid
Agent out at: /var/log/ambari-agent/ambari-agent.out
Agent log at: /var/log/ambari-agent/ambari-agent.log
Waiting for agent heartbeat...
Starting all services. Visit http://localhost:8080 to view the status

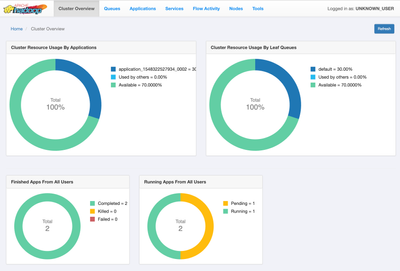

Check that the installation succeeded by logging in to Ambari in a web browser at http://hostname:8080 (login: admin/admin) and going to YARN -> Resource Manager UI.

Alternatively, check the container state with the Docker CLI:

# docker ps
CONTAINER ID   IMAGE   COMMAND   CREATED   STATUS   PORTS   NAMES
3da7cecdb948   samhjelmfelt/amber_yarnquickstart:3.0.1.0-187   "/usr/sbin/init"   36 minutes ago   Up 36 minutes   0.0.0.0:8042->8042/tcp, 0.0.0.0:8080->8080/tcp, 0.0.0.0:8088->8088/tcp   resourcemanager.yarnquickstart

Download and update the tensorflow/serving image

Next, we download the tensorflow/serving image from the Docker repository and update it as follows.

See: TensorFlow Serving Docker

Download the TensorFlow model, e.g. from GitHub:

mkdir -p /tmp/tfserving
cd /tmp/tfserving
git clone https://github.com/tensorflow/serving

Output:

Cloning into 'serving'...
remote: Enumerating objects: 134, done.
remote: Counting objects: 100% (134/134), done.
remote: Compressing objects: 100% (71/71), done.
remote: Total 14717 (delta 93), reused 93 (delta 63), pack-reused 14583
Receiving objects: 100% (14717/14717), 4.20 MiB | 163.00 KiB/s, done.
Resolving deltas: 100% (10777/10777), done.

Deploy the model saved_model_half_plus_two_cpu with the TensorFlow Serving REST API. This model is a very simple function that divides the input value by two and then adds two, e.g. input 1.0 gives output 2.5, since (1.0 / 2) + 2 = 2.5.

docker run -p 8501:8501 --mount type=bind,source=/tmp/tfserving/serving/tensorflow_serving/servables/tensorflow/testdata/saved_model_half_plus_two_cpu,target=/models/half_plus_two -e MODEL_NAME=half_plus_two -t tensorflow/serving

Now we need to add a valid user and group to the running TensorFlow container. We provision jobs in YARN as the Unix user ambari-qa (uid: 1002), who belongs to the Unix group hadoop (gid: 1001). The uid and gid are required because YARN starts the Docker container with these numeric IDs.

First, identify the container (here we use the ID):

docker ps

Output:

CONTAINER ID   IMAGE   COMMAND
1bbcb1a800a8   tensorflow/serving   "/usr/bin/tf_serving..."
2d01ca988f2f   samhjelmfelt/amber..   "/usr/sbin/init"

Next, add the system user, the group, and the initial model to the TensorFlow container. Then create a new image with docker commit:

docker exec -it 1bbcb1a800a8 sh -c "groupadd hadoop -g 1001"
docker exec -it 1bbcb1a800a8 sh -c "useradd ambari-qa -u 1002 -g 1001"
cd /tmp/tfserving/serving/tensorflow_serving/servables/tensorflow/testdata/
docker cp saved_model_half_plus_two_cpu 1bbcb1a800a8:/models/half_plus_two
docker commit 1bbcb1a800a8 tensorflow/yarn

Note that we have changed the name of the image to tensorflow/yarn.

Create YARN Application definition

Create a YARN service definition by copying the definition below into a file named, e.g., tf.json. The artifact id is the image committed above, tensorflow/yarn.

{
  "name": "tensorflow-serving",
  "version": "1.0.0",
  "description": "Tensorflow example",
  "components": [
    {
      "name": "tensorflow",
      "number_of_containers": 1,
      "artifact": {
        "id": "tensorflow/yarn",
        "type": "DOCKER"
      },
      "launch_command": "",
      "resource": {
        "cpus": 1,
        "memory": "512"
      },
      "configuration": {
        "env": {
          "YARN_CONTAINER_RUNTIME_DOCKER_RUN_OVERRIDE_DISABLE": "true"
        }
      }
    }
  ]
}
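If you prefer to generate the definition rather than paste it, the following is a minimal Python sketch. The helper names `build_service_definition` and `write_definition` are hypothetical, and the default image name assumes the tensorflow/yarn image committed earlier; adjust both the image and the memory value to your setup.

```python
import json


def build_service_definition(image="tensorflow/yarn", memory_mb=512):
    """Return a YARN service definition with a single Docker component.

    The field names mirror the tf.json shown in this article; the default
    image name is an assumption based on the earlier docker commit step.
    """
    return {
        "name": "tensorflow-serving",
        "version": "1.0.0",
        "description": "Tensorflow example",
        "components": [
            {
                "name": "tensorflow",
                "number_of_containers": 1,
                "artifact": {"id": image, "type": "DOCKER"},
                "launch_command": "",
                # The article passes memory as a string, so we do the same.
                "resource": {"cpus": 1, "memory": str(memory_mb)},
                "configuration": {
                    "env": {
                        "YARN_CONTAINER_RUNTIME_DOCKER_RUN_OVERRIDE_DISABLE": "true"
                    }
                },
            }
        ],
    }


def write_definition(path, definition):
    """Write the definition as pretty-printed JSON to the given path."""
    with open(path, "w") as handle:
        json.dump(definition, handle, indent=2)


if __name__ == "__main__":
    write_definition("tf.json", build_service_definition())
```

Running the script writes tf.json to the current directory, which can then be posted to YARN as described in this article.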

Now you can deploy the TensorFlow Serving application with the YARN REST API:

curl -X POST -H "Content-Type: application/json" "http://frothkoe10.field.hortonworks.com:8088/app/v1/services?user.name=ambari-qa" -d @tf.json

Output:

{"uri":"/v1/services/tensorflow-serving","diagnostics":"Application ID: application_1547627739370_0003","state":"ACCEPTED"}

If the job was successfully submitted to YARN, the returned state is "ACCEPTED". It takes a few seconds to allocate the resources and start the Docker container.

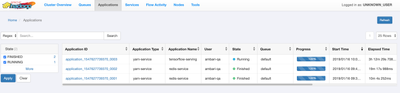

Verify in the YARN UI at http://nodename:8088/ui2/#/yarn-apps/apps that the TensorFlow Serving application is running (check the Application Name column).

You can get more details about the YARN application from the REST API on the command line:

curl "http://localhost:8088/app/v1/services/tensorflow-serving?user.name=ambari-qa" | python -m json.tool

Output:

{
  "components": [
    {
      "artifact": {
        "id": "tensorflow/yarn",
        "type": "DOCKER"
      },
      "configuration": {
        "env": {
          "MODEL_BASE_PATH": "/models",
          "MODEL_NAME": "half_plus_two",
          "YARN_CONTAINER_RUNTIME_DOCKER_RUN_OVERRIDE_DISABLE": "true"
        },
        "files": [],
        "properties": {}
      },
      "containers": [
        {
          "bare_host": "resourcemanager.yarnquickstart",
          "component_instance_name": "tensorflow-0",
          "hostname": "tensorflow-0.tensorflow-serving.ambari-qa.EXAMPLE.COM",
          "id": "container_e09_1548104697693_0003_01_000002",
          "ip": "172.17.0.3",
          "launch_time": 1548165579806,
          "state": "READY"
        }
      ],
      "dependencies": [],
      "launch_command": "/usr/bin/tf_serving_entrypoint.sh",
      "name": "tensorflow",
      "number_of_containers": 1,
      "quicklinks": [],
      "resource": {
        "additional": {},
        "cpus": 1,
        "memory": "256"
      },
      "restart_policy": "ALWAYS",
      "run_privileged_container": false,
      "state": "STABLE"
    }
  ],
  "configuration": {
    "env": {},
    "files": [],
    "properties": {}
  },
  "description": "Tensorflow example",
  "id": "application_1548104697693_0003",
  "kerberos_principal": {},
  "lifetime": -1,
  "name": "tensorflow-serving",
  "quicklinks": {},
  "state": "STABLE",
  "version": "1.0.0"
}
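The container IP in this output is needed later for the proxy configuration. As a rough sketch, it can be pulled out of the status document with a few lines of Python. The function name `ready_container_ips` is hypothetical, and the sample below is a trimmed copy of the output above rather than a live API response.

```python
import json


def ready_container_ips(service_status):
    """Collect the IP addresses of all containers whose state is READY."""
    ips = []
    for component in service_status.get("components", []):
        for container in component.get("containers", []):
            if container.get("state") == "READY" and container.get("ip"):
                ips.append(container["ip"])
    return ips


# Trimmed copy of the status output shown in this article.
SAMPLE_STATUS = json.loads("""
{
  "name": "tensorflow-serving",
  "state": "STABLE",
  "components": [
    {
      "name": "tensorflow",
      "containers": [
        {
          "component_instance_name": "tensorflow-0",
          "ip": "172.17.0.3",
          "state": "READY"
        }
      ]
    }
  ]
}
""")

if __name__ == "__main__":
    print(ready_container_ips(SAMPLE_STATUS))  # ['172.17.0.3']
```

In practice you would feed it the JSON returned by the curl command above instead of the embedded sample.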

Add HTTP Proxy

Because the YARN containers do not expose ports to the external network, we add an HTTP proxy service, e.g. nginx.

mkdir proxy
cd proxy
mkdir conf.d

To configure the proxy service we need two configuration files:

nginx.conf

This file goes in the proxy directory.

user nginx;
worker_processes 1;

error_log /var/log/nginx/error.log warn;
pid /var/run/nginx.pid;

events {
    worker_connections 1024;
}

http {
    include /etc/nginx/mime.types;
    default_type application/octet-stream;

    log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_referer" '
                    '"$http_user_agent" "$http_x_forwarded_for"';

    access_log /var/log/nginx/access.log main;

    sendfile on;
    #tcp_nopush on;

    client_max_body_size 200m;
    proxy_connect_timeout 60s;
    proxy_send_timeout 60s;
    proxy_read_timeout 60s;
    proxy_buffering on;
    proxy_buffer_size 128k;
    proxy_buffers 100 128k;

    keepalive_timeout 65;

    #gzip on;

    include /etc/nginx/conf.d/*.conf;
}

conf.d/http.conf

In this file you may need to update the IP address to that of the previously started container; see the "ip": "172.17.0.3" entry in the YARN REST API output above.

server {
    listen 8500;
    location / {
        proxy_pass http://172.17.0.3:8500;
    }
}

server {
    listen 8501;
    location / {
        proxy_pass http://172.17.0.3:8501;
    }
}
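Since the container IP can change whenever YARN restarts the container, it may help to generate conf.d/http.conf instead of editing it by hand. The sketch below is one way to do that in Python. The function name `render_proxy_conf` is hypothetical, and it assumes the backend listens on the same port numbers as the proxy (TensorFlow Serving's defaults of 8500 for gRPC and 8501 for REST), which may differ from your setup.

```python
def render_proxy_conf(container_ip, ports=(8500, 8501)):
    """Render one nginx server block per port, forwarding to the container.

    Assumption: the backend port equals the listen port.
    """
    blocks = []
    for port in ports:
        blocks.append(
            "server {\n"
            f"    listen {port};\n"
            "    location / {\n"
            f"        proxy_pass http://{container_ip}:{port};\n"
            "    }\n"
            "}\n"
        )
    return "\n".join(blocks)


if __name__ == "__main__":
    # 172.17.0.3 is the container IP from the status output in this article.
    print(render_proxy_conf("172.17.0.3"))
```

Redirecting the output to conf.d/http.conf and restarting the proxy container should pick up the new address.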

Now we can run the nginx Docker container with this configuration (here we use the hortonworks/sandbox-proxy image). The -v options mount the two configuration files above into the container.

docker run --name yarn.proxy --network=amber -v /home/centos/Amber-master/proxy/nginx.conf:/etc/nginx/nginx.conf -v /home/centos/Amber-master/proxy/conf.d:/etc/nginx/conf.d -p 8500:8500 -p 8501:8501 -d hortonworks/sandbox-proxy:1.0

This docker command downloads the image and starts the proxy, which forwards incoming HTTP requests on ports 8500 and 8501 to the Docker container running the tensorflow/yarn image.

Information:

yarn.nodemanager.runtime.linux.docker.default-container-network = amber

This is the Docker network in which the TensorFlow containers run. It is important that the HTTP proxy is in the same Docker network as the TensorFlow containers. You can view or change this setting in the Ambari YARN advanced configuration.

Check that the TensorFlow model is deployed and accessible

Let's check that it works end to end by running the command from a remote client:

curl http://hostname_or_ip:8501/v1/models/half_plus_two

Output:

{
  "model_version_status": [
    {
      "version": "123",
      "state": "AVAILABLE",
      "status": {
        "error_code": "OK",
        "error_message": ""
      }
    }
  ]
}

The state should be "AVAILABLE".
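This check is easy to automate, for example in a deployment script. Here is a minimal Python sketch that inspects a status document of the shape shown above; the function name `available_versions` is hypothetical, and the sample is a copy of that output rather than a live response.

```python
import json


def available_versions(model_status):
    """Return the version strings of all model versions in state AVAILABLE."""
    return [
        entry["version"]
        for entry in model_status.get("model_version_status", [])
        if entry.get("state") == "AVAILABLE"
    ]


# Copy of the status output shown in this article.
SAMPLE_MODEL_STATUS = json.loads("""
{
  "model_version_status": [
    {
      "version": "123",
      "state": "AVAILABLE",
      "status": {"error_code": "OK", "error_message": ""}
    }
  ]
}
""")

if __name__ == "__main__":
    versions = available_versions(SAMPLE_MODEL_STATUS)
    print(versions)  # ['123']
    if not versions:
        raise SystemExit("no model version is AVAILABLE yet")
```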

Use the TensorFlow model

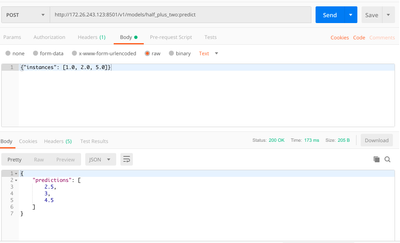

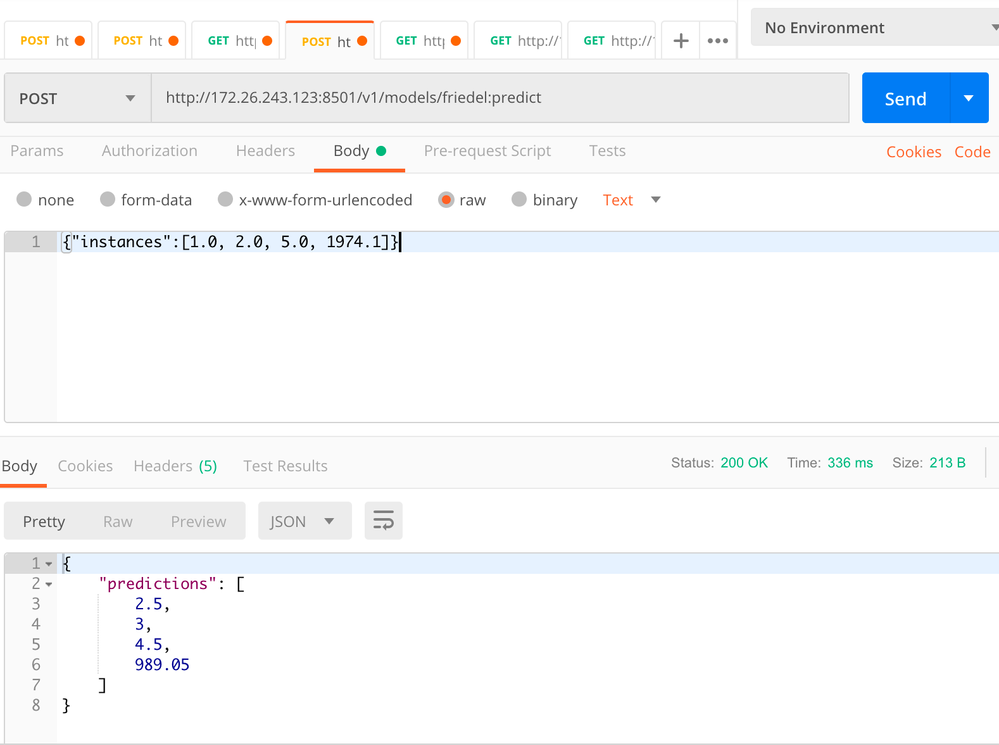

You can now start using the TensorFlow application, e.g. with the Postman UI in Chrome or from the command line:

curl -d '{"instances": [1.0, 2.0, 5.0]}' \
  -X POST http://hostname:8501/v1/models/half_plus_two:predict

{ "predictions": [2.5, 3.0, 4.5] }
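To sanity-check the returned predictions, the model's arithmetic (divide by two, then add two) can be reproduced locally. The Python sketch below builds the same request body and computes the expected values; the helper names are hypothetical and nothing here contacts the server.

```python
import json


def half_plus_two(x):
    """Reference implementation of the model: x / 2 + 2."""
    return x / 2 + 2


def build_predict_request(values):
    """Build the JSON body for the :predict endpoint."""
    return json.dumps({"instances": values})


if __name__ == "__main__":
    instances = [1.0, 2.0, 5.0]
    print(build_predict_request(instances))        # {"instances": [1.0, 2.0, 5.0]}
    print([half_plus_two(x) for x in instances])   # [2.5, 3.0, 4.5]
```

The computed list matches the predictions returned by the server above.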

Destroy the YARN Application

curl -X DELETE "http://localhost:8088/app/v1/services/tensorflow-serving?user.name=ambari-qa"

Created on 02-13-2019 02:07 PM


Hi frothkoetter,

Thanks for this amazing article. I am trying to replicate the same architecture. I want yarn to manage my serving containers. I followed everything from your article.

- Downloaded and updated the tensorflow/serving image

- Created a Yarn definition and posted the image to Yarn

It was accepted and I can see Yarn running my tensorflow serving image.

But when I try to create a proxy for yarn applications

httpd.conf

server {
    listen 8500;
    location / {
        proxy_pass http://my_cluster_yarn_master;
    }
}

server {
    listen 8501;
    location / {
        proxy_pass http://my_cluster_yarn_master;
    }
}

And when I run the docker for yarn proxy with the following docker command

docker run --name yarn.proxy --network=host \
  -v /home/centos/Amber-master/proxy/nginx.conf:/etc/nginx/nginx.conf \
  -v /home/centos/Amber-master/proxy/conf.d:/etc/nginx/conf.d \
  -p 8500:8500 \
  -p 8501:8501 \
  -d hortonworks/sandbox-proxy:1.0

I get the Bind error with the port 8501 and my reverse proxy docker container never starts. Kindly tell me if I am missing something. I am running in AWS and my yarn master already has an elastic IP associated with it.

Created on 05-27-2019 08:06 AM


Hello, and thanks for your article. I did not quite get what the role of HDP is here. I already have the HDP sandbox, and it has YARN on it. Where and when should I install Docker then? Thanks,