- The Changing Landscape of Data Ingest, Continuous ...

Created on 01-27-2020 01:35 AM - edited on 02-10-2020 11:57 PM by VidyaSargur

Organizations are considering a number of technology trends to take their business applications to the next level, cloud adoption and machine learning among them. However, one of the biggest changes we’ve observed among our customers is the drive to build and scale out streaming-based applications.

It’s a given that many organizations have legacy analytics systems built on traditional batch ingestion technology. Depending on the use case, that might be fine; but there are clear advantages to a real-time approach to data ingestion, processing, monitoring, and analysis.

Whilst we’re not suggesting that you completely ditch your batch processing systems (well, not just yet!), here are some questions to ask about existing applications that are designed this way:

- By the time you process the data and perform any analysis, is the data already out of date?

- What is the opportunity cost to the business of not having access to the latest information? Is there a cost benefit or competitive advantage in being able to respond to events or make decisions in real time? What are your competitors doing?

- What volume of data is being delivered, and over what time period does it need to be processed? Why not process the data continuously as it arrives to spread out the workload and deal with any issues in real time?

- Are you throwing data away because the current system can’t handle the ingestion and processing volume? What additional benefits could be realized if you could handle a higher throughput of data and larger data volumes?
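To make the first and third questions concrete, here is a minimal back-of-the-envelope sketch in Python. It compares how old each record is by the time it is processed under a nightly batch versus continuous processing. The workload (one record every 10 minutes), the 24-hour batch interval, and the 1-minute streaming latency are all illustrative assumptions, not measurements from any real system.

```python
from statistics import mean


def batch_staleness(arrival_times, batch_interval):
    """Age of each record when its batch runs.

    Each record waits until the end of the interval in which it arrived.
    """
    ages = []
    for t in arrival_times:
        # The batch covering this record fires at the next interval boundary.
        next_run = (t // batch_interval + 1) * batch_interval
        ages.append(next_run - t)
    return ages


def streaming_staleness(arrival_times, per_record_latency):
    """Age of each record when processed as it arrives: just the latency."""
    return [per_record_latency for _ in arrival_times]


# Hypothetical workload: one record every 10 minutes for a day (minutes).
arrivals = list(range(0, 24 * 60, 10))

batch_ages = batch_staleness(arrivals, batch_interval=24 * 60)      # nightly job
stream_ages = streaming_staleness(arrivals, per_record_latency=1)   # assumed

print("batch:  mean age", mean(batch_ages), "min, worst", max(batch_ages), "min")
print("stream: mean age", mean(stream_ages), "min, worst", max(stream_ages), "min")
print("records processed in one burst by the batch job:", len(batch_ages))
```

Under these assumptions the nightly batch sees data that is, on average, about half a day old and handles the full day's records in a single burst, whereas continuous processing keeps both the age of the data and the size of each unit of work small.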

Then there are new use cases driven by the Internet of Things (IoT), which are streaming patterns by definition. Over the past few years, the costs of sensors, bandwidth, and processing power have all dropped considerably, driving the adoption of IoT devices. IDC estimates there will be 46.6 billion connected IoT devices generating 79.4 zettabytes (ZB) of da... and that worldwide spending on the Internet of Things is forecast to reach $745 billion in 2019 alone.

IoT use cases include smart wearables, smart cities, smart homes, and smart cars. However, even if you don’t have a ‘smart’ project, real-time processing clearly benefits applications such as predictive maintenance, fraud detection, and cybersecurity. Adopting a stream processing architecture means organizations can deliver data products that act on events in real time and have a direct impact on the bottom line.

Cloudera has been supporting customers with streaming requirements, such as Bosch and Vodafone, for many years. Cloudera’s historical approach to building a streaming architecture combines Apache Flume and Apache Kafka in a so-called Flafka deployment.

Many of our customers use this architectural pattern in production systems today. Apache Flume is essentially an agent that is deployed, per source system, to deliver messages to Apache Kafka. Apache Kafka then acts as a publish-subscribe message broker.
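The division of labour in this pattern can be sketched with a toy, in-memory model in Python. This is not the Flume or Kafka API: `ToyBroker`, `ToyAgent`, the topic name, and the subscriber names are all hypothetical, chosen only to show one agent per source publishing into a shared topic while independent subscribers each read the full stream at their own pace.

```python
from collections import defaultdict


class ToyBroker:
    """In-memory stand-in for a publish-subscribe broker (not the Kafka API)."""

    def __init__(self):
        self._topics = defaultdict(list)   # topic -> append-only message log
        self._offsets = defaultdict(int)   # (subscriber, topic) -> next index

    def publish(self, topic, message):
        self._topics[topic].append(message)

    def poll(self, subscriber, topic):
        """Return messages this subscriber has not seen; advance its offset."""
        log = self._topics[topic]
        start = self._offsets[(subscriber, topic)]
        self._offsets[(subscriber, topic)] = len(log)
        return log[start:]


class ToyAgent:
    """Stand-in for a per-source agent that forwards records to the broker."""

    def __init__(self, source_name, broker, topic):
        self.source_name = source_name
        self.broker = broker
        self.topic = topic

    def deliver(self, record):
        self.broker.publish(
            self.topic, {"source": self.source_name, "body": record}
        )


broker = ToyBroker()
# One agent per source system, both writing to the same hypothetical topic.
web_agent = ToyAgent("web-server-1", broker, topic="logs")
app_agent = ToyAgent("app-server-1", broker, topic="logs")

web_agent.deliver("GET /index.html 200")
app_agent.deliver("order created")

# Two independent subscribers each receive every message published so far.
fraud_view = broker.poll("fraud-detector", "logs")
archive_view = broker.poll("archiver", "logs")

web_agent.deliver("GET /cart 500")
# A later poll returns only what this subscriber has not yet read.
fraud_second = broker.poll("fraud-detector", "logs")
```

The key design point the sketch tries to capture is decoupling: agents know only the topic they write to, and each subscriber tracks its own read position, so adding a new consumer does not require touching the source systems.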

However, there are some limitations with this setup.

- Apache Flume doesn’t come with a user interface (UI) or any method for deploying and managing agents at scale.

- Although Apache Flume supports using interceptors to manipulate and transform data, there is no UI for the transformation and data routing process.

- There is no embedded versioning, nor any graphical view of the lineage or provenance of data from source to target.
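To illustrate what interceptor-style transformation looks like when it lives in code rather than in a UI, here is a small conceptual sketch in Python. It is not Flume's Java interceptor interface; the event shape (a dict with `headers` and `body`), the function names, and the host name are hypothetical. Each step receives an event and returns it, possibly modified, or returns `None` to drop it.

```python
import re


def drop_debug_lines(event):
    """Filter step: returning None removes the event from the flow."""
    return None if event["body"].startswith("DEBUG") else event


def add_host_header(event):
    """Enrichment step: tag the event with a (hypothetical) host name."""
    event["headers"]["host"] = "sensor-gateway-1"
    return event


def mask_card_numbers(event):
    """Transformation step: keep only the last four digits of 16-digit runs."""
    event["body"] = re.sub(
        r"\b\d{12}(\d{4})\b", r"************\1", event["body"]
    )
    return event


def run_chain(events, interceptors):
    """Pass every event through each step in order; skip dropped events."""
    delivered = []
    for event in events:
        for intercept in interceptors:
            event = intercept(event)
            if event is None:
                break
        if event is not None:
            delivered.append(event)
    return delivered


incoming = [
    {"headers": {}, "body": "DEBUG heartbeat"},
    {"headers": {}, "body": "card 4111111111111111 charged"},
]
chain = [drop_debug_lines, add_host_header, mask_card_numbers]
delivered = run_chain(incoming, chain)
print(delivered)
```

Nothing in a chain like this records which version of the logic was running or how a given event was changed along the way, which is the gap the limitations above describe.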

Apache Flume continues to be supported by Cloudera in CDH 5, CDH 6, and HDP 2. However, these versions of the software reach end of support as follows:

- CDH 5: December 2020

- CDH 6: December 2022

- HDP 2: December 2020

Flume is already no longer supported in HDP 3.

You can review the end of support policies here for the respective platforms:

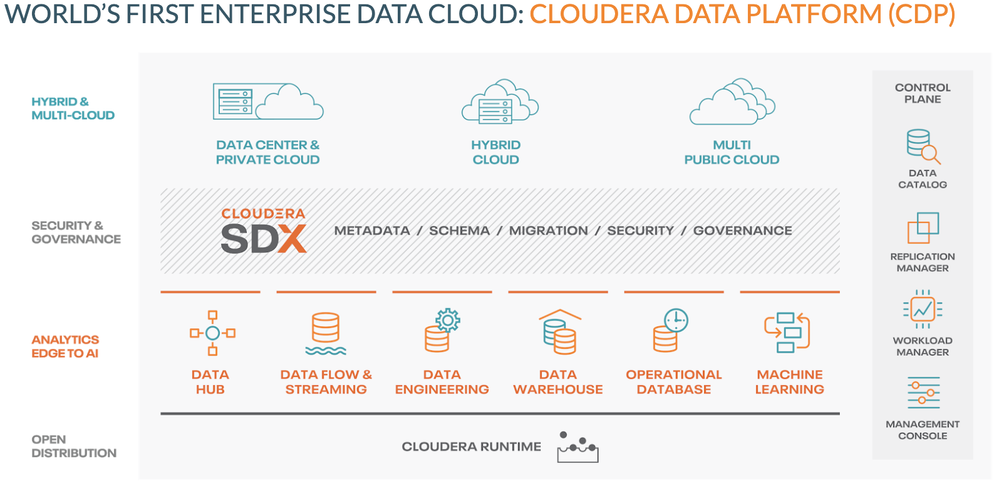

Moving forward, customers will need to upgrade their platforms to the Cloudera Data Platform (CDP), the new platform release from Cloudera.

However, Apache Flume has been deprecated in CDP and replaced with components from Cloudera DataFlow (CDF). In part 2 we’ll cover CDF in more detail and discuss some of the benefits of adopting this modern streaming architecture.