Transparent Data Encryption Explained: High-Level ...


Created on 03-02-2018 09:07 PM - edited 08-17-2019 08:30 AM

Introduction

Transparent Data Encryption (TDE) encrypts HDFS data on disk ("at rest"). It works through an interaction of multiple Hadoop components and security keys. Although the general idea may be easy to understand, major misconceptions can occur, and the detailed mechanics and many acronyms can get confusing. An accurate and detailed understanding is valuable, especially when working with security stakeholders, who often serve as strict gatekeepers for allowing technology into the enterprise.

Transparent Data Encryption: What it is and is not

TDE encrypts HDFS data on disk in a way that is transparent to the user: the user accesses encrypted HDFS data exactly as they would access unencrypted data. No knowledge or implementation changes are needed on the client side, and the user sees the data in its unencrypted form. TDE works at the file path, or encryption zone, level: all files written to designated paths (zones) are encrypted on disk.

The goal of TDE is to prevent anyone who is inappropriately trying to access data from doing so. It guards against threats such as someone finding a discarded disk in a dumpster or stealing one, or someone poking around HDFS who is not a user of the application or use case that accesses the data.

The goal of TDE is not to hide sensitive data elements from authorized users (e.g. masking social security numbers). Ranger policies for authorization, column masking and row filtering are for that. Note that Ranger policies such as masking can be applied on top of TDE data, because Ranger policies are applied after decryption. That again is the transparent part of TDE.

The table below provides a brief comparison of TDE with related approaches to data security.

| Data Security Approach | Description | Threat category |
|---|---|---|
| Encrypted drives & full disk encryption | The contents of the entire disk are encrypted. Access requires the proper authentication key or password. Accessed data is unencrypted. | Unintended access to hard drive contents. |
| Transparent Data Encryption on Hadoop | Individual HDFS files are encrypted. All files in designated paths (zones) are encrypted, whereas files outside these paths are not. Access requires the user, group or service to be included in a policy against the zone. Accessed data is unencrypted. Authorization includes read and/or write for the zone. | Unintended access to designated HDFS data on hard drives. |
| Ranger access policies (e.g. authorization to HDFS paths, Hive column masking, row filtering, etc.) | Not related to encryption. Access requires user or group inclusion in an authorization policy. Accessed data is the full file, table, etc., or masked columns or filtered rows. Authorization includes read, write, create table, etc. | Viewing HDFS data or data elements that are inappropriate for the user (e.g. social security numbers). |

Accessing TDE Data: High Level View

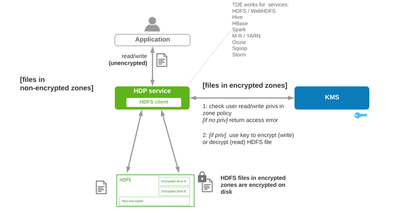

In Hadoop, users access HDFS through a service (HDFS, Hive, etc.). The service accesses HDFS data through its own HDFS client, which is abstracted from the user. For files written to or read from encryption zones, the service’s HDFS client contacts a Key Management Server (KMS), which validates the user's and/or service's read/write permissions on the zone and, if access is permitted, supplies the key material needed to encrypt (write) or decrypt (read) the file.

For a Hive table, for example, the encryption zone would be the path to the table data, and the Hive service would need read and/or write permission on that zone.

The KMS master key is called the Encryption Zone Key (EZK), and encrypting/decrypting involves two additional keys and a detailed sequence of events. This sequence is described below. Before that, though, let’s get to know the acronyms and technology components involved, and then put all of the pieces together.

One note on encryption zones: EZs can be nested within parent EZs, i.e. an EZ can hold encrypted files as well as a child EZ that itself holds encrypted files, and so on.
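Nesting raises a practical question: which zone's key applies to a given file? The sketch below assumes, as we understand current HDFS behavior, that a file is governed by its closest (innermost) enclosing zone. The helper `zone_for_path` and the example paths are hypothetical; HDFS resolves this internally on the NameNode.

```python
import posixpath
from typing import Iterable, Optional


def zone_for_path(path: str, zone_roots: Iterable[str]) -> Optional[str]:
    """Return the innermost encryption zone root that contains `path`.

    Hypothetical helper: matches whole path components, so "/data/secure"
    contains "/data/secure/x" but not "/data/secureX/x".
    """
    path = posixpath.normpath(path)
    best: Optional[str] = None
    for root in zone_roots:
        root = posixpath.normpath(root)
        prefix = root if root.endswith("/") else root + "/"
        if path == root or path.startswith(prefix):
            # A longer matching root is a deeper, more specific zone.
            if best is None or len(root) > len(best):
                best = root
    return best


zones = ["/data/secure", "/data/secure/pii"]
print(zone_for_path("/data/secure/pii/ssn.csv", zones))  # /data/secure/pii
print(zone_for_path("/data/secure/sales.csv", zones))    # /data/secure
print(zone_for_path("/data/public/readme.txt", zones))   # None
```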

Acronym Soup

Let’s first name the acronyms before explaining them.

TDE: Transparent Data Encryption

EZ: Encryption Zone

KMS: Key Management Server (or Service). Ranger KMS is part of the Hortonworks stack.

HSM: Hardware Security Module

EZK: Encryption Zone Key

DEK: Data Encryption Key

EDEK: Encrypted Data Encryption Key

The Puzzle Pieces

In this section let’s just look at the pieces of the puzzle before putting them all together. Let’s first look at the three kinds of keys and how they map to the technology components on Hadoop that are involved in TDE.

Key Types

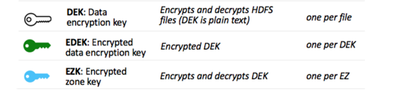

Three key types are involved in encrypting and decrypting files.

The main idea is that each file in an EZ is encrypted and decrypted by a DEK, and each file has its own unique DEK.

The DEK is encrypted into an EDEK, and decrypted back to the DEK, by the EZK of the EZ to which the file belongs. Each zone has a single EZK of its own. (We will see that EZKs can be rotated, in which case the new EZK keeps its name but receives an incremented version number.)
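The DEK/EZK relationship is a form of envelope encryption: a per-file key is itself encrypted under a per-zone key. The sketch below illustrates it under stated assumptions. `toy_cipher` is an HMAC-SHA256 counter-mode keystream used only as a stand-in for AES-CTR (which we understand HDFS uses) so that the example runs on the Python standard library; it is not production cryptography. The `EDEK` fields and the key name `sales_key` are hypothetical.

```python
import hashlib
import hmac
import secrets
from dataclasses import dataclass


def toy_cipher(key: bytes, iv: bytes, data: bytes) -> bytes:
    """XOR `data` with an HMAC-SHA256 counter-mode keystream.

    Illustrative stand-in for AES-CTR only. Because it is an XOR stream
    cipher, the same call both encrypts and decrypts.
    """
    out = bytearray()
    for offset in range(0, len(data), 32):
        counter = (offset // 32).to_bytes(8, "big")
        pad = hmac.new(key, iv + counter, hashlib.sha256).digest()
        out.extend(b ^ p for b, p in zip(data[offset:offset + 32], pad))
    return bytes(out)


@dataclass(frozen=True)
class EDEK:
    key_name: str      # name of the zone's EZK
    key_version: int   # EZK version used to wrap the DEK
    iv: bytes
    wrapped_dek: bytes


def wrap_dek(ezk: bytes, key_name: str, key_version: int, dek: bytes) -> EDEK:
    """Encrypt a file's DEK under the zone's EZK, producing an EDEK."""
    iv = secrets.token_bytes(16)
    return EDEK(key_name, key_version, iv, toy_cipher(ezk, iv, dek))


def unwrap_dek(ezk: bytes, edek: EDEK) -> bytes:
    """Recover the DEK from an EDEK using the same EZK."""
    return toy_cipher(ezk, edek.iv, edek.wrapped_dek)


# One EZK for the zone, one fresh DEK per file.
ezk = secrets.token_bytes(32)
dek_file_a = secrets.token_bytes(32)
dek_file_b = secrets.token_bytes(32)
edek_a = wrap_dek(ezk, "sales_key", 0, dek_file_a)
edek_b = wrap_dek(ezk, "sales_key", 0, dek_file_b)
print(edek_a.key_name, edek_a.key_version)  # sales_key 0
```

Only the EZK has to be protected centrally; the many per-file DEKs can be stored next to the data in wrapped form.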

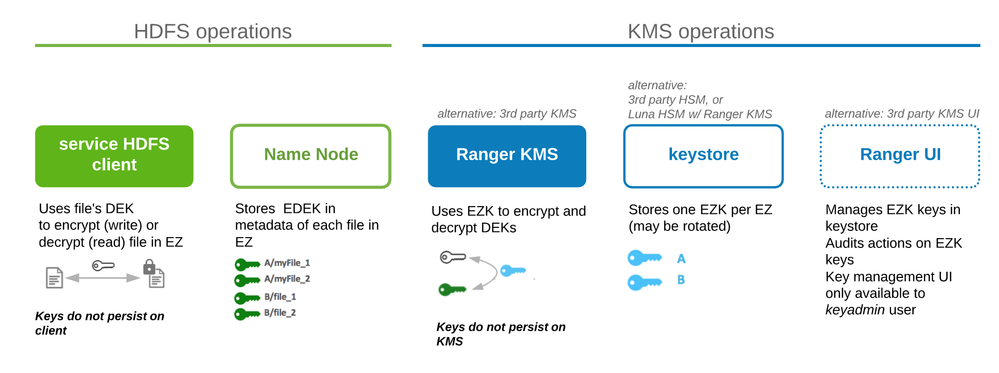

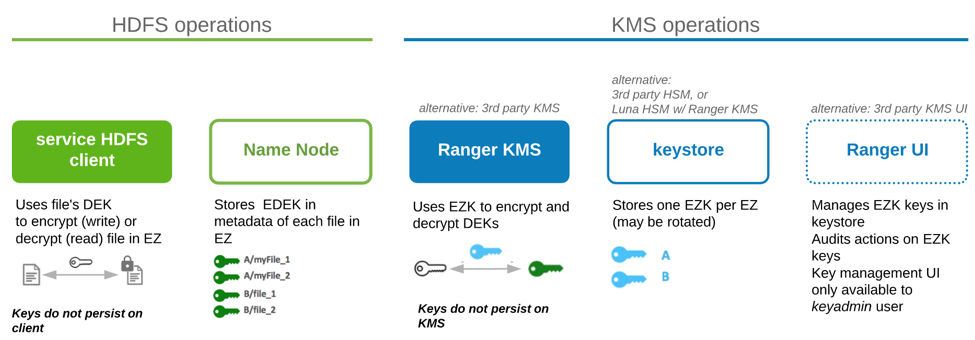

Hadoop Components

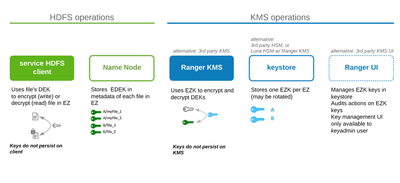

Five Hadoop components are involved in TDE.

Service HDFS client: Uses a file’s DEK to encrypt/decrypt the file.

NameNode: Stores each file’s EDEK (encrypted DEK) in the file’s metadata.

Ranger KMS: Uses a zone’s EZK to encrypt a DEK to an EDEK or decrypt an EDEK to a DEK.

Keystore: Stores each zone’s EZK.

Ranger UI: UI tool for a special admin (keyadmin) to manage EZKs (create, name, rotate) and the policies against each EZK (which users, groups and services can use it to read and/or write files in the EZ).

Notes

- Ranger KMS and the Ranger UI are part of the Hortonworks Hadoop stack, but 3rd-party KMSs may be used.

- The keystore is a Java keystore native to Ranger and thus software based. Compliance requirements such as PCI, which financial institutions must meet, demand that keys be managed and stored in hardware (an HSM), which is deemed more secure. In this case Ranger KMS can integrate with a SafeNet Luna HSM.

Implementing TDE: Create encryption zone

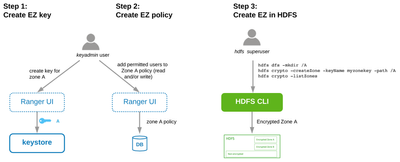

Below are the steps to create an encryption zone. All files written to an encryption zone will be encrypted.

First, the keyadmin user uses the Ranger UI to create and name an EZK for a particular zone. Then the keyadmin applies a policy against that key, and thus against the zone, defining who can read and/or write to the zone. The "who" here can be services (e.g. Hive) as well as users and groups, which can be synced from an enterprise AD/LDAP instance.

Next, an HDFS superuser runs the hdfs commands shown above to create the zone in HDFS. At this point, files can be transparently written (encrypted) to the zone and read (decrypted) from it.
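The keyadmin's policy can be pictured as a lookup from a key to the principals allowed to use it and for what. The sketch below follows the article's simplified read/write model; `KeyPolicy`, the key name and the principals are hypothetical, and real Ranger KMS policies use their own, finer-grained permission names.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class KeyPolicy:
    """Hypothetical policy on one EZK: who may read and/or write its zone."""
    users: dict[str, set[str]] = field(default_factory=dict)
    groups: dict[str, set[str]] = field(default_factory=dict)

    def allows(self, user: str, user_groups: list[str], action: str) -> bool:
        """True if the user, or any group the user belongs to, has `action`."""
        if action in self.users.get(user, set()):
            return True
        return any(action in self.groups.get(g, set()) for g in user_groups)


# The Hive service may read and write; the analysts group
# (e.g. synced from AD/LDAP) may only read.
policies = {
    "sales_key": KeyPolicy(
        users={"hive": {"read", "write"}},
        groups={"analysts": {"read"}},
    )
}

print(policies["sales_key"].allows("hive", [], "write"))             # True
print(policies["sales_key"].allows("alice", ["analysts"], "write"))  # False
```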

Recall the importance of the transparent aspect: applications (e.g. SQL client accessing Hive) need no special implementation to encrypt/decrypt to/from disk because that implementation is abstracted away from the application client. The details of how that works are shown next.

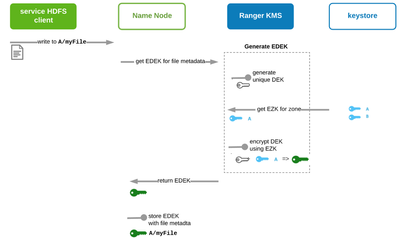

Write Encrypted File (Part 1): generate file-level EDEK and store on Name Node

When a file is written to an encryption zone an EDEK (which encrypts a DEK unique to the file) is created and stored in the Name Node metadata for that file. This EDEK will be involved with both writing the encrypted file and decrypting it when reading.

When the service HDFS client tells the NameNode it wants to write a file to the EZ, the NameNode requests that the KMS return a unique DEK encrypted as an EDEK. The KMS does this by generating a unique DEK, pulling the EZK for the file’s intended zone from the keystore, and using it to encrypt the DEK to an EDEK. This EDEK is returned to the NameNode and stored along with the rest of the file’s metadata.
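This first step can be modeled in a few lines. The sketch assumes hypothetical `ToyKMS` and `ToyNameNode` classes, ignores nested zones, and uses a toy HMAC-SHA256 keystream as a stand-in for real encryption (not production cryptography). What it illustrates is the division of labor: the KMS generates the DEK and wraps it with the latest EZK version, only the EDEK leaves the KMS, and the NameNode stores that EDEK with the file's metadata. Files outside any zone get no EDEK.

```python
from __future__ import annotations

import hashlib
import hmac
import secrets


def toy_cipher(key: bytes, iv: bytes, data: bytes) -> bytes:
    """HMAC-SHA256 keystream XOR; an illustrative stand-in for AES-CTR."""
    out = bytearray()
    for offset in range(0, len(data), 32):
        counter = (offset // 32).to_bytes(8, "big")
        pad = hmac.new(key, iv + counter, hashlib.sha256).digest()
        out.extend(b ^ p for b, p in zip(data[offset:offset + 32], pad))
    return bytes(out)


class ToyKMS:
    """Hypothetical KMS: its keystore holds every version of each EZK."""

    def __init__(self) -> None:
        self._keystore: dict[str, list[bytes]] = {}

    def create_key(self, name: str) -> None:
        self._keystore[name] = [secrets.token_bytes(32)]  # version 0

    def generate_edek(self, name: str) -> dict:
        """Create a fresh DEK, wrap it with the latest EZK, return only the EDEK."""
        version = len(self._keystore[name]) - 1
        ezk = self._keystore[name][version]
        dek = secrets.token_bytes(32)
        iv = secrets.token_bytes(16)
        # The plaintext DEK is not returned; only its encrypted form leaves the KMS.
        return {"key_name": name, "version": version, "iv": iv,
                "wrapped_dek": toy_cipher(ezk, iv, dek)}


class ToyNameNode:
    """Hypothetical NameNode: maps zone roots to key names, stores file metadata."""

    def __init__(self, kms: ToyKMS) -> None:
        self.kms = kms
        self.zones: dict[str, str] = {}      # zone root -> EZK name
        self.metadata: dict[str, dict] = {}  # file path -> metadata

    def create_file(self, path: str) -> dict:
        meta: dict = {"edek": None}
        for root, key_name in self.zones.items():
            if path.startswith(root.rstrip("/") + "/"):
                meta["edek"] = self.kms.generate_edek(key_name)
                break
        self.metadata[path] = meta
        return meta


kms = ToyKMS()
kms.create_key("sales_key")
nn = ToyNameNode(kms)
nn.zones["/data/sales"] = "sales_key"
nn.create_file("/data/sales/2018.csv")  # inside the zone: gets an EDEK
nn.create_file("/tmp/scratch.txt")      # outside any zone: no EDEK
```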

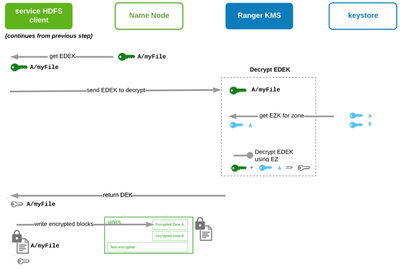

Write Encrypted File (Part 2): decrypt EDEK to use DEK for encrypting file

The immediate next step is for the service HDFS client to take the file's EDEK from the NameNode and have the KMS decrypt it back to the DEK, which the client then uses to encrypt the file as it is written to HDFS.

The process is shown in the diagram above. The KMS again uses the EZK, but this time to decrypt the EDEK to the DEK. When the service HDFS client retrieves the DEK, it uses it to encrypt the file blocks written to HDFS. (These encrypted blocks are replicated as-is.)
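A minimal sketch of this second step, assuming a hypothetical single-zone `ToyKMS` that refuses to unwrap EDEKs for callers outside its policy, and a hypothetical `client_write` function for the service HDFS client. The cipher is the same toy HMAC-based stand-in for AES-CTR. `file_iv` models a per-file IV that, as we understand it, HDFS keeps in the file's metadata next to the EDEK.

```python
from __future__ import annotations

import hashlib
import hmac
import secrets


def toy_cipher(key: bytes, iv: bytes, data: bytes) -> bytes:
    """HMAC-SHA256 keystream XOR; an illustrative stand-in for AES-CTR."""
    out = bytearray()
    for offset in range(0, len(data), 32):
        counter = (offset // 32).to_bytes(8, "big")
        pad = hmac.new(key, iv + counter, hashlib.sha256).digest()
        out.extend(b ^ p for b, p in zip(data[offset:offset + 32], pad))
    return bytes(out)


class ToyKMS:
    """Hypothetical KMS holding one zone's EZK and the users allowed to use it."""

    def __init__(self, allowed_users: set[str]) -> None:
        self._ezk = secrets.token_bytes(32)
        self._allowed = allowed_users

    def generate_edek(self) -> tuple[bytes, bytes]:
        """Part 1: a fresh DEK, returned only in wrapped (EDEK) form."""
        wrap_iv = secrets.token_bytes(16)
        return wrap_iv, toy_cipher(self._ezk, wrap_iv, secrets.token_bytes(32))

    def decrypt_edek(self, user: str, edek: tuple[bytes, bytes]) -> bytes:
        """Part 2: unwrap the EDEK to its DEK, but only for authorized callers."""
        if user not in self._allowed:
            raise PermissionError(f"{user} is not allowed to use this zone key")
        wrap_iv, wrapped = edek
        return toy_cipher(self._ezk, wrap_iv, wrapped)


def client_write(kms: ToyKMS, user: str, edek: tuple[bytes, bytes],
                 file_iv: bytes, plaintext: bytes) -> bytes:
    """Service HDFS client: fetch the DEK from the KMS and encrypt the data.

    The returned bytes are what the DataNodes store (and replicate) as-is.
    """
    dek = kms.decrypt_edek(user, edek)
    return toy_cipher(dek, file_iv, plaintext)


kms = ToyKMS(allowed_users={"hive"})
edek = kms.generate_edek()         # stored in the file's NameNode metadata
file_iv = secrets.token_bytes(16)  # per-file IV, also kept in metadata
stored = client_write(kms, "hive", edek, file_iv, b"id,amount\n1,100\n")
```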

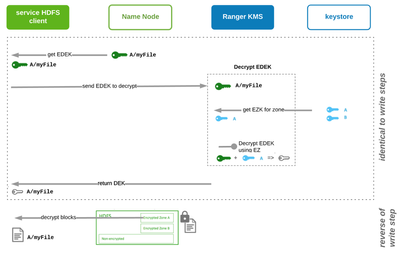

Read Encrypted File: decrypt EDEK and use DEK to decrypt file

Reading the encrypted file runs the same process as writing (sending the EDEK to the KMS, which returns the DEK). This time, the DEK is used to decrypt the file. The decrypted file is returned to the user, who, transparently, need not know or do anything special to access the file in HDFS. The process is shown in the accompanying diagram.
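An end-to-end sketch of writing and then reading a file follows. Module-level dictionaries act as hypothetical stand-ins for NameNode metadata and DataNode disks, the cipher is the toy HMAC-based stand-in for AES-CTR, and permission checks are omitted. It also illustrates the threat TDE addresses: anyone holding only the raw bytes on disk, without the KMS releasing the DEK, sees ciphertext.

```python
from __future__ import annotations

import hashlib
import hmac
import secrets


def toy_cipher(key: bytes, iv: bytes, data: bytes) -> bytes:
    """HMAC-SHA256 keystream XOR; an illustrative stand-in for AES-CTR."""
    out = bytearray()
    for offset in range(0, len(data), 32):
        counter = (offset // 32).to_bytes(8, "big")
        pad = hmac.new(key, iv + counter, hashlib.sha256).digest()
        out.extend(b ^ p for b, p in zip(data[offset:offset + 32], pad))
    return bytes(out)


EZK = secrets.token_bytes(32)        # known only to the (hypothetical) KMS
namenode_meta: dict[str, dict] = {}  # path -> {"edek": ..., "file_iv": ...}
datanode_disk: dict[str, bytes] = {} # path -> bytes as stored on disk


def kms_decrypt_edek(edek: tuple[bytes, bytes]) -> bytes:
    """KMS side: unwrap an EDEK with the EZK (permission checks omitted)."""
    wrap_iv, wrapped = edek
    return toy_cipher(EZK, wrap_iv, wrapped)


def write_file(path: str, data: bytes) -> None:
    wrap_iv = secrets.token_bytes(16)
    edek = (wrap_iv, toy_cipher(EZK, wrap_iv, secrets.token_bytes(32)))
    file_iv = secrets.token_bytes(16)
    namenode_meta[path] = {"edek": edek, "file_iv": file_iv}
    dek = kms_decrypt_edek(edek)
    datanode_disk[path] = toy_cipher(dek, file_iv, data)


def read_file(path: str) -> bytes:
    meta = namenode_meta[path]            # 1. client gets the EDEK from the NameNode
    dek = kms_decrypt_edek(meta["edek"])  # 2. KMS returns the DEK
    return toy_cipher(dek, meta["file_iv"], datanode_disk[path])  # 3. decrypt blocks


write_file("/data/sales/q1.csv", b"ssn,amount\n123-45-6789,100\n")
print(read_file("/data/sales/q1.csv"))      # plaintext for an authorized reader
print(datanode_disk["/data/sales/q1.csv"])  # ciphertext for a "disk in a dumpster"
```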

Miscellaneous

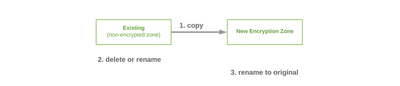

Encrypt Existing Files in HDFS

Existing files in HDFS cannot be encrypted in place. The files must be copied into an encryption zone (distcp is an effective way to do this, but other tools like NiFi or shell scripts with HDFS commands could be used). Deleting the original directory and renaming the encryption zone to the original directory name leaves the end state identical to the starting state, but with the files encrypted.
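The copy, delete and rename sequence can be modeled with a small hypothetical in-memory filesystem, `ToyHDFS`, that encrypts only on the write path (again with the HMAC-based stand-in for AES-CTR, not real cryptography). It shows why in-place encryption is not possible: files become encrypted only when they are re-written into the zone. With real distcp, the Apache Hadoop TDE documentation advises the `-skipcrccheck` and `-update` flags when copying into an encryption zone, because the checksums of encrypted and unencrypted copies will not match.

```python
from __future__ import annotations

import hashlib
import hmac
import secrets


def toy_cipher(key: bytes, iv: bytes, data: bytes) -> bytes:
    """HMAC-SHA256 keystream XOR; an illustrative stand-in for AES-CTR."""
    out = bytearray()
    for offset in range(0, len(data), 32):
        counter = (offset // 32).to_bytes(8, "big")
        pad = hmac.new(key, iv + counter, hashlib.sha256).digest()
        out.extend(b ^ p for b, p in zip(data[offset:offset + 32], pad))
    return bytes(out)


class ToyHDFS:
    """Hypothetical in-memory HDFS with one encryption zone."""

    def __init__(self) -> None:
        self.zone_root: str | None = None        # set when the zone is created
        self._ezk = secrets.token_bytes(32)      # stands in for the zone's EZK
        self.disk: dict[str, bytes] = {}         # path -> bytes on DataNodes
        self.meta: dict[str, tuple | None] = {}  # path -> (wrap_iv, EDEK, file_iv)

    def _in_zone(self, path: str) -> bool:
        return self.zone_root is not None and path.startswith(self.zone_root + "/")

    def write(self, path: str, data: bytes) -> None:
        if self._in_zone(path):  # encryption happens only here, at write time
            dek = secrets.token_bytes(32)
            wrap_iv, file_iv = secrets.token_bytes(16), secrets.token_bytes(16)
            self.meta[path] = (wrap_iv, toy_cipher(self._ezk, wrap_iv, dek), file_iv)
            self.disk[path] = toy_cipher(dek, file_iv, data)
        else:
            self.meta[path] = None
            self.disk[path] = data

    def read(self, path: str) -> bytes:
        info = self.meta[path]
        if info is None:
            return self.disk[path]
        wrap_iv, edek, file_iv = info
        dek = toy_cipher(self._ezk, wrap_iv, edek)
        return toy_cipher(dek, file_iv, self.disk[path])

    def delete(self, path: str) -> None:
        del self.disk[path], self.meta[path]

    def rename_dir(self, src: str, dst: str) -> None:
        """Move every file under src to dst; the zone moves with its root."""
        for path in [p for p in self.disk if p.startswith(src + "/")]:
            new_path = dst + path[len(src):]
            self.disk[new_path] = self.disk.pop(path)
            self.meta[new_path] = self.meta.pop(path)
        if self.zone_root == src:
            self.zone_root = dst


def encrypt_existing(fs: ToyHDFS, src_dir: str, zone_dir: str) -> None:
    """Copy files into the zone (encrypting them), delete originals, rename zone."""
    for path in [p for p in fs.disk if p.startswith(src_dir + "/")]:
        fs.write(zone_dir + path[len(src_dir):], fs.read(path))
        fs.delete(path)
    fs.rename_dir(zone_dir, src_dir)


fs = ToyHDFS()
fs.write("/data/sales/q1.csv", b"id,amount\n1,100\n")  # written before TDE: plaintext
fs.zone_root = "/data/sales_ez"                        # new, empty encryption zone
encrypt_existing(fs, "/data/sales", "/data/sales_ez")
```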

Rolling Keys

Enterprises often require keys to be rolled (replaced) at intervals, typically every 2 years or so. Rolling EZKs does NOT require existing encrypted files to be re-encrypted. It works as follows:

- The keyadmin updates the EZK via the Ranger UI. The name is retained and the KMS increments the key to a new version.

- EDEKs on the NameNode are re-encrypted (the KMS decrypts each EDEK with the old key, encrypts the resulting DEK with the new key, and places the resulting EDEK back in the NameNode file metadata).

- All new writes and reads of existing files use the new EZK.
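A minimal sketch of the roll and re-encrypt steps, assuming a hypothetical `ToyKMS` that keeps every EZK version (so an EDEK wrapped under an older version stays decryptable until it is re-encrypted) and the same HMAC-based stand-in cipher. The point it shows: only the small EDEK is re-wrapped; the DEK, and therefore the file blocks on the DataNodes, stay unchanged.

```python
from __future__ import annotations

import hashlib
import hmac
import secrets


def toy_cipher(key: bytes, iv: bytes, data: bytes) -> bytes:
    """HMAC-SHA256 keystream XOR; an illustrative stand-in for AES-CTR."""
    out = bytearray()
    for offset in range(0, len(data), 32):
        counter = (offset // 32).to_bytes(8, "big")
        pad = hmac.new(key, iv + counter, hashlib.sha256).digest()
        out.extend(b ^ p for b, p in zip(data[offset:offset + 32], pad))
    return bytes(out)


class ToyKMS:
    """Hypothetical KMS holding all versions of one named EZK."""

    def __init__(self) -> None:
        self.versions: list[bytes] = [secrets.token_bytes(32)]  # version 0

    def roll(self) -> int:
        """keyadmin rolls the key: same name, new version appended."""
        self.versions.append(secrets.token_bytes(32))
        return len(self.versions) - 1

    def wrap(self, dek: bytes) -> dict:
        """Encrypt a DEK with the newest EZK version, producing an EDEK."""
        version = len(self.versions) - 1
        iv = secrets.token_bytes(16)
        return {"version": version, "iv": iv,
                "wrapped": toy_cipher(self.versions[version], iv, dek)}

    def unwrap(self, edek: dict) -> bytes:
        """Decrypt an EDEK with whichever EZK version wrapped it."""
        return toy_cipher(self.versions[edek["version"]], edek["iv"], edek["wrapped"])

    def reencrypt(self, edek: dict) -> dict:
        """Decrypt with the old version, re-encrypt with the newest one."""
        return self.wrap(self.unwrap(edek))


kms = ToyKMS()
dek = secrets.token_bytes(32)
file_iv = secrets.token_bytes(16)
edek = kms.wrap(dek)                                 # version 0, on the NameNode
blocks = toy_cipher(dek, file_iv, b"file contents")  # on the DataNodes

kms.roll()                  # the EZK is now at version 1
edek = kms.reencrypt(edek)  # only the EDEK changes; `blocks` is untouched
print(edek["version"])      # 1
```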

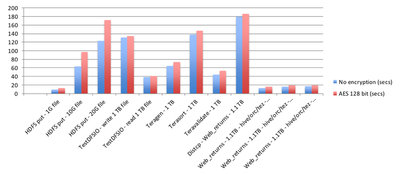

TDE performance benchmarking

TDE does affect performance, but the impact is not extreme. In general, writes are affected more than reads, which is fortunate because performance SLAs usually focus more on users reading data than on users or batch processes writing it. A representative benchmark is shown in the accompanying chart.

Encrypting Intermediate Map-Reduce or Tez Data

MapReduce and Tez write data to disk as intermediate steps in a multi-step job (Tez less so than MapReduce, which is one reason for its faster performance). Encrypting this intermediate data can be done by setting the configurations shown in this link. Encrypting intermediate data adds further performance overhead and is an especially cautious measure, since this data remains on disk only briefly and is much less likely to be compromised than HDFS data.

A Side Note on HBase

Apache HBase has its own encryption at rest framework that is similar to HDFS TDE (see link). This is not officially supported on HDP. Instead, encryption at rest for HBase should use HDFS TDE as described in this article and specified here.

References

TDE general

https://hortonworks.com/blog/new-in-hdp-2-3-enterprise-grade-hdfs-data-at-rest-encryption/ slide

https://hadoop.apache.org/docs/stable/hadoop-project-dist/hadoop-hdfs/TransparentEncryption.html

Ranger KMS

https://hadoop.apache.org/docs/stable/hadoop-kms/index.html

TDE Implementation Quick Steps

TDE Best Practices

Created on 10-27-2018 09:58 PM


Nice work Greg, a wonderful, clear and very detailed article!

Based on this and other articles of yours in this forum, it seems clear that the people of Hortonworks should seriously consider giving you a greater participation in the elaboration and supervision of the (currently outdated, fuzzy, inexact and overall low quality) material presented in their expensive official courses.

The difference in quality between his work and the work published there is so great that it is sometimes rude.

All my respects Mr!!

Created on 02-09-2019 12:51 PM


Thanks for this document. But I have one doubt here: while reading the file from HDFS, the client passes the EDEK to the KMS to get the DEK, then gets the blocks of that file from the DataNode and decrypts them using the DEK. So it could be possible that somebody in between sniffs and gets the DEK coming from the KMS, and also sniffs the data blocks coming from the DataNode. Both could then be used to decrypt the data. So how is this taken care of? What type of communication is used between the KMS and the client, so that no one in between can get the DEK?

Created on 08-29-2019 01:33 AM


great

Created on 10-03-2019 10:33 AM


this is great. Thanks sir.

Created on 10-10-2019 03:35 AM


This is really a nice article. Kudos to you.