Community Articles

- Cloudera Community

- Support

- Community Articles

- Update Zeppelin JDCB Interpreter To Support Solr S...

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Created on 11-04-2016 02:29 AM - edited 08-17-2019 08:29 AM

Objective

In many organizations "search" is a common requirement for a user friendly means of accessing data. When people thing of "search", they often think of Google. Many organizations use Solr as their enterprise search engine. It is commonly used to power public website search from within the site itself. Organizations will often build custom user interfaces to tailor queries to meet their internal or external end-user needs. In most of these scenarios, users are shielded from the complexity of the Solr query syntax.

Solr has a long list of features and capabilities; you can read more here Apache Solr Features. Solr 6 has a new feature which allows you to submit SQL queries via JDBC. This opens up new ways to interact with Solr. Using Zeppelin with SQL is now possible because of this new feature. This should make you more productive because you can use a language syntax with which you are already familiar: SQL!

This tutorial will guide you through the process of updating the Zeppelin JDBC interpreter configuration to enable submitting SQL queries to Solr via JDBC. We'll use the Hortonworks HDP 2.5 Docker sandbox and Apache Solr 6.2.1.

NOTE: Solr 6 is being deployed as a standalone application within the sandbox. HDP 2.5 ships with Solr 5.5.2 via HDPSearch which does not include the JDBC SQL functionality.

Prerequisites

- You should have already completed the following tutorial Installing Docker Version of Sandbox on Mac

- You should have already downloaded Apache Solr 6.2.1: Apache Solr 6.2.1

Scope

- Mac OS X 10.11.6 (El Capitan)

- Docker for Mac 1.12.1

- HDP 2.5 Docker Sandbox

- Apache Solr 6.2.1

Steps

Start Sandbox

If you completed the tutorial listed in the prerequisites, then you should be ready to start up your Docker sandbox container.

docker start sandbox

NOTE: If your container is still running from performing the other tutorial, you do not need to start it again.

Once the container is started, you need to login:

ssh -p 2222 root@localhost

Now you can start the services

/etc/init.d/startup_scripts start

NOTE: This process will take several minutes.

Create Solr user in the sandbox

We will be running the Solr process as the solr user. Let's create that user in our sandbox:

useradd -d /home/solr -s /bin/bash -U solr

Copy Solr archive file to sandbox

You should already have the Solr archive file downloaded. We will use scp to copy the file to the sandbox. You should do this in another terminal window as your current window should be logged into the sandbox. From your Mac run the following command:

scp -P 2222 ~/Downloads/solr-6.2.1.tgz root@localhost:/root/

NOTE: The ssh and scp commands use different parameters to specify the port and it's easy to confuse them. The ssh command uses -p to specify the port. The scp command uses -P to sepcify the port.

In my case, the Solr file was downloaded to ~/Downloads. Your location may be different.

Extract the Solr archive file

We'll run Solr out the /opt/ directory. This makes things a bit cleaner than using the installation script which places some files in /var.

cd /opt tar xvfz /vagrant/solr-6.2.1.tgz

Now we need to give the solr user ownership over the directory.

chown -R solr:solr /opt/solr-6.2.1/

Install JDK 8

Solr 6.x requires JDK 8 which is not on the current version of the sandbox. You will need to install it before you can run Solr.

yum install java-1.8.0-openjdk-devel

Start Solr

Now that Solr is installed, we can start up a SolrCloud instance. The Solr start script provides a handy way to start a 2 node SolrCloud cluster. The -e flag tells Solr to start the cloud example. The -noprompt flag tells Solr to use default values.

cd /opt/solr-6.2.1 bin/solr start -e cloud -noprompt Welcome to the SolrCloud example! Starting up 2 Solr nodes for your example SolrCloud cluster. Creating Solr home directory /opt/solr-6.2.1/example/cloud/node1/solr Cloning /opt/solr-6.2.1/example/cloud/node1 into /opt/solr-6.2.1/example/cloud/node2 Starting up Solr on port 8983 using command: bin/solr start -cloud -p 8983 -s "example/cloud/node1/solr" Waiting up to 30 seconds to see Solr running on port 8983 [\] Started Solr server on port 8983 (pid=4952). Happy searching! Starting up Solr on port 7574 using command: bin/solr start -cloud -p 7574 -s "example/cloud/node2/solr" -z localhost:9983 Waiting up to 30 seconds to see Solr running on port 7574 [|] Started Solr server on port 7574 (pid=5175). Happy searching! Connecting to ZooKeeper at localhost:9983 ... Uploading /opt/solr-6.2.1/server/solr/configsets/data_driven_schema_configs/conf for config gettingstarted to ZooKeeper at localhost:9983 Creating new collection 'gettingstarted' using command: http://localhost:8983/solr/admin/collections?action=CREATE&name=gettingstarted&numShards=2&replicati... { "responseHeader":{ "status":0, "QTime":28324}, "success":{ "192.168.56.151:8983_solr":{ "responseHeader":{ "status":0, "QTime":17801}, "core":"gettingstarted_shard1_replica1"}, "192.168.56.151:7574_solr":{ "responseHeader":{ "status":0, "QTime":18096}, "core":"gettingstarted_shard1_replica2"}}} Enabling auto soft-commits with maxTime 3 secs using the Config API POSTing request to Config API: http://localhost:8983/solr/gettingstarted/config {"set-property":{"updateHandler.autoSoftCommit.maxTime":"3000"}} Successfully set-property updateHandler.autoSoftCommit.maxTime to 3000 SolrCloud example running, please visit: http://localhost:8983/solr

As you can see from the output, we have 2 Solr instances. One instance is listening on port 8983 and the other is listening on 7574. They are using an embedded Zookeeper instance for coordination and it is listening on port 9983. If we were going to production, we would the HDP cluster Zookeeper instance for more reliability.

Index sample data

Now that our SolrCloud cluster is running, we can index sample data into the cluster. We'll execute our SQL queries against this data. Fortunately, Solr ships with a number of example data sets. For this tutorial index XML data which contains sample product information.

bin/post -c gettingstarted example/exampledocs/*.xml

This command posts the xml documents in the specified path. The -c option defines which collection to use. The command we used previously to create the SolrCloud cluster automatically created a gettingstarted collection using the data_driven_schema_configs configuration. This configuration is what we call schemaless because the fields are dynamically added to the collection. Without dynamic fields, you have to explicitly define every field you want to have in your collection.

You should see something like this:

bin/post -c gettingstarted example/exampledocs/*.xml /usr/lib/jvm/java/bin/java -classpath /opt/solr-6.2.1/dist/solr-core-6.2.1.jar -Dauto=yes -Dc=gettingstarted -Ddata=files org.apache.solr.util.SimplePostTool example/exampledocs/gb18030-example.xml example/exampledocs/hd.xml example/exampledocs/ipod_other.xml example/exampledocs/ipod_video.xml example/exampledocs/manufacturers.xml example/exampledocs/mem.xml example/exampledocs/money.xml example/exampledocs/monitor2.xml example/exampledocs/monitor.xml example/exampledocs/mp500.xml example/exampledocs/sd500.xml example/exampledocs/solr.xml example/exampledocs/utf8-example.xml example/exampledocs/vidcard.xml SimplePostTool version 5.0.0 Posting files to [base] url http://localhost:8983/solr/gettingstarted/update. Entering auto mode. File endings considered are xml,json,jsonl,csv,pdf,doc,docx,ppt,pptx,xls,xlsx,odt,odp,ods,ott,otp,ots,rtf,htm,html,txt,log POSTing file gb18030-example.xml (application/xml) to [base] POSTing file hd.xml (application/xml) to [base] POSTing file ipod_other.xml (application/xml) to [base] POSTing file ipod_video.xml (application/xml) to [base] POSTing file manufacturers.xml (application/xml) to [base] POSTing file mem.xml (application/xml) to [base] POSTing file money.xml (application/xml) to [base] POSTing file monitor2.xml (application/xml) to [base] POSTing file monitor.xml (application/xml) to [base] POSTing file mp500.xml (application/xml) to [base] POSTing file sd500.xml (application/xml) to [base] POSTing file solr.xml (application/xml) to [base] POSTing file utf8-example.xml (application/xml) to [base] POSTing file vidcard.xml (application/xml) to [base] 14 files indexed. COMMITting Solr index changes to http://localhost:8983/solr/gettingstarted/update. Time spent: 0:00:02.379

Query Solr data

Now we can use curl to run a test query against Solr. The following command will query the gettingstarted collection for all documents. It also returns the results as JSON instead of the default XML.

curl -XGET 'http://localhost:8983/solr/gettingstarted/select?q=*:*&wt=json&indent=true'

You should see something like this:

curl -XGET 'http://localhost:8983/solr/gettingstarted/select?q=*:*&wt=json&indent=true'

{

"responseHeader":{

"zkConnected":true,

"status":0,

"QTime":11,

"params":{

"q":"*:*",

"indent":"true",

"wt":"json"}},

"response":{"numFound":32,"start":0,"maxScore":1.0,"docs":[

{

"id":"GB18030TEST",

"name":["Test with some GB18030 encoded characters"],

"features":["No accents here",

"这是一个功能",

"This is a feature (translated)",

"这份文件是很有光泽",

"This document is very shiny (translated)"],

"price":[0.0],

"inStock":[true],

"_version_":1550023359021973504},

{

"id":"IW-02",

"name":["iPod & iPod Mini USB 2.0 Cable"],

"manu":["Belkin"],

"manu_id_s":"belkin",

"cat":["electronics",

"connector"],

"features":["car power adapter for iPod, white"],

"weight":[2.0],

"price":[11.5],

"popularity":[1],

"inStock":[false],

"store":["37.7752,-122.4232"],

"manufacturedate_dt":"2006-02-14T23:55:59Z",

"_version_":1550023359918505984},

{

"id":"MA147LL/A",

"name":["Apple 60 GB iPod with Video Playback Black"],

"manu":["Apple Computer Inc."],

"manu_id_s":"apple",

"cat":["electronics",

"music"],

"features":["iTunes, Podcasts, Audiobooks",

"Stores up to 15,000 songs, 25,000 photos, or 150 hours of video",

"2.5-inch, 320x240 color TFT LCD display with LED backlight",

"Up to 20 hours of battery life",

"Plays AAC, MP3, WAV, AIFF, Audible, Apple Lossless, H.264 video",

"Notes, Calendar, Phone book, Hold button, Date display, Photo wallet, Built-in games, JPEG photo playback, Upgradeable firmware, USB 2.0 compatibility, Playback speed control, Rechargeable capability, Battery level indication"],

"includes":["earbud headphones, USB cable"],

"weight":[5.5],

"price":[399.0],

"popularity":[10],

"inStock":[true],

"store":["37.7752,-100.0232"],

"manufacturedate_dt":"2005-10-12T08:00:00Z",

"_version_":1550023360204767232},

{

"id":"adata",

"compName_s":"A-Data Technology",

"address_s":"46221 Landing Parkway Fremont, CA 94538",

"_version_":1550023360573865984},

{

"id":"asus",

"compName_s":"ASUS Computer",

"address_s":"800 Corporate Way Fremont, CA 94539",

"_version_":1550023360584351744},

{

"id":"belkin",

"compName_s":"Belkin",

"address_s":"12045 E. Waterfront Drive Playa Vista, CA 90094",

"_version_":1550023360586448896},

{

"id":"maxtor",

"compName_s":"Maxtor Corporation",

"address_s":"920 Disc Drive Scotts Valley, CA 95066",

"_version_":1550023360587497472},

{

"id":"TWINX2048-3200PRO",

"name":["CORSAIR XMS 2GB (2 x 1GB) 184-Pin DDR SDRAM Unbuffered DDR 400 (PC 3200) Dual Channel Kit System Memory - Retail"],

"manu":["Corsair Microsystems Inc."],

"manu_id_s":"corsair",

"cat":["electronics",

"memory"],

"features":["CAS latency 2, 2-3-3-6 timing, 2.75v, unbuffered, heat-spreader"],

"price":[185.0],

"popularity":[5],

"inStock":[true],

"store":["37.7752,-122.4232"],

"manufacturedate_dt":"2006-02-13T15:26:37Z",

"payloads":["electronics|6.0 memory|3.0"],

"_version_":1550023360602177536},

{

"id":"VS1GB400C3",

"name":["CORSAIR ValueSelect 1GB 184-Pin DDR SDRAM Unbuffered DDR 400 (PC 3200) System Memory - Retail"],

"manu":["Corsair Microsystems Inc."],

"manu_id_s":"corsair",

"cat":["electronics",

"memory"],

"price":[74.99],

"popularity":[7],

"inStock":[true],

"store":["37.7752,-100.0232"],

"manufacturedate_dt":"2006-02-13T15:26:37Z",

"payloads":["electronics|4.0 memory|2.0"],

"_version_":1550023360647266304},

{

"id":"VDBDB1A16",

"name":["A-DATA V-Series 1GB 184-Pin DDR SDRAM Unbuffered DDR 400 (PC 3200) System Memory - OEM"],

"manu":["A-DATA Technology Inc."],

"manu_id_s":"corsair",

"cat":["electronics",

"memory"],

"features":["CAS latency 3, 2.7v"],

"popularity":[0],

"inStock":[true],

"store":["45.18414,-93.88141"],

"manufacturedate_dt":"2006-02-13T15:26:37Z",

"payloads":["electronics|0.9 memory|0.1"],

"_version_":1550023360648314880}]

}}

By default Solr will return the top 10 documents. If you look at the top of the results, you will notice there are 32 documents in our collection.

...

"response":{"numFound":32,"start":0,"maxScore":1.0,"docs":[

...

Modify Zeppelin JDBC interpreter

Now we need to modify the existing JDBC interpreter in Zeppelin. By default, this interpreter will work with Hive, Postgres and Phoenix. We will be adding Solr to the configuration.

Open the Zeppelin UI. You can either use the link in Ambari or directly via http://localhost:9995. You should see something like this:

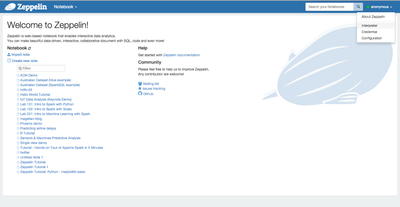

Click on the user menu in the upper right. You are logged into Zeppelin as anonymous. You should see a menu like this:

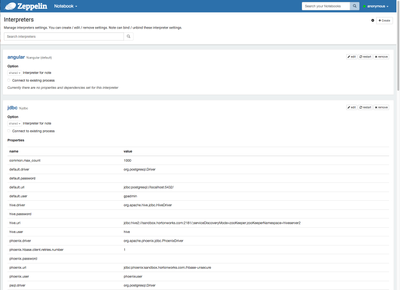

Click on the Interpreter link. You should see something like this:

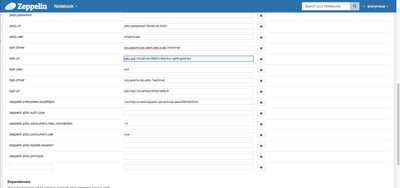

You should see the jdbc interpreter near the top of the list. If you don't, you can either scroll down or use the build-in search feature at the top of the patch. You should click on the edit button for the jdbc interpreter. You will notice the screen changes to allow you to add new properties or modify existing ones. You should see something like this:

Scroll down until you see the empty entry line. You should see something like this:

We need to add 3 properities/values here.

solr.url jdbc:solr://localhost:9983?collection=gettingstarted

solr.user solr

solr.driver org.apache.solr.client.solrj.io.sql.DriverImpl

Why are we using port 9983? That is because we are in SolrCloud mode. We are pointing to the Zookeeper instance. If one of the nodes goes down, Zookeeper will know and direct us to a node that is working.

Add each of these properties and click the + button after each entry. You should now have 3 new properties in your list:

Now we need to add an artifact to the Dependencies section. It's just below the properties. We are going to add the following:

org.apache.solr:solr-solrj:6.2.1

Click the + button. You should see something like this:

Now click the blue Save button to save the changes.

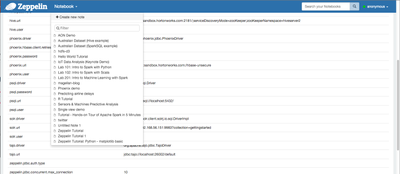

Create a new notebook

Now that we have our JDBC interpreter updated, we are going to create a new notebook. Click the Notebook drop down menu in the upper left. You should see something like this:

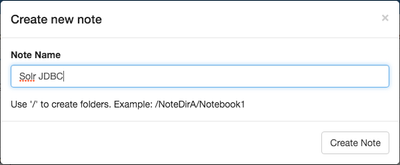

Click the + Create a new note link. You should see something like this:

Give the notebook the name Solr JDBC, then click the Create Note button.

You should see something like this:

We can query Solr using a prefix for jdbc like %jdbc(solr). The prefix refers to the name of the prefix of the properties in the JDBC interpreter we setup. If you recall, there were properties like:

solr.url phoenix.url hive.url psql.url

Our prefix is solr. Create the following query as the first note:

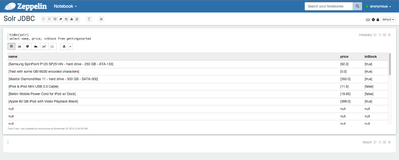

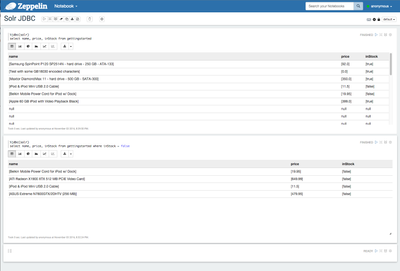

%jdbc(solr) select name, price, inStock from gettingstarted

Now click the run arrow icon. This will run the query against Solr and return results if our configuration is correct. You should see something like this:

Now add another note below our first one with the following query:

%jdbc(solr) select name, price, inStock from gettingstarted where inStock = false

You should see something like this:

And finally add one more note below our second one with the following query:

%jdbc(solr) select price, count(*) from gettingstarted group by price order by price desc

You should see something like this:

As you can see it was easy to simple queries and more complex aggregations using pure SQL. For comparison, here is Solr query that does the same thing as our second note:

curl -XGET 'http://localhost:8983/solr/gettingstarted/select?fl=price,name,inStock&indent=on&q=inStock:true&wt=json'

If you ran this command in the terminal, you should see something like this:

curl -XGET 'http://localhost:8983/solr/gettingstarted/select?fl=price,name,inStock&indent=on&q=inStock:true&wt=json'

{

"responseHeader":{

"zkConnected":true,

"status":0,

"QTime":16,

"params":{

"q":"inStock:true",

"indent":"on",

"fl":"price,name,inStock",

"wt":"json"}},

"response":{"numFound":17,"start":0,"maxScore":0.2578291,"docs":[

{

"name":["Test with some GB18030 encoded characters"],

"price":[0.0],

"inStock":[true]},

{

"name":["Apple 60 GB iPod with Video Playback Black"],

"price":[399.0],

"inStock":[true]},

{

"name":["CORSAIR XMS 2GB (2 x 1GB) 184-Pin DDR SDRAM Unbuffered DDR 400 (PC 3200) Dual Channel Kit System Memory - Retail"],

"price":[185.0],

"inStock":[true]},

{

"name":["CORSAIR ValueSelect 1GB 184-Pin DDR SDRAM Unbuffered DDR 400 (PC 3200) System Memory - Retail"],

"price":[74.99],

"inStock":[true]},

{

"name":["A-DATA V-Series 1GB 184-Pin DDR SDRAM Unbuffered DDR 400 (PC 3200) System Memory - OEM"],

"inStock":[true]},

{

"name":["One Dollar"],

"inStock":[true]},

{

"name":["One British Pound"],

"inStock":[true]},

{

"name":["Dell Widescreen UltraSharp 3007WFP"],

"price":[2199.0],

"inStock":[true]},

{

"name":["Samsung SpinPoint P120 SP2514N - hard drive - 250 GB - ATA-133"],

"price":[92.0],

"inStock":[true]},

{

"name":["Maxtor DiamondMax 11 - hard drive - 500 GB - SATA-300"],

"price":[350.0],

"inStock":[true]}]

}}

Now here is the query for the aggregations:

curl -XGET 'http://localhost:8983/solr/gettingstarted/select?facet.field=price&facet=on&fl=price&indent=on&q=*:*&wt=json'

Which do you find easier to use? My guess is the SQL syntax. 😉

Review

If you successfully followed along with this tutorial, we were able to install Solr and run it in SolrCloud mode. We indexed some sample xml documents. We updated our Zeppelin interpreter configuration to support Solr JDBC queries. We created a notebook and ran a few queries against Solr using SQL. And finally we saw the comparatively more complex native Solr query syntax.

You can read more here:

Created on 11-04-2016 06:17 PM

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Would be good to link to the Apache Solr documentation for this specifically:

Created on 11-04-2016 07:24 PM

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Good catch. Tutorial has been updated to provide more links.