Community Articles

- Cloudera Community

- Support

- Community Articles

- Use NiFi to process Excel spreadsheets in automate...

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Created on 12-20-2017 07:34 PM - edited 08-17-2019 09:44 AM

Introduction

Use of data in Excel spreadsheets is commonplace in the enterprise and often results in widespread yet highly manual and loosely defined dataflows. The manual and loose nature of Excel data flows are at odds with the automated way we expect data to move through the enterprise. As a result, these Excel data flows lack the accuracy, speed and transparency leveraged by automated flows. Shouldn’t Excel data flows be automated too?

In this article I show how to use NiFi to automate the reading, writing and validation of Excel contents (using the full capability set humans use) and how to fit this into a larger workflow. I focus here on building an Excel client to operate on Excel data during your workflow. Designing your own Excel workflows depends on how you do things manually today and on your ability to innovate new ways to leverage Excel data in your larger data landscape and initiatives.

Technologies

The key technology for reading and writing to Excel is Apache’s POI Java API. The key technology to building an automated workflow is NiFi. A NiFi ExecuteScript processor calling a Groovy script that implements POI bridges the two: it serves as an Excel client at any step where the automated workflow needs to interact with Excel.

Note that there is an out-of-the-box NiFi processor called ConvertExcelToCSV Processor that uses POI to convert the binary format of Excel into a CSV file for each workbook (sheet) in the spreadsheet. That is all it does however.

Using the full POI API in a Groovy script allows you to do in Groovy/Java pretty much any read, write or processing operation you can do manually. This includes the following and more:

- Select and output data to CSV or Excel format

- Insert or change the value of one or more cells

- Insert or read a comment to a cell

- Format a cell or range of cells (borders, colors, font, etc) including conditional formatting

- Insert a hyperlink into a cell

- Freeze panes or merge cells

- Create pre-populated dropdown lists for data inputs

- Set zoom level

- Set a cell formula or evaluate an existing formula

See this quick overview of POI capabilities and implementations; more references are provided at the end of this article.

As mentioned, the main design feature is to use a NiFi ExecuteScript processor that implement a Groovy script which itself implements POI to work with contents of the Excel spreadsheet. From ExecuteScript, you can output the altered Excel file as an Excel file itself (e.g to email as attachment to its author, with comments added where validation errors are detected) or output the data as CSV for downstream routing, processing, conversion and storage.

A note on Groovy and Java: Groovy is a scripting abstraction of Java that allows you to write Java code in a lightweight (Groovyesque J) scripting style that is like writing Python for example. In Groovy scripts, Java can be used natively among the Groovy code and that is how we import and implement the POI libraries.

That takes care of the Excel client part. The workflow orchestration part is classic NiFi processing (get file, route on content or failure/success, put to file or hive, etc) which numerous HCC articles explore and demonstrate (see references below).

Example Flow

Below is a simple example of an automated Excel workflow. Your own automated Excel flows will vary and likely be more elaborate. Main focus on the example flow here will be processing the spreadsheet, i.e. building an automated NiFi Excel client as a step in the larger workflow.

In this flow I:

- get an Excel workbook file from a local file system

- use POI to parse one sheet from the file and then to read cell values of all rows

- write out the row values as a CSV flow file

- split the CSV into separate rows

- validate contents of each row to make sure the fields are in proper format and ranges

- send all failed rows to a file in the local file system

- send all successful rows to a Hive table

The NiFi Excel Client: ExecuteScript + Groovy + POI

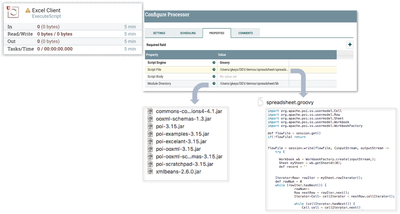

My step (2) operates on the Excel data. To do this, the ExecuteScript processor is configured to access a Groovy script and its library dependencies, including POI libraries.

This is everything you need to know in order to work with Excel in NiFi:

- ExecuteScript processor receives a flowfile in Excel format and calls a Groovy script to process it.

- This ExecuteScript processor is configured to point to the Groovy script and to the local file path holding all of the script’s dependencies (including POI jars).

- This Groovy script reads manipulates the Excel workbook. At this point, the challenge is mostly learning and using the POI API. Note that the script uses native Java in places... Groovy does not constrain you to Groovy syntax.

Full script is shown here. It is quite simple but will get you started. The full power is in POI capabilities which you should learn how to leverage. Note that you could output the flow file as Excel and not convert to CSV.

import org.apache.commons.io.IOUtils

import java.nio.charset.*

import java.text.SimpleDateFormat

import org.apache.poi.ss.usermodel.Cell

import org.apache.poi.ss.usermodel.Row

import org.apache.poi.ss.usermodel.Sheet

import org.apache.poi.ss.usermodel.Workbook

import org.apache.poi.ss.usermodel.WorkbookFactory

def flowFile = session.get()

if(!flowFile) return

def date = new Date()

flowFile = session.write(flowFile, {inputStream, outputStream ->

try {

Workbook wb = WorkbookFactory.create(inputStream,);

Sheet mySheet = wb.getSheetAt(0);

def record = ''

// processing time, inserted as first column

def tstamp = new SimpleDateFormat("YYYY-MM-dd HH:mm:ss.SSS").format(date)

Iterator<Row> rowIter = mySheet.rowIterator();

def rowNum = 0

while (rowIter.hasNext()) {

rowNum++

Row nextRow = rowIter.next();

Iterator<Cell> cellIterator = nextRow.cellIterator();

while (cellIterator.hasNext()) {

Cell cell = cellIterator.next()

record = record + cell.toString() + ','

}

if(rowNum > 1){

// insert tstamp, row num, drop last comma and add end line.

// Note: tstamp + row num are composite key

record = tstamp + ',' + rowNum + ',' + record[0..-2] + '\n'

outputStream.write(record.getBytes(StandardCharsets.UTF_8))

}

record = ''

}

}

catch(e) {

log.error("Error during processing of spreadsheet name = xx, sheet = xx", e)

//session.transfer(inputStream, REL_FAILURE)

}

} as StreamCallback)

def filename = flowFile.getAttribute('filename').split('\.')[0] + '_' + new SimpleDateFormat("YYYYMMdd-HHmmss").format(date)+'.csv'

flowFile = session.putAttribute(flowFile, 'filename', filename)

session.transfer(flowFile, REL_SUCCESS)

A Reference Workflow

The below shows a possible automated Excel workflow. The goal of this flow is to receive Excel data from multiple users into a Hive table for analytics (reporting, BI, dashboarding, advanced analytics, data science, etc). Errors are loaded to their own separate Hive table so analytics can be performed on them, with the goal of improving user data accuracy. One important feature is that the Excel attachment filename maps to the Hive table the data will be inserted (and the Groovy script that will validate it). In this way the same NiFi flow can be used for different data flows of spreadsheet data to Hive tables.

The goal of this flow is to input Excel data from multiple users into a Hive table for analytics (reporting, BI, dashboarding, advanced analytics, data science, etc). Errors have their own table to perform analytics on errors with the goal of improving user accuracy with inputting data. One important feature is Excel attachment filename maps to the Hive table the data will be inserted (and the Groovy script that will validate it). In this way the same NiFi flow can be used for different data flows of spreadsheet to Hive. Key details are explained below.

Happy Path

| Get email | ConsumePOP3 retrieves email |

| Extract email headers | ExtractEmailHeaders writes “from” header as attribute |

| Extract attachment | ExtractEmailAttachments extracts Excel attachment as flowfile and writes filename as attribute |

| Validate contents | ExecuteScript

|

|

Insert to hive |

PutHDFS puts to file path of Hive external table. Note there are many ways to put CSV data to Hive tables. Optimized Hive tables ORC files. To convert CSV to ORC and then insert to Hive, see this HCC article. |

Error Paths

| Send error email with attachment | PutEmail returns email to user. Subject of email is taken from attribute written from Validate Contents script. Failure at this point is either “Bad workflow name” because attached filename did not map to workflow name or “Bad data” because on or more cells had invalid data. If the user did not include an attachment the flow fails at ExtractAttachments and an UpdateAttribute processor (not shown in diagram) writes “No attachment” as subject. The “Bad workflow name” case attaches the original Excl workbook. The “Bad data” case attaches the Excel modified with inserted error comments. The “No attachment” case does not return an attachment |

| Convert error comments | ExecuteScript converts error comments into cell coordinates (Excel row, column) and error description into a CVS with each record holding: timestamp, emailFrom, workflowName, errorRow, errorCol, errorCode (or description). |

| Insert to hive | Same as above |

How can you leverage NiFi for Excel Data?

The above is only one automated Excel workflow. Use your imagination to explore the diverse and powerful ways that:

- NiFi can move and manipulate data throughout your data landscape

- Groovy with POI can work with Excel the way humans do

- you can combine the two to leverage Excel data in automated workflows running through your system

Think of all of the manual work performed on Excel data at your workplace. Ask yourself, How can we leverage NiFi and POI for Excel data? Then get your design cap on and start building NiFi flows to automate Excel data movement and processing like you do with the rest of your data.

References

NiFi

- Apache NiFi Overview

- Getting Started with Apache NiFi

- Hortonworks Apache NiFi Overview

- NiFi Intro HCC

Groovy

POI

Template for first flow

Created on 09-27-2019 02:50 AM - edited 09-27-2019 02:51 AM

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

This is exactly what I was looking for - works perfectly!

Created on 12-17-2019 02:08 AM

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Great job!! It works perfectly!

I am trying to adapt you code to my task but I am a bit confused.

I need to make some small modifications in an Excel file before converting it to a CSV. The original excel file contains only one sheet with two tables with fix dimension. The aim is to convert this tables to a csv format. To do this I have tried to create an auxiliary sheet in which I copy each table and this sheet will be exported thanks to your code.

I have found a POI+Groovy Rowcopy function here that could fit my necessity with some small modifications but I don't know how to integrate this function in the main script. Could you give me a hand?

I am newbie in Groovy, Nifi and POI.

Thanks in advance.

Regards,

Created on 09-02-2020 01:36 PM

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Hi,

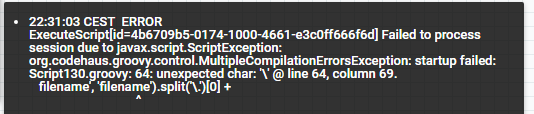

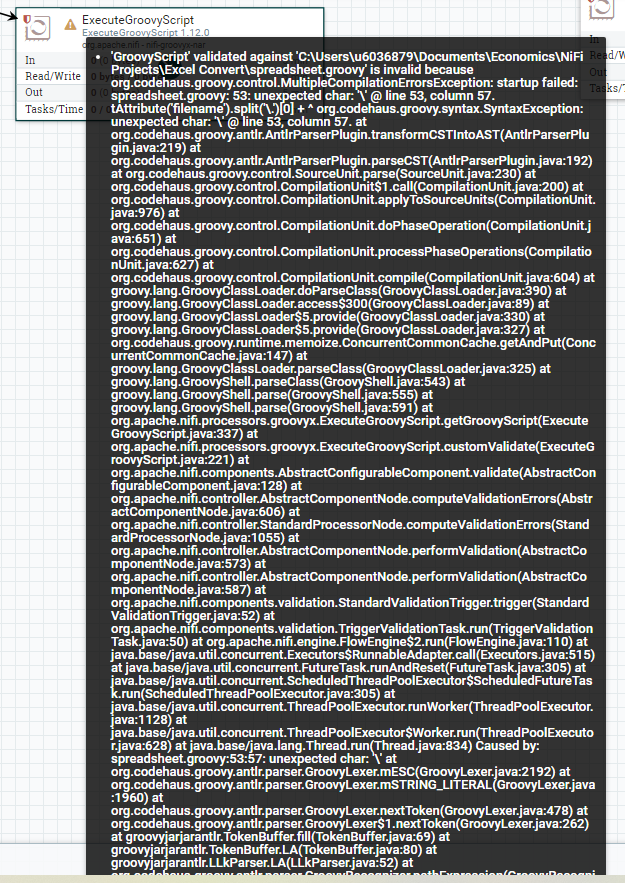

I have been trying to follow your guide, but I am stuck in the groovy script part. I constantly get this error on the script part:

I have no idea how to fix it.

I am feeding xls files after unpacking them from zip file.

Can I get some help?

Created on 09-02-2020 10:53 PM

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

hi hiderto, it looks like you have some errors in your script. Is it modified from the one supplied by the original thread author?

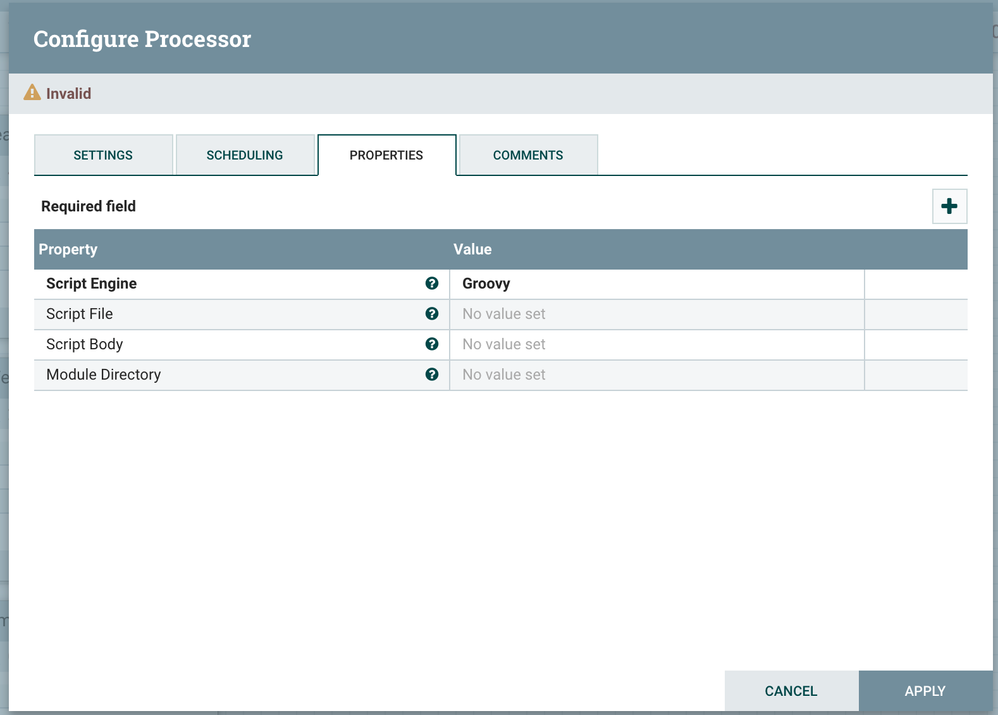

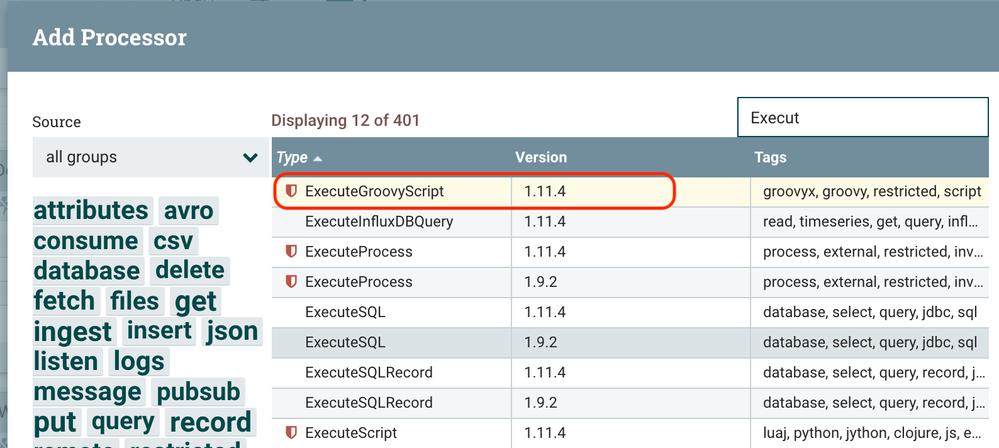

Something else that might be an issue: you have your script in an "ExecuteScript" processor. Does the script code give the same error when you put it in a "ExecuteGroovyProcessor" instead? In your current "ExecuteScript" processor, under "Properties", have you chosen "Groovy" as the script engine?

Created on 09-03-2020 12:47 PM

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Hi jenka13all,

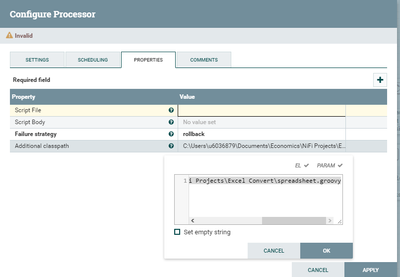

thanks for your reply. I am using exactly the same script as the OP. After changing to ExecuteGroovyScript, the processor is not even validated:

These are my settings for the processor:

I have no clue why this is happening. It looks like the script does not recognize the filename attribute or the '\' sign. I even installed Groovy on my computer, just to check if that was the problem.

Can be because I am on Windows? I am not using docker or VM, it is Nifi purely on Windows 10.

Best Regards,

Created on 09-03-2020 10:46 PM - edited 09-03-2020 10:57 PM

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

hi,

would you mind posting the code for the ExecuteScript? Maybe that will provide a clue. The thing with regular expressions can be tricky. I have a similar script, I could compare.

A few more ideas: in the script, can you log the filename you're trying to use the regular expressions on? Maybe that's the problem.

Finally, if you just can't get it to work, you may be able to use the processor "ConvertExcelToCSVProcessor", which is available in NiFi version 1.11.4. (depending on what you need to do in your workflow)

Created on 09-04-2020 10:42 AM

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

After posting the last comment, I found the solution for this particular problem. The solution is to change from:

.split('\.')to

.split("\\.")

Also, I added a missing library from before, ooxml-schemas-1.4.jar.

I tried to use the ConvertExcelToCSVProcessor before, but it is not possible for the specific excel files I need (in OLE2 format). That is why I need these POI libraries and why I want at least part of this guide to work for me.

It seems now is working after adding another library that was causing problems (the lack of it) commons-math3-3.6.1.jar.

Let's see for how long this solution works.

Best Regards,

Created on 09-28-2022 02:03 AM

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Hi! and thank you for this great explanation.

I am trying to read (and write) on a existing excel file (from getfile processor) and export it to the outpustream to send it to another processor (very important to keep the existing file because there is some vba macro on it), but i can't succeed to do that.

here is my very basic code for the moment :

Created on 12-09-2022 08:07 AM

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Hi @ollie_

Did you success to process your xls file?

I want to write the flowfile content (wih csv format) into an XLS file, can you help please?