Using Ansible to deploy HDP on AWS

Objective

This tutorial will walk you through the process of using Ansible to deploy Hortonworks Data Platform (HDP) on Amazon Web Services (AWS). We will use the ansible-hadoop Ansible playbook from ObjectRocket to do this. You can find more information on that playbook here: ObjectRocket Ansible-Hadoop

This tutorial is part 2 of a two-part series. Part 1 shows you how to use Ansible to create instances on Amazon Web Services (AWS). Part 1 is available here: HCC Article Part 1

This tutorial was created as a companion to the Ansible + Hadoop talk I gave at the Ansible NOVA Meetup in February 2017. You can find the slides to that talk here: SlideShare

Prerequisites

- You must have an existing AWS account.

- You must have access to your AWS Access and Secret keys.

- You are responsible for all AWS costs incurred.

- You should have 3-6 instances created in AWS. If you completed Part 1 of this series, then you have an easy way to do that.

Scope

This tutorial was tested using the following environment and components:

- Mac OS X 10.11.6 and 10.12.3

- Amazon Web Services

- Anaconda 4.1.6 (Python 2.7.12)

- Ansible 2.1.3.0

- git 2.10.1

Steps

Create python virtual environment

We are going to create a Python virtual environment for installing the required Python modules. This will help eliminate module version conflicts between applications.

I prefer to use Continuum Anaconda for my Python distribution, so the steps for setting up a Python virtual environment are based on that. However, you can use standard Python and the virtualenv command to do something similar.

To create a virtual environment using Anaconda Python, use the conda create command. We will name our virtual environment ansible-hadoop. The following command creates it: conda create --name ansible-hadoop python. You should see something similar to the following:

$ conda create --name ansible-hadoop python

Fetching package metadata .......

Solving package specifications: ..........

Package plan for installation in environment /Users/myoung/anaconda/envs/ansible-hadoop:

The following NEW packages will be INSTALLED:

openssl: 1.0.2k-1

pip: 9.0.1-py27_1

python: 2.7.13-0

readline: 6.2-2

setuptools: 27.2.0-py27_0

sqlite: 3.13.0-0

tk: 8.5.18-0

wheel: 0.29.0-py27_0

zlib: 1.2.8-3

Proceed ([y]/n)? y

Linking packages ...

cp: /Users/myoung/anaconda/envs/ansible-hadoop:/lib/libcrypto.1.0.0.dylib: No such file or directory

mv: /Users/myoung/anaconda/envs/ansible-hadoop/lib/libcrypto.1.0.0.dylib-tmp: No such file or directory

[ COMPLETE ]|################################################################################################| 100%

#

# To activate this environment, use:

# $ source activate ansible-hadoop

#

# To deactivate this environment, use:

# $ source deactivate

#

Switch python environments

Before installing python packages for a specific development environment, you should activate the environment. This is done with the command source activate <environment>. In our case the environment is the one we just created, ansible-hadoop. You should see something similar to the following:

$ source activate ansible-hadoop

As you can see there is no output to indicate if we were successful in changing our environment.

To verify, you can use the conda info --envs command to list the available environments. The active environment is marked with a *. You should see something similar to the following:

$ conda info --envs

# conda environments:

#

ansible-hadoop * /Users/myoung/anaconda/envs/ansible-hadoop

root /Users/myoung/anaconda

As you can see, the ansible-hadoop environment has the * which means it is the active environment.
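
The same check can be made from inside Python. The following is a minimal sketch, assuming Python 3 and assuming that conda's activate script exports the CONDA_DEFAULT_ENV variable; the helper names active_env_name and is_env_active are hypothetical and only for illustration. If the variable is missing, the sketch falls back to the last path component of the interpreter prefix.

```python
import os
import sys


def active_env_name(environ=None, prefix=None):
    """Best-effort name of the active conda environment."""
    environ = os.environ if environ is None else environ
    prefix = sys.prefix if prefix is None else prefix
    # Assumption: conda's activate script exports CONDA_DEFAULT_ENV.
    name = environ.get("CONDA_DEFAULT_ENV")
    if name:
        # Some conda versions store a full path here, so keep the last part.
        return os.path.basename(name.rstrip("/"))
    # Fallback: the environment directory name of the running interpreter.
    return os.path.basename(prefix.rstrip("/"))


def is_env_active(expected, environ=None, prefix=None):
    """True when the detected environment name equals `expected`."""
    return active_env_name(environ, prefix) == expected


if __name__ == "__main__":
    print("active environment:", active_env_name())
    print("is ansible-hadoop active:", is_env_active("ansible-hadoop"))
```

Run it with the python interpreter on your PATH after source activate ansible-hadoop; if it reports a different environment, the activation did not take effect in that shell.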

If you want to remove your Python virtual environment, you can use the following command: conda remove --name <environment> --all. This is optional; if you remove the environment now, you will need to recreate it before continuing. Removing the environment we just created produces output similar to the following:

$ conda remove --name ansible-hadoop --all

Package plan for package removal in environment /Users/myoung/anaconda/envs/ansible-hadoop:

The following packages will be REMOVED:

openssl: 1.0.2k-1

pip: 9.0.1-py27_1

python: 2.7.13-0

readline: 6.2-2

setuptools: 27.2.0-py27_0

sqlite: 3.13.0-0

tk: 8.5.18-0

wheel: 0.29.0-py27_0

zlib: 1.2.8-3

Proceed ([y]/n)? y

Unlinking packages ...

[ COMPLETE ]|################################################################################################| 100%

$ conda info --envs

# conda environments:

#

root * /Users/myoung/anaconda

Install Python modules in virtual environment

The ansible-hadoop playbook requires a specific version of Ansible. You need to install Ansible 2.1.3.0 before using the playbook. With the ansible-hadoop environment active, you can do that with the following command:

pip install ansible==2.1.3.0

Using a Python virtual environment allows us to use Ansible 2.1.3.0 for our playbook without affecting the packages installed in the default Python environment.
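
Because the playbook depends on that exact version, it can be worth confirming what is installed before running anything. The following is a minimal sketch, assuming Python 3; the function names are hypothetical, and the sketch assumes only that the first dotted number printed by ansible --version is the Ansible version.

```python
import re
import subprocess

REQUIRED_VERSION = "2.1.3.0"  # the version this tutorial pins with pip


def parse_ansible_version(output):
    """Return the first dotted version number found in the given text."""
    match = re.search(r"\d+(?:\.\d+)+", output)
    return match.group(0) if match else None


def installed_ansible_version():
    """Ask the `ansible` found first on PATH for its version."""
    output = subprocess.check_output(["ansible", "--version"])
    return parse_ansible_version(output.decode("utf-8", "replace"))


def version_matches(output, required=REQUIRED_VERSION):
    """True when the version parsed from `output` equals `required`."""
    return parse_ansible_version(output) == required


# Illustration with a sample string rather than a live command:
sample_output = "ansible 2.1.3.0"
print("matches required version:", version_matches(sample_output))
```

Calling installed_ansible_version() inside the activated environment and comparing the result with REQUIRED_VERSION gives the live check.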

Clone ansible-hadoop github repo

You need to clone the ansible-hadoop GitHub repo to a working directory on your computer. I typically do this in ~/Development.

$ cd ~/Development

$ git clone https://github.com/objectrocket/ansible-hadoop.git

You should see something similar to the following:

$ git clone https://github.com/objectrocket/ansible-hadoop.git

Cloning into 'ansible-hadoop'...

remote: Counting objects: 3879, done.

remote: Compressing objects: 100% (6/6), done.

remote: Total 3879 (delta 1), reused 0 (delta 0), pack-reused 3873

Receiving objects: 100% (3879/3879), 6.90 MiB | 0 bytes/s, done.

Resolving deltas: 100% (2416/2416), done.

Configure ansible-hadoop

You should make the ansible-hadoop repo directory your current working directory. There are a few configuration items we need to change.

$ cd ansible-hadoop

You should already have 3-6 instances available in AWS. You will need the public IP address of those instances.

Configure ansible-hadoop/inventory/static

We need to modify the inventory/static file to include the public IP addresses of our AWS instances. We need to assign master and slave nodes in the file. The instances are all the same configuration by default, so it doesn't matter which IP addresses you put for master and slave.

The default version of the inventory/static file should look similar to the following:

[master-nodes]

master01 ansible_host=192.168.0.2 bond_ip=172.16.0.2 ansible_user=rack ansible_ssh_pass=changeme

#master02 ansible_host=192.168.0.2 bond_ip=172.16.0.2 ansible_user=root ansible_ssh_pass=changeme

[slave-nodes]

slave01 ansible_host=192.168.0.3 bond_ip=172.16.0.3 ansible_user=rack ansible_ssh_pass=changeme

slave02 ansible_host=192.168.0.4 bond_ip=172.16.0.4 ansible_user=rack ansible_ssh_pass=changeme

[edge-nodes]

#edge01 ansible_host=192.168.0.5 bond_ip=172.16.0.5 ansible_user=rack ansible_ssh_pass=changeme

I'm going to be using 6 instances in AWS. I will put 3 instances as master servers and 3 instances as slave servers. There are a couple of extra options in the default file we don't need. The only values we need are:

- hostname: should be master, slave or edge with a 1-up number, like master01 and slave01

- ansible_host: should be the AWS public IP address of the instance

- ansible_user: should be the username you use to SSH into the instance with the private key
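
Since every host line needs those values, a small check can catch mistakes such as a repeated host name or a missing ansible_user before Ansible runs. The following is a minimal sketch, assuming Python 3 and the simple one-host-per-line layout shown above; it is not a full parser for Ansible's inventory format, and the function names are hypothetical.

```python
def parse_inventory(text):
    """Parse a simple static inventory into {group: [(host, {var: value})]}."""
    groups, current = {}, None
    for raw in text.splitlines():
        # Drop comments (whole-line or trailing) and surrounding whitespace.
        line = raw.split("#", 1)[0].strip()
        if not line:
            continue
        if line.startswith("[") and line.endswith("]"):
            current = line[1:-1]
            groups[current] = []
            continue
        if current is None:
            raise ValueError("host line before any [group]: %r" % line)
        parts = line.split()
        variables = dict(p.split("=", 1) for p in parts[1:] if "=" in p)
        groups[current].append((parts[0], variables))
    return groups


def duplicate_hosts(groups):
    """Host names that appear more than once anywhere in the inventory."""
    seen, dupes = set(), []
    for hosts in groups.values():
        for host, _ in hosts:
            if host in seen:
                dupes.append(host)
            seen.add(host)
    return dupes


def missing_vars(groups, required=("ansible_host", "ansible_user")):
    """(host, variable) pairs for every required variable that is absent."""
    return [(host, var)
            for hosts in groups.values()
            for host, variables in hosts
            for var in required if var not in variables]
```

Reading inventory/static and passing its contents to parse_inventory, then to duplicate_hosts and missing_vars, should return two empty lists for a well-formed file.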

You can easily get the public IP address of your instances from the AWS console. Here is what mine looks like:

If you followed the part 1 tutorial, then the username for your instances should be centos. Edit your inventory/static. You should have something similar to the following:

[master-nodes]

master01 ansible_host=#.#.#.# ansible_user=centos

master02 ansible_host=#.#.#.# ansible_user=centos

master03 ansible_host=#.#.#.# ansible_user=centos

[slave-nodes]

slave01 ansible_host=#.#.#.# ansible_user=centos

slave02 ansible_host=#.#.#.# ansible_user=centos

slave03 ansible_host=#.#.#.# ansible_user=centos

#[edge-nodes]

Replace each #.#.#.# with the public IP address of one of your instances, and make sure every host name is unique. Also note the #[edge-nodes] line in the file. Because we are not using any edge nodes, we comment out that host group line.

Once you have all of your edits in place, save the file.
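
If you have more than a handful of instances, the file can be generated instead of typed. The following is a minimal sketch, assuming Python 3; build_inventory is a hypothetical helper, and the addresses in the example come from the reserved documentation range rather than from real instances.

```python
def build_inventory(master_ips, slave_ips, user="centos"):
    """Render inventory text in the layout used by inventory/static."""
    lines = ["[master-nodes]"]
    for number, ip in enumerate(master_ips, start=1):
        lines.append("master%02d ansible_host=%s ansible_user=%s" % (number, ip, user))
    lines.append("")
    lines.append("[slave-nodes]")
    for number, ip in enumerate(slave_ips, start=1):
        lines.append("slave%02d ansible_host=%s ansible_user=%s" % (number, ip, user))
    lines.append("")
    # No edge nodes in this tutorial, so the group stays commented out.
    lines.append("#[edge-nodes]")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Placeholder addresses; substitute the public IPs of your instances.
    print(build_inventory(
        ["203.0.113.10", "203.0.113.11", "203.0.113.12"],
        ["203.0.113.20", "203.0.113.21", "203.0.113.22"],
    ))
```

Numbering the hosts from the position in each list keeps the names unique, which avoids accidentally defining the same host twice.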

Configure ansible-hadoop/ansible.cfg

There are a couple of changes we need to make to the ansible.cfg file. This file provides overall configuration settings for Ansible. The default file in the playbook should look similar to the following:

[defaults]

host_key_checking = False

timeout = 60

ansible_keep_remote_files = True

library = playbooks/library/cloudera

We need to change the library line to be library = playbooks/library/site_facts. We will be deploying HDP which requires the site_facts module. We also need to tell Ansible where to find the private key file for connecting to the instances.

Edit the ansible.cfg file. You should modify the file to be similar to the following:

[defaults]

host_key_checking = False

timeout = 60

ansible_keep_remote_files = True

library = playbooks/library/site_facts

private_key_file=/Users/myoung/Development/ansible-hadoop/ansible.pem

Note the path of your private_key_file will be different. Once you have all of your edits in place, save the file.
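
The two changes can also be applied programmatically. The following is a minimal sketch, assuming Python 3 and its standard configparser module; update_ansible_cfg is a hypothetical helper, and the key path in the example is a placeholder. Note that configparser rewrites the file in its own layout and drops any comments, so editing by hand is just as reasonable for a file this small.

```python
import configparser
import io


def update_ansible_cfg(text, key_path, library="playbooks/library/site_facts"):
    """Return ansible.cfg text with the library and private key settings applied."""
    # Interpolation is disabled so a '%' in a path is kept literally.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    if not parser.has_section("defaults"):
        parser.add_section("defaults")
    parser.set("defaults", "library", library)
    parser.set("defaults", "private_key_file", key_path)
    out = io.StringIO()
    parser.write(out)
    return out.getvalue()


if __name__ == "__main__":
    default_cfg = (
        "[defaults]\n"
        "host_key_checking = False\n"
        "timeout = 60\n"
        "ansible_keep_remote_files = True\n"
        "library = playbooks/library/cloudera\n"
    )
    # Placeholder path; use the location of your own private key file.
    print(update_ansible_cfg(default_cfg, "/path/to/ansible.pem"))
```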

Configure ansible-hadoop/group_vars/hortonworks

This step is optional. The group_vars/hortonworks file allows you to change how HDP is deployed. You can modify the version of HDP and Ambari. You can modify which components are installed. You can also specify custom repos and Ambari blueprints.

I will be using the default file, so there are no changes made.

Run bootstrap_static.sh

Before installing HDP, we need to ensure the OS configuration on the AWS instances meets the installation prerequisites. This includes things like ensuring DNS and NTP are working and all of the OS packages are updated. These are tasks that people often do manually, which would be tedious across hundreds or thousands of nodes and would introduce far more opportunities for human error. Ansible makes it easy to perform these kinds of tasks.

Running the bootstrap process is as easy as bash bootstrap_static.sh. This script essentially runs ansible-playbook -i inventory/static playbooks/bootstrap.yml for you. This process will typically take 7-10 minutes depending on the size of the instances you selected.

When the script is finished, you should see something similar to the following:

PLAY RECAP *********************************************************************

localhost : ok=3 changed=2 unreachable=0 failed=0

master01 : ok=21 changed=15 unreachable=0 failed=0

master03 : ok=21 changed=15 unreachable=0 failed=0

slave01 : ok=21 changed=15 unreachable=0 failed=0

slave02 : ok=21 changed=15 unreachable=0 failed=0

slave03 : ok=21 changed=15 unreachable=0 failed=0

As you can see, each of the nodes had 21 tasks performed. Of those, 15 tasks required changes to bring the node into the desired configuration state.
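
When the run covers many nodes, reading the recap by eye becomes error-prone. The following is a minimal sketch, assuming Python 3 and the recap line layout shown in this article (host, then ok, changed, unreachable and failed counters); the function names are hypothetical.

```python
import re

# One recap line: "<host> : ok=N changed=N unreachable=N failed=N"
RECAP_LINE = re.compile(
    r"^(?P<host>\S+)\s*:\s*ok=(?P<ok>\d+)\s+changed=(?P<changed>\d+)\s+"
    r"unreachable=(?P<unreachable>\d+)\s+failed=(?P<failed>\d+)"
)
COUNTERS = ("ok", "changed", "unreachable", "failed")


def parse_recap(text):
    """Map each host in a PLAY RECAP to its integer counters."""
    results = {}
    for line in text.splitlines():
        match = RECAP_LINE.match(line.strip())
        if match:
            results[match.group("host")] = {
                name: int(match.group(name)) for name in COUNTERS
            }
    return results


def problem_hosts(results):
    """Hosts with at least one failed task or that were unreachable."""
    return sorted(
        host for host, counts in results.items()
        if counts["failed"] or counts["unreachable"]
    )


if __name__ == "__main__":
    recap = (
        "localhost : ok=3 changed=2 unreachable=0 failed=0\n"
        "master01 : ok=21 changed=15 unreachable=0 failed=0\n"
    )
    print(problem_hosts(parse_recap(recap)))
```

An empty list from problem_hosts means every host finished without failed or unreachable tasks; any host it returns is worth investigating before moving on to the HDP install.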

Run hortonworks_static.sh

Now that the bootstrap process is complete, we can install HDP. The hortonworks_static.sh script is all you have to run to install HDP. This script essentially runs ansible-playbook -i inventory/static playbooks/hortonworks.yml for you. The script installs the Ambari Server on the last master node in our list. In my case, the last master node is master03. The script also installs the Ambari Agent on all of the nodes. The installation of HDP is performed by submitting a request to the Ambari Server API using an Ambari Blueprint.

This process will typically take 10-15 minutes depending on the size of the instances you selected, the number of master nodes and the list of HDP components you have enabled.

If you forgot to install the specific version of Ansible, you will likely see something similar to the following:

TASK [site facts processing] ***************************************************

fatal: [localhost]: FAILED! => {"failed": true, "msg": "ERROR! The module sitefacts.py dnmemory=\"31.0126953125\" mnmemory=\"31.0126953125\" cores=\"8\" was not found in configured module paths. Additionally, core modules are missing. If this is a checkout, run 'git submodule update --init --recursive' to correct this problem."}

PLAY RECAP *********************************************************************

localhost : ok=4 changed=2 unreachable=0 failed=1

master01 : ok=8 changed=0 unreachable=0 failed=0

master03 : ok=8 changed=0 unreachable=0 failed=0

slave01 : ok=8 changed=0 unreachable=0 failed=0

slave02 : ok=8 changed=0 unreachable=0 failed=0

slave03 : ok=8 changed=0 unreachable=0 failed=0

To resolve this, simply perform the pip install ansible==2.1.3.0 command within your Python virtual environment. Now you can rerun the bash hortonworks_static.sh script.

The last task of the playbook is to install HDP via an Ambari Blueprint. It is normal to see something similar to the following:

TASK [ambari-server : Create the cluster instance] *****************************

ok: [master03]

TASK [ambari-server : Wait for the cluster to be built] ************************

FAILED - RETRYING: TASK: ambari-server : Wait for the cluster to be built (180 retries left).

FAILED - RETRYING: TASK: ambari-server : Wait for the cluster to be built (179 retries left).

FAILED - RETRYING: TASK: ambari-server : Wait for the cluster to be built (178 retries left).

FAILED - RETRYING: TASK: ambari-server : Wait for the cluster to be built (177 retries left).

FAILED - RETRYING: TASK: ambari-server : Wait for the cluster to be built (176 retries left).

FAILED - RETRYING: TASK: ambari-server : Wait for the cluster to be built (175 retries left).

FAILED - RETRYING: TASK: ambari-server : Wait for the cluster to be built (174 retries left).

FAILED - RETRYING: TASK: ambari-server : Wait for the cluster to be built (173 retries left).

FAILED - RETRYING: TASK: ambari-server : Wait for the cluster to be built (172 retries left).

FAILED - RETRYING: TASK: ambari-server : Wait for the cluster to be built (171 retries left).

FAILED - RETRYING: TASK: ambari-server : Wait for the cluster to be built (170 retries left).
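
Those retry messages are the playbook waiting for Ambari to finish building the cluster. If you want to watch the progress yourself from a script, the following is a rough sketch, assuming Python 3. Several details are assumptions rather than facts from the playbook: that Ambari listens on its default port 8080, that the blueprint install is request number 1 on a fresh server, that the request resource exposes request_status and progress_percent fields under a Requests key, and the cluster name, which is a placeholder. It is not how the playbook itself performs the wait.

```python
import base64
import json
import urllib.request


def request_url(host, cluster, request_id=1, port=8080):
    """URL of an Ambari request resource (port and id are assumptions)."""
    return "http://%s:%d/api/v1/clusters/%s/requests/%d" % (
        host, port, cluster, request_id)


def summarize(body):
    """Extract (status, percent complete) from a request resource body."""
    info = json.loads(body).get("Requests", {})
    return info.get("request_status"), info.get("progress_percent")


def fetch_status(host, cluster, user="admin", password="admin"):
    """Query Ambari once using HTTP basic authentication."""
    req = urllib.request.Request(request_url(host, cluster))
    token = base64.b64encode(("%s:%s" % (user, password)).encode()).decode()
    req.add_header("Authorization", "Basic " + token)
    with urllib.request.urlopen(req, timeout=30) as resp:
        return summarize(resp.read().decode("utf-8"))


# Illustration with a hand-written body instead of a live server:
example_body = '{"Requests": {"request_status": "IN_PROGRESS", "progress_percent": 42.5}}'
print(summarize(example_body))
```

To use it against your cluster, call fetch_status with the public IP of the node running the Ambari Server and the cluster name shown in the Ambari interface.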

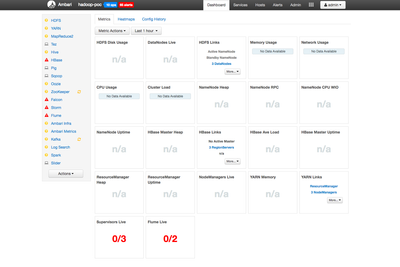

Once you see 3-5 of the retry messages, you can access the Ambari interface via your web browser. The default login is admin and the default password is admin. You should see something similar to the following:

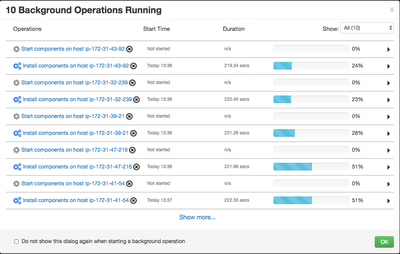

Click on the Operations icon that shows 10 operations in progress. You should see something similar to the following:

Each installation task takes between 400 and 600 seconds. Each start task takes between 20 and 300 seconds. The master servers typically take longer to install and start than the slave servers.

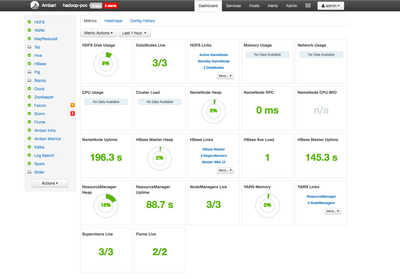

When everything is running properly, you should see something similar to this:

If you look back at your terminal window, you should see something similar to the following:

ok: [master03]

TASK [ambari-server : Fail if the cluster create task is in an error state] ****

skipping: [master03]

TASK [ambari-server : Change Ambari admin user password] ***********************

skipping: [master03]

TASK [Cleanup the temporary files] *********************************************

changed: [master03] => (item=/tmp/cluster_blueprint)

changed: [master03] => (item=/tmp/cluster_template)

changed: [master03] => (item=/tmp/alert_targets)

ok: [master03] => (item=/tmp/hdprepo)

PLAY RECAP *********************************************************************

localhost : ok=5 changed=3 unreachable=0 failed=0

master01 : ok=8 changed=0 unreachable=0 failed=0

master03 : ok=30 changed=8 unreachable=0 failed=0

slave01 : ok=8 changed=0 unreachable=0 failed=0

slave02 : ok=8 changed=0 unreachable=0 failed=0

slave03 : ok=8 changed=0 unreachable=0 failed=0

Destroy the cluster

Remember that you will incur AWS costs while the cluster is running. You can either shut down or terminate the instances. If you want to use the cluster later, use Ambari to stop all of the services before shutting down the instances.

Review

If you successfully followed along with this tutorial, you should have been able to easily deploy Hortonworks Data Platform 2.5 on AWS using the Ansible playbook. The process to deploy the cluster typically takes 10-20 minutes.

For more information on how the instance types and number of master nodes impacted the installation time, review the Ansible + Hadoop slides I linked at the top of the article.