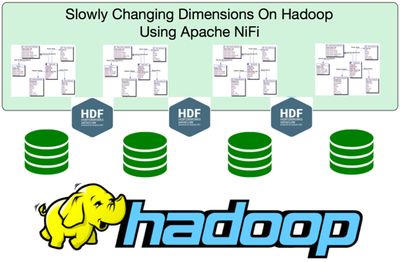

- Using Apache NiFi for Slowly Changing Dimensions o...

Created on 08-02-2016 07:30 PM - edited 08-17-2019 10:59 AM

I have been working on EDW for the last 10 years. Applying well-established relational concepts to Hadoop, I have seen many anti-patterns. How about some patterns that work? Let's get to work.

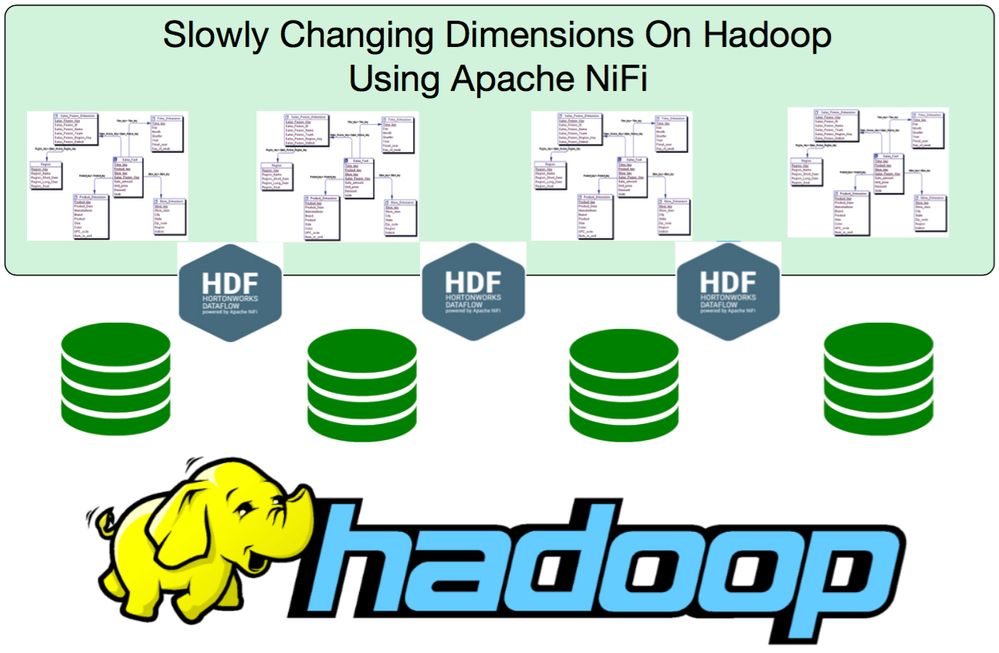

Slowly changing dimensions (SCD) are a known and well-established design pattern. The patterns were established on relational theory. Why? Relational databases were the dominant database tech, used by virtually everyone. This article in no way presents the only way to do SCD on Hadoop. I am sharing with you a few patterns which lead to victory.

What is relational theory, you ask?

"In physics and philosophy, a relational theory is a framework to understand reality or a physical system in such a way that the positions and other properties of objects are only meaningful relative to other objects." - wiki

So now we have a challenge. Hadoop and all the integrated animals were not based or founded on relational theory. Hadoop was built on software engineering principles. This is extremely important to understand and absorb. Do not expect 1:1 functionality. The platform paradigms are completely different. There is no lift-and-shift operation or turnkey solution. If a vendor is selling you that, challenge them. Understand relational theory and how it differs from software engineering principles.

Now that that is out of the way, let's start focusing on slowly changing dimension type 1.

What is SCD type 1?

"This methodology overwrites old with new data, and therefore does not track historical data." - Wiki

This, in my opinion, is the easiest of the several SCD types. Simply upsert based on the surrogate key.
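
To make the type 1 behavior concrete, here is a minimal sketch in plain Python, using a dictionary as a stand-in for the dimension table. The function name `scd1_upsert` and the sample rows are made up for illustration; the only point is that a matching key is overwritten and a new key is inserted, with no history kept.

```python
from typing import Dict, Iterable, Tuple

Row = Dict[str, str]


def scd1_upsert(dimension: Dict[int, Row],
                incoming: Iterable[Tuple[int, Row]]) -> Dict[int, Row]:
    """Apply type 1 changes: overwrite on key match, insert otherwise."""
    for surrogate_key, row in incoming:
        # No history is kept: the new row replaces whatever was stored.
        dimension[surrogate_key] = dict(row)
    return dimension


# Hypothetical sample data: key 1 already exists, key 2 is new.
customers = {1: {"name": "Ann", "city": "Austin"}}
scd1_upsert(customers, [
    (1, {"name": "Ann", "city": "Boston"}),
    (2, {"name": "Bob", "city": "Denver"}),
])
```

After the call, key 1 holds only the Boston row; the Austin value is gone, which is exactly the trade-off type 1 makes.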

Requirements -

- Data ingested needs simple processing

- Target tables (facts and dims) are of type 1. Simply upsert based on the surrogate or natural key

- There are known and unknown query patterns (consumption of end product)

- There are known query patterns (during integration/ETL)

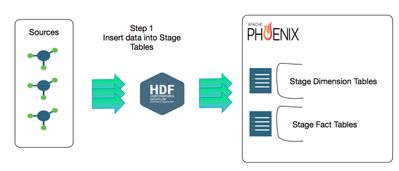

Step 1

We will first build staging tables in Phoenix (HBase). Why Phoenix? Phoenix/HBase handles upserts very well and handles known query patterns like a champ. I'm going with Phoenix. ETL will be performed on the staging tables, and the results are then loaded into our product/final output tables. The final output tables are the golden records. They will host all your post-ETL data. Those tables will be available for end consumption by downstream BI, analytics, ETL, etc. Using Apache NiFi, simply drag and drop your sources and your Phoenix staging tables onto the canvas and connect them. Do any simple transformation you wish here as well. Some requirements may state that raw data must be landed as-is and transformed data stored in another table, essentially creating a System of Record. That will work as well. We will mostly work with the post-System of Record tables here.
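
As a rough illustration of what lands in the staging table, the sketch below builds a parameterized Phoenix `UPSERT INTO ... VALUES` statement. The helper `phoenix_upsert_sql`, the table `STG_CUSTOMER`, and its columns are hypothetical names, and the helper does no identifier validation; how the statement is actually executed (a NiFi processor, JDBC, or something else) is left open.

```python
from typing import Sequence


def phoenix_upsert_sql(table: str, columns: Sequence[str]) -> str:
    """Build a parameterized Phoenix UPSERT statement for the given columns."""
    if not columns:
        raise ValueError("at least one column is required")
    column_list = ", ".join(columns)
    # One "?" bind placeholder per column.
    placeholders = ", ".join("?" for _ in columns)
    return f"UPSERT INTO {table} ({column_list}) VALUES ({placeholders})"


# Hypothetical staging table and columns.
stmt = phoenix_upsert_sql("STG_CUSTOMER", ["CUSTOMER_NK", "NAME", "CITY"])
```

Phoenix decides between insert and update from the table's primary key, so the primary key columns need to be part of the column list.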

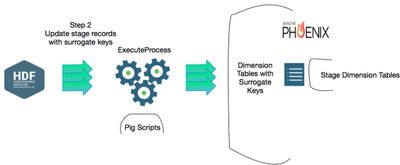

Step 2

Next we want to assign a primary key to every record in the staging table. This primary key can be either a surrogate key or a natural key hash. Build a Pig script to join the stage and final dimension records on the natural key. For records which have a match, take the primary key from the dimension and upsert it into the stage table. For records that do not match, you will need to generate a primary key. Again, either generate a surrogate key or use a natural key hash. Important: ExecuteProcess is a processor within Apache NiFi. I am just calling it out to be clear about what needs to be done during the workflow.
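
The matching logic of this step can be sketched in plain Python (not Pig). Everything named here is an assumption for illustration: the `natural_key` and `pk` field names, the sample keys, and the choice of SHA-256 as the natural key hash.

```python
import hashlib
from typing import Dict, Iterable, List


def natural_key_hash(natural_key: str) -> str:
    """Derive a deterministic key from the natural key (SHA-256 is an assumption)."""
    return hashlib.sha256(natural_key.encode("utf-8")).hexdigest()


def assign_keys(stage_rows: Iterable[dict], dim_keys: Dict[str, str]) -> List[dict]:
    """Reuse the dimension's key on a natural key match, otherwise generate one."""
    keyed = []
    for row in stage_rows:
        nk = row["natural_key"]
        pk = dim_keys.get(nk)          # match against the final dimension
        if pk is None:
            pk = natural_key_hash(nk)  # no match: generate a new key
        keyed.append({**row, "pk": pk})
    return keyed


# Hypothetical data: C-100 already exists in the dimension, C-200 is new.
dim_keys = {"C-100": "existing-key-1"}
stage = [
    {"natural_key": "C-100", "city": "Boston"},
    {"natural_key": "C-200", "city": "Denver"},
]
keyed = assign_keys(stage, dim_keys)
```

A hash of the natural key has the nice property that the same record gets the same key on every run, so the step can be repeated safely.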

The part I purposely left out is the "how" of generating a surrogate key. There are many ways to skin a cat. Disgusting. I hate that phrase, but you get the idea. Here are some ways to generate a surrogate key:

- http://hadooptutorial.info/writing-custom-udf-in-hive-auto-increment-column-hive/

- https://github.com/manojkumarvohra/hive-hilo

- http://hortonworks.com/blog/four-step-strategy-incremental-updates-hive/

- Using the Pig RANK operator

- http://amintor.com/1/post/2014/07/implement-scd-type-2-in-hadoop-using-hive-transforms.html
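
For a feel of how a rank-based approach can work, here is a small Python sketch, not Pig code: rank the new natural keys and add the rank to the highest surrogate key already in the dimension. The function name and sample values are hypothetical, and the sketch assumes a single writer per batch so that two jobs never hand out the same key.

```python
from typing import Dict, Iterable


def assign_sequential_keys(new_natural_keys: Iterable[str],
                           max_existing_key: int) -> Dict[str, int]:
    """Give each distinct new natural key the next integer after the current max."""
    # Sorting makes the rank (and therefore the key) deterministic for a batch.
    ordered = sorted(set(new_natural_keys))
    return {nk: max_existing_key + rank
            for rank, nk in enumerate(ordered, start=1)}


# Hypothetical batch: the dimension's highest surrogate key so far is 41.
new_keys = assign_sequential_keys(["C-300", "C-200", "C-300"], max_existing_key=41)
```

Duplicates within the batch collapse to one key, so `C-300` is assigned only once.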

Another option to point out: use an RDBMS. I know many cringe when they hear this. I don't care. It works. Do the SCD1 processing for that incremental data set on a free and open source RDBMS. Then use the RDBMS table to update the Phoenix stage table. Want to join both data sets? You can also use Spark to join the RDBMS tables and the HBase table. The connector information is here. Then you can do the step 2 processing in Spark. I plan to write another article on this in the coming days/weeks. Stay tuned. This may end up being the dominant pattern.
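
Here is a small sketch of the RDBMS option, using Python's built-in `sqlite3` module as a stand-in for whichever free and open source RDBMS you pick. The table `dim_customer`, its columns, and the sample rows are made up, and `INSERT OR REPLACE` is SQLite syntax; other databases spell their upsert differently.

```python
import sqlite3

# In-memory SQLite database standing in for the RDBMS.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE dim_customer ("
    "customer_nk TEXT PRIMARY KEY, name TEXT, city TEXT)"
)
conn.execute("INSERT INTO dim_customer VALUES ('C-100', 'Ann', 'Austin')")

# Hypothetical incremental data set: one changed row, one new row.
incremental = [
    ("C-100", "Ann", "Boston"),
    ("C-200", "Bob", "Denver"),
]

# Type 1 processing: replace the row when the key exists, insert otherwise.
conn.executemany(
    "INSERT OR REPLACE INTO dim_customer (customer_nk, name, city) "
    "VALUES (?, ?, ?)",
    incremental,
)
conn.commit()

rows = conn.execute(
    "SELECT customer_nk, name, city FROM dim_customer ORDER BY customer_nk"
).fetchall()
```

The resulting rows are what you would then push to the Phoenix stage table.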

Step 4

Referential integrity. What is referential integrity?

Referential integrity is a property of data which, when satisfied, requires every value of one attribute (column) of a relation (table) to exist as a value of another attribute (column) in a different (or the same) relation (table).

For this topic I plan on creating a separate article. Basically you either code up all your validation here or build a rules engine. The rules engine will be leveraged to manage referential integrity.
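
As a taste of the "code up your validation" route, the sketch below checks one foreign key relationship in plain Python: every key referenced by a fact row must exist in the dimension. The function `find_orphans`, the column names, and the sample rows are hypothetical; a rules engine would generalize this to many relationships.

```python
from typing import Iterable, List, Set


def find_orphans(fact_rows: Iterable[dict], fk_column: str,
                 dim_keys: Set[int]) -> List[dict]:
    """Return fact rows whose foreign key has no matching dimension key."""
    return [row for row in fact_rows if row[fk_column] not in dim_keys]


# Hypothetical data: customer_key 3 does not exist in the dimension.
dim_customer_keys = {1, 2}
facts = [
    {"order_id": 10, "customer_key": 1},
    {"order_id": 11, "customer_key": 3},
]
orphans = find_orphans(facts, "customer_key", dim_customer_keys)
```

What to do with the orphans (reject, park in an error table, or assign an "unknown" dimension member) is a design decision the check itself does not make.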

Bottom line: Hadoop does not adhere to relational theory. Applying relational theory concepts does not come naturally. There is some thinking involved. I call it engineering. Don't be afraid to take this on. Again, I will post an article on this.

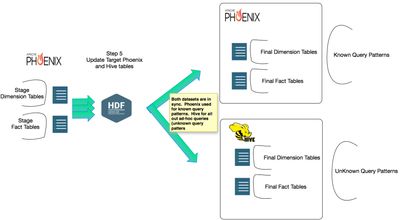

Step 5

Now we have a stage table with our beautiful surrogate keys. Time to update our final tables. But notice I do not only update Phoenix tables. I have built the same tables and data set in Hive. Why? For known query patterns Phoenix kicks butt. For unknown query patterns (ad hoc BI queries) I would rather leverage Hive on Tez. Therefore, using Apache NiFi, pull your stage tables and upsert the Phoenix and Hive final tables. Hive ACID is in technical preview. If you would rather not do upserts in Hive, then this will involve another processing setup. This is well documented here so no reason for me to regurgitate that.

I hope this helps with your SCD type 1 design on Hadoop. Leverage Apache NiFi and the other animals in Hadoop. Many ways to skin... ahh, I'm going there. In the next article I will post a design pattern on Hadoop for SCD type 2.

Created on 11-16-2017 10:07 PM

Hi Smanjee,

I think you missed the right link here:

"This is well documented here so no reason for me to regurgitate that."