- Using Cloudbreak recipes to deploy Anaconda and Te...

This tutorial walks you through using Cloudbreak recipes to install TensorFlow for Anaconda Python on an HDP 2.6 cluster during cluster provisioning. We'll then update Zeppelin to use the newly installed version of Anaconda and run a quick TensorFlow test.

Prerequisites

- You should already have a Cloudbreak v1.14.4 environment running. You can follow this article to create a Cloudbreak instance using Vagrant and VirtualBox: HCC Article

- You should already have created a blueprint that deploys HDP 2.6 with Spark 2.1. You can follow this article to get the blueprint set up. Do not create the cluster yet, as we will do that in this tutorial: HCC Article

- You should already have credentials created in Cloudbreak for deploying on AWS (or Azure). This tutorial does not cover creating credentials.

Scope

This tutorial was tested in the following environment:

- Cloudbreak 1.14.4

- AWS EC2

- HDP 2.6

- Spark 2.1

- Anaconda 4.3.1 (Python 2.7.13)

- TensorFlow 1.1.0

Steps

Create Recipe

Before you can use a recipe during a cluster deployment, you have to create the recipe. In the Cloudbreak UI, look for the manage recipes section. It should look similar to this:

If this is your first time creating a recipe, you will have 0 recipes instead of the 2 recipes shown in my interface.

Now click on the arrow next to manage recipes to display available recipes. You should see something similar to this:

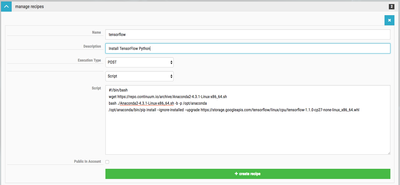

Now click on the green create recipe button. You should see something similar to this:

Now we can enter the information for our recipe. I'm calling this recipe tensorflow. I'm giving it the description of Install TensorFlow Python. You can choose to run the script as either pre-install or post-install. I'm choosing to do the install post-install. This means the script will be run after the Ambari installation process has started. So choose the Execution Type of POST. The script is fairly basic. We are going to download the Anaconda install script, then run it in silent mode. Then we'll use the Anaconda version of pip to install TensorFlow. Here is the script:

#!/bin/bash

wget https://repo.continuum.io/archive/Anaconda2-4.3.1-Linux-x86_64.sh

bash ./Anaconda2-4.3.1-Linux-x86_64.sh -b -p /opt/anaconda

/opt/anaconda/bin/pip install --ignore-installed --upgrade https://storage.googleapis.com/tensorflow/linux/cpu/tensorflow-1.1.0-cp27-none-linux_x86_64.whl

You can read more about installing TensorFlow on Anaconda here: TensorFlow Docs.

When you have finished entering all of the information, you should see something similar to this:

If everything looks good, click on the green create recipe button.

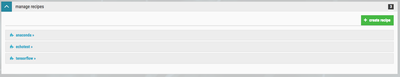

You should be able to see the recipe in your list of recipes:

NOTE: You will most likely have a different list of recipes.

Create a Cluster using a Recipe

Now that our recipe has been created, we can create a cluster that uses the recipe. Go through the process of creating a cluster up to the Choose Blueprint step. This step is where you select the recipe you want to use. The recipes are not selected by default; you have to select the recipes you wish to use. You can specify recipes for 1 or more host groups. This allows you to run different recipes across different host groups (masters, slaves, etc). You can also select multiple recipes.

We want to use the hdp26-spark-21-cluster blueprint. This will create an HDP 2.6 cluster with Spark 2.1 and Zeppelin. You should have created this blueprint when you followed the prerequisite tutorial. You should see something similar to this:

In our case, we are going to run the tensorflow recipe on every host group. If you intend to use something like TensorFlow across the cluster, you should install it on at least the slave nodes and the client nodes.

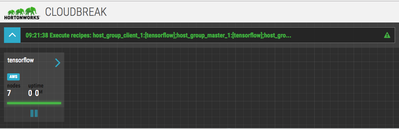

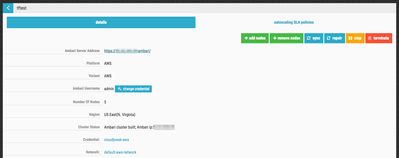

After you have selected the recipe for the host groups, click the Review & Launch button, then launch the cluster. As the cluster is building, you should see a message in the Cloudbreak UI that indicates the recipe is running. When that happens, you will see something similar to this:

If you click on the building cluster, you can see more detailed information. You should see something similar to this:

Once the cluster has finished building, you should see something similar to this:

Cloudbreak will create logs for each recipe that runs on each host. These logs are located in /var/log/recipes and are named after the recipe, prefixed with whether it ran pre- or post-install. For example, our recipe log is called post-tensorflow.log. You can tail this log file to follow the execution of the script.

NOTE: Post-install scripts won't be executed until the Ambari server is installed and the cluster is building. You can always monitor the /var/log/recipes directory on a node to see when the script is being executed. The time it takes to run the script will vary depending on the cloud environment and how long it takes to spin up the cluster.

On your cluster, you should be able to see the post-install log:

$ ls /var/log/recipes

post-tensorflow.log post-hdfs-home.log
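If you want to check several nodes, the same listing can be scripted. The following is a minimal sketch, not part of Cloudbreak itself: the function names are hypothetical, and it assumes the log naming seen above (a pre or post prefix, a hyphen, the recipe name, and a .log extension) under /var/log/recipes.

```python
import os


def parse_recipe_log_name(filename):
    """Split 'post-tensorflow.log' into ('post', 'tensorflow').

    Returns None for names that do not follow the assumed
    '<pre|post>-<recipe>.log' pattern.
    """
    stem, ext = os.path.splitext(filename)
    if ext != ".log":
        return None
    phase, _, recipe = stem.partition("-")
    if phase not in ("pre", "post") or not recipe:
        return None
    return phase, recipe


def list_recipe_logs(log_dir="/var/log/recipes"):
    """Return (filename, phase, recipe) tuples for recipe logs in log_dir.

    Returns an empty list if the directory does not exist yet, which is
    the case before any recipe has started on the node.
    """
    if not os.path.isdir(log_dir):
        return []
    found = []
    for name in sorted(os.listdir(log_dir)):
        parsed = parse_recipe_log_name(name)
        if parsed is not None:
            found.append((name,) + parsed)
    return found


if __name__ == "__main__":
    for name, phase, recipe in list_recipe_logs():
        print("%s: recipe '%s' ran %s-install" % (name, recipe, phase))
```

Run it on a cluster node with any Python interpreter. An empty result usually just means the recipes have not started on that node yet.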

Verify Anaconda Install

Once the install process is complete, you should be able to verify that Anaconda is installed. You need to ssh into one of the cloud instances. You can get the public IP address from the Cloudbreak UI. Log in as the cloudbreak user, using the private key that corresponds to the public key you entered when you created the Cloudbreak credential. You should see something similar to this:

$ ssh -i ~/Downloads/keys/cloudbreak_id_rsa cloudbreak@#.#.#.#

The authenticity of host '#.#.#.# (#.#.#.#)' can't be established.

ECDSA key fingerprint is SHA256:By1MJ2sYGB/ymA8jKBIfam1eRkDS5+DX1THA+gs8sdU.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '#.#.#.#' (ECDSA) to the list of known hosts.

Last login: Sat May 13 00:47:41 2017 from 192.175.27.2

__| __|_ )

_| ( / Amazon Linux AMI

___|\___|___|

https://aws.amazon.com/amazon-linux-ami/2016.09-release-notes/

25 package(s) needed for security, out of 61 available

Run "sudo yum update" to apply all updates.

Amazon Linux version 2017.03 is available.

Once you are on the server, you can check the version of Python:

$ /opt/anaconda/bin/python --version

Python 2.7.13 :: Anaconda 4.3.1 (64-bit)
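The version string confirms Anaconda is installed, but not that the pip step of the recipe succeeded. As a rough check, a short script like the one below can be run with the Anaconda interpreter to see whether the tensorflow module imports. This is only a sketch: the helper name module_version and the choice to also check numpy are my own assumptions, not part of the original recipe.

```python
import importlib


def module_version(name):
    """Return the module's __version__, 'unknown' if it has none,
    or None if the module cannot be imported."""
    try:
        module = importlib.import_module(name)
    except ImportError:
        return None
    return getattr(module, "__version__", "unknown")


if __name__ == "__main__":
    # tensorflow is installed by the recipe; numpy ships with Anaconda.
    for name in ("tensorflow", "numpy"):
        version = module_version(name)
        print("%s: %s" % (name, version if version else "NOT INSTALLED"))
```

Save it under any filename (for example check_modules.py, a hypothetical name) and run it as /opt/anaconda/bin/python check_modules.py. With the recipe from this tutorial, the tensorflow line should report 1.1.0.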

Update Zeppelin Interpreter

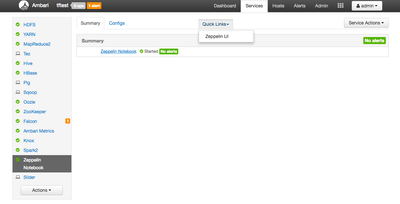

We need to update the default spark2 interpreter configuration in Zeppelin. We need to access the Zeppelin UI from Ambari. You can log in to Ambari for the new cluster from the Cloudbreak UI cluster details page. Once you log in to Ambari, you can access the Zeppelin UI from the Ambari Quicklink. You should see something similar to this:

After you access the Zeppelin UI, click the blue login button in the upper right corner of the interface. You can log in using the default username and password of admin. After you log in to Zeppelin, click the admin button in the upper right corner of the interface. This will expose the options menu. You should see something similar to this:

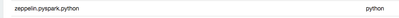

Click on the Interpreter link in the menu. This will display all of the configured interpreters. Find the spark2 interpreter. You can see the default setting for zeppelin.pyspark.python is set to python. This will use whichever Python is found in the path. You should see something similar to this:

We will need to change this to /opt/anaconda/bin/python which is where we have Anaconda Python installed. Click on the edit button and change zeppelin.pyspark.python to /opt/anaconda/bin/python. You should see something similar to this:

Now we can click the blue save button at the bottom. The configuration changes are now saved, but we need to restart the interpreter for the changes to take effect. Click on the restart button to restart the interpreter.

Create Zeppelin Notebook

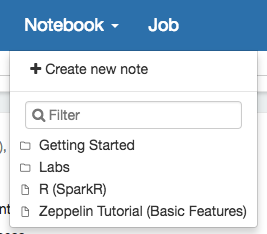

Now that our spark2 interpreter configuration has been updated, we can create a notebook to test Anaconda + TensorFlow. Click on the Notebook menu. You should see something similar to this:

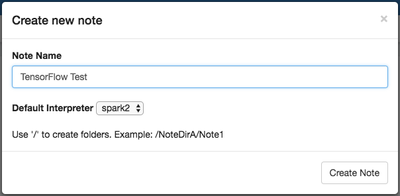

Click on the Create new note link. You can give the notebook any descriptive name you like. Select spark2 as the default interpreter. You should see something similar to this:

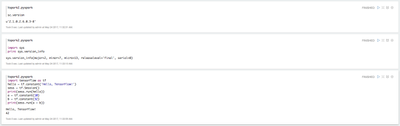

Your notebook will start with a blank paragraph. For the first paragraph, let's test the version of Spark we are using. Enter the following in the first paragraph:

%spark2.pyspark

sc.version

Now click the run button for the paragraph. You should see something similar to this:

u'2.1.0.2.6.0.3-8'

As you can see, we are using Spark 2.1. Now in the second paragraph, we'll test the version of Python. We already know the command line version is 2.7.13. Enter the following in the second paragraph:

%spark2.pyspark

import sys

print sys.version_info

Now click the run button for the paragraph. You should see something similar to this:

sys.version_info(major=2, minor=7, micro=13, releaselevel='final', serial=0)

As you can see, we are running Python version 2.7.13.
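A matching version number does not by itself prove that Zeppelin picked up the Anaconda install, since another Python 2.7.13 could be on the path. One way to check more directly is to print the interpreter path. The sketch below assumes the /opt/anaconda prefix used in this tutorial and that sys.executable reflects the zeppelin.pyspark.python setting; the helper name runs_under is hypothetical. Paste it into a paragraph that starts with %spark2.pyspark.

```python
import os
import sys

# Assumption: the recipe installed Anaconda under this prefix.
ANACONDA_PREFIX = "/opt/anaconda"


def runs_under(executable, prefix=ANACONDA_PREFIX):
    """Return True if the interpreter path lives inside the install prefix."""
    exe = os.path.realpath(executable)
    root = os.path.realpath(prefix)
    # Appending the separator keeps /opt/anaconda2 from matching /opt/anaconda.
    return exe.startswith(root + os.sep)


interpreter = sys.executable or ""
print("Interpreter: %s" % interpreter)
print("Under %s: %s" % (ANACONDA_PREFIX, runs_under(interpreter)))
```

If the second line prints False, the interpreter setting was probably not saved or the interpreter was not restarted.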

Now we can test TensorFlow. Enter the following in the third paragraph:

%spark2.pyspark

import tensorflow as tf

hello = tf.constant('Hello, TensorFlow!')

sess = tf.Session()

print(sess.run(hello))

a = tf.constant(10)

b = tf.constant(32)

print(sess.run(a + b))

This simple code comes from the TensorFlow website: [TensorFlow](https://www.tensorflow.org/versions/r0.10/get_started/os_setup#anaconda_installation). Now click the run button for the paragraph. You may see some warning messages the first time you run it, but you should also see the following output:

Hello, TensorFlow!

42

As you can see, TensorFlow is working from Zeppelin, which is using Spark 2.1 and Anaconda. If everything works properly, your notebook should look similar to this:

Admittedly this example is very basic, but it demonstrates the components are working together. For next steps, try running other TensorFlow code. Here are some examples you can work with: GitHub.

Review

If you have successfully followed along with this tutorial, you should have deployed an HDP 2.6 cluster in the cloud with Anaconda installed under /opt/anaconda and added the TensorFlow Python modules using a Cloudbreak recipe. You should have created a Zeppelin notebook which uses Anaconda Python, Spark 2.1 and TensorFlow.