Cloudera Community: Community Articles

Using PutMongoRecord to put CSV into MongoDB (Apa...

Created on 10-13-2017 03:34 PM - edited 08-17-2019 10:39 AM

Objective

This tutorial demonstrates how to use the PutMongoRecord processor to easily put data from a CSV file into MongoDB.

Note: The PutMongoRecord processor was introduced in NiFi 1.4.0. As such, this tutorial requires NiFi 1.4.0 or later.

Environment

This tutorial was tested using the following environment and components:

- Mac OS X 10.11.6

- Apache NiFi 1.4.0

- MongoDB 3.4.9

PutMongoRecord (CSVReader)

Demo Configuration

MongoDB

For my environment, I had a local MongoDB 3.4.9 instance installed.

Start MongoDB and create a database "hcc" and a collection "movies" for use with this tutorial:

```
> use hcc
switched to db hcc
> db.createCollection("movies")
{ "ok" : 1 }
> show collections
movies
```

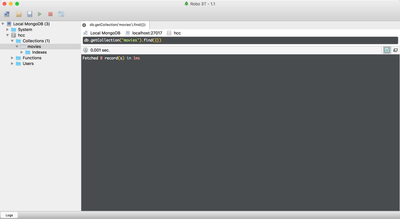

I like to use Robo 3T to manage/monitor my MongoDB instance:

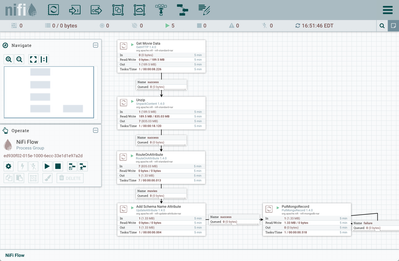

Initial Flow

A powerful feature of the record-oriented functionality in NiFi is the ability to re-use Record Readers and Writers. In conjunction with the Record processors, this makes it quick and easy to change data formats and data destinations.
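The reuse idea can be pictured outside NiFi with a small Python analogy. This is a sketch that assumes nothing about NiFi's actual Java interfaces: one CSV reader produces records, and the destination is a pluggable function. All function names below, and the list standing in for a database collection, are hypothetical.

```python
import csv
import io
import json
from typing import Callable, Dict, Iterable, Iterator, List

Record = Dict[str, str]


def csv_reader(text: str) -> Iterator[Record]:
    """Reader: parse CSV text (header row first) into one dict per row."""
    yield from csv.DictReader(io.StringIO(text))


def json_writer(records: Iterable[Record]) -> str:
    """Destination 1: serialize the records as a JSON array."""
    return json.dumps(list(records))


def make_list_sink(target: List[Record]) -> Callable[[Iterable[Record]], int]:
    """Destination 2: append records to `target`, a stand-in for a collection."""
    def sink(records: Iterable[Record]) -> int:
        before = len(target)
        target.extend(records)
        return len(target) - before  # number of records stored
    return sink


def run_flow(text: str, destination: Callable[[Iterable[Record]], object]) -> object:
    """Same reader every time; only the destination is swapped."""
    return destination(csv_reader(text))


data = "movieId,title,genres\n1,Toy Story (1995),Adventure|Animation\n"
print(run_flow(data, json_writer))
collection: List[Record] = []
print(run_flow(data, make_list_sink(collection)))
```

Swapping `json_writer` for the list-backed sink changes the destination without touching the reader, which mirrors replacing PublishKafkaRecord_0_10 with PutMongoRecord while keeping the same CSVReader.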

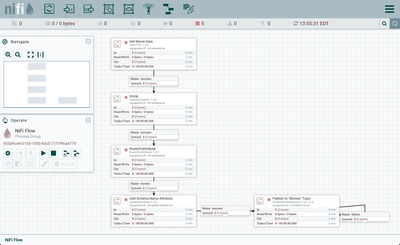

For example, let's assume you have the flow from the article "Using PublishKafkaRecord_0_10 (CSVReader/JSONWriter)" working, with all of the necessary controller services enabled.

(Note: The template for this flow can be found in the article as well as step-by-step instructions on how to configure it.)

As currently configured, the flow:

1. Pulls a .zip file of movie data (titles, tags, ratings, etc.) from a website.

2. Unzips the file.

3. Passes only the movie title information on to the rest of the flow.

4. Adds Schema Name "movies" as an attribute to the flowfile.

5. Uses PublishKafkaRecord_0_10 to convert the flowfile contents from CSV to JSON and publish to a Kafka topic.
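A rough Python sketch of steps 2 through 4 follows, with step 1 (the HTTP download) left out so it runs offline. It assumes the archive contains a file named `movies.csv` holding the title data, and it models a flowfile as a pair of an attribute dict and raw bytes. The attribute key `schema.name` is an assumption about where the schema name is stored, and the helper names are hypothetical.

```python
import io
import zipfile
from typing import Dict, List, Tuple

# A "flowfile" here is just (attributes, content); a simplification of NiFi's model.
FlowFile = Tuple[Dict[str, str], bytes]


def unzip(archive: bytes) -> List[FlowFile]:
    """Step 2: emit one flowfile per file in the archive."""
    flowfiles: List[FlowFile] = []
    with zipfile.ZipFile(io.BytesIO(archive)) as zf:
        for name in zf.namelist():
            if name.endswith("/"):  # skip directory entries
                continue
            basename = name.rsplit("/", 1)[-1]
            flowfiles.append(({"filename": basename}, zf.read(name)))
    return flowfiles


def keep_titles(flowfiles: List[FlowFile], wanted: str = "movies.csv") -> List[FlowFile]:
    """Step 3: pass on only the movie title file (file name assumed)."""
    return [ff for ff in flowfiles if ff[0]["filename"] == wanted]


def add_schema_name(flowfiles: List[FlowFile], schema_name: str = "movies") -> List[FlowFile]:
    """Step 4: attach the schema name that the record reader will look up."""
    return [({**attrs, "schema.name": schema_name}, content) for attrs, content in flowfiles]
```

Step 5 would then hand each remaining flowfile to a record-aware destination, which is the part this tutorial replaces.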

Instead of publishing that movie data to Kafka, we now want to put it in MongoDB. The following steps will demonstrate how to do that quickly and simply by replacing the PublishKafkaRecord processor with a PutMongoRecord processor and re-using the CSVReader that references an Avro Schema Registry where the movies schema is defined.
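The CSVReader relies on the movies schema to turn each CSV line into a typed record. The sketch below imitates that step in plain Python. The schema shown is a hypothetical stand-in written in Avro's JSON form (the field names `movieId`, `title`, `genres` and their types are assumptions, not copied from the original flow), and the type handling covers only the few Avro primitive types it needs.

```python
import csv
import io
from typing import Any, Dict, List

# Hypothetical stand-in for the "movies" schema in the Avro Schema Registry.
# Field names and types are assumptions for illustration only.
MOVIES_SCHEMA = {
    "type": "record",
    "name": "movies",
    "fields": [
        {"name": "movieId", "type": "long"},
        {"name": "title", "type": ["null", "string"]},
        {"name": "genres", "type": ["null", "string"]},
    ],
}

_CASTS = {"long": int, "int": int, "double": float, "float": float, "string": str}


def _cast(value: str, avro_type: Any) -> Any:
    """Convert one CSV cell to the Python type implied by an Avro type."""
    if isinstance(avro_type, list):  # a union such as ["null", "string"]
        if value == "" and "null" in avro_type:
            return None
        avro_type = next(t for t in avro_type if t != "null")
    return _CASTS[avro_type](value)


def read_records(text: str, schema: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Parse CSV with a header row into typed records, in schema field order."""
    rows = csv.DictReader(io.StringIO(text))
    return [
        {f["name"]: _cast(row[f["name"]], f["type"]) for f in schema["fields"]}
        for row in rows
    ]
```

`csv.DictReader` handles quoted values such as `"American President, The (1995)"`, which a naive split on commas would break apart.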

PutMongoRecord Flow Setup

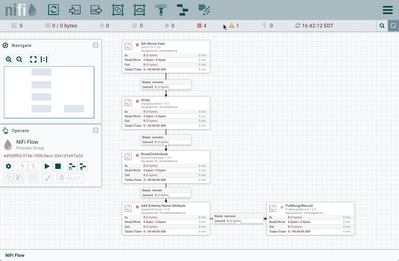

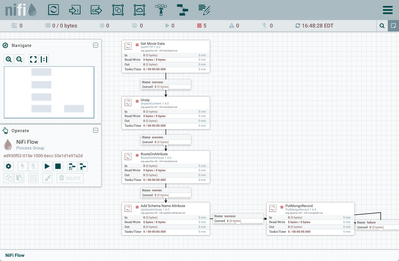

1. Delete the connection between the UpdateAttribute and PublishKafkaRecord_0_10 processors. Now delete the PublishKafkaRecord_0_10 processor or set it off to the side.

2. Add a PutMongoRecord processor to the canvas.

3. Connect the UpdateAttribute processor to the PutMongoRecord processor.

4. Open the Configure dialog for the PutMongoRecord processor. On the Settings tab, auto-terminate the "success" relationship.

5. On the canvas, make a "failure" relationship connection from the PutMongoRecord to itself.

6. On the Properties tab:

- Add "mongodb://localhost:27017" for the Mongo URI property

- Add "hcc" for the Mongo Database Name property

- Add "movies" for the Mongo Collection Name property

- Select "CSVReader" for the Record Reader property. Since this reader and its related schema were already defined for the original PublishKafka flow, they can simply be re-used.

Select "Apply".

The flow is ready to run.
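Conceptually, PutMongoRecord reads each record with the configured Record Reader and writes it as a document to the configured database and collection. The following Python sketch mirrors that using the property values above. `FakeCollection` is a hypothetical in-memory stand-in whose `insert_many(documents)` method has the same call shape as pymongo's `Collection.insert_many`, and the batching is an illustrative choice, not a description of the processor's internals.

```python
from typing import Any, Dict, Iterable, List, Tuple
from urllib.parse import urlparse

# The property values entered on the Properties tab.
CONFIG = {
    "Mongo URI": "mongodb://localhost:27017",
    "Mongo Database Name": "hcc",
    "Mongo Collection Name": "movies",
}


def mongo_target(config: Dict[str, str]) -> Tuple[str, int, str, str]:
    """Split the configuration into host, port, database, and collection."""
    uri = urlparse(config["Mongo URI"])
    return uri.hostname, uri.port, config["Mongo Database Name"], config["Mongo Collection Name"]


class FakeCollection:
    """Hypothetical in-memory stand-in for a MongoDB collection."""

    def __init__(self) -> None:
        self.docs: List[Dict[str, Any]] = []

    def insert_many(self, documents: Iterable[Dict[str, Any]]) -> None:
        # Same call shape as pymongo's Collection.insert_many(documents).
        self.docs.extend(dict(d) for d in documents)


def put_records(records: Iterable[Dict[str, Any]], collection: Any, batch_size: int = 100) -> int:
    """Write records to the collection in batches; return the number written."""
    batch: List[Dict[str, Any]] = []
    written = 0
    for record in records:
        batch.append(record)
        if len(batch) == batch_size:
            collection.insert_many(batch)
            written += len(batch)
            batch = []
    if batch:  # flush the final partial batch
        collection.insert_many(batch)
        written += len(batch)
    return written
```

Against a real server, with pymongo installed, `MongoClient(CONFIG["Mongo URI"])["hcc"]["movies"]` would return a collection that could be passed to `put_records` in place of `FakeCollection()`.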

Flow Results

Start the flow.

(Note: If you had run the original PublishKafka flow previously, don't forget to clear the state of the GetHTTP processor so that the movie data zip is retrieved again.)
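One way to picture why clearing state matters: an HTTP client that remembers a validator from its previous download (such as an ETag or Last-Modified value) can send it back as a conditional request, and the server may then answer "304 Not Modified" without resending the file. The sketch below assumes GetHTTP keeps state along these lines; the state keys are hypothetical, while `If-None-Match` and `If-Modified-Since` are the standard HTTP request headers.

```python
from typing import Dict


def conditional_headers(state: Dict[str, str]) -> Dict[str, str]:
    """Build HTTP cache-validation headers from previously saved state.

    The keys "etag" and "last_modified" are hypothetical names for the
    values remembered from the last successful download.
    """
    headers: Dict[str, str] = {}
    if "etag" in state:
        headers["If-None-Match"] = state["etag"]
    if "last_modified" in state:
        headers["If-Modified-Since"] = state["last_modified"]
    return headers
```

Clearing the state corresponds to calling the function with an empty dict, which yields no validators and therefore an unconditional request that downloads the zip again.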

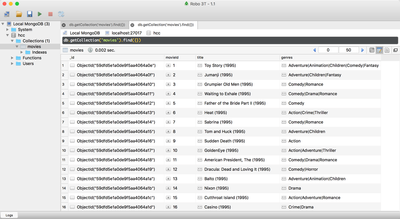

The movie data is now in MongoDB.

Helpful Links

Here are some links to check out if you are interested in other flows which utilize the record-oriented processors and controller services in NiFi:

- Convert CSV to JSON, Avro, XML using ConvertRecord (Apache NiFi 1.2+)

- Installing a local Hortonworks Registry to use with Apache NiFi

- Running SQL on FlowFiles using QueryRecord Processor (Apache NiFi 1.2+)

- Using PublishKafkaRecord_0_10 (CSVReader/JSONWriter) in Apache NiFi 1.2+

- Using PutElasticsearchHttpRecord (CSVReader) in Apache NiFi 1.2+

- Using PartitionRecord (GrokReader/JSONWriter) to Parse and Group Log Files (Apache NiFi 1.2+)

- Geo Enrich NiFi Provenance Event Data using LookupRecord

Created on 01-29-2018 06:53 PM - edited 08-17-2019 10:39 AM

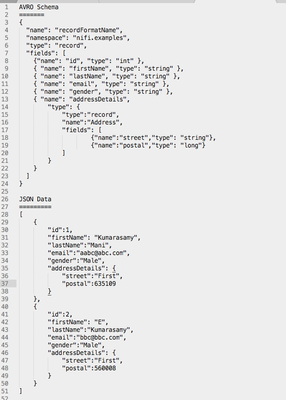

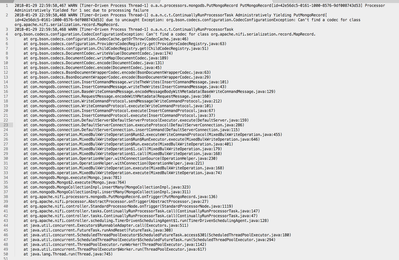

Hi Andrew, I have used PutMongoRecord with nested JSON objects. As soon as my JSON has a nested structure, the processor fails with org.bson.codecs.configuration.CodecConfigurationException and the insert into MongoDB fails. Please find the Avro schema in the attached image. The JSON validates correctly against the schema.

I have attached the stack trace for the error as well.

Am I missing anything in the Avro Schema Registry configuration, or in any other configuration?