Using Recipes to Simplify Data Hub Setup Tasks

Cloudera Community > Community Articles


Created on 08-16-2022 07:59 AM - edited 09-22-2022 07:26 AM

Oftentimes data hubs need additional setup steps to support your specific workload or use case. For me, this is usually something like adding a jar to enable database connectivity on a Data Flow or Streams Messaging hub. Recently I was working with Kafka Connect on a Streams Messaging data hub for MySQL change data capture. This required uploading a particular jar file to each node in the cluster, placing it in a specific folder, renaming it, and changing its permissions. The tasks themselves weren't difficult, but by the time I had done them on the third broker node, I was ready for a better solution. By the end of this article, we will have that better solution implemented.

Enter CDP Recipes. Recipes are essentially Bash or Python scripts that execute while the cluster is spinning up. They are analogous to the "user data" you can supply when launching an EC2 instance: commands that initialize the instance so it's ready when you first sign in. Recipes run as root, so there is little limit to what you can do in one. You can also specify at which point in the cluster's lifecycle your recipe executes, including just before termination, in case there are cleanup steps you need to perform before the cluster snuffs it. The available points are:

- after cluster install

- before Cloudera Manager startup

- after Cloudera Manager startup

- before cluster termination

Official CDP Recipe Documentation: https://docs.cloudera.com/data-hub/cloud/recipes/topics/mc-creating-custom-scripts-recipes.html
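For a sense of what a recipe looks like, here is a minimal, hypothetical Bash skeleton; it is not an official Cloudera template. It stops at the first failing command and writes timestamped lines to a log file, so you can later confirm on a node that it ran. The log path /tmp/recipe-example.log and the RECIPE_LOG variable are illustrative choices, not anything CDP defines.

```bash
#!/bin/bash
# Hypothetical minimal recipe skeleton; not an official Cloudera template.
# Exit on the first failing command, unset variable, or failed pipeline stage.
set -euo pipefail

# Illustrative log location; set RECIPE_LOG to override it.
LOG_FILE="${RECIPE_LOG:-/tmp/recipe-example.log}"

log() {
  # Prefix each entry with a UTC timestamp and the node name.
  echo "$(date -u +%Y-%m-%dT%H:%M:%SZ) $(uname -n) $*" >> "$LOG_FILE"
}

# Recipes run as root, so this should record uid=0 on a Data Hub node.
log "recipe started as uid=$(id -u)"

# ... your setup commands go here (no sudo needed) ...

log "recipe finished"
```

Writing to a log file rather than only to stdout is a design choice: it leaves a record on each node of whether and when the recipe ran.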

So let's create a recipe and put it to use.

Step 0: Decide what your recipe needs to do

My goal was to automate the steps needed to get a Streams Messaging data hub ready for change data capture (CDC) from MySQL. That entire process is detailed in this article, but it amounts to uploading a file and changing its permissions. As a shell script, it looks something like the following: download the file, move it to the right folder, rename it, and change its permissions. Remember, recipes run as root, so you don't need to include sudo commands.

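```bash
#!/bin/bash
# Stop at the first failing command so a failed download doesn't go unnoticed.
set -euo pipefail

# Download the MySQL JDBC driver into the Kafka Connect JDBC folder.
wget https://repo1.maven.org/maven2/mysql/mysql-connector-java/8.0.29/mysql-connector-java-8.0.29.jar -P /var/lib/kafka_connect_jdbc/

# Rename it to mysql-connector-java.jar, dropping the version number.
mv /var/lib/kafka_connect_jdbc/mysql-connector-java-8.0.29.jar /var/lib/kafka_connect_jdbc/mysql-connector-java.jar

# Owner can read/write; everyone else can read (rw-r--r--).
chmod 644 /var/lib/kafka_connect_jdbc/mysql-connector-java.jar
```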

Step 1: Create a Recipe

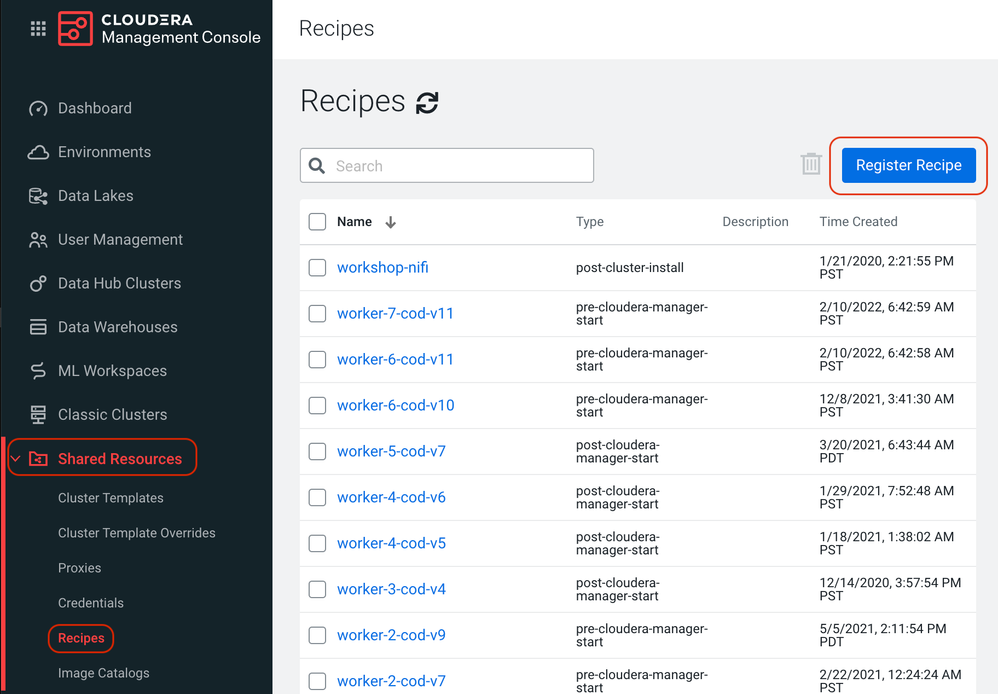

From the CDP Management Console, navigate to Recipes, under Shared Resources. Then click Register Recipe.

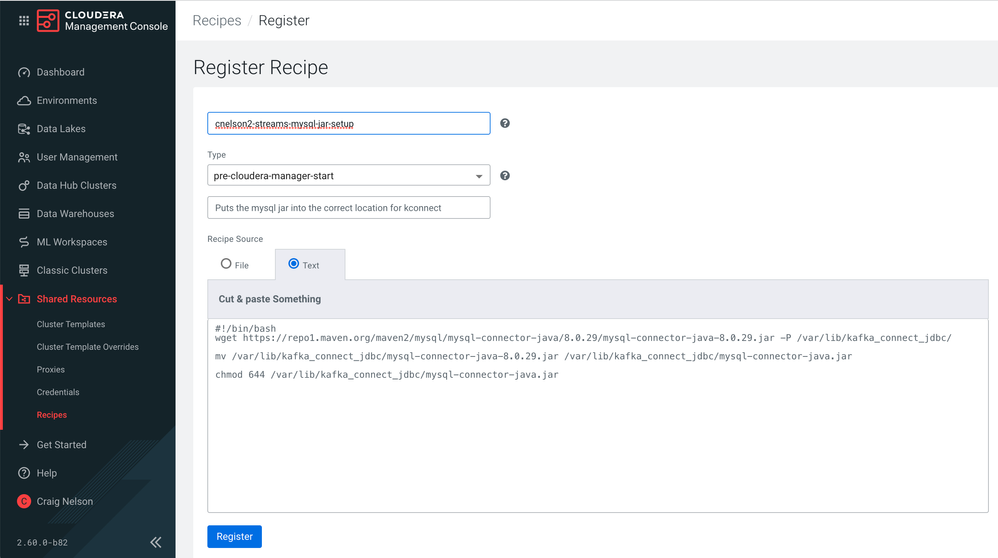

Next, enter the name of your recipe in the first box, which contains the text "Enter Environment Name". Don't ask me why it says 'Environment' rather than 'Recipe'; I don't have a good answer. Maybe by the time you read this it will have changed to something more intuitive.

Then select the type of recipe, which is really just a way of defining when your recipe will run. Details about each type can be found in the documentation. This particular set of actions only takes effect once the Kafka service is (re)started, so I chose to run my recipe before Cloudera Manager starts, which guarantees it runs before Kafka has been started.

A description is optional, but probably a best practice as you collect more recipes or if you expect other people on your team to use them. Lastly, either upload your script or paste it in as plain text, and then click Register.
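Because a recipe only runs once the cluster is provisioning, a typo in the script may not surface until then. A cheap safeguard is to check the script locally before registering it. The `check_recipe` function below is hypothetical, not part of CDP: it only confirms that the first line is a shebang (as in the script from Step 0) and asks `bash -n` to parse the file without running any of its commands.

```bash
#!/bin/bash
# Hypothetical pre-registration check for a Bash recipe (not a CDP feature).
set -euo pipefail

check_recipe() {
  local script="$1"
  # The first line should be a shebang such as #!/bin/bash.
  if [[ "$(head -n 1 "$script")" != '#!'* ]]; then
    echo "missing shebang: $script" >&2
    return 1
  fi
  # bash -n parses the whole file without executing any of its commands.
  bash -n "$script"
}
```

For example, `check_recipe mysql-connector-recipe.sh` (a hypothetical file name) succeeds for the Step 0 script and prints the parse error otherwise. Note that `bash -n` only catches syntax errors; a wrong URL or path will still only show up when the recipe runs.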

Step 2: Create a new Data Hub using your Recipe

My recipe is designed to work with a Streams Messaging data hub, so we'll have to create one. However, there is a new step we must take before clicking the Provision Cluster button.

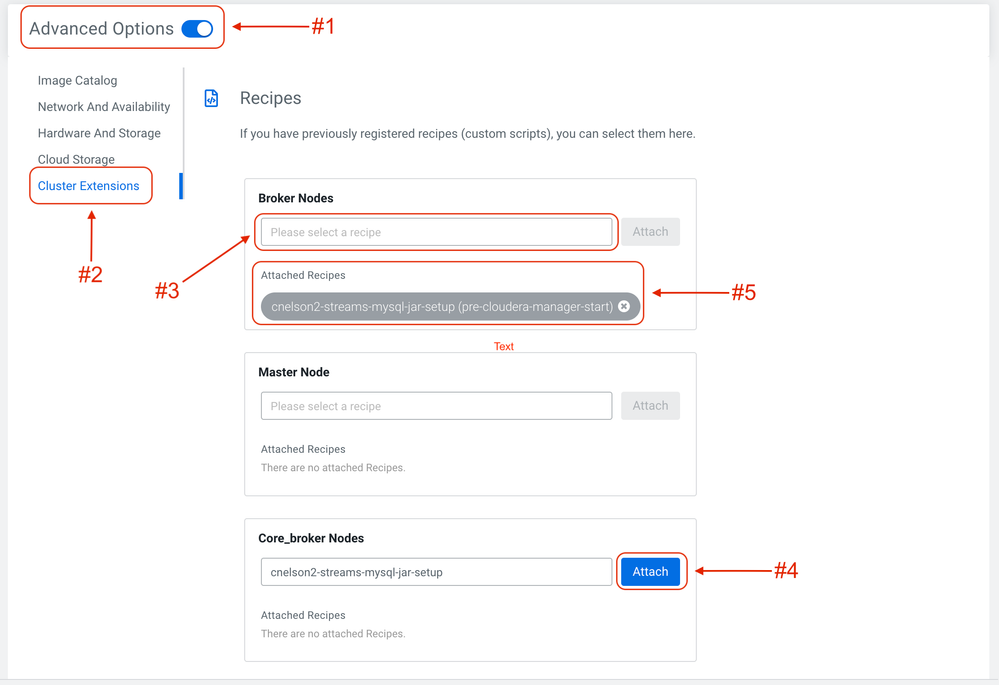

- Under Advanced Options (#1 in the diagram), click Cluster Extensions (#2 in the diagram).

- Our recipe needs to run on the broker nodes, so let's attach the recipe to brokers and core brokers. In the box labeled "Please select a recipe" (#3 in the diagram) you can start typing the name of your recipe until you find it.

- Once you have your recipe selected, click Attach (#4 in the diagram).

- It will then become a chip under the Attached Recipes section for that node type (#5 in the diagram).

- Do this for every node type on which you want your recipe applied.

- Now provision your cluster.

Step 3: Verify the Results

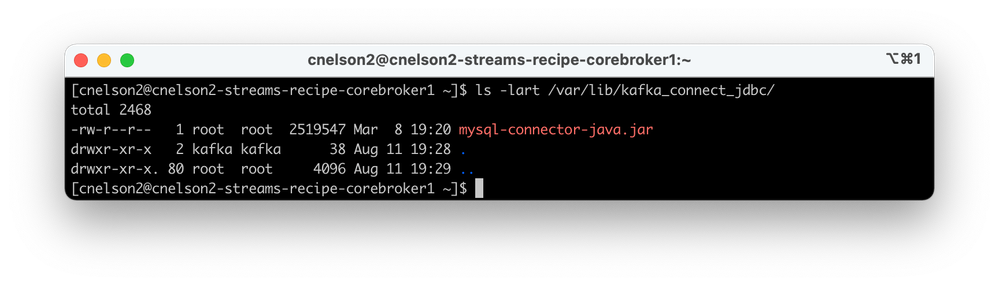

The most direct way to validate that your recipe did its job is to SSH into each node and check that the file is where you expect it, with the proper permissions, since that is the crux of what this recipe does.

Here you can see that the jar file is in the /var/lib/kafka_connect_jdbc folder, has been renamed to mysql-connector-java.jar, and has permissions 644, which is exactly what the recipe was supposed to do.
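The manual check above can also be scripted. The sketch below is a hypothetical helper, not something CDP provides: `verify_jar` checks that a file exists and has the expected octal mode using GNU `stat -c '%a'` (available on typical Linux nodes), and `verify_remote` runs a similar check over SSH on a list of hosts. The SSH user and host names are placeholders you would supply, and key-based SSH access to the nodes is assumed.

```bash
#!/bin/bash
# Hypothetical helpers for checking the recipe's result; not part of CDP.
set -euo pipefail

# Path and mode the recipe in this article is expected to produce.
JAR_PATH=/var/lib/kafka_connect_jdbc/mysql-connector-java.jar
EXPECTED_MODE=644

# Local check, run on a node. Returns non-zero if the file is missing or the mode differs.
verify_jar() {
  local jar="$1" expected="$2" mode
  if [[ ! -f "$jar" ]]; then
    echo "MISSING $jar"
    return 1
  fi
  # GNU stat: %a prints the permission bits in octal, e.g. 644.
  mode="$(stat -c '%a' "$jar")"
  if [[ "$mode" != "$expected" ]]; then
    echo "WRONG MODE $mode (expected $expected) $jar"
    return 1
  fi
  echo "OK $jar ($mode)"
}

# Remote check: print mode, owner, and path of the jar on each host.
# Usage: verify_remote SSH_USER HOST...   (assumes key-based SSH access)
verify_remote() {
  local user="$1" host
  shift
  for host in "$@"; do
    echo "== $host"
    ssh "$user@$host" "stat -c '%a %U %n' $JAR_PATH"
  done
}
```

On a node, `verify_jar "$JAR_PATH" "$EXPECTED_MODE"` should print an OK line. From your workstation, something like `verify_remote myuser broker-1.example.com broker-2.example.com` (placeholder user and host names) prints the mode, owner, and path for each host.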

The Thrilling Conclusion

With very little effort, you should now be able to create and use a recipe when spinning up a data hub. I've shared how I used a recipe; let me know how you are using recipes by commenting below. I'm sure there is some really cool stuff you can do with recipes, so don't be afraid to share what you've been able to do.