Using Transparent Data Encryption in HDFS (Non-Ke...

Created on 06-29-2016 01:54 PM - edited 08-17-2019 11:51 AM

Security is a key element when discussing Big Data, and a common security requirement is data encryption. By following the instructions below, you'll be able to set up transparent data encryption in HDFS on designated directories, known as encryption zones (EZ).

Before starting this step-by-step tutorial, make sure the following three HDP services are installed:

1) HDFS

2) Ranger

3) Ranger KMS

Step 1: Prepare the environment

As explained in the HDFS "Data at Rest" Encryption manual, prepare the hosts as follows:

a) If using Oracle JDK, verify that JCE is installed (OpenJDK includes JCE by default)

If the server running Ranger KMS uses Oracle JDK, you must install JCE, which Ranger KMS needs in order to run. Instructions on installing JCE can be found here.

b) CPU Support for AES-NI optimization

AES-NI optimization requires an extended CPU instruction set for AES hardware acceleration.

There are several ways to check for this; for example:

grep aes /proc/cpuinfo

Look for a flags line that contains 'aes'.
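The check above can also be scripted, for example when preparing many hosts. The following is a minimal sketch, assuming bash and GNU grep; the function name `has_aes_ni` is hypothetical. It is slightly stricter than the plain grep: it only looks at `flags` lines and matches `aes` as a whole word, and it takes an optional file path so it can be tried against sample data.

```shell
#!/usr/bin/env bash
# has_aes_ni (hypothetical helper): succeed (exit 0) if a cpuinfo-format
# file lists the "aes" CPU flag. Defaults to /proc/cpuinfo.
has_aes_ni() {
  local cpuinfo="${1:-/proc/cpuinfo}"
  # Keep only the "flags" lines, then match "aes" as a whole word so that
  # a flag such as "vaes" is not mistaken for "aes" on its own.
  grep -E '^flags[[:space:]]*:' "$cpuinfo" | grep -qw 'aes'
}

# Example:
#   if has_aes_ni; then echo "AES-NI available"; else echo "AES-NI not found"; fi
```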

c) Library Support for AES-NI optimization

You will need a version of the libcrypto.so library that supports hardware acceleration, such as OpenSSL 1.0.1e. (Many OS versions have an older version of the library that does not support AES-NI.)

A version of the libcrypto.so library with AES-NI support must be installed on HDFS cluster nodes and MapReduce client hosts, that is, on any host from which you issue HDFS or MapReduce requests. The following instructions describe how to install and configure the libcrypto.so library.

RHEL/CentOS 6.5 or later

On HDP cluster nodes, the installed version of libcrypto.so supports AES-NI, but you will need to make sure that the symbolic link exists:

sudo ln -s /usr/lib64/libcrypto.so.1.0.1e /usr/lib64/libcrypto.so
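Running `ln -s` a second time fails with a "File exists" error once the link is present, which gets in the way when the same preparation is repeated across hosts. A minimal idempotent sketch, assuming bash and the RHEL/CentOS paths above; the function name `ensure_libcrypto_link` is hypothetical, and it must run as root (for example from a script invoked with sudo):

```shell
#!/usr/bin/env bash
# ensure_libcrypto_link (hypothetical helper): create the libcrypto.so
# symlink only if nothing exists at the link path yet.
ensure_libcrypto_link() {
  local target="${1:-/usr/lib64/libcrypto.so.1.0.1e}"
  local link="${2:-/usr/lib64/libcrypto.so}"
  # -e follows symlinks; -L also catches a dangling symlink. Either way, leave it alone.
  if [ -e "$link" ] || [ -L "$link" ]; then
    echo "already present: $link"
    return 0
  fi
  # Refuse to create a link that would point at a missing library.
  if [ ! -e "$target" ]; then
    echo "missing target: $target" >&2
    return 1
  fi
  ln -s "$target" "$link" && echo "created: $link -> $target"
}
```

Calling it with no arguments uses the same paths as the `ln -s` command above.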

On MapReduce client hosts, install the openssl-devel package:

sudo yum install openssl-devel

d) Verify AES-NI support

To verify that a client host is ready to use the AES-NI instruction set optimization for HDFS encryption, use the following command:

hadoop checknative

You should see a response similar to the following:

15/08/12 13:48:39 INFO bzip2.Bzip2Factory: Successfully loaded & initialized native-bzip2 library system-native
14/12/12 13:48:39 INFO zlib.ZlibFactory: Successfully loaded & initialized native-zlib library
Native library checking:
hadoop: true /usr/lib/hadoop/lib/native/libhadoop.so.1.0.0
zlib: true /lib64/libz.so.1
snappy: true /usr/lib64/libsnappy.so.1
lz4: true revision:99
bzip2: true /lib64/libbz2.so.1
openssl: true /usr/lib64/libcrypto.so
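To check many client hosts without reading the output by eye, the `openssl` entry can be tested directly. A minimal sketch, assuming bash and GNU grep; `openssl_native_ok` is a hypothetical helper that reads `hadoop checknative` output on standard input and looks only at the openssl line rather than at the command's exit status:

```shell
#!/usr/bin/env bash
# openssl_native_ok (hypothetical helper): read `hadoop checknative` output
# on stdin and succeed only if the openssl entry reports "true".
openssl_native_ok() {
  grep -Eq '(^|[[:space:]])openssl:[[:space:]]+true([[:space:]]|$)'
}

# Example (on a host with the Hadoop client installed). Both output streams
# are merged so no line is missed, whichever stream it was written to:
#   hadoop checknative 2>&1 | openssl_native_ok && echo "AES-NI ready"
```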

Step 2: Create an Encryption key

This step outlines how to create an encryption key using Ranger KMS.

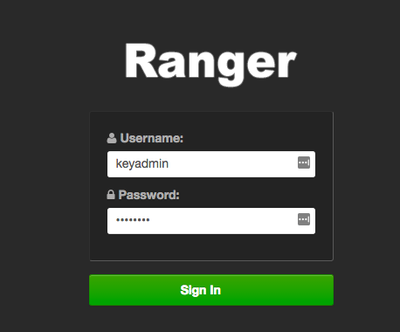

a) Log in to Ranger

* To access Ranger KMS (Encryption), log in with the username "keyadmin". The default password is "keyadmin"; remember to change this password.

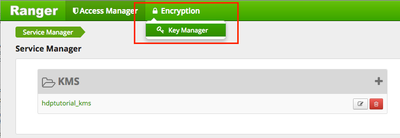

b) Choose Encryption > Key Manager

* In this tutorial, "hdptutorial" is the name of the HDP cluster. Your service name will differ, depending on your cluster name.

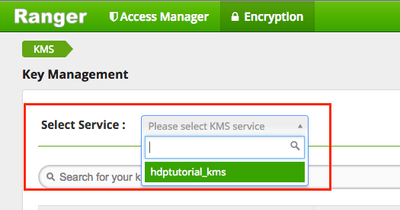

c) Choose Select Service > yourclustername_kms

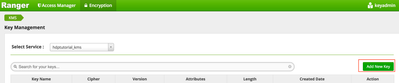

d) Choose "Add New Key"

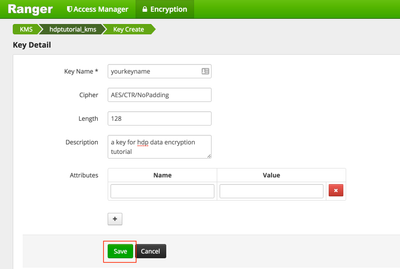

e) Create the new key

* Name: yourkeyname (the key name used in the following steps)

* Length: either 128 or 256. A length of 256 requires JCE to be installed on all hosts in the cluster. From the documentation: "The default key size is 128 bits. The optional -size parameter supports 256-bit keys, and requires the Java Cryptography Extension (JCE) Unlimited Strength Jurisdiction Policy File on all hosts in the cluster. For installation information, see the Ambari Security Guide."
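The key can also be created from the command line with `hadoop key create`, whose `-size` option is the parameter the quoted documentation refers to. A minimal sketch, assuming the client host's key provider already points at Ranger KMS and the calling user has been granted the Create permission in a Ranger KMS policy; the wrapper name `create_tde_key` is hypothetical:

```shell
#!/usr/bin/env bash
# create_tde_key (hypothetical wrapper): validate the key length, then create
# the key with the Hadoop key shell. Assumes the key provider configured on
# this host is Ranger KMS and the caller has the Create permission in Ranger.
create_tde_key() {
  local name="$1"
  local size="${2:-128}"   # 128 bits is the default key size
  if [ -z "$name" ]; then
    echo "usage: create_tde_key <keyname> [128|256]" >&2
    return 2
  fi
  case "$size" in
    128|256) ;;            # the two lengths offered in the Ranger UI
    *) echo "unsupported key length: $size" >&2; return 2 ;;
  esac
  # A 256-bit key needs the JCE Unlimited Strength policy files on every host.
  hadoop key create "$name" -size "$size"
}

# Example: create_tde_key yourkeyname 256 && hadoop key list -metadata
```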

Step 3: Add Ranger KMS policies for encrypting the directory

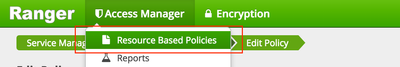

a) Log in to Ranger

* To access Ranger KMS (Encryption), log in with the username "keyadmin". The default password is "keyadmin"; remember to change this password.

b) Choose Access Manager > Resource Based Policies

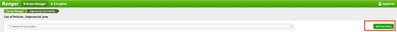

c) Choose Add New Policy

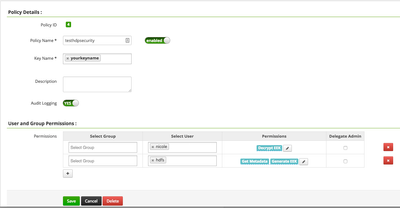

d) Create a policy

- The user hdfs must be granted GET_METADATA and GENERATE_EEK: whichever user accesses the zone, HDFS calls the KMS as the user hdfs in the background

- The user "nicole" is a custom user I created to read and write data using the key "yourkeyname"
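If you prefer scripting over the UI, Ranger Admin also exposes a public REST API for policies. The sketch below only builds the JSON for a policy equivalent to the one described above. The service name `hdptutorial_kms`, the policy name, and giving "nicole" the `decrypteek` access are assumptions (reading or writing a file in an encryption zone requires the client to decrypt that file's encrypted data encryption key); check the access types and the endpoint against your Ranger version before posting it. The function name `kms_policy_json` is hypothetical.

```shell
#!/usr/bin/env bash
# kms_policy_json (hypothetical helper): print a Ranger policy (JSON) that
# lets hdfs get metadata / generate EEKs and lets one user decrypt EEKs
# for a single key. Arguments: service name, key name, user name.
kms_policy_json() {
  local service="$1" key="$2" user="$3"
  cat <<EOF
{
  "service": "${service}",
  "name": "${key}-policy",
  "isEnabled": true,
  "resources": {
    "keyname": { "values": ["${key}"], "isExcludes": false, "isRecursive": false }
  },
  "policyItems": [
    {
      "users": ["hdfs"],
      "accesses": [
        { "type": "getmetadata", "isAllowed": true },
        { "type": "generateeek", "isAllowed": true }
      ]
    },
    {
      "users": ["${user}"],
      "accesses": [ { "type": "decrypteek", "isAllowed": true } ]
    }
  ]
}
EOF
}

# Example (assumed host, port, and credentials; KMS policies are managed by keyadmin):
#   kms_policy_json hdptutorial_kms yourkeyname nicole |
#     curl -u keyadmin:<password> -H 'Content-Type: application/json' \
#       -X POST -d @- http://<ranger-host>:6080/service/public/v2/api/policy
```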

Step 4: Create an Encryption Zone

a) Create a new directory

hdfs dfs -mkdir /zone_encr

* Leave the directory empty until it has been turned into an encryption zone, since an encryption zone can only be created on an empty directory. Creating the directory as a superuser is recommended.

b) Create an encryption zone

hdfs crypto -createZone -keyName yourkeyname -path /zone_encr

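* In this tutorial, the user "nicole" from Step 3 was used to create the encryption zone. Creating an encryption zone requires HDFS superuser privileges, so the user running this command must be a superuser (or a member of the superuser group).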
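Steps (a) and (b) can be combined into one function. This is a minimal sketch modeled on the example in the Apache Hadoop transparent encryption documentation, which creates the zone as a superuser and then changes its owner to the regular user who will work in it. It assumes bash, the `hdfs` client on the PATH, and that it runs as an HDFS superuser; the function name `make_zone` is hypothetical.

```shell
#!/usr/bin/env bash
# make_zone (hypothetical helper): create a new directory, turn it into an
# encryption zone, then optionally hand it to the user who will read/write it.
# Assumes it runs as an HDFS superuser.
make_zone() {
  local dir="$1" key="$2" owner="$3"
  # Plain -mkdir fails if the path exists, so the zone is always new and empty.
  hdfs dfs -mkdir "$dir" || return 1
  hdfs crypto -createZone -keyName "$key" -path "$dir" || return 1
  # Optional: only needed when the working user is not the superuser.
  if [ -n "$owner" ]; then
    hdfs dfs -chown "$owner" "$dir" || return 1
  fi
}

# Example: make_zone /zone_encr yourkeyname nicole
```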

c) Validate the encryption zone exists

hdfs crypto -listZones

* You must be a superuser (or a member of the superuser group, such as hdfs) to run this command.

The command should output:

[nicole@hdptutorial01 security]$ hdfs crypto -listZones
/zone_encr  yourkeyname
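To verify the zone in a script rather than by eye, the `-listZones` output can be matched against the expected path and key. A minimal sketch, assuming bash and awk; `zone_listed` is a hypothetical helper, and it assumes whitespace-separated output and zone paths without spaces:

```shell
#!/usr/bin/env bash
# zone_listed (hypothetical helper): read `hdfs crypto -listZones` output on
# stdin and succeed if the given path is listed with the given key name.
zone_listed() {
  local path="$1" key="$2"
  # Each output line is "<zone path> <key name>" separated by whitespace.
  awk -v p="$path" -v k="$key" '$1 == p && $2 == k { found = 1 } END { exit !found }'
}

# Example (as an HDFS superuser):
#   hdfs crypto -listZones | zone_listed /zone_encr yourkeyname && echo "zone OK"
```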

* You can now read and write data in your encrypted directory /zone_encr. If you receive any errors, including "IOException:" when creating the encryption zone in Step 4 (b), check /var/log/ranger/kms/kms.log on your Ranger KMS server; the cause is usually a permission issue accessing the key.
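A quick way to confirm that a regular user can actually use the zone is a write/read round trip. Decryption is transparent, so the bytes read back through HDFS should match what was written; a failure here may point to the key permissions from Step 3. A minimal sketch, assuming bash and the `hdfs` client on the PATH; `zone_roundtrip` and the test file name `tde_roundtrip.txt` are hypothetical:

```shell
#!/usr/bin/env bash
# zone_roundtrip (hypothetical helper): write a small local file into the
# encryption zone, read it back through HDFS, and compare the bytes.
# Run it as a user with decrypt access to the zone's key (e.g. "nicole").
zone_roundtrip() {
  local zone="${1:-/zone_encr}"
  local src rc
  src=$(mktemp) || return 1
  echo "TDE round-trip test $(date +%s)" > "$src"
  # -f overwrites a previous test file with the same name.
  if hdfs dfs -put -f "$src" "$zone/tde_roundtrip.txt" &&
     hdfs dfs -cat "$zone/tde_roundtrip.txt" | cmp -s - "$src"; then
    rc=0
  else
    rc=1
  fi
  rm -f "$src"
  return "$rc"
}

# Example: zone_roundtrip /zone_encr && echo "read/write OK"
```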

* To find out more about how transparent data encryption in HDFS works, refer to the Hortonworks blog here

Tested in HDP: 2.4.2