Using the new MXNet-Model-Server with Apache NiFi
Created on 12-27-2017 10:53 PM - edited 08-17-2019 09:36 AM

There is an open source model server for Apache MXNet that I recently tried. It's very easy to install and use. You must have Apache MXNet and Python installed.

Installation and Setup

Installing the Model Server is simple. I am using pip3 to make sure I install to Python 3, as I also have Python 2.7 installed on my laptop.

pip3 install mxnet --pre --user

pip3 install mxnet-model-server

pip3 install imdbpy

pip3 install dataset
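A quick way to confirm the install landed in the Python 3 environment is to check that the modules can be found. This is a minimal sketch, not part of the Model Server itself: `missing_modules` is a hypothetical helper, and the import names `mxnet` and `mms` are assumptions (the latter inferred from the `site-packages/mms/` paths in the server logs).

```python
import importlib.util


def missing_modules(names):
    """Return the module names from `names` that cannot be found for import."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # "mxnet" and "mms" are assumed import names for the packages installed above.
    missing = missing_modules(["mxnet", "mms"])
    print("Missing modules:", missing if missing else "none")
```

Run it with the same interpreter that pip3 installs into (python3 on my laptop), otherwise it may report packages as missing that are installed elsewhere.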

http://127.0.0.1:9999/api-description

{
  "description": {
    "host": "127.0.0.1:9999",
    "info": {
      "title": "Model Serving Apis",
      "version": "1.0.0"
    },
    "paths": {
      "/api-description": {
        "get": {
          "operationId": "api-description",
          "produces": ["application/json"],
          "responses": {
            "200": {
              "description": "OK",
              "schema": {
                "properties": {
                  "description": {
                    "type": "string"
                  }
                },
                "type": "object"
              }
            }
          }
        }
      },
      "/ping": {
        "get": {
          "operationId": "ping",
          "produces": ["application/json"],
          "responses": {
            "200": {
              "description": "OK",
              "schema": {
                "properties": {
                  "health": {
                    "type": "string"
                  }
                },
                "type": "object"
              }
            }
          }
        }
      },
      "/squeezenet/predict": {
        "post": {
          "consumes": ["multipart/form-data"],
          "operationId": "squeezenet_predict",
          "parameters": [
            {
              "description": "data should be image which will be resized to: [3, 224, 224]",
              "in": "formData",
              "name": "data",
              "required": "true",
              "type": "file"
            }
          ],
          "produces": ["application/json"],
          "responses": {
            "200": {
              "description": "OK",
              "schema": {
                "properties": {
                  "prediction": {
                    "type": "string"
                  }
                },
                "type": "object"
              }
            }
          }
        }
      }
    },
    "schemes": ["http"],
    "swagger": "2.0"
  }
}
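The api-description response is a Swagger 2.0 document, so the available endpoints can be read straight from its `paths` object. The sketch below assumes the response has already been fetched and parsed into a dict; `list_endpoints` is a hypothetical helper name, and the sample is a trimmed copy of the response that keeps only the keys the helper reads.

```python
import json


def list_endpoints(api_description):
    """Return sorted (METHOD, path) pairs from a parsed /api-description response."""
    paths = api_description["description"]["paths"]
    return sorted(
        (method.upper(), path)
        for path, methods in paths.items()
        for method in methods
    )


# Trimmed copy of the response, keeping only the structure this sketch needs.
sample = json.loads("""
{"description": {"paths": {
    "/api-description": {"get": {}},
    "/ping": {"get": {}},
    "/squeezenet/predict": {"post": {}}
}}}
""")

for method, path in list_endpoints(sample):
    print(method, path)
```

The only POST endpoint listed is the model's predict path, which is the one an image gets sent to.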

http://127.0.0.1:9999/ping

{
  "health": "healthy!"
}

Because each server can specify a port, you can have many running at once. I am running two: one for SSD and one for SqueezeNet. In the MXNet Model Server GitHub repository you will find a Model Zoo containing many image processing models and examples.

mxnet-model-server --models squeezenet=https://s3.amazonaws.com/model-server/models/squeezenet_v1.1/squeezenet_v1.1.model --service mms/model_service/mxnet_vision_service.py --port 9999

/usr/local/lib/python3.6/site-packages/mms/service_manager.py:14: DeprecationWarning: the imp module is deprecated in favour of importlib; see the module's documentation for alternative uses

  import imp

[INFO 2017-12-27 08:50:23,195 PID:50443 /usr/local/lib/python3.6/site-packages/mms/mxnet_model_server.py:__init__:87] Initialized model serving.

Downloading squeezenet_v1.1.model from https://s3.amazonaws.com/model-server/models/squeezenet_v1.1/squeezenet_v1.1.model.

[08:50:26] src/nnvm/legacy_json_util.cc:190: Loading symbol saved by previous version v0.8.0. Attempting to upgrade...

[08:50:26] src/nnvm/legacy_json_util.cc:198: Symbol successfully upgraded!

[INFO 2017-12-27 08:50:26,701 PID:50443 /usr/local/lib/python3.6/site-packages/mms/serving_frontend.py:add_endpoint:182] Adding endpoint: squeezenet_predict to Flask

[INFO 2017-12-27 08:50:26,701 PID:50443 /usr/local/lib/python3.6/site-packages/mms/serving_frontend.py:add_endpoint:182] Adding endpoint: ping to Flask

[INFO 2017-12-27 08:50:26,702 PID:50443 /usr/local/lib/python3.6/site-packages/mms/serving_frontend.py:add_endpoint:182] Adding endpoint: api-description to Flask

[INFO 2017-12-27 08:50:26,703 PID:50443 /usr/local/lib/python3.6/site-packages/mms/metric.py:start_recording:118] Metric errors for last 30 seconds is 0.000000

[INFO 2017-12-27 08:50:26,703 PID:50443 /usr/local/lib/python3.6/site-packages/mms/metric.py:start_recording:118] Metric requests for last 30 seconds is 0.000000

[INFO 2017-12-27 08:50:26,703 PID:50443 /usr/local/lib/python3.6/site-packages/mms/metric.py:start_recording:118] Metric cpu for last 30 seconds is 0.335000

[INFO 2017-12-27 08:50:26,704 PID:50443 /usr/local/lib/python3.6/site-packages/mms/metric.py:start_recording:118] Metric memory for last 30 seconds is 0.005696

[INFO 2017-12-27 08:50:26,704 PID:50443 /usr/local/lib/python3.6/site-packages/mms/metric.py:start_recording:118] Metric disk for last 30 seconds is 0.656000

[INFO 2017-12-27 08:50:26,704 PID:50443 /usr/local/lib/python3.6/site-packages/mms/metric.py:start_recording:118] Metric overall_latency for last 30 seconds is 0.000000

[INFO 2017-12-27 08:50:26,705 PID:50443 /usr/local/lib/python3.6/site-packages/mms/metric.py:start_recording:118] Metric inference_latency for last 30 seconds is 0.000000

[INFO 2017-12-27 08:50:26,705 PID:50443 /usr/local/lib/python3.6/site-packages/mms/metric.py:start_recording:118] Metric preprocess_latency for last 30 seconds is 0.000000

[INFO 2017-12-27 08:50:26,720 PID:50443 /usr/local/lib/python3.6/site-packages/mms/mxnet_model_server.py:start_model_serving:101] Service started successfully.

[INFO 2017-12-27 08:50:26,720 PID:50443 /usr/local/lib/python3.6/site-packages/mms/mxnet_model_server.py:start_model_serving:102] Service description endpoint: 127.0.0.1:9999/api-description

[INFO 2017-12-27 08:50:26,720 PID:50443 /usr/local/lib/python3.6/site-packages/mms/mxnet_model_server.py:start_model_serving:103] Service health endpoint: 127.0.0.1:9999/ping

[INFO 2017-12-27 08:50:26,730 PID:50443 /usr/local/lib/python3.6/site-packages/werkzeug/_internal.py:_log:87] * Running on http://127.0.0.1:9999/ (Press CTRL+C to quit)

For the SSD example, I forked the AWS GitHub repository (https://github.com/awslabs/mxnet-model-server.git), changed to the examples/ssd directory, and followed the setup instructions there to prepare the model.

mxnet-model-server --models SSD=resnet50_ssd_model.model --service ssd_service.py --port 9998

/usr/local/lib/python3.6/site-packages/mms/service_manager.py:14: DeprecationWarning: the imp module is deprecated in favour of importlib; see the module's documentation for alternative uses

  import imp

[INFO 2017-12-27 09:02:22,800 PID:55345 /usr/local/lib/python3.6/site-packages/mms/mxnet_model_server.py:__init__:87] Initialized model serving.

[INFO 2017-12-27 09:02:24,510 PID:55345 /usr/local/lib/python3.6/site-packages/mms/serving_frontend.py:add_endpoint:182] Adding endpoint: SSD_predict to Flask

[INFO 2017-12-27 09:02:24,510 PID:55345 /usr/local/lib/python3.6/site-packages/mms/serving_frontend.py:add_endpoint:182] Adding endpoint: ping to Flask

[INFO 2017-12-27 09:02:24,511 PID:55345 /usr/local/lib/python3.6/site-packages/mms/serving_frontend.py:add_endpoint:182] Adding endpoint: api-description to Flask

[INFO 2017-12-27 09:02:24,511 PID:55345 /usr/local/lib/python3.6/site-packages/mms/metric.py:start_recording:118] Metric errors for last 30 seconds is 0.000000

[INFO 2017-12-27 09:02:24,512 PID:55345 /usr/local/lib/python3.6/site-packages/mms/metric.py:start_recording:118] Metric requests for last 30 seconds is 0.000000

[INFO 2017-12-27 09:02:24,512 PID:55345 /usr/local/lib/python3.6/site-packages/mms/metric.py:start_recording:118] Metric cpu for last 30 seconds is 0.290000

[INFO 2017-12-27 09:02:24,513 PID:55345 /usr/local/lib/python3.6/site-packages/mms/metric.py:start_recording:118] Metric memory for last 30 seconds is 0.014777

[INFO 2017-12-27 09:02:24,513 PID:55345 /usr/local/lib/python3.6/site-packages/mms/metric.py:start_recording:118] Metric disk for last 30 seconds is 0.656000

[INFO 2017-12-27 09:02:24,513 PID:55345 /usr/local/lib/python3.6/site-packages/mms/metric.py:start_recording:118] Metric overall_latency for last 30 seconds is 0.000000

[INFO 2017-12-27 09:02:24,514 PID:55345 /usr/local/lib/python3.6/site-packages/mms/metric.py:start_recording:118] Metric inference_latency for last 30 seconds is 0.000000

[INFO 2017-12-27 09:02:24,514 PID:55345 /usr/local/lib/python3.6/site-packages/mms/metric.py:start_recording:118] Metric preprocess_latency for last 30 seconds is 0.000000

[INFO 2017-12-27 09:02:24,514 PID:55345 /usr/local/lib/python3.6/site-packages/mms/mxnet_model_server.py:start_model_serving:101] Service started successfully.

[INFO 2017-12-27 09:02:24,514 PID:55345 /usr/local/lib/python3.6/site-packages/mms/mxnet_model_server.py:start_model_serving:102] Service description endpoint: 127.0.0.1:9998/api-description

[INFO 2017-12-27 09:02:24,514 PID:55345 /usr/local/lib/python3.6/site-packages/mms/mxnet_model_server.py:start_model_serving:103] Service health endpoint: 127.0.0.1:9998/ping

[INFO 2017-12-27 09:02:24,524 PID:55345 /usr/local/lib/python3.6/site-packages/werkzeug/_internal.py:_log:87] * Running on http://127.0.0.1:9998/ (Press CTRL+C to quit)

http://127.0.0.1:9998/api-description

{
  "description": {
    "host": "127.0.0.1:9998",
    "info": {
      "title": "Model Serving Apis",
      "version": "1.0.0"
    },
    "paths": {
      "/SSD/predict": {
        "post": {
          "consumes": ["multipart/form-data"],
          "operationId": "SSD_predict",
          "parameters": [
            {
              "description": "data should be image which will be resized to: [3, 512, 512]",
              "in": "formData",
              "name": "data",
              "required": "true",
              "type": "file"
            }
          ],
          "produces": ["application/json"],
          "responses": {
            "200": {
              "description": "OK",
              "schema": {
                "properties": {
                  "prediction": {
                    "type": "string"
                  }
                },
                "type": "object"
              }
            }
          }
        }
      },
      "/api-description": {
        "get": {
          "operationId": "api-description",
          "produces": ["application/json"],
          "responses": {
            "200": {
              "description": "OK",
              "schema": {
                "properties": {
                  "description": {
                    "type": "string"
                  }
                },
                "type": "object"
              }
            }
          }
        }
      },
      "/ping": {
        "get": {
          "operationId": "ping",
          "produces": ["application/json"],
          "responses": {
            "200": {
              "description": "OK",
              "schema": {
                "properties": {
                  "health": {
                    "type": "string"
                  }
                },
                "type": "object"
              }
            }
          }
        }
      }
    },
    "schemes": ["http"],
    "swagger": "2.0"
  }
}

http://127.0.0.1:9998/ping

{
  "health": "healthy!"
}
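With two servers running on different ports, it can be handy to check both health endpoints in one go. Here is a minimal sketch using only the Python standard library. The function names are hypothetical, and treating any connection error or unexpected body as "not healthy" is my assumption rather than documented server behavior.

```python
import json
import urllib.error
import urllib.request


def is_healthy(body):
    """Return True if a /ping response body reports the "healthy!" status."""
    try:
        return json.loads(body).get("health") == "healthy!"
    except (ValueError, AttributeError):
        # Not JSON, or JSON that is not an object.
        return False


def ping(base_url, timeout=5):
    """GET <base_url>/ping and report whether the server answered as healthy."""
    try:
        with urllib.request.urlopen(base_url + "/ping", timeout=timeout) as resp:
            return is_healthy(resp.read().decode("utf-8"))
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, and similar failures.
        return False


if __name__ == "__main__":
    servers = {
        "squeezenet": "http://127.0.0.1:9999",
        "SSD": "http://127.0.0.1:9998",
    }
    for name, url in servers.items():
        print(name, "healthy" if ping(url) else "unreachable or unhealthy")
```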

With this call to SqueezeNet, we get back the top five predicted classes with their probabilities (0.50 -> 50%).

curl -X POST http://127.0.0.1:9999/squeezenet/predict -F "data=@TimSpann2.jpg"

{
  "prediction": [
    [
      {
        "class": "n02877765 bottlecap",
        "probability": 0.5077430009841919
      },
      {
        "class": "n03196217 digital clock",
        "probability": 0.35705313086509705
      },
      {
        "class": "n03706229 magnetic compass",
        "probability": 0.02305465377867222
      },
      {
        "class": "n02708093 analog clock",
        "probability": 0.018635360524058342
      },
      {
        "class": "n04328186 stopwatch, stop watch",
        "probability": 0.015588048845529556
      }
    ]
  ]
}
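To use this response downstream, you usually only need the best guess. The sketch below picks the most probable entry from the first (and here only) image in the response. `top_prediction` is a hypothetical helper, and splitting the class string into an ID and a label at the first space is an assumption based on the shape of the values shown.

```python
def top_prediction(response):
    """Return (class_id, label, probability) for the most probable class of the first image."""
    candidates = response["prediction"][0]
    best = max(candidates, key=lambda c: c["probability"])
    # Assumed layout: "<id> <label>", e.g. "n02877765 bottlecap".
    class_id, _, label = best["class"].partition(" ")
    return class_id, label, best["probability"]


# First two entries of the SqueezeNet response.
response = {"prediction": [[
    {"class": "n02877765 bottlecap", "probability": 0.5077430009841919},
    {"class": "n03196217 digital clock", "probability": 0.35705313086509705},
]]}

class_id, label, probability = top_prediction(response)
print(f"{label} ({class_id}): {probability:.1%}")  # bottlecap (n02877765): 50.8%
```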

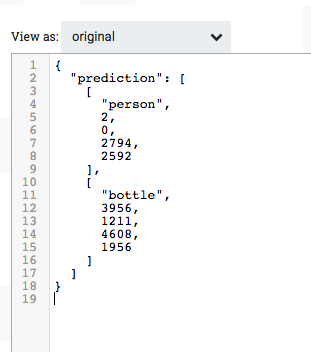

With this test call to SSD, you will see it identifies a person (me) and provides coordinates of a box around me.

curl -X POST http://127.0.0.1:9998/SSD/predict -F "data=@TimSpann2.jpg"

{
  "prediction": [
    [
      "person",
      405,
      139,
      614,
      467
    ],
    [
      "boat",
      26,
      0,
      459,
      481
    ]
  ]
}
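Each SSD result is a label followed by four numbers. The sketch below turns them into named fields so the box size is easy to compute. I am assuming the order is (label, x_min, y_min, x_max, y_max) in pixels; the response itself does not say so, so check the SSD example README before relying on it. `Detection` and `parse_detections` are hypothetical names.

```python
from typing import NamedTuple


class Detection(NamedTuple):
    """One detected object; the coordinate order is an assumption (see above)."""
    label: str
    x_min: int
    y_min: int
    x_max: int
    y_max: int

    @property
    def width(self):
        return self.x_max - self.x_min

    @property
    def height(self):
        return self.y_max - self.y_min


def parse_detections(response):
    """Convert the "prediction" list of an SSD response into Detection tuples."""
    return [Detection(*item) for item in response["prediction"]]


# The SSD response for my test image.
response = {"prediction": [["person", 405, 139, 614, 467], ["boat", 26, 0, 459, 481]]}

for det in parse_detections(response):
    print(f"{det.label}: {det.width} x {det.height} box at ({det.x_min}, {det.y_min})")
```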

/opt/demo/curl.sh

curl -X POST http://127.0.0.1:9998/SSD/predict -F "data=@$1"

/opt/demo/curl2.sh

curl -X POST http://127.0.0.1:9999/squeezenet/predict -F "data=@$1"

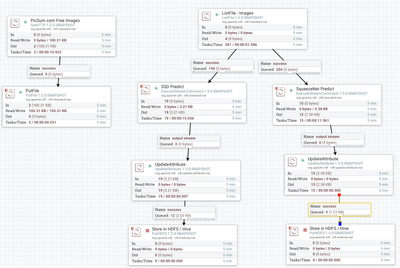

The Apache NiFi flow is simple: I call the REST URL and pass an image. This can be done with a Groovy script or by executing a curl shell script.
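For anyone who would rather script the call than shell out to curl, here is a rough Python equivalent of `curl -X POST ... -F "data=@file"` using only the standard library. It is a sketch and not what my flow uses: `build_multipart` and `predict` are hypothetical helpers, and the only thing taken from the server is the form field name `data` from the api-description.

```python
import json
import mimetypes
import os
import urllib.request
import uuid


def build_multipart(field_name, filename, content):
    """Encode one file as a multipart/form-data body; returns (body, content_type)."""
    boundary = uuid.uuid4().hex
    file_type = mimetypes.guess_type(filename)[0] or "application/octet-stream"
    head = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="{field_name}"; filename="{filename}"\r\n'
        f"Content-Type: {file_type}\r\n\r\n"
    ).encode("utf-8")
    tail = f"\r\n--{boundary}--\r\n".encode("utf-8")
    return head + content + tail, f"multipart/form-data; boundary={boundary}"


def predict(url, image_path):
    """POST the image at image_path to a predict endpoint and return the parsed JSON."""
    with open(image_path, "rb") as f:
        body, content_type = build_multipart("data", os.path.basename(image_path), f.read())
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": content_type}, method="POST"
    )
    with urllib.request.urlopen(request) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

With both servers running, `predict("http://127.0.0.1:9998/SSD/predict", "TimSpann2.jpg")` should return the same JSON as the curl call.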

Logs From Run

[INFO 2017-12-27 17:41:33,447 PID:90860 /usr/local/lib/python3.6/site-packages/werkzeug/_internal.py:_log:87] 127.0.0.1 - - [27/Dec/2017 17:41:33] "POST /SSD/predict HTTP/1.1" 400 -

[INFO 2017-12-27 17:41:36,289 PID:90860 /usr/local/lib/python3.6/site-packages/mms/serving_frontend.py:predict_callback:440] Request input: data should be image with jpeg format.

[INFO 2017-12-27 17:41:36,289 PID:90860 /usr/local/lib/python3.6/site-packages/mms/request_handler/flask_handler.py:get_file_data:133] Getting file data from request.

[INFO 2017-12-27 17:41:37,035 PID:90860 /usr/local/lib/python3.6/site-packages/mms/serving_frontend.py:predict_callback:475] Response is text.

[INFO 2017-12-27 17:41:37,035 PID:90860 /usr/local/lib/python3.6/site-packages/mms/request_handler/flask_handler.py:jsonify:156] Jsonifying the response: {'prediction': [('motorbike', 270, 877, 1944, 3214), ('car', 77, 763, 2113, 3193)]}
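The last log line carries the full response as a Python literal, so predictions can be recovered from the server log if needed. This is a small sketch that assumes the "Jsonifying the response: " marker and the dict format stay exactly as they appear in my log; `response_from_log_line` is a hypothetical helper.

```python
import ast

MARKER = "Jsonifying the response: "


def response_from_log_line(line):
    """Return the response dict embedded in a 'Jsonifying the response' log line, else None."""
    _, found, payload = line.partition(MARKER)
    if not found:
        return None
    # literal_eval only evaluates Python literals (dicts, lists, tuples, strings, numbers).
    return ast.literal_eval(payload.strip())


line = (
    "[INFO 2017-12-27 17:41:37,035 PID:90860 "
    "/usr/local/lib/python3.6/site-packages/mms/request_handler/flask_handler.py:jsonify:156] "
    "Jsonifying the response: {'prediction': [('motorbike', 270, 877, 1944, 3214), "
    "('car', 77, 763, 2113, 3193)]}"
)
print(response_from_log_line(line)["prediction"][0][0])  # motorbike
```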

Apache NiFi Results of the Run

One of the Images Processed

Apache NiFi Flow Template

Resources

- https://github.com/awslabs/mxnet-model-server

- https://github.com/awslabs/mxnet-model-server/blob/master/docs/README.md

- https://github.com/awslabs/mxnet-model-server/blob/master/docs/server.md

- https://github.com/awslabs/mxnet-model-server/blob/master/examples/ssd/README.md

- http://gluon.mxnet.io/

- https://github.com/awslabs/mxnet-model-server/blob/master/docs/model_zoo.md

- https://mxnet.incubator.apache.org/gluon/index.html

- http://mxnet.incubator.apache.org/tutorials/index.html

- https://github.com/apache/incubator-mxnet/tree/master/example

- https://github.com/apache/incubator-mxnet/tree/master/example#deep-learning-examples

- http://mxnet.incubator.apache.org/model_zoo/index.html

- https://github.com/apache/incubator-mxnet/releases/tag/1.0.0

- https://github.com/gluon-api/gluon-api

- http://mxnet.incubator.apache.org/tutorials/r/classifyRealImageWithPretrainedModel.html

- https://www.slideshare.net/JulienSIMON5/an-introduction-to-deep-learning-with-apache-mxnet

- https://mxnet.incubator.apache.org/get_started/why_mxnet.html

- https://www.slideshare.net/JulienSIMON5/deep-learning-for-developers-december-2017

- https://aws.amazon.com/blogs/aws/aws-contributes-to-milestone-1-0-release-and-adds-model-serving-cap...