Community Articles

- Cloudera Community

- Support

- Community Articles

- Virtual Integration of Hadoop with External System...

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Created on 04-13-2016 04:58 PM - edited 08-17-2019 12:48 PM

Virtual Integration of Hadoop with External Systems

The challenge of merging data from disparate systems has been a leading driver behind investments in data warehousing systems, as well as, in Hadoop. While data warehousing solutions are ready-built for RDBMS integration, Hadoop adds the benefits of infinite and economical scale – not to mention the variety of structured and non-structured formats that it can handle. Whether using a data warehouse or Hadoop or both, physical data movement and consolidation is the primary method of integration.

However, there will be data in external systems that need to remain siloed for a variety of reasons (legal, political, economic, etc.). There may also be challenges with synchronizing rapidly changing data from a system of record to a consolidated platform (DW or Hadoop). This introduces the need for “data federation” or “data virtualization”, where data is integrated without copying data between systems.

Best stated in the wiki, “A federated database system is a type of meta-database management system (DBMS), which transparently maps multiple autonomous database systems into a single federated database.“ https://en.wikipedia.org/wiki/Federated_database_system

The following article outlines 3 patterns to address the challenge of Data Federation with Hadoop and considers Pros and Cons associated with each option.

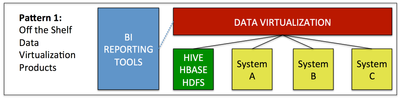

Pattern 1: Off the Shelf Data Virtualization Products

For a true, OOTB option, several products have been specifically designed to address data federation challenges. Defining their domain as “data virtualization”, these products provide an external, metadata layer above multiple source systems.

Within these tools, operators can use metadata to model virtual tables or views that combine data from a variety of sources (RDBMS, Files, Web Services) including Hadoop-specific services (Hive, HBase, HDFS). After the combined view has been created, the results can be expressed as either a relational model or via Web Services.

The intelligence built-in to these products is focused on reducing the movement of data across a network and intelligent query re-write. Predicate pushdown (i.e. the ability to limit data via filters) serves to both limit data movement and also reduce stress on source systems. Query re-write takes a query against a single “virtual” table and breaks it up into multiple, optimal sub-queries in the language native to each integrated source system.

Consider this option if you require true separation of the semantic layer from underlying source systems, especially useful when source systems change frequently or during system migrations.

Notable Products:

Cisco Data Virtualization, Denodo, Informatica

Pros:

- Handles a variety of sources

- Built-In UI for designing and publishing federated views

- Automates query rewrite to source systems

- Can express federated views as a tabular schema or as web services

- 100% External; allowing metadata to live independent of data sources.

Cons:

- Adds another system or layer to be managed within the enterprise

- Requires additional hardware

- Requires licenses

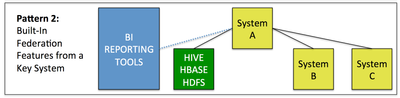

Pattern 2: Built-In Federation Features from a Key System

While not built specifically for federation, some data warehouse or analytics platforms offer pass-through integration with other systems. Meaning, without copying data between systems, metadata can be shared and data can be pulled “on demand” for reporting. Virtual tables from external sources appear side by side with native, locally stored data, so that the end user is abstracted to back-end storage locations. Instead, they work with the familiar interface of a key system within the enterprise.

Similar to data virtualization products, predicate pushdown is also supported. However, as these products are not specifically designed for data virtualization, the number of supported source systems can be limited as will variety (primarily RDBMS).

If your BI reporting is primarily focused on a key data warehousing or analytics application, consider Hadoop integration through the lens of the primary source system.

Notable Products:

Teradata QueryGrid, SAP HANA Vora

Pros:

- Built-In UI for designing and publishing federated views

- Automates query rewrite to source systems

- Familiar interface for BI and metadata management

- Leverage existing tools and hardware

Cons:

- May require additional licenses

- Support for external sources may be limited

- Federation strategy is bound to a specific source

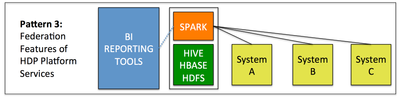

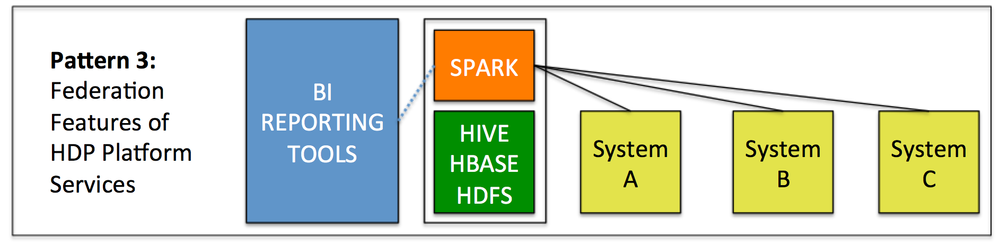

Pattern 3: Federation Features of HDP Platform Services

There are services within the Hortonworks Hadoop distribution (HDP) that can also be used to integrate external data. Unlike the option of integrating through the lens of a primary source (e.g. Teradata or SAP), you can leverage a storage neutral service, like Spark, to integrate native Hadoop stores (Hive, HBase and HDFS) with external RDBMS.

Using Spark, internal or external data can be loaded as RDDs (resilient distributed datasets), then exposed as SparkSQL tables using an embedded JDBC service for BI Reporting. The ability to register a Spark context with a Thrift Server makes this possible. Further, for optimal performance, external tables can be “cached” into local memory – which reduces continuous network traffic and buffers source systems from direct query. While technically, the in-memory data is a “copy” from an external source, it is not persisted to local disk and does not need to be managed as a permanent asset.

This approach is less “turn-key” than the previous options in that there is no easy-to-use or familiar UI available for data modeling. Instead, it involves some minor coding in Spark to attach-to and serve up the content from remote and local sources. But it does have the benefit of running natively in Hadoop and leveraging power of distributed computing. Also, Spark is a service engine, existing independent of storage, so you are not binding your federation approach to a single source.

Consider this option when you need to serve both Data Science and BI Analytics requirements and/or when Hadoop-based storage options (Hive, HBase, HDFS) are a primary source of data for reporting.

Notable Products:

Spark, SparkSQL

Pros:

- Distributed, in-memory option for best performance

- Serves Data Science team in Spark and external BI Reporting tools.

- Leverage existing tools and hardware

- No licenses

Cons:

- SQL support is less mature than data warehouse or data virtualization options

- Some programming required to stage external tables

Conclusions:

Each approach to integrating Hadoop with external systems has merit and should be measured against requirements and long-term strategy.

- If you want a true, off-the-shelf data federation capability, there are products that are already built for you.

- If you are heavily invested in an existing data warehouse or analytics approach (e.g. Teradata, SAP), look to your preferred vendors for guidance.

- If your long-term strategy positions Hadoop as a “Data Lake” or central data management platform, look to leverage your investment and consider Spark. It’s not just for Data Scientists anymore (although they love it)..

SparkSQL Federation article and demo links:

https://community.hortonworks.com/content/repo/29883/sparksql-data-federation-demo.html

Further reading and associated links:

Cisco Data Virtualization (http://www.cisco.com/c/en/us/services/enterprise-it-services/data-virtualization.html)

Denodo (http://www.denodo.com/en)

Teradata QueryGrid (http://www.teradata.com/products-and-services/query-grid/#tabbable=0&tab1=0&tab2=0)

SAP Vora (http://www.cio.com/article/3043777/analytics/saps-hana-vora-bridges-divide-between-enterprise-and-ha...)

Spark Thrift Server and query from an external BI tool using JDBC (https://www.linkedin.com/pulse/query-internal-rdd-data-spark-streaming-from-outside-bo-yang).

Created on 07-13-2017 12:32 PM

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Excellent article. Would presto fall into option #2?