Community Articles

- Cloudera Community

- Support

- Community Articles

- Visualizing Hive Data Using Microsoft Power BI

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Created on 10-12-2016 04:43 PM - edited 08-17-2019 08:57 AM

Pre-requisites

- Power BI Desktop Edition https://powerbi.microsoft.com/en-us/desktop/

- HDP Installation either cluster or sandbox. Can be cloud or on-prem

- Hortonworks ODBC Driver for Apache Hive (v2.1.5). Scroll down to the Add-Ons section http://hortonworks.com/downloads/#sandbox

- Data set. I used TPC-DS which can be installed from here https://github.com/hortonworks/hive-testbench

Note: This exercise assumes a non-Kerberoized cluster

Step 1 – Setup the Environment

How to setup the environment is up to you. You have lots of options. I ended up using Windows Server 2016 Evaluation Edition running on VirtualBox. For HDP I’m running a 4 node cluster in the cloud. Here are a few possible scenarios:

- Leverage existing infrastructure. You will need hardware for HDP as well as a Windows or Mac box to run Power BI desktop

- Run both instances in a virtual environment using VMware or VirtualBox and install the HDP 2.5 sandbox.

- Run everything in the cloud with either Azure IaaS, HDInsight, or AWS.

- Run Power BI on-prem and utilize a cloud instance of HDP. (be careful of data transfer costs when loading the BI model).

Step 2 – Load the Data

Again, how you get data is up to you. I used TPC-DS data from the hive-testbench (see pre-reqs). I used a 5 GB dataset but this is configurable. Note: depending on the size of your cluster generating the data set as well as loading data into Power BI can take a long time. Be patient.

Another option would be to use Apache Sqoop to load data from an existing RDBMS data source. Following are instructions for moving data from SQL Server AdventureWorks to Hive:

Setup SQL Server (Windows)

- Install SQL

- Create SQL Server account hadoop\hadoop

- Disable shared memory

Configure Hadoop (Linux)

- Download sql driver to /usr/local. curl -L 'http://download.microsoft.com/download/0/2/A/02AAE597-3865-456C-AE7F-613F99F850A8/sqljdbc_4.0.2206.1...' | tar xz

- Copy driver to library cp sqljdbc_4.0/enu/sqljdbc4.jar /usr/hdp/current/sqoop-server/lib

- List databases to check connection

sqoop list-databases --connect jdbc:sqlserver://<IP Address>:<Port Number> --username <Username> --password <Password>

sqoop list-databases --connect jdbc:sqlserver://192.168.56.101:1433 --username hadoop --password hadoop

- Import table

sqoop import --connect "jdbc:sqlserver://<IP Address>;database=<Database Name>;username=<Username>;password=<Password>" --table <Table Name> --hive-import -- --schema <Schema Name>

sqoop import --connect "jdbc:sqlserver://192.168.56.101:1433;database=AdventureWorksDW2014;username=hadoop;password=hadoop" --table DimCustomer --hive-import

My TPC-DS data set looks like this. If you are familiar with data warehouse schemas, you will quickly notice the dataset is comprised of dimension tables and measure (fact) tables.

Step 3 – Connecting to Hive

Hiveserver2 allows for a connecting via standard ODBC. To do this you will need to download and install the ODBC driver on the system running Power BI desktop. The documentation can be found here http://hortonworks.com/wp-content/uploads/2016/08/Hortonworks-Hive-ODBC-Driver-User-Guide.pdf

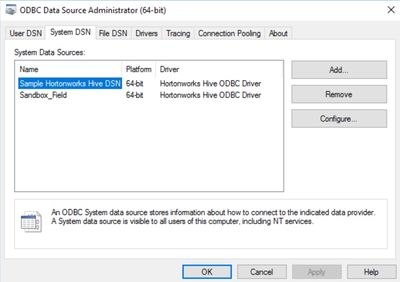

After the driver installs you will need to setup a System DSN. Open up the 64 bit ODBC Data Source Administrator and click on the System DSN tab.

You will want to highlight the Sample Hortonworks Hive DSN and click Configure

Following shows the settings for my DSN:

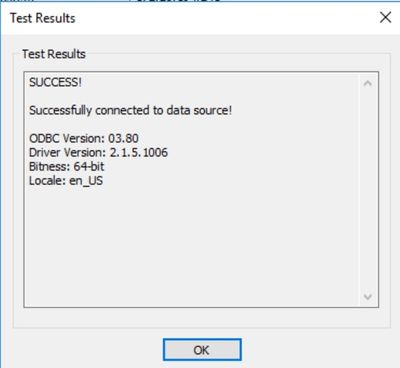

The primary areas you will want to consider is the IP address of the HDP cluster, the Authentication Mechanism which is set to User Name, and finally the name of the user connecting to Hive – in this case it is the user hive. Test the connection to make sure you can connect.

Once successful you can now open up Power BI and configure the ODBC connection to Hive. In Power BI click on the Get Data in the ribbon and select More…

As you can see Power BI has a wealth of data connection options. For our purposes we are going to select ODBC. From the ODBC wizard you will need to select the ODBC configuration setup in the previous step.

Once connected you are now ready to load table data.

Step 4 – Loading and Visualizing Hive data in Power BI

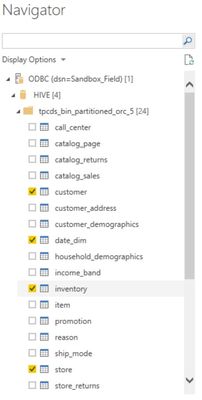

If your connection was successful in Step 3 you should now be able to drill down to your Hive schema using the Power BI navigator. You should see all the tables listed. It is here you can pick and choose which tables you want to view in your reports. I’d suggest only selecting a few by focusing on a single fact table with a few key dimensions. The load time especially on a small environment can be quite long.

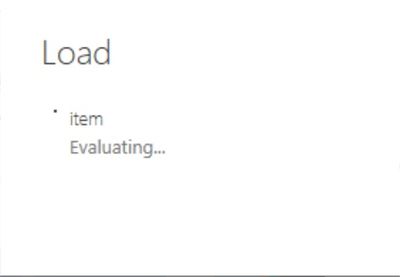

As the data loads you will get a loading screen as Power BI connects to Hive and executes queries to pull the data.

Once the tables are loaded they will appear in the right hand Fields column

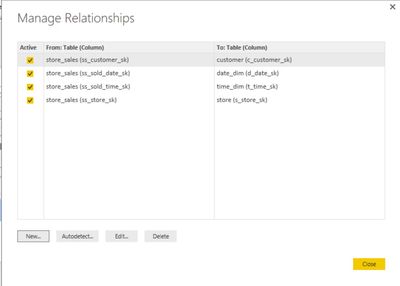

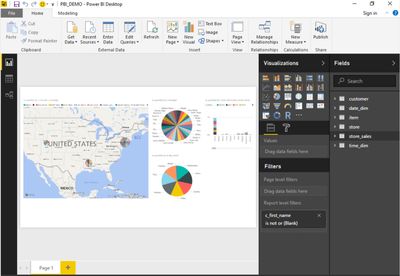

At this point you may be tempted to start visualizing the data but first we need to let Power BI know the relationships between the tables and model our star schema. We do this by selecting the Manage Relationship icon in the ribbon.

If you notice in the screen shot below I have already setup relationships between the store_sales measure and the various dimensions.

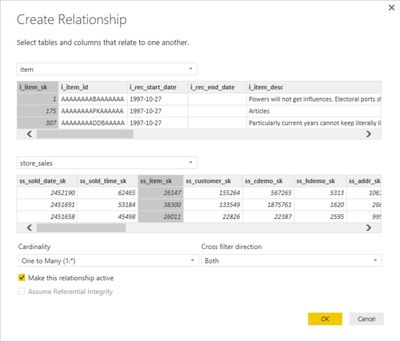

To create additional relationships you can click the New button. The example below I have selected the item table and highlighted the i_item_sk column which is the column representing a unique item. I then selected the store_sales table and highlighted the ss_item_sk as the foreign key. Clicking OK will set this relationship.

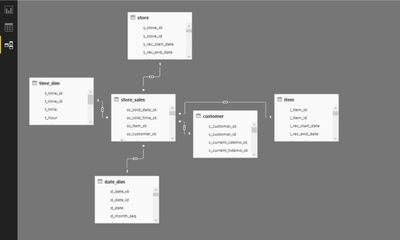

Clicking on the relationship button along the far left side will show you the relationships as a logical data model. Note the standard and familiar star schema pattern.

Now that the data is loaded and modelled you are now ready to visualize. Power BI is extremely easy to use and navigate. Expanding the tables and clicking on columns will insert them into your report. Feel free to experiment with the data and find out what insights you unlock.

Created on 03-05-2017 10:49 AM

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Good article for beginners

Created on 05-11-2017 03:02 PM

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Hi Scott,

Below is the error I am getting on when I am trying to perform ODBC data connection.

"UNABLE TO CONNECT"

Encountered an error while trying to connect to ODBC

Details: "ODBC: ERROR [HY000] [Hortonworks][Hardy] (34) Error from server: SASL(-1): generic failure: GSSAPI Error: Unspecified GSS failure. Minor code may provide more information (Internal credentials cache error). ERROR [HY000] [Hortonworks][Hardy] (34) Error from server: SASL(-1): generic failure: GSSAPI Error: Unspecified GSS failure. Minor code may provide more information (Internal credentials cache error)."

I am able to sucssefully test the connection from Hortonworks Hive ODBC Driver DSN Setup

Thanks for any help :)