What's New in Cloudbreak 2.5.0 TP

Created on 04-04-2018 09:24 PM - edited 08-17-2019 08:06 AM

Cloudbreak 2.5.0 Technical Preview is available now. Here are the highlights:

Creating HDF Clusters

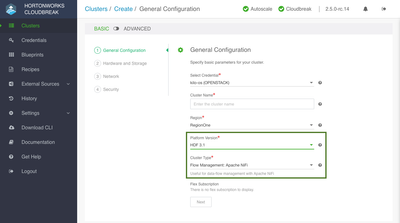

You can use Cloudbreak to create HDF clusters from base images on AWS, Azure, Google Cloud and OpenStack. In the Cloudbreak web UI, you can do this by selecting "HDF 3.1" under Platform Version and then selecting an HDF blueprint.

Cloudbreak includes one default HDF blueprint, "Flow Management: Apache NiFi", and supports uploading your own custom HDF 3.1.1 NiFi blueprints.

Note the following when creating NiFi clusters:

- When creating a cluster, open TCP port 9091 on the NiFi host group. Without it, you will be unable to access the NiFi UI.

- Enabling Kerberos is mandatory. You can either use your own Kerberos or have Cloudbreak create a test KDC.

- Although Cloudbreak includes cluster scaling (including autoscaling), scaling is not fully supported by NiFi. Downscaling NiFi clusters is not supported, because removing a node that has not yet processed all of its data can result in data loss. There is also a known issue related to scaling, listed in the Known Issues.
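The notes above can be read as a pre-flight checklist. The following is a minimal sketch of such a check, not part of Cloudbreak: the `NifiClusterPlan` class, its fields, and `check_plan` are hypothetical names made up for illustration. They do not correspond to any Cloudbreak API or CLI object.

```python
from dataclasses import dataclass, field
from typing import List, Set

NIFI_UI_PORT = 9091  # TCP port that must be open on the NiFi host group


@dataclass
class NifiClusterPlan:
    """Hypothetical description of a planned NiFi cluster (not a Cloudbreak type)."""
    open_tcp_ports: Set[int] = field(default_factory=set)  # ports open on the NiFi host group
    kerberos_enabled: bool = False
    current_nodes: int = 1
    target_nodes: int = 1


def check_plan(plan: NifiClusterPlan) -> List[str]:
    """Return a list of problems; an empty list means the plan respects the notes."""
    problems = []
    if NIFI_UI_PORT not in plan.open_tcp_ports:
        problems.append(f"TCP port {NIFI_UI_PORT} is not open on the NiFi host group")
    if not plan.kerberos_enabled:
        problems.append("Kerberos is not enabled")
    if plan.target_nodes < plan.current_nodes:
        problems.append("downscaling a NiFi cluster is not supported")
    return problems


if __name__ == "__main__":
    plan = NifiClusterPlan(open_tcp_ports={22}, kerberos_enabled=True,
                           current_nodes=3, target_nodes=2)
    for problem in check_plan(plan):
        print("WARNING:", problem)
```

A check like this only mirrors the three notes. It does not cover the separate known issue related to scaling.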

For updated cluster creation instructions, refer to the Creating a Cluster documentation for your chosen cloud provider. For updated blueprint information, refer to Default Blueprints.

For a tutorial on creating a NiFi cluster with Cloudbreak, refer to the following HCC post.

> HDF options in the create cluster wizard:

Using External Databases for Cluster Services

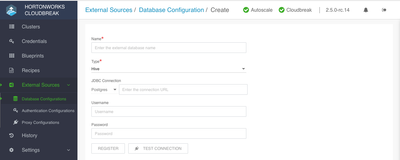

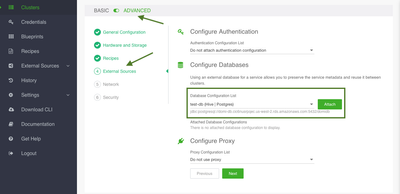

You can register an existing external RDBMS in the Cloudbreak UI or CLI so that it can be used by cluster components that support it. After the RDBMS has been registered with Cloudbreak, it is available during cluster creation and can be reused with multiple clusters.

Only Postgres is supported at this time. Refer to component-specific documentation for information on which version of Postgres (if any) is supported.
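Assuming the registration step asks for a JDBC connection string, it can help to build and validate that string before entering it. The sketch below uses the standard PostgreSQL JDBC URL form `jdbc:postgresql://host:port/database`. The function names and the example host and database are hypothetical, and nothing here calls Cloudbreak itself.

```python
import re
from typing import Tuple

# Standard PostgreSQL JDBC URL shape: jdbc:postgresql://host:port/database
_JDBC_PG = re.compile(
    r"^jdbc:postgresql://(?P<host>[^:/\s]+):(?P<port>\d+)/(?P<db>[^/?\s]+)$"
)


def postgres_jdbc_url(host: str, port: int, database: str) -> str:
    """Build a PostgreSQL JDBC URL, rejecting obviously invalid parts."""
    if not host or not database:
        raise ValueError("host and database must be non-empty")
    if not 0 < port < 65536:
        raise ValueError(f"port out of range: {port}")
    return f"jdbc:postgresql://{host}:{port}/{database}"


def parse_postgres_jdbc_url(url: str) -> Tuple[str, int, str]:
    """Split a PostgreSQL JDBC URL into (host, port, database)."""
    match = _JDBC_PG.match(url)
    if match is None:
        raise ValueError(f"not a PostgreSQL JDBC URL: {url!r}")
    return match.group("host"), int(match.group("port")), match.group("db")


if __name__ == "__main__":
    # Example values only; replace with your own database host and name.
    url = postgres_jdbc_url("db.example.com", 5432, "hive")
    print(url)
    print(parse_postgres_jdbc_url(url))
```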

For more information, refer to Register an External Database.

> UI for registering an external DB:

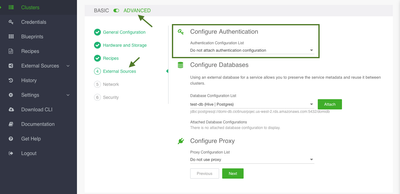

> UI for selecting a previously registered DB to be attached to a specific cluster:

Using External Authentication Sources (LDAP/AD) for Clusters

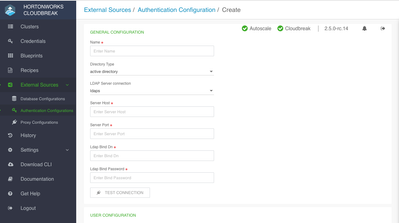

You can register an existing LDAP/AD authentication source in the Cloudbreak UI or CLI so that it can later be associated with one or more Cloudbreak-managed clusters. After the authentication source has been registered with Cloudbreak, it is available during cluster creation and can be reused with multiple clusters.

For more information, refer to Register an Authentication Source.

> UI for registering an existing LDAP/AD with Cloudbreak:

> UI for selecting a previously registered authentication source to be attached to a specific cluster:

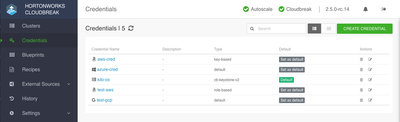

Modifying Existing Cloudbreak Credentials

Cloudbreak allows you to modify existing credentials by using the edit option available in the Cloudbreak UI or by using the credential modify command in the CLI. For more information, refer to Modify an Existing Credential.

> UI for managing Cloudbreak credentials:

Configuring Cloudbreak to Use Existing LDAP/AD

You can configure Cloudbreak to use your existing LDAP/AD so that you can authenticate Cloudbreak users against an existing LDAP/AD server. For more information, refer to Configuring Cloudbreak for LDAP/AD Authentication.

Launching Cloudbreak in Environments with Restricted Internet Access or Required Use of Proxy

You can launch Cloudbreak in environments with limited or restricted internet access and/or required use of a proxy to obtain internet access. For more information, refer to Configure Outbound Internet Access and Proxy.
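Before launching in such an environment, it can be useful to confirm that outbound requests actually succeed through the proxy. The following is a generic sketch using only the Python standard library. It is not Cloudbreak configuration, and the proxy address and target URL are placeholders you would replace with your own.

```python
import urllib.error
import urllib.request


def build_proxy_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Return an opener that sends HTTP and HTTPS requests through proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


def can_reach(url: str, proxy_url: str, timeout: float = 5.0) -> bool:
    """Return True if url answers with a 2xx/3xx status through the proxy."""
    opener = build_proxy_opener(proxy_url)
    try:
        with opener.open(url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except (urllib.error.URLError, OSError):
        # Covers refused connections, DNS failures, timeouts, and HTTP errors.
        return False


if __name__ == "__main__":
    # Placeholder values: substitute your proxy and a URL the host must reach.
    proxy = "http://proxy.example.com:3128"
    target = "https://www.example.com/"
    print("reachable" if can_reach(target, proxy) else "not reachable")
```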

Auto-import of HDP/HDF Images on OpenStack

When using Cloudbreak on OpenStack, you no longer need to import HDP and HDF images manually: during your first attempt to create a cluster, Cloudbreak automatically imports the HDP and HDF images to your OpenStack. Only the Cloudbreak image must be imported manually.

More

To learn more, check out the Cloudbreak 2.5.0 docs.

To get started with HDF, check out this HCC post.

If you are part of Hortonworks, you can check out the new features by accessing the internal hosted Cloudbreak instance.

Created on 04-13-2018 03:08 PM

@Dominika Bialek Looks like the Cloudbreak 2.5 docs were removed.

Created on 04-13-2018 04:35 PM

@Ancil McBarnett Thanks for letting me know. They are back online now.