Working with Cloudera Data Platform (CDP) OpDB Dat...
Created on 03-30-2020 04:03 AM, edited on 03-30-2020 06:55 AM by VidyaSargur

This article walks you through setting up a default seven-node CDP OpDB Data Hub cluster for running operational workloads in the public cloud.

For this article, I used our internal Sandbox environment, which was on the CDP 7.0.2 release and in which users are already set up and authenticated through Okta SSO. I am using AWS as the public cloud provider; you can use Microsoft Azure as well. Support for Google Cloud Platform is currently targeted for later this year.

- Navigate to Okta (or your SSO provider's home page, if enabled) and search for the CDP Public Cloud tile:

Or, click the CDP Public Cloud tile directly.

- Click Data Hub Clusters in the portal:

- Click Create Data Hub:

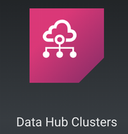

- Select the following options to create an OpDB Data Hub cluster on AWS in the Sandbox environment. Feel free to enter your own cluster name under General Settings, as shown below:

Also, review the Advanced Options; you do not need to change anything there for this exercise. Note the services included in this template. Phoenix is not yet available in the Public Cloud Sandbox environment.

- Click Provision Cluster. Provisioning might take a few minutes to complete.

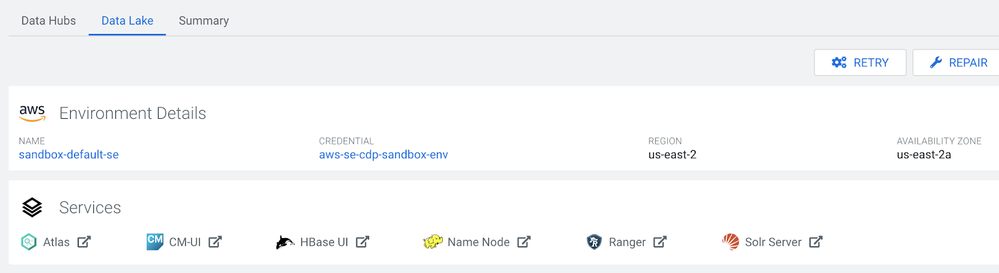

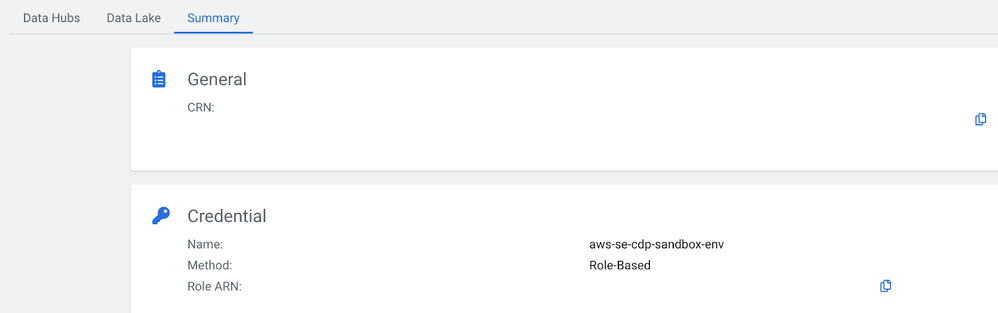

- While the cluster is provisioning, you can explore the Data Lake and Summary tabs for interesting details about this environment:

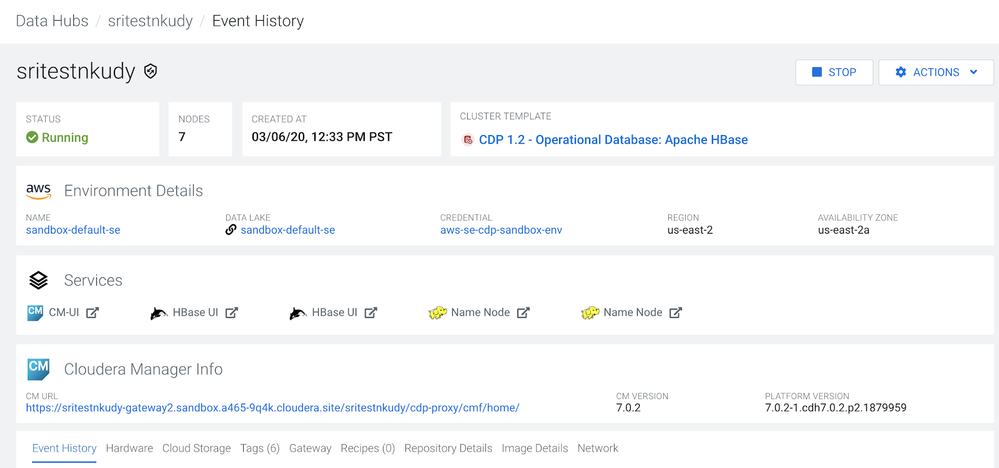

Note: Some of the details have been masked in the screenshot above.

- Once the cluster is provisioned, its status should be Running, as shown below:

- Click your cluster name to view the event history and review the additional details under each tab at the bottom. For example, the Hardware tab lists all seven nodes in detail.

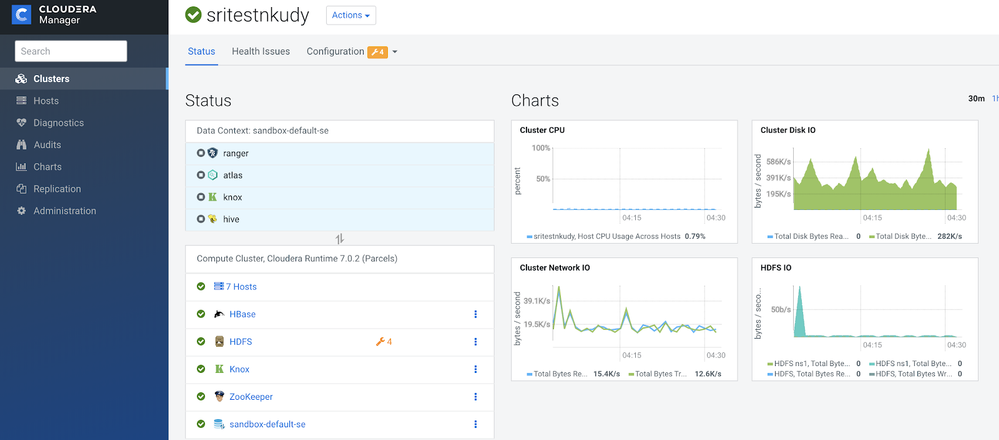

- Explore additional details in the Cloudera Manager (CM) UI, the HBase UI, and the NameNode UI by clicking the links under the Services section. For example, here is the CM UI installed on the gateway node:

If you can access the CM UI, skip ahead to logging in to the gateway node from the command line.

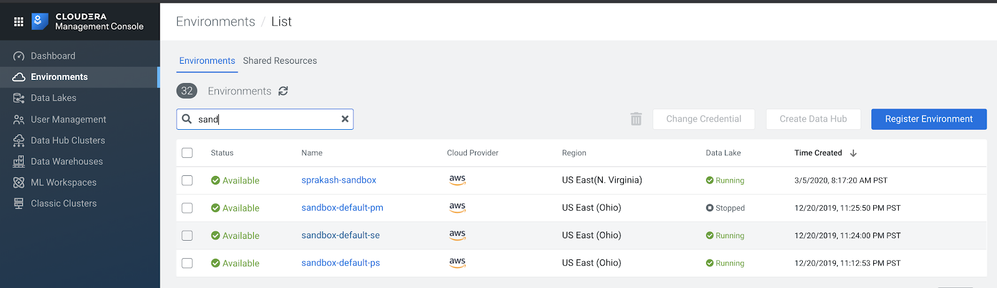

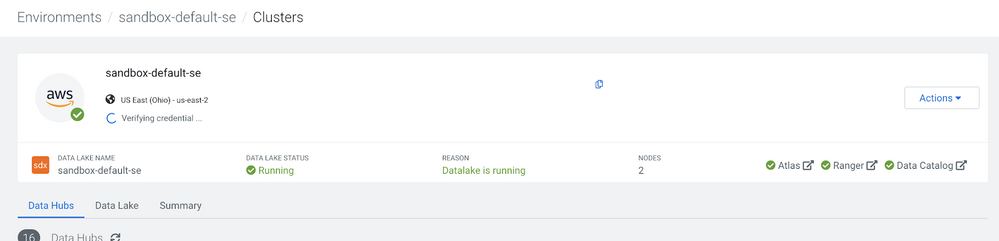

If you cannot access the CM UI, there might be access issues specific to your login on this environment or on the Public Cloud Data Lake named “sandbox-default-se”. Navigate to Environments in the left pane and filter down to the specific environment, as shown below:

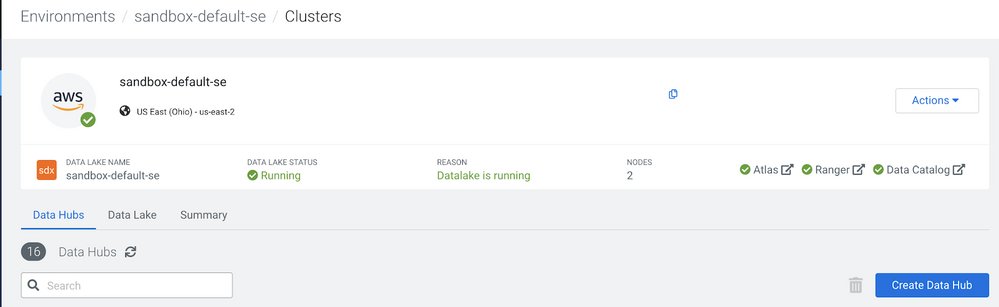

Click on the environment name “sandbox-default-se”. Note that some of the details are masked in the screenshot below.

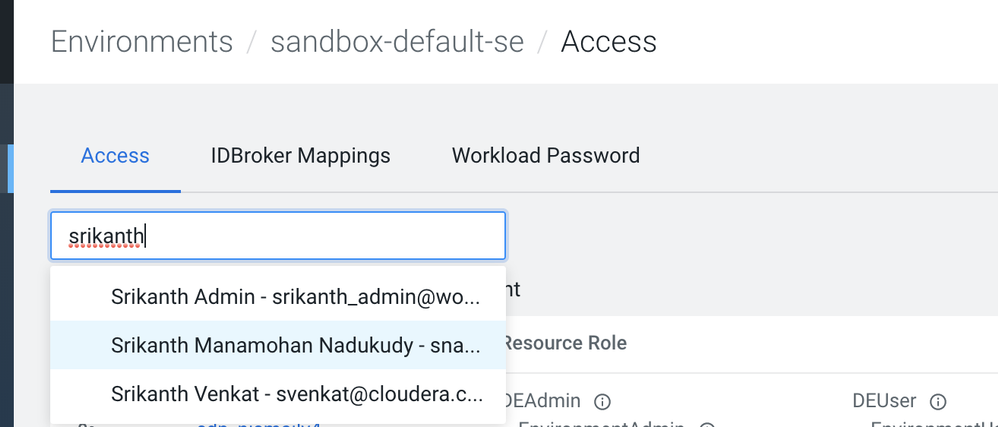

Click on Actions | Manage Access. Filter on your name as shown below:

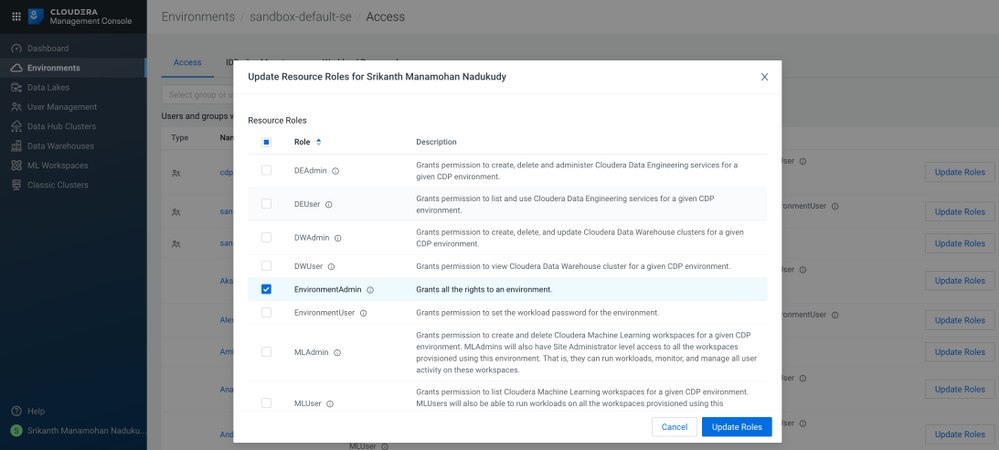

Select the appropriate role on this screen (I am assuming the Admin role for this environment), and click Update Roles, as shown below:

Navigate back to the environment sandbox-default-se. Note that some of the details are masked in the screenshot below:

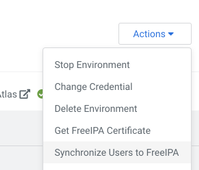

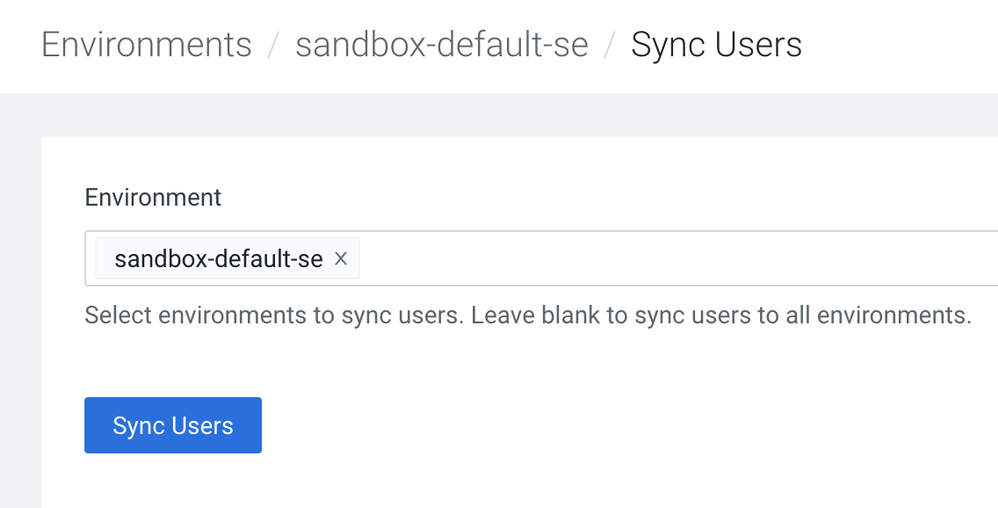

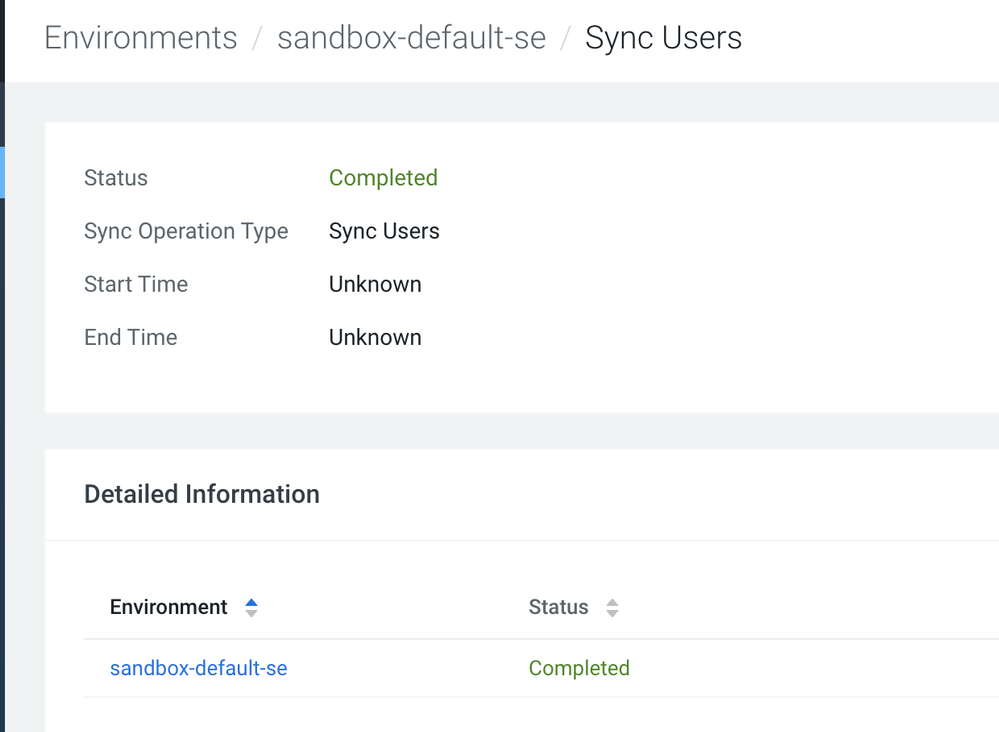

Click Actions | Synchronize Users to FreeIPA. This updates the access rights for all users in this Cloud Data Lake. Note that users can have different access to each Cloud Data Lake that is registered in this CDP Public Cloud environment. Synchronization might take a few minutes to complete.

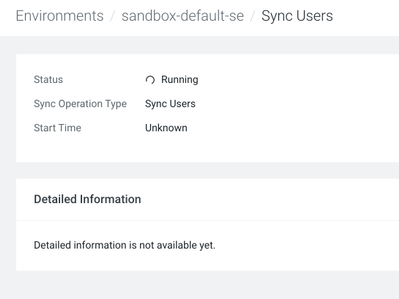

- Once synchronization completes successfully, you should see the following message:

Try accessing the CM UI again. If you still have issues accessing CM, submit a support ticket to the Cloudera team.

- Now that you can access the CM UI, log in to the gateway node from the command line, as shown below:

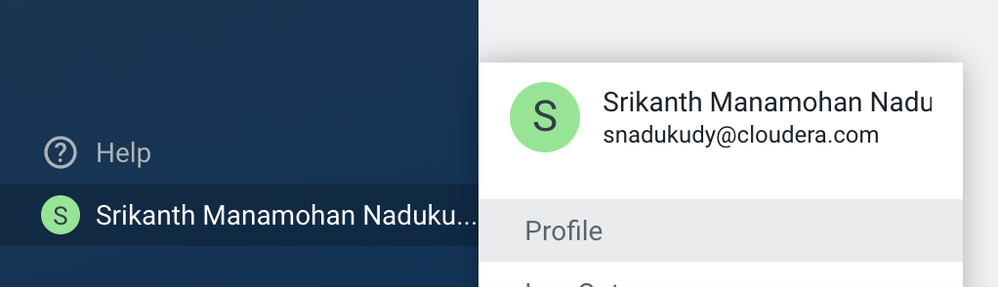

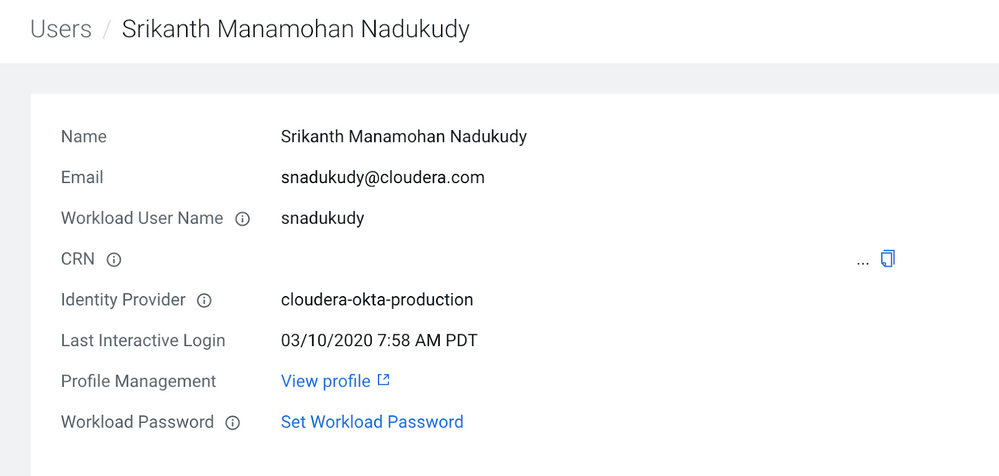

```
Srikanths-MacBook-Pro:~ snadukudy$ ssh sritestnkudy-gateway2.sandbox.a465-9q4k.cloudera.site
Password: (enter your workload password when prompted)
Last login: Sun Mar 8 01:20:01 2020 from 76.126.250.37
(Cloudera ASCII-art login banner)
[snadukudy@sritestnkudy-gateway2 ~]$
```

Here the local user name and the CDP workload user name are the same, so no user name is passed to ssh; otherwise, connect with ssh <workload-user>@<gateway-host>.

If you need to reset your workload password, navigate to:

Then click Set Workload Password to reset it. Note that some of the details are masked in the screenshot below.

- Open the HBase shell:

```
[snadukudy@sritestnkudy-gateway2 ~]$ hbase shell
HBase Shell
Use "help" to get list of supported commands.
Use "exit" to quit this interactive shell.
For Reference, please visit: http://hbase.apache.org/2.0/book.html#shell
Version 2.2.0.7.0.2.2-51, rUnknown, Thu Feb 13 19:38:56 UTC 2020
Took 0.0009 seconds
hbase(main):001:0>
```
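The steps below are typed interactively, but they can also be scripted. The HBase reference guide documents a non-interactive mode, hbase shell -n, which reads commands from standard input instead of prompting. The sketch below assumes the hbase client is on the gateway node's PATH, as in the session above; run_hbase is a hypothetical helper name, and when hbase is not installed it only prints the commands it would have run.

```shell
#!/usr/bin/env bash
# Sketch: run HBase shell commands non-interactively.
# Assumes the `hbase` client is on PATH (as on the gateway node above).
# run_hbase is a hypothetical helper, not part of HBase.
set -euo pipefail

run_hbase() {
  if command -v hbase >/dev/null 2>&1; then
    # -n: non-interactive mode; commands are read from stdin
    hbase shell -n
  else
    # No HBase client here: show the commands instead of running them
    echo "hbase not found; would run:" >&2
    cat
  fi
}

# Equivalent to typing `list` at the hbase(main)> prompt
echo "list" | run_hbase
```

Any of the HBase shell commands in the following steps can be piped into run_hbase the same way.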

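- Create an HBase table named emp (or run your own HBase commands now). In this command, 'rowkey', 'name', 'email', and 'address' are all column families; the row key itself is supplied separately with each put: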

```
hbase(main):021:0> create 'emp', 'rowkey', 'name', 'email', 'address'
Created table emp
Took 8.3149 seconds
=> Hbase::Table - emp
```
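If you script table creation instead of typing it, a small helper can build the create command from a table name and a list of column families. This is a sketch: hbase_create_cmd is a hypothetical name, and it assumes the table and family names contain no single quotes.

```shell
#!/usr/bin/env bash
# Sketch: build an HBase shell `create` command.
# hbase_create_cmd is hypothetical; assumes names contain no single quotes.
set -euo pipefail

hbase_create_cmd() {
  local table="$1"
  shift
  local cmd="create '${table}'"
  local family
  for family in "$@"; do
    # Each remaining argument becomes one column family
    cmd+=", '${family}'"
  done
  printf '%s\n' "$cmd"
}

# Reproduces the create command from the session above
hbase_create_cmd emp rowkey name email address
```

Pipe the output into hbase shell -n on the gateway node to run it.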

- Show the emp table details:

```
hbase(main):022:0> desc 'emp'
Table emp is ENABLED
emp
COLUMN FAMILIES DESCRIPTION
{NAME => 'address', VERSIONS => '1', EVICT_BLOCKS_ON_CLOSE => 'false', NEW_VERSION_BEHAVIOR => 'false', KEEP_DELETED_CELLS => 'FALSE', CACHE_DATA_ON_WRITE => 'false', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', MIN_VERSIONS => '0', REPLICATION_SCOPE => '0', BLOOMFILTER => 'ROW', CACHE_INDEX_ON_WRITE => 'false', IN_MEMORY => 'false', CACHE_BLOOMS_ON_WRITE => 'false', PREFETCH_BLOCKS_ON_OPEN => 'false', COMPRESSION => 'NONE', BLOCKCACHE => 'true', BLOCKSIZE => '65536'}

{NAME => 'email', VERSIONS => '1', EVICT_BLOCKS_ON_CLOSE => 'false', NEW_VERSION_BEHAVIOR => 'false', KEEP_DELETED_CELLS => 'FALSE', CACHE_DATA_ON_WRITE => 'false', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', MIN_VERSIONS => '0', REPLICATION_SCOPE => '0', BLOOMFILTER => 'ROW', CACHE_INDEX_ON_WRITE => 'false', IN_MEMORY => 'false', CACHE_BLOOMS_ON_WRITE => 'false', PREFETCH_BLOCKS_ON_OPEN => 'false', COMPRESSION => 'NONE', BLOCKCACHE => 'true', BLOCKSIZE => '65536'}

{NAME => 'name', VERSIONS => '1', EVICT_BLOCKS_ON_CLOSE => 'false', NEW_VERSION_BEHAVIOR => 'false', KEEP_DELETED_CELLS => 'FALSE', CACHE_DATA_ON_WRITE => 'false', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', MIN_VERSIONS => '0', REPLICATION_SCOPE => '0', BLOOMFILTER => 'ROW', CACHE_INDEX_ON_WRITE => 'false', IN_MEMORY => 'false', CACHE_BLOOMS_ON_WRITE => 'false', PREFETCH_BLOCKS_ON_OPEN => 'false', COMPRESSION => 'NONE', BLOCKCACHE => 'true', BLOCKSIZE => '65536'}

{NAME => 'rowkey', VERSIONS => '1', EVICT_BLOCKS_ON_CLOSE => 'false', NEW_VERSION_BEHAVIOR => 'false', KEEP_DELETED_CELLS => 'FALSE', CACHE_DATA_ON_WRITE => 'false', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', MIN_VERSIONS => '0', REPLICATION_SCOPE => '0', BLOOMFILTER => 'ROW', CACHE_INDEX_ON_WRITE => 'false', IN_MEMORY => 'false', CACHE_BLOOMS_ON_WRITE => 'false', PREFETCH_BLOCKS_ON_OPEN => 'false', COMPRESSION => 'NONE', BLOCKCACHE => 'true', BLOCKSIZE => '65536'}

4 row(s)

QUOTAS
0 row(s)
Took 0.0440 seconds
```

- Insert rows into the emp table by running these commands:

```
# Quote every value: the HBase shell is JRuby, so a bare word such as sri is not a string.
put 'emp', 'rowkey:1', 'name', 'rich'
put 'emp', 'rowkey:1', 'email', 'rich1111@cloudera.com'
put 'emp', 'rowkey:1', 'address', '395 Page Mill Rd'
put 'emp', 'rowkey:2', 'name', 'sri'
put 'emp', 'rowkey:2', 'email', 'sri1111@cloudera.com'
put 'emp', 'rowkey:2', 'address', '395 Page Mill Rd'
put 'emp', 'rowkey:3', 'name', 'brandon'
put 'emp', 'rowkey:3', 'email', 'brandon1111@cloudera.com'
put 'emp', 'rowkey:3', 'address', '395 Page Mill Rd'
```
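Typing nine put commands by hand is error-prone; for example, an unquoted value such as sri is parsed by the JRuby-based HBase shell as a Ruby name rather than a string, and the command fails. The sketch below generates the same commands from pipe-delimited records. The function emp_put_cmds and the row_key|name|email|address input format are hypothetical, and values are assumed to contain no single quotes or pipe characters.

```shell
#!/usr/bin/env bash
# Sketch: generate HBase shell `put` commands for the emp table.
# Input: one record per line as row_key|name|email|address (hypothetical format).
# Assumes values contain no single quotes or '|' characters.
set -euo pipefail

emp_put_cmds() {
  local row name email address
  while IFS='|' read -r row name email address; do
    # One put per column family; every value is single-quoted
    printf "put 'emp', '%s', 'name', '%s'\n" "$row" "$name"
    printf "put 'emp', '%s', 'email', '%s'\n" "$row" "$email"
    printf "put 'emp', '%s', 'address', '%s'\n" "$row" "$address"
  done
}

emp_put_cmds <<'EOF'
rowkey:1|rich|rich1111@cloudera.com|395 Page Mill Rd
rowkey:2|sri|sri1111@cloudera.com|395 Page Mill Rd
rowkey:3|brandon|brandon1111@cloudera.com|395 Page Mill Rd
EOF
```

Pipe the output into hbase shell -n to load the rows in one step.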

- View the rows in the emp table:

```
hbase(main):079:0> scan 'emp'
ROW                 COLUMN+CELL
 rowkey:1           column=address:, timestamp=1583635789786, value=395 Page Mill Rd
 rowkey:1           column=email:, timestamp=1583635860821, value=rich1111@cloudera.com
 rowkey:1           column=name:, timestamp=1583635732346, value=rich
 rowkey:2           column=address:, timestamp=1583635521804, value=395 Page Mill Rd
 rowkey:2           column=email:, timestamp=1583635402242, value=sri111@cloudera.com
 rowkey:2           column=name:, timestamp=1583634956908, value=sri
 rowkey:3           column=address:, timestamp=1583635819282, value=395 Page Mill Rd
 rowkey:3           column=email:, timestamp=1583638851663, value=brandon1111@cloudera.com
 rowkey:3           column=name:, timestamp=1583635898253, value=brandon
3 row(s)
Took 0.0088 seconds
```
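A plain scan returns every cell in the table. For more selective reads, the HBase shell also provides get for a single row (optionally limited to one column family), a COLUMNS option on scan, and count. The sketch below only prints these commands for the emp table so they can be piped into hbase shell -n; the function name emp_read_cmds is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: selective reads against the emp table (HBase shell syntax).
# emp_read_cmds is hypothetical; pipe its output into `hbase shell -n`.
# Commands, in order: all cells of row rowkey:1; only its email family;
# a scan restricted to the name family; a row count.
set -euo pipefail

emp_read_cmds() {
  cat <<'EOF'
get 'emp', 'rowkey:1'
get 'emp', 'rowkey:1', 'email'
scan 'emp', {COLUMNS => ['name']}
count 'emp'
EOF
}

emp_read_cmds
```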

- HBase only drops disabled tables, so first disable the emp table:

```
hbase(main):080:0> disable 'emp'
Took 4.3165 seconds
```

- Drop the emp table to delete the test data, then use the list command to check whether any other HBase tables remain:

```
hbase(main):081:0> drop 'emp'
Took 8.2271 seconds
hbase(main):082:0> list
TABLE
0 row(s)
Took 0.0019 seconds
=> []
hbase(main):083:0>
```
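Because HBase refuses to drop a table that is still enabled, scripted cleanup has to issue disable before drop. The sketch below prints that sequence for one or more tables, followed by exists to confirm each table is gone. The helper name hbase_drop_cmds is hypothetical, and table names are assumed to contain no single quotes.

```shell
#!/usr/bin/env bash
# Sketch: emit the disable/drop sequence for one or more HBase tables.
# hbase_drop_cmds is hypothetical; assumes names contain no single quotes.
set -euo pipefail

hbase_drop_cmds() {
  local table
  for table in "$@"; do
    # HBase requires a table to be disabled before it can be dropped
    printf "disable '%s'\n" "$table"
    printf "drop '%s'\n" "$table"
    # Once the drop succeeds, this should report that the table does not exist
    printf "exists '%s'\n" "$table"
  done
}

hbase_drop_cmds emp
```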

- Exit the HBase shell:

```
hbase(main):083:0> exit
20/03/08 03:44:01 INFO impl.MetricsSystemImpl: Stopping s3a-file-system metrics system...
20/03/08 03:44:01 INFO impl.MetricsSystemImpl: s3a-file-system metrics system stopped.
20/03/08 03:44:01 INFO impl.MetricsSystemImpl: s3a-file-system metrics system shutdown complete.
[snadukudy@sritestnkudy-gateway2 ~]$
```

- Log out of the gateway node:

```
[snadukudy@sritestnkudy-gateway2 ~]$ exit
logout
Connection to sritestnkudy-gateway2.sandbox.a465-9q4k.cloudera.site closed.
Srikanths-MacBook-Pro:~ snadukudy$
```

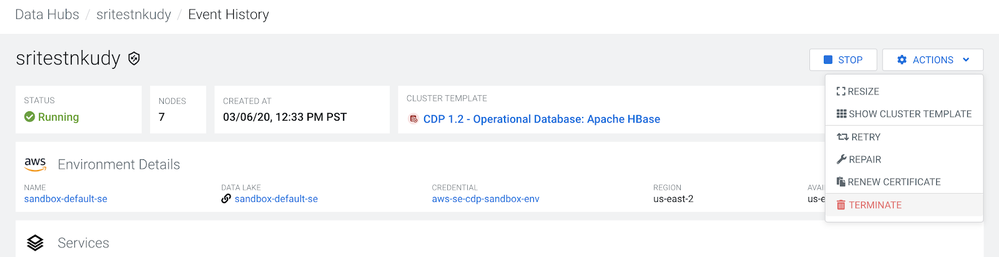

- To free up resources, terminate the Data Hub cluster from the Actions menu:

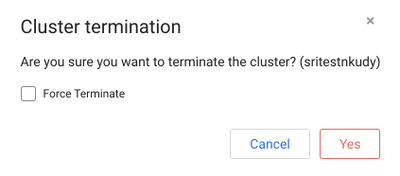

- Click Terminate. The following confirmation prompt appears:

- Click Yes to proceed.

Termination might take a few minutes; once it completes, the Data Hub cluster is no longer listed on the page. This concludes the sneak peek into the OpDB Data Hub experience in CDP.

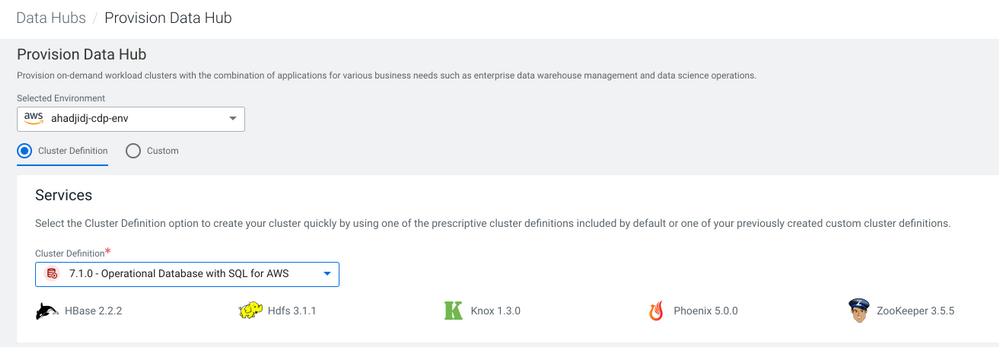

The following screenshot shows the services included, now with support for Apache Phoenix in CDP Data Hub 7.1, released on March 2, 2020.

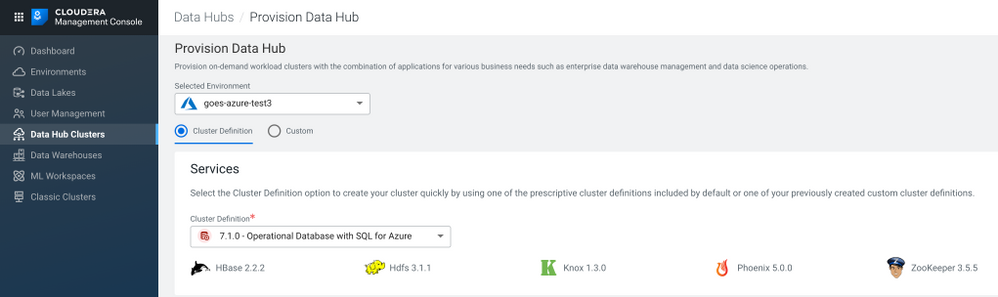

OpDB Data Hub On Azure:

This is my first community article, and I hope you enjoy playing with it as much as I did. Special thanks to Krishna Maheshwari, Josiah Goodson, and Brandon Freeman for their guidance as well! Looking forward to your feedback.

Have a great one!

Cheers,

Sri